Data Fabric and Address Verification Interface

IBM Data Science in Practice

NOVEMBER 28, 2022

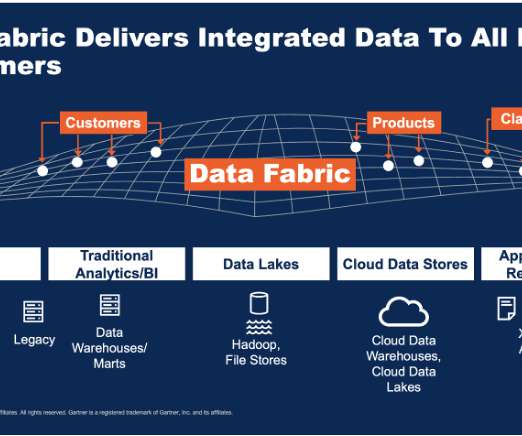

IBM defines data fabric as “an architecture that facilitates the end-to-end integration of various data pipelines and cloud environments through the use of intelligent and automated systems.” The concept was first introduced in 2016 but has gained more attention in recent years as data volumes have grown.
