Building your own Object Detector from scratch with Tensorflow

Mlearning.ai

MARCH 31, 2023

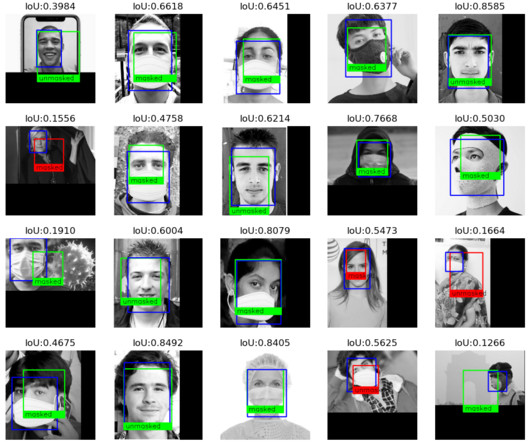

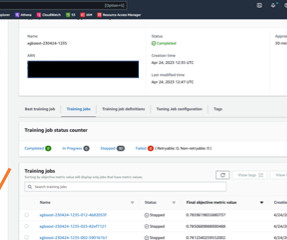

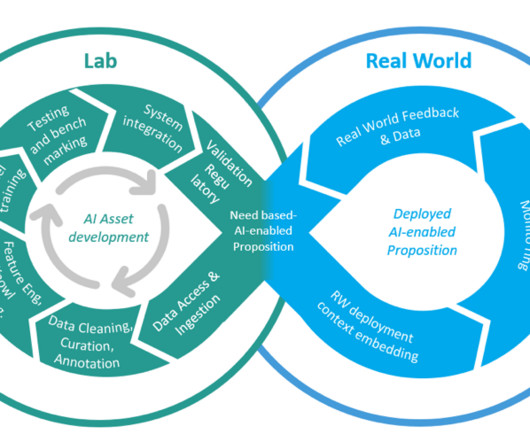

In this story, we talk about how to build a Deep Learning object detector from scratch using TensorFlow. Most machine learning projects fit the picture above. Once you define these elements, training becomes a cat-and-mouse game in which you “only” need to tune the training hyperparameters to achieve the desired performance.
