Unlocking Reliable Generations through Chain-of-Verification: A Leap in Prompt Engineering

Explore the Chain-of-Verification prompt engineering method, an important step towards reducing hallucinations in large language models, ensuring reliable and factual AI responses.

Image created by Author with Midjourney

Key Takeaways

- The Chain-of-Verification (CoVe) prompt engineering method is designed to mitigate hallucinations in LLMs, that is, the generation of plausible yet incorrect factual information

- Through a four-step process of structured self-verification, CoVe has an LLM draft, verify, and refine its own responses to improve their accuracy

- CoVe has demonstrated improved performance in various tasks such as list-based questions and long-form text generation, showcasing its potential in reducing hallucinations and bolstering the correctness of AI-generated text

We study the ability of language models to deliberate on the responses they give in order to correct their mistakes.

Introduction

The pursuit of accuracy and reliability in Artificial Intelligence (AI) has produced a range of prompt engineering techniques, which guide generative models toward precise and meaningful responses to a wide variety of queries. The recently introduced Chain-of-Verification (CoVe) method is a notable addition to this toolkit. It targets a well-known weakness of large language models (LLMs): the generation of plausible yet incorrect factual information, commonly called hallucinations. By having a model deliberate on its own responses through a self-verification process, CoVe offers a promising way to make generated text more reliable.

LLMs, trained on vast corpora of text, have shown remarkable proficiency across many tasks. However, they remain prone to hallucinating information, especially on lesser-known or rare topics. The Chain-of-Verification method addresses this with a structured approach to reducing hallucinations and improving the accuracy of generated responses.

Understanding Chain-of-Verification

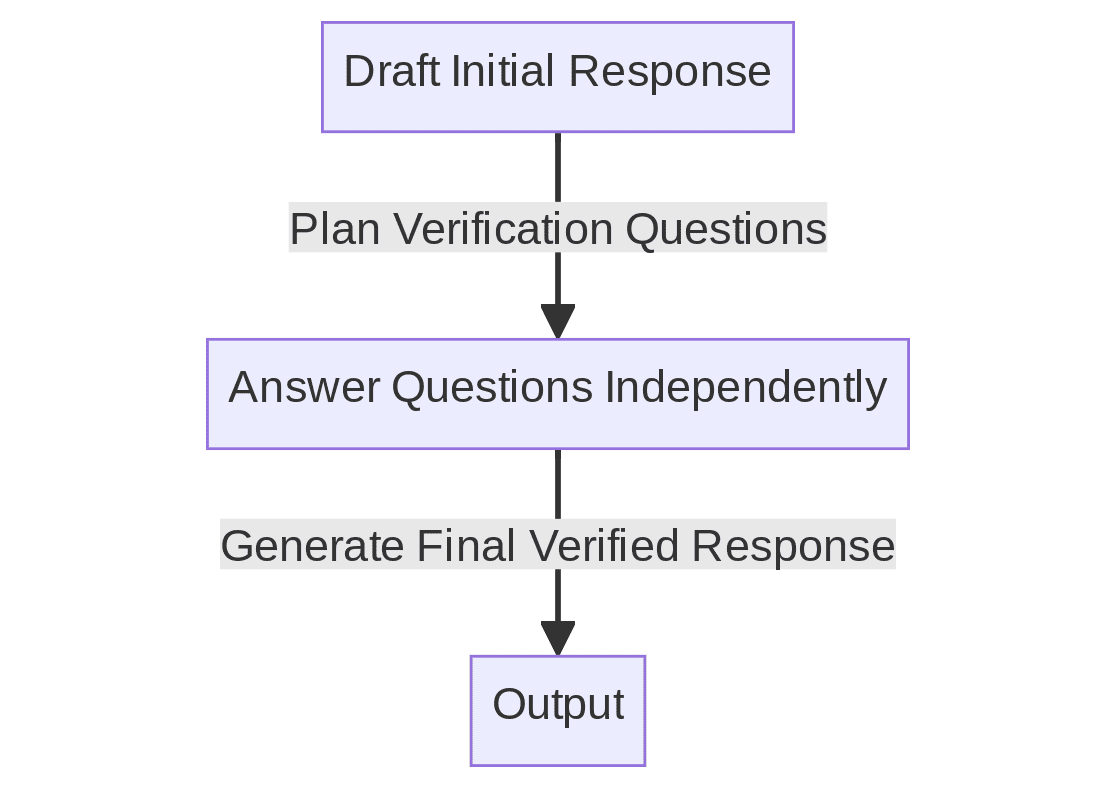

CoVe reduces hallucinations in LLMs through a four-step process:

- Drafting an initial response

- Planning verification questions to fact-check the draft

- Answering those questions independently, so that the answers are not biased by the draft

- Generating a final verified response based on the answers
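The four steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not a reference implementation: `generate` is a hypothetical stand-in for whatever function sends a prompt to your LLM and returns its text, and the prompt wording and one-question-per-line parsing are illustrative choices. The important design decision is in step 3, where each verification question goes into a fresh prompt that omits the draft, so the model cannot simply repeat the draft's mistakes.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical interface: any function mapping a prompt string to a completion string.
Generate = Callable[[str], str]


@dataclass
class CoVeResult:
    draft: str
    questions: List[str]
    answers: List[str]
    final: str


def chain_of_verification(question: str, generate: Generate) -> CoVeResult:
    # Step 1: draft an initial (baseline) response.
    draft = generate(f"Answer the question.\nQuestion: {question}\nAnswer:")

    # Step 2: plan verification questions that fact-check the draft.
    # Assumption: the model returns one question per line, possibly bulleted.
    plan = generate(
        "Write one verification question per line that fact-checks each claim "
        f"in the answer below.\nQuestion: {question}\nAnswer: {draft}\n"
        "Verification questions:"
    )
    questions = [line.strip("-* ").strip() for line in plan.splitlines() if line.strip()]

    # Step 3: answer each verification question in its own prompt that does NOT
    # include the draft, so the answers are not biased by it.
    answers = [generate(f"Question: {q}\nAnswer:") for q in questions]

    # Step 4: produce the final response from the draft plus the verification results.
    checks = "\n".join(f"Q: {q}\nA: {a}" for q, a in zip(questions, answers))
    final = generate(
        f"Original question: {question}\nDraft answer: {draft}\n"
        f"Verification results:\n{checks}\n"
        "Rewrite the draft, removing or correcting anything the verification "
        "results contradict.\nFinal answer:"
    )
    return CoVeResult(draft, questions, answers, final)
```

Because the model call is passed in as a plain function, the pipeline can be exercised with a stub that returns canned strings before it is wired to a real model.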

This systematic approach targets hallucinations directly by building self-verification into the generation process. Its effectiveness has been demonstrated across several tasks, including list-based questions, closed-book QA, and long-form text generation, where it reduced hallucinations and improved performance.

Implementing Chain-of-Verification

Adopting CoVe means integrating its four-step process into an LLM workflow. For instance, when asked to generate a list of historical events, an LLM using CoVe would first draft a response, then plan verification questions to fact-check each event, answer those questions independently, and finally generate a verified list based on the answers.
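For list-style questions such as the historical-events case, the planning step can be as simple as filling in one question template per draft item. The sketch below makes two simplifying assumptions: the verification questions are yes/no questions, and a deterministic filter (keep items whose answer begins with "yes") stands in for the final LLM rewrite. `answer` is a hypothetical callable that answers each question in isolation, for example via a fresh LLM call. Open-ended verification questions would need an LLM-driven final step instead.

```python
from typing import Callable, List

# Hypothetical interface: answers a single verification question in isolation
# (e.g. a fresh LLM call with no draft in context) and returns free-form text.
Answerer = Callable[[str], str]


def verify_list(items: List[str], question_template: str, answer: Answerer) -> List[str]:
    """Keep only draft items whose verification answer starts with 'yes'.

    Simplifying assumption: yes/no verification questions, with this filter
    standing in for the final LLM rewrite step.
    """
    verified = []
    for item in items:
        # Plan: one templated verification question per draft item.
        question = question_template.format(item=item)
        # Execute: answer it independently of the other items and of the draft.
        reply = answer(question).strip().lower()
        # Final step (simplified): keep the item only if the check confirms it.
        if reply.startswith("yes"):
            verified.append(item)
    return verified
```

For Example 2 below, the template would be `"Is {item} in Africa?"`; since every check comes back positive, the verified list matches the draft.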

This verification process is intended to raise the accuracy and reliability of the generated responses. Because each claim in the draft must survive an explicit check before it reaches the final answer, the approach adds a degree of accountability to the generation process and is a meaningful step towards more reliable AI-generated text.

Example 1

- Question: List notable inventions of the 20th century.

- Initial Draft: Internet, Quantum Mechanics, DNA Structure Discovery

- Verification Questions: Was the Internet invented in the 20th century? Is Quantum Mechanics an invention, or a scientific theory? Was the structure of DNA discovered in the 20th century?

- Final Verified Response: Internet, Penicillin Discovery, DNA Structure Discovery

Example 2

- Question: Provide a list of countries in Africa.

- Initial Draft: Nigeria, Ethiopia, Egypt, South Africa, Sudan

- Verification Questions: Is Nigeria in Africa? Is Ethiopia in Africa? Is Egypt in Africa? Is South Africa in Africa? Is Sudan in Africa?

- Final Verified Response: Nigeria, Ethiopia, Egypt, South Africa, Sudan


Figure 1: The Chain-of-Verification simplified process (Image by Author)

In practice, the method requires in-context examples to be supplied alongside the question posed to the LLM. Alternatively, if desired, an LLM could be fine-tuned on CoVe examples so that it approaches every question in this manner.
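As a rough illustration of the in-context route, the helper below formats worked CoVe examples into a few-shot prompt and leaves the new question open for the model to continue. The `CoVeDemo` structure and the field labels (borrowed from the examples in this article) are assumptions for illustration, not a prescribed format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CoVeDemo:
    """One worked CoVe example used as an in-context demonstration (illustrative structure)."""
    question: str
    draft: str
    verification_questions: List[str]
    verification_answers: List[str]
    final: str


def format_demo(demo: CoVeDemo) -> str:
    # Pair each verification question with its answer on one bulleted line.
    checks = "\n".join(
        f"- {q} {a}"
        for q, a in zip(demo.verification_questions, demo.verification_answers)
    )
    return (
        f"Question: {demo.question}\n"
        f"Initial Draft: {demo.draft}\n"
        f"Verification:\n{checks}\n"
        f"Final Verified Response: {demo.final}"
    )


def build_cove_prompt(demos: List[CoVeDemo], new_question: str) -> str:
    """Concatenate the demonstrations, then end on an open draft for the new question."""
    shots = "\n\n".join(format_demo(d) for d in demos)
    return f"{shots}\n\nQuestion: {new_question}\nInitial Draft:"
```

A demonstration built from Example 2 would pair each "Is X in Africa?" question with the answer "Yes." and repeat the draft unchanged as the final response.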

Conclusion

The Chain-of-Verification method reflects the progress being made in prompt engineering towards reliable and accurate AI-generated text. By addressing hallucinations head-on, CoVe offers a practical way to improve the quality of information generated by LLMs. Its structured, self-verifying approach is a significant step towards a more reliable and factual AI generation process.

CoVe should encourage practitioners and researchers alike to keep exploring and refining prompt engineering techniques. Methods like this will be instrumental in unlocking the full potential of large language models, and in making reliable AI-generated text a reality rather than an aspiration.

Matthew Mayo (@mattmayo13) holds a Master's degree in computer science and a graduate diploma in data mining. As Managing Editor, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.