Abstract

The current \(\phi\)-OTDR vibration localization and recognition methods based on predominantly relies on assumptions such as bare fiber sensing, simulated experimental environments, or single known laying scenario. Most of them either focus on the localization or recognition of events, while even some studies that consider both ignore the improvement of performance to meet real-time requirements, which limits their practical application in multiple laying scenarios. To solve the above problems, we propose a method for vibration area localization and event recognition of the underground power optical cable based on PGSD-YOLO and 1DCNN-BiGRU-AFM. First, with real multiple laying scenarios of buried underground and manholes, using an underground power optical cable as distributed optical fiber vibration sensing, a \(\phi\)-OTDR system is built to collect signals of vibration events. And then, high-pass and low-pass filters are combined for denoising to improve the signal quality. Secondly, PGSD-YOLO is designed to localize the vibration area and obtain its laying scenario. PGSD-YOLO combines the YOLOv11 with the multi-scale attention of PMSAM to enhance the feature extraction ability. Through the dynamic sampling strategy of DySample, the information loss of signals is reduced, and GSConv and VoVGSCSP are used to optimize feature fusion. Finally, based on the obtained scenario labels and the time-domain signals, 1DCNN-BiGRU-AFM is designed to recognize vibration events. 1DCNN-BiGRU-AFM combines the feature extraction ability of 1DCNN and the timing analysis ability of BiGRU, and optimizes feature fusion through the AFM mechanism. From experimental results, both PGSD-YOLO and 1DCNN-BiGRU-AFM meet the real-time and performance requirements in multiple scenarios.

Similar content being viewed by others

Introduction

Underground power optical cables have become key infrastructure for guaranteeing urban electric supply and the efficient operation of the power grid. The cables are laid in various scenarios, including in buried underground, in manholes, and in cable trenches. Among these, the cables in buried and manhole laying scenarios frequently encounter the risk of human-induced damage. For instance, in the buried laying scenario, construction activities can lead to cables being subjected to pressure, displacement, or breakage. In the manholes laying scenario, illegal excavations can affect the transmission quality of the cables. Effectively managing and maintaining underground power optical cables to ensure uninterrupted communication has emerged as a critical challenge.

The \(\phi\)-OTDR optical fiber sensing technology has the advantages of high sensitivity, long-distance detection, high reliability, and low cost. The technology uses optical fibers as the medium to sense various vibrations occurring along the optical fiber and collect vibration signals in real time. The vibration signals can be collected for analyzing human-induced damage. The technology is widely used in safety detection fields such as pipeline safety1, perimeter security2, railway transportation3, submarine cables4, etc. In power optical cables, a part of unused optical fibers is reserved for substitution when the main optical fibers fail. Therefore, when abnormal vibration events have not yet threatened the cables, the unused internal optical fibers can be used as the sensing medium for collecting vibration signals. Therefore, the \(\phi\)-OTDR optical fiber sensing technology is adopted to collect the vibration signals in the surrounding environment of underground power optical cables, and the relevant research methods are used to conduct vibration area localization and event recognition of human-induced events that may cause damage.

Currently, single-stage methods based on YOLO models5,6,7,8 converts \(\phi\)-OTDR signals into space-time images for vibration area localization, while recognizing vibration events based on the image features of area signals. This method is suitable for capturing the overall distribution characteristics of vibration signals, facilitating vibration area localization. However, the image transformation phase results in the loss of some Time-domain signal details, which can hinder vibration event recognition. At present, single-stage methods focus only on vibration area localization and event recognition within single known laying scenario. However, underground power optical cable laying scenarios are complex, involving diverse vibration event types and varying levels of interference from background noise or signals in non-target areas. Additionally, the deployment complexity of proposed methods must be adequately considered.

In order to meet the practical demands, a method for vibration area localization and event recognition in multiple laying scenarios of underground power optical cables is proposed. In the vibration area localization stage, PGSD-YOLO is proposed to gain the laying scenario and localize the vibration areas. In the vibration event recognition stage, based on the laying scenario and the Time-domain signals of the corresponding spatial point in these areas, 1DCNN-BiGRU-AFM is proposed for recognition. The method comprehensively considers buried and manhole scenarios where human-induced damages to the cables occur frequently. It can be directly applied to actual underground power optical cables to prevent potential damage and better protect the safety of the cables, which has significant practical significance and application prospects.

The main contributions of this paper include:

-

1.

We deploy the \(\phi\)-OTDR system at a substation and select an underground power optical cable in buried underground and manholes, where human threats occur frequently. Using the cable as a vibration sensing medium, we design experiments to collect real-world vibration threat events. The raw signals are preprocessed to generate self-constructed datasets for vibration area localization and event recognition;

-

2.

The PGSD-YOLO is proposed to achieve vibration area localization in both buried and manhole laying scenarios. Based on YOLOv11n, PGSD-YOLO incorporates the PMSAM module to enhance the efficiency of vibration feature extraction, the DySample to reduce feature information loss, and combines GSConv with VoVGSCSP to optimize the feature fusion process. By comparing PGSD-YOLO with lightweight YOLO models, including those previously applied to vibration area localization, it is demonstrated that PGSD-YOLO improves localization performance and real-time capability while significantly reducing spatial and computational complexity;

-

3.

The 1DCNN-BiGRU-AFM is proposed for vibration event recognition in both buried and manhole laying scenarios. The model combines the efficient feature extraction capability of 1DCNN with the sequential variation processing ability of BiGRU, capturing both time-domain and frequency-domain features of vibration signals. Additionally, it introduces an attention fusion mechanism (AFM) to further optimize vibration feature selection. 1DCNN-BiGRU-AFM demonstrates superior recognition performance, real-time capability, and reduced model complexity compared to CNN and Transformer models in a localization-then-recognition method. Furthermore, it outperforms YOLO models, which perform simultaneous localization and recognition;

The structure of the remaining parts of this paper is as follows: section “Related work” provides related works. Section “Methods” describes the proposed models of vibration area localization and event recognition. Section “Experimental design” is the experimental design in real scenarios. Section “Experimental results” discusses our experimental results. Finally, section “Conclusion and future work” presents the conclusion and future work.

Related work

Vibration area localization of \(\phi\)-OTDR signal

The vibration area localization of \(\phi\)-OTDR mainly consists of two methods: based on signal processing techniques and based on object detection models. In the actual environment, the interference noise is relatively large, which will submerge the useful signals, which is unfavorable for the vibration areas. Based on the above problems, a series of localization methods using signal processing techniques have been proposed in recent years. Wu et al.9 adopted wavelet denoising based on the simulated annealing algorithm with adaptive annealing threshold, and then performed axial localization by calculating the spatial gradient of the grayscale image using the two-dimensional edge detection method. Huang et al.10 proposed an axial localization method using overlapping phase cross-correlation. By exploiting the linear relationship between vibration signals and time, the cross-correlation of adjacent phase matrices is calculated, and the maximum value identifies the vibration location. Autocorrelation is also introduced to enhance localization performance. Both experiments used bare optical fibers for data acquisition. Reference9 simulated vibration events indoors using instruments, while reference10 conducted vibration events on a fence.

Localization methods based on signal processing techniques perform well for high-frequency, large-amplitude vibration areas but encounter difficulties with low-frequency, small-amplitude events, as well as vibrations buried deeper or located farther away. To overcome these limitations, localization methods based on object detection models have been proposed. These models offer excellent localization performance and real-time capability, enabling simultaneous vibration area localization and event recognition. Xu et al.5 proposed a YOLOv3 multi-class vibration detection model for intrusion event localization and real-time detection. Wang et al.8 introduced a CBAM-YOLOv8 model for perimeter security event recognition and vibration area localization. References5 and8 were conducted under a single deployment scenario, specifically using a fence in perimeter security, and both employed bare fiber as the sensing medium. Compared with optical cables with protective media in reality, bare optical fibers will have obvious vibrations in the vibration area, and the positioning difficulty is lower, but it cannot be applied to real scenarios. So it is necessary to solve the positioning problem along the same cable in multiple laying scenarios.

To solve the problem of vibration area localization of underground power optical cables in multiple laying scenarios, we propose PGSD-YOLO based on YOLOv11n, which locates the vibration areas while gaining the laying scenario of the vibration areas.

Vibration event recognition of \(\phi\)-OTDR signal

\(\phi\)-OTDR vibration event recognition methods can be broadly categorized into machine learning and deep learning. Machine learning methods rely on manually extracted features to differentiate and recognize \(\phi\)-OTDR vibration signals. Jia et al.11 applied the KNN model to extract signal features in both time and frequency-domains and used an SVM classifier to recognize events such as watering, knocking, climbing, pressing, and false. Cao et al.12 extracted 32 features from \(\phi\)-OTDR signals and employed the SVM model to recognize background noise, digging, knocking, watering, shaking, and walking events. Reference12 adopted bare optical fibers and simulated vibration events indoors-for example, by partially burying the optical fiber in a sandbox to mimic buried conditions. Wang et al.13 utilized a random forest classifier to learn the features of time-domain interference signals, effectively recognizing watering, knocking, pressing, and no-disturbance events. Reference13 also used bare optical fibers and simulated vibration events, for instance, by using a tire to simulate pressing.

The vibration signal characteristics produced by activities such as digging and pickaxe are similar. When using machine learning for recognition, it is difficult to obtain distinguishable features through manual extraction, and the performance of recognition is difficult to be guaranteed. In addition, the signals of the optical fiber with protective medium in the real environment is different from that of the bare optical fiber, and the simulated vibration event without environmental interference is not real enough. To solve the problems of machine learning methods, deep learning models extract features in an adaptive manner, which can better distinguish the vibration signals of similar events and improve the recognition performance. Deep learning models applied to recognition tasks mainly include YOLO, Transformer, and CNN.

YOLO models offer fast recognition and real-time capabilities. Xu et al.5 proposed a real-time multi-class detection method based on YOLO, which effectively recognizes signals from calm state, rigid collisions against the ground, hitting the protective net, shaking the protective net, cutting the protective net in real time.

Transformer models excel at capturing long-range dependencies, enabling better analysis of signal variation characteristics. Shi et al.14 proposed a method combining Transformer with \(\phi\)-OTDR to recognize events such as background, cycling, flushing, patting, and walking in buried scenarios. Zhu et al.15 introduced a method integrating Swin Transformer with two-dimensional vibration signal images to recognize environmental signals of farmland, village, and mountain, as well as strong interference signals such as expressway and railway, and intrusion signals like excavator.

CNN models excel at extracting local features, and their parameter-sharing mechanism reduces model complexity. Shi et al.16 applied CNN to \(\phi\)-OTDR spatiotemporal data matrices to recognize events such as background, walking, jumping, beating with a shovel, and digging with a shovel. Wu et al.17 collected vibration signals of background noise, traffic interferences, excavator operation, road breaker operation, and manual digging in urban areas using DAS. They proposed a 1DCNN-BiLSTM recognition method, where 1DCNNs were used to extract space-time features of signals, and BiLSTM captured spatial relationships between signal nodes. Tian et al.18 integrated attention mechanisms with CNN and proposed ATCN-BiLSTM to recognize events including background without threats, climbing the fence, and raining.

Current deep learning methods primarily focus on recognition performance, but real-time capabilities are also critical in practical applications. YOLO models excel in real-time performance; however, their complexity increases as they simultaneously handle recognition and localization, which can result in decreased localization and recognition performance. Transformer models improve recognition performance through self-attention mechanisms but require substantial computational resources. CNN models demand fewer computational resources but lack the ability to effectively process global information. At present, the research on vibration event identification mainly focuses on fewer types and a single laying scenario, without considering the issue of vibration event identification in multiple laying scenarios.

To solve the above problems, we propose a 1DCNN-BiGRU-AFM vibration event recognition method that considers multiple laying scenarios, real-time capabilities, recognition performance, and model scale. According to the laying scenario labels of the vibration events, the pre-trained weights of the corresponding scenarios are used to recognize the event types.

Methods

For the vibration events in multiple laying scenarios of underground power optical cables, by improving YOLOv11n and CNN, a vibration area localization and event recognition method based on PGSD-YOLO and 1DCNN-BiGRU-AFM is constructed. As shown in Fig. 1: the vibration signal preprocessing phase (green lines), the vibration area localization phase (red lines), and the vibration event recognition phase (orange lines).

In the vibration signal preprocessing phase, the \(\phi\)-OTDR signals are denoised through high-pass and low-pass filters to obtain the space-time signals. Subsequently, these space-time signals are transformed into space-time images.

In the vibration area localization phase, the vibration areas in the images are located through PGSD-YOLO, generating the located space-time images and the corresponding laying scenario labels. Then, based on the located spatial points, the time-domain signals are extracted from the space-time signals.

In the vibration event recognition phase, according to the laying scenario labels and the extracted time-domain signals, the pre-trained 1DCNN-BiGRU-AFM of the corresponding scenario is selected for vibration event recognition to obtain vibration event labels.

Vibration signal preprocessing algorithm

In the vibration signal preprocessing of underground power optical cables in multiple laying scenarios, the signals collected by \(\phi\)-OTDR system often contain high-frequency internal machine noise and low-frequency background noise interference. These noises can mask the effective features of the vibration signals and affect the localization and recognition performance of the subsequent models. Therefore, in this paper, an algorithm combining high-pass and low-pass filters19,20 is adopted to preprocess \(\phi\)-OTDR signals, remove irrelevant noises from the frequency-domain, and improve the signal quality. Due to the short processing time of high-pass filter and low-pass filter, the algorithm can efficiently preprocess the vibration signal.

High-pass filter

High-pass filters21 allow the high-frequency components of a signal to pass while suppressing the low-frequency components. In \(\phi\)-OTDR signals, low-frequency noise often originates from background noise during system operation, which may obscure the detailed features of vibration events. By applying high-pass filters, the low-frequency noise can be removed, highlighting the critical changes in the vibration signals. The transfer function of the high-pass filter is defined as:

where \({H}_{HPE}\left( f\right)\) is the transfer function of the high-pass filter, \({f}_{c}\) is the cutoff frequency, \({f}_{p}\) is the passband frequency, \({A}_{s}\) is the stopband attenuation.

The corresponding impulse response of the high-pass filter is obtained by the inverse Fourier transform of its transfer function:

where \({h}_{HPF}\left( n\right)\) is the impulse response of the high-pass filter, \(\mathcal {F}^{-1}\) is the inverse Fourier transform operator, \(n\) is the current time step.

The output signal of the high-pass filter can be expressed as:

where \(x\left[ k\right]\) is the output signals, \({h}_{HPF}\left[ n-k\right]\) is the impulse response of the high-pass filter, \(N\) is the filter length, \({y}_{HPF}\left[ n\right]\) is the corresponding output signal at each time step.

Low-pass filter

Low-pass filters22 allow low-frequency components of signals to pass while suppressing high-frequency noise. For \(\phi\)-OTDR signals, high-frequency noise is typically caused by electromagnetic interference within machinery or random fluctuations of minor vibration signals. By applying low-pass filters, these high-frequency components are removed, preserving the main trend information of the vibration signals. This enables a more effective reflection of the spatial distribution of the vibrations. The transfer function of the low-pass filter is defined as:

where \({H}_{LPF}\left( f\right)\) is the transfer function of the low-pass filter, \({f}_{c}\) is the cutoff frequency, \({f}_{p}\) is the passband frequency, \({A}_{s}\) is the stopband attenuation.

The corresponding impulse response of the low-pass filter is obtained by the inverse Fourier transform of its transfer function:

where \({h}_{LPF}\left( n\right)\) is the impulse response of the low-pass filter, \(\mathcal {F}^{-1}\) is the inverse Fourier transform operator, \(n\) is the current time step.

The output signal of the low-pass filter can be expressed as:

where \(x\left[ k\right]\) is the is the output signals, \({h}_{LPF}\left[ n-k\right]\) is the impulse response of the low-pass filter, \(N\) is the filter length, \({y}_{LPF}\left[ n\right]\) is the corresponding output signal at each time step.

Combination of high-pass filter and low-pass filter

In the vibration signal preprocessing of underground power optical cable, low-pass filters are used to retain the main trend of the vibration signals, such as vibration events with large amplitudes such as foreign object falling and digging. high-pass filters are used to extract the detailed changes of the vibration signals, such as the event characteristics caused by high-frequency vibrations such as striking or hammer drill. By combining high-pass filter and low-pass filter, the performance of the proposed algorithms the tasks of vibration area localization and event recognition can be optimized.

Firstly, the high-pass filtering process is applied to remove low-frequency noise, and it is defined as:

where \(x\left[ m\right]\) is the original \(\phi\)-OTDR signals from the \(\phi\)-OTDR system before filtering, \({h}_{HPF}\left[ k-m\right]\) is the impulse response of the high-pass filter, \({y}_{HPF}\left[ k\right]\) is the corresponding output signal after high-pass filtering.

Secondly, the low-pass filtering process is applied to smooth out high-frequency components. The final combined filtering equation incorporating both high-pass and low-pass filtering is:

where \({h}_{LPF}\left[ n-k\right]\) is the impulse response of the low-pass filter, \({y}_{filtered}\left[ k\right]\) is the final output signal after both high-pass and low-pass filtering.

The filter parameters are determined based on the characteristics of the dataset, optimizing the filtering process for the given vibration signals. Furthermore, in section “Data preprocessing and dataset construction”, we provide the specific filtering parameters along with experimental visualizations, demonstrating the effectiveness of the filtering approach through comparative analysis.

Vibration area localization model based on PGSD-YOLO

The vibration area localization model for underground power optical cables in multiple laying scenarios requires not only locating vibration areas but also generating laying scenario labels. In the manhole laying scenario, vibration signals exhibit significant diffusion and concentrated intensity, whereas in the buried laying scenario, the signals are influenced by the casing and soil medium, resulting in limited diffusion and weaker intensity. YOLO models23,24 demonstrate excellent real time capabilities and detection performance in object detection tasks, making them well-suited for vibration area localization and generating laying scenario labels. Compared to previous versions, YOLOv1125 achieves significant improvements. However, its high computational complexity and parameter count make it unsuitable for environments with limited computational resources. Furthermore, the complexity of laying scenarios increases the difficulty of vibration area localization, demanding enhanced adaptability and feature extraction capabilities from the model, highlighting the necessity of improving YOLOv11.

To solve the above problems, we propose the PGSD-YOLO vibration area localization model. It is designed to account for the differences in signal intensity and distribution between buried and manhole scenarios in underground power optical cables. The model optimizes the YOLOv11 backbone and neck networks to meet the requirements of real time capabilities, localization performance, and lightweight deployment. By introducing the PMSAM module as a replacement for the C2PSA module and integrating the MSAM26 attention mechanism, the model captures multi-scale features and enhances deep feature extraction efficiency. Additionally, the GSConv and VoVGSCSP27 modules are employed to optimize the quality of feature fusion, while the DySample28 upsampling layer reduces feature map information loss. The overall structure of PGSD-YOLO is shown in Fig. 2.

Feature extraction module PMSAM

The C2PSA module in the backbone network of YOLOv-11n extracts deep features from vibration space-time images through multiple PSAs and skip connections and utilizes these features for fusion in the neck network. The PSA structure is shown in Fig. 3a. However, in processing vibration signals of underground power optical cables, such as foreign object falling and lead ball, similar space-time image features are generated across different laying scenarios. Its similarity increases the difficulty of generating laying scenario labels, particularly when vibration events exhibit similar frequency and time-domain variations. To solve this issue, we propose PMSAM as a replacement for C2PSA to improve deep feature extraction. PMSAM retains the framework structure of PSA but replaces its attention mechanism with MSAM. The structure of PMSAM is illustrated in Fig. 3b. This design reduces the model’s parameters and computational complexity while effectively capturing critical information in vibration space-time images. Consequently, it significantly enhances the model’s adaptability to complex vibration signals, providing high-quality input for feature fusion in the subsequent neck network.

The channel attention calculation part applies pooling operations to the input feature maps, converting them into pixels, which leads to a significant loss of spatial information. Although CBAM alleviates this issue to some extent, it primarily optimizes features through channel and spatial weighting, making it difficult to effectively capture relationships between targets at different scales. To solve this issue, we improve upon CBAM29 and propose the MSAM attention mechanism, replacing CBAM’s channel attention with MSCA. This mechanism uses multi-scale convolution to enhance the model’s ability to perceive complex vibration signals. As a result, it significantly improves the localization performance of the same vibration events from different laying scenarios. The MSCA structure is shown in Fig. 4.

The MSCA mainly consists of three components: \(5\times 5\) convolution, multi-branch convolution, and \(1\times 1\) convolution. The \(5\times 5\) convolution extracts local features, the multi-branch convolution captures multi-scale relationships across different channels, and the 1\(\times\)1 convolution applies weighting to the original feature map, enhancing its representational capability. The computation process is as follows:

where \(X\) is the input feature map, \(Conv _{1\times i}\) and \(Conv _{i\times 1}\) are \(2D\) convolutions with different kernel sizes. \(M1\), \(M2\), and \(M3\) are three branches, each processing the input with convolutions of varying kernel sizes. \(Y\) is the feature map obtained after applying weight processing through a \(1\times 1\) convolution to the input.

Upsampling layer DySample

The neck network of YOLOv11n contains two upsampling layers, which are used to expand the small-sized feature maps from the deep layers of the backbone network to match the size of the feature maps from shallow to deep layers. However, the nearest neighbor interpolation algorithm expands the feature map size by copying the nearest pixel values, without considering the correlation between pixels, which leads to block effects or jaggedness in the feature map. This characteristic may cause the loss of key details in the signals and affect the model’s vibration area localization performance. In order to reduce the loss of feature map information, we replace the upsampling layer in the neck network of YOLOv11n with DySample30.

DySample replaces the algorithm based on the kernel31,32, and it adopts point sampling and introduces offsets. Upsampling is constructed from the perspective of point sampling, avoiding time-consuming dynamic convolution and additional sub-networks to generate dynamic kernels, significantly reducing the amount of calculation and delay. The offsets are used to adjust the positions of the sampling points, which helps to better reflect the relationship between pixels in the input feature map and prevent the loss of the restored feature map information. The structure of DySample is shown in Fig. 5.

In DySample, \(X\) is the input feature map with dimensions \(C\times W\times H\), \(s\) is the upsampling scale factor, \(g\) is the number of groups into which the channels are divided, and \(\alpha\) is the static range factor. To process image features across different channels and reduce computational costs, the input feature map is divided into \(g\) groups, and the features within each group are independently processed. Within each group, the feature map first passes through a linear layer with an input channel size of C and an output channel size of \(2g{s}^{2}\), scaled by the static range factor \(\alpha\), generating a feature map of size \(2{s}^{2}\times W\times H\). This feature map then passes through the Pixel Shuffle module to generate an offset map O of size \(2\times sW\times sH\). The original sampling grid G is adjusted by adding this offset O, resulting in sampling points that better capture the local characteristics of the feature map. The Grid Sample module processes each group individually, and the results from all groups are merged to form a complete output feature map. This ensures continuity and smoothness in the feature map while preserving more details and critical information related to vibration events. The specific computation steps of the \(i^{\text {th}}\) group are summarized as follows:

where \({X}_{i}\) is the input feature map, Pixel is the pixel transformation, Linear is the linear transformation, \({O}_{i}\) is the offset generated, \({S}_{i}\) is the sampling point set, \({X}_{i}\) is the merged sampling point set, Concat is the concatenation operation along the channel dimension, \({G}_{i}\) is the original sampling grid, Grid is the Grid Sample function, and \({X}^{\prime }\) is the complete output feature map after upsampling.

Feature fusion modules GSConv and VoVGSCSP

The traditional convolutional Conv and C3k2 modules in the neck network of YOLOv11n process all channel information through global aggregation. Although they can extract rich feature information, their high computational complexity and parameter count increase the computational burden of the model in multiple laying scenarios. Vibration signals in the manhole laying scenario typically demonstrate strong local variations, while signals in the buried laying scenario are more influenced by environmental factors such as soil medium and cable casing, resulting in smoother signals. Therefore, the model not only needs to extract rich multi-channel features, but also effectively handle the space-time differences of signals. As a result, we replace Conv in the neck network of YOLOv11n with GSConv33 and replace C3k2 with VoVGSCSP34.

Compared to traditional convolution, depthwise separable convolution excels in reducing computational complexity. However, its drawback lies in partially ignoring inter-channel information relationships, leading to feature information loss. To combine the advantages of both depthwise separable convolution and traditional convolution, GSConv fuses global feature information obtained from traditional convolution with channel-specific feature information from depthwise separable convolution. It effectively preserves multi-channel information while reducing computational complexity.

GSConv mainly includes Conv, DWConv, Concat and Shuffle. Conv is composed of traditional convolution, batch normalization and activation function, and DWConv is composed of depthwise separable convolution, batch normalization and activation function. Suppose a feature map with C1 channels is input. It will be firstly processed by traditional convolution to generate a feature map with C2/2 channels, and then processed by depthwise separable convolution to generate another feature map with C2/2 channels. With the number of channels unchanged, the results of depth convolution and depthwise separable convolution are concatenated. Finally, the feature information from traditional convolution and depthwise separable convolution is integrated through the channel fusion mechanism of Shuffle to generate an output feature map with C2 channels. The structure of GSConv is shown in Fig. 6.

In order to fully utilize the function of GSConv in the neck network of YOLOv11n, without changing the input and output size of the original C3k2, C3k2 is replaced with VoVGSCSP. This module adopts a single-level aggregation strategy and designs an efficient cross-level network module. By integrating traditional convolution and GSConv using the residual structure, while maintaining the performance, the inference time complexity and the number of model parameters are reduced. The structure of VoVGSCSP is shown in Fig. 7.

Vibration event recognition model based on 1DCNN-BiGRU-AFM

The time-domain signals extracted from the vibration area localization model in section “Vibration area localization model based on PGSD-YOLO”. However, due to the diversity and complexity of vibration events, the time-domain signals still exhibit nonlinearity, long-short term dependence, and frequency domain feature overlap problems. Therefore, we propose a vibration event recognition model based on 1DCNN-BiGRU-AFM. It first selects the pre-trained weight of 1DCNN-BiGRU-AFM corresponding to the laying scenario label obtained by the localization model, and then inputs the extracted time-domain signal into 1DCNN-BiGRU-AFM for recognition to obtain the vibration event label. The structure of 1DCNN-BiGRU-AFM is shown in Fig. 8.

The model first converts the time-domain signal into the frequency-domain signal using FFT, then extracts local time-domain and frequency-domain features of the signal using 1DCNN35 and BiGRU36, respectively, to capture the long-term and short-term dependencies of the time-domain and frequency-domain features and adapt to the characteristics of multiple scenarios. Then, the attention fusion module (AFM) dynamically weights the contributions of the time-domain and frequency-domain features to enhance the focus on key vibration features and improve the ability to distinguish the signal features of multiple scenarios. Finally, the classification module is used to recognize the vibration events. In the following sections, the principles of frequency domain conversion, 1DCNN, BiGRU, and AFM are explained in detail.

Frequency-domain conversion of time-domain signals

In order to obtain more event feature information from the extracted time-domain signal, we add the frequency-domain signal as an input to the model in Fig. 8. Fast Fourier Transform (FFT)37 can obtain the frequency-domain information of time-domain signals at a lower computational cost, and has higher processing efficiency. Considering that underground power optical cable needs to strike a balance between real-time capabilities and recognition performance, we select FFT as the main algorithm for frequency-domain conversion to meet the requirements of real-time signal processing.

FFT is an efficient implementation of Discrete Fourier Transform. For a signal sequence \(X= \left\{ {x}_{0},...,{x}_{N-1}\right\}\) with a sampling point number of \(N\), DFT is defined as:

where \(x\left( n\right)\) is the time-domain signal, \(X\left( k\right)\) is the corresponding frequency-domain signal, \(N\) is the length of the signal, \(j\) is the imaginary unit, and \({e}^{-\frac{j2\pi }{N}kn}\) is the rotation factor.

Local feature extraction module 1DCNN

1DCNN38 has fewer parameters and lower computing resource requirements. At the same time, it can preserve the sequential relationship between local features. Compared with 2DCNN, it is usually more efficient in processing one-dimensional data. Therefore, in this paper, 1DCNN is selected to extract the local features of vibration events. 1DCNN includes 1DCNNblock1, 1DCNNblock2, and 1DCNNblock3. Each convolution block contains a convolution layer, a max pooling layer, a Relu layer, and a normalization layer. The convolution layer uses convolution operations for feature extraction to capture local information. The max pooling layer reduces the spatial size of the feature matrix, reduces the number of parameters, improves computing efficiency. The Relu layer is used to increase the nonlinearity of the network and alleviate the problem of vanishing gradients. To alleviate the shift phenomenon of internal covariates and improve the feature extraction ability, a batch normalization (BN) layer is added after the output of each convolution block. The structure of 1DCNN is shown in Fig. 9.

Sequence variation extraction module BiGRU

1DCNN can only extract the local features of each time step of the time-domain and frequency-domain signals, but cannot capture the dynamic relationship between the local features. For instance, in the manhole laying scenario, the vibration patterns of hook pulling and striking are similar. BiGRU39 helps the model identify the differences between electric drill and striking through reverse information flow. Electric drill presents as multiple sharp pulses, while striking is a longer fluctuation. BiGRU can capture these subtle differences between different time steps and can better combine the time-domain and frequency-domain signals of the two vibration events for recognition performance. The simplicity and efficiency of BiGRU enable it to process signal data in real time under conditions of limited resources. Therefore, BiGRU is selected to analyze the change relationship of the local feature sequence.

GRU is composed of multiple GRU units, and the structure is shown in Fig. 10. Each GRU unit internally contains the reset gate and the update gate. The role of the reset gate is to selectively forget irrelevant information from the previous time step and reduce the interference of irrelevant information on key features. The update gate is used to reflect the ability of the current moment to retain the state information of the previous time step, thereby further strengthening the association between temporal features. The calculation process of each GRU unit is as follows:

where \({x}_{t}\) and \({x}_{t-1}\) are the local features at time \(t\) and \(t-1\), respectively, \({r}_{t}\) is the reset gate and the value of \({r}_{t}\) is closer to 0, indicating that more information from the previous time step needs to be forgotten. \({z}_{t}\) is the update gate, and its value closer to 1 indicates that more information from the previous time step is retained. \({\tilde{h}}_{t}\) is the candidate hidden state, reflecting the input information at time t and selectively retaining information from the previous time step \({h}_{t-1}\). \({h}_{t}\) is the output of the hidden layer at time t. \(\sigma\) is the Sigmoid function, tanh is the activation function; \({W}_{r}\), \({U}_{r}\), \({W}_{z}\), \({U}_{z}\), \({h}_{t-1}\), \(W\), and \(U\) are the training parameter matrices in the GRU unit.

BiGRU is composed of two GRUs with opposite propagation directions, and its structure is shown in Fig. 11. Structurally, BiGRU can be regarded as a bidirectional recurrent network combining two GRUs with opposite propagation directions. From the perspective of the information flow direction, BiGRU adds the data flow from the future to the past on the basis of GRU.

The output of each BiGRU unit at each time step is jointly influenced by the bias at time step \(t\), the output \({\overrightarrow{h}}_{t}\) of the forward-propagating GRU, and the output \({\overleftarrow{h}}_{t}\) of the backward-propagating GRU. The specific calculation process is as follows:

where \(GRU\left( \cdot \right)\) is the computation process of GRU, \({\overleftarrow{h}}_{t}\) and \({\overleftarrow{h}}_{i}\) are the forward and backward hidden layer outputs of GRU, \({\alpha }_{t}\) and \({\beta }_{t}\) are the weight outputs of the corresponding hidden layers, and \({c}_{t}\) is the bias of the hidden layer corresponding to \({h}_{t}\).

Fusion module AFM

Directly concatenating time-domain features and frequency-domain features may lead to the neglect of the correlation between the two, and fail to fully understand the characteristics of vibration events in multiple laying scenarios. Moreover, simply fusing the two branches will cause the loss of feature information. To solve these problems, we proposes the Attention Fusion Module (AFM). AFM reorganizes the two types of features by analyzing the relationship between time-domain and frequency-domain features, ensuring that each branch incorporates the key information from the other. To retain the original features and avoid overfitting during the fusion process, the residual structure and normalization layers are introduced in the module. Finally, the two processed branches are concatenated. Compared with the traditional direct concatenation module, this module can more accurately recognize vibration events in multiple scenarios. The structure of AFM is shown in Fig. 12.

Let \({X}^{1}\in {R}^{t\times d}\), \({X}^{2}\in {R}^{t\times d}\) be the time-domain feature sequence and the frequency-domain feature sequence respectively, where \(t\) is the length of the time step and \(d\) is the local feature dimension of this time step. The calculation formula of AFM is as follows:

First, \({X}^{1}\), \({X}^{2}\) are transformed by linear transformation matrices \({W}^{Q}\in {R}^{d\times d}\), \({W}^{K}\in {R}^{d\times d}\) and \({W}^{V}\in {R}^{d\times d}\) to generate \(Q\), \(K\), \({V}^{1}\), \({V}^{2}\):

Secondly, by calculating the similarity between \(Q\) and \(K\) and applying the activation function \(Softmax\left( ./ \sqrt{{d}_{k}}\right)\), the relation matrices of \({X}^{1}\) and \({X}^{2}\) are obtained. \({V}^{1}\) and \({V}^{2}\) are respectively operated with the relation matrices to obtain \({Y}^{1}\) and \({Y}^{2}\) that contain the correlation relationship:

Then, \({X}^{1}\) and \({X}^{2}\) are respectively connected with \({Y}^{1}\) and \({Y}^{2}\) through residual connections.

Finally, the results of both are passed through the normalization layers respectively and concatenated:

Experimental design

Experimental deployment

Take an underground power optical cable with multiple laying scenarios in a substation in Changchun City as the experimental object. The experiment is conducted in an open area next to a substation. The buried section is located beneath a landscaped grass surface, while the manhole is situated within the same grassy area. A roadway is approximately 300 m away, with occasional pedestrians, bicycles, and cars passing by. The weather is clear and dry, with no rain or snow before data collection, and the soil remains dry throughout the experiment.

The optical cable route extends from the current substation to the next substation, forming a typical urban underground power optical cable link. A schematic diagram of the cable layout on the side of the starting substation is shown in Fig. 13. The total length of the cable is 1.98 km, of which 0.42 km is inside the substation, 1.5 km is buried outside the substation, and 0.03 km in each manhole. The buried section of the cable is laid at a depth of approximately 2.95 m and is protected by casings (represented by red lines in Fig. 13). Each manhole has a depth of about 3 m, where the optical cable is placed directly on the concrete floor without any protective casing. Similarly, the section of the cable inside the substation is also laid without casings.

To accurately collect the vibration signals of the cable in the given buried area and manhole, a 1 km G652D optical fiber is used to connectethe \(\phi\)-OTDR system and the cable. To ensure the quality of signal acquisition and meet real-time requirements of the \(\phi\)-OTDR system, the pulse width of the acquisition is set to 32 ns, the frequency is set to 1000 Hz, and the spatial resolution is 2 m. The experimental scenario is shown in Fig. 13.

For the given buried and manhole laying scenarios in Fig. 13, 15 different types of vibration event experiments are conducted.The vibration events in the buried laying scenario are shown in Table 1; the vibration events in the manhole laying scenario are shown in Table 2.

Data preprocessing and dataset construction

The \(\phi\)-OTDR system is used to collect vibration signals within the designated experimental area, while manually recording the event types, spatial locations, and laying scenarios of different vibrations. The \(\phi\)-OTDR system outputs the collected \(\phi\)-OTDR signals as multiple \(1024\times 781\) MAT files.

The \(\phi\)-OTDR signals are filtered using a high-pass filter (instrument preset high-pass filter coefficients b2, cutoff frequency of 2 Hz, passband of 5 Hz, and stopband attenuation of − 80 dB).Then the filtered signals are processed by a low-pass filter (instrument preset low-pass filter coefficients b3, cutoff frequency of 150 Hz, passband of 100Hz, and stopband attenuation of − 80 dB), ultimately yielding the preprocessed space-time signals.

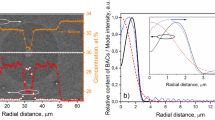

By applying low-pass and high-pass filtering in combination, we compare their effects on different vibration events, such as foreign object falling and digging, which require low-pass filtering to retain the main signal trend, and striking or hammer drill, which require high-pass filtering to extract high-frequency details. These visualizations in Figs 14 and 15 provide a clearer demonstration of the filtering impact on signal quality and feature retention.

The space-time signals are converted into \(1024\times 781\) space-time images, and based on the manually recorded spatial locations and laying scenarios, Labelme is used to annotate laying scenario labels and vibration areas, forming the localization dataset. In the localization dataset, background noise refers to signals without vibration events, and such samples are not annotated with laying scenario labels or vibration areas. Here, 1024 represents the length of each data sample in the time dimension, while 781 represents the number of spatial sampling points.

To construct the recognition dataset, the time-domain signals of four adjacent spatial points in the vibration area are extracted from the space-time signals based on the manually recorded vibration locations. The extracted time-domain signals are then categorized into buried and manhole recognition datasets according to the recorded laying scenarios. Finally, the extracted time-domain signals are assigned category labels based on the recorded event types. For background noise samples, four spatial points are randomly selected from non-vibrating space-time signals in both buried and manhole laying scenario regions. Each time-domain signal is a \(1024\times 1\) time series.

Table 3 summarizes the time cost of each processing step for vibration area localization and event recognition based on a \(1024 \times 781\) \(\phi\)-OTDR signal. To further evaluate the real-time performance of the proposed method, we refer to the testing times of the models in section “Results in the vibration area localization stage” (for vibration area localization) and section “Results in the vibration event recognition stage (for vibration event recognition), which are integrated into the steps of the table.

Figures 16 and 17 show space-time images of vibration area localization in buried and manhole scenarios, respectively. In the buried laying scenario, the underground power optical cable is encased in casings, which significantly attenuate the propagation intensity of time-domain signals. This attenuation is particularly evident in the high-frequency range, where the amplitude reduction is more pronounced. Consequently, the amplitude of the same vibration event is lower in the buried laying scenario. In contrast, in the manhole laying scenario, due to the absence of casings and soil medium insulation, the intensity of the time-domain signals is closer to the original vibration. Figures 18 and 19 represent the visualization of time-domain signals for some vibration events in buried and manhole scenarios.

The localization dataset, buried recognition dataset, and manhole recognition dataset are all randomly divided into training, validation, and test sets at a 7:2:1 ratio. The detailed information of the datasets is shown in the Table 4.

To compare the performance of recognizing time-domain signals extracted from space-time signals versus directly recognizing space-time signals, the space-time images in the localization dataset are divided into the buried space-time image recognition dataset and the manhole space-time image recognition dataset according to the laying scenarios, with event type labels assigned. The number of samples in each subset is the same as in the localization dataset.

Hyperparameter settings

We use PyTorch 1.11.0 as the method framework of vibration area localization and event recognition. All experiments are conducted on a workstation equipped with an NVIDIA GeForce RTX 2080 GPU with 64 GB of memory and CUDA version 11.3.All vibration area localization models are trained with a batch size of 8 and 100 epochs. All vibration event recognition models are trained with a batch size of 16, 50 epochs, and a learning rate of 0.0003.

Evaluation metrics

When evaluating different models in vibration area localization, we use Parameters and GFLOPs to measure the model’s scale. Parameters represent the spatial complexity of the model, while GFLOPs describe the computational complexity. The testing time reflects the average localization time of the model.

To evaluate the performance of vibration area localization, Precision, Recall, mAP@50, and mAP@50-95 are used as key indicators, each reflecting a different aspect of the localization process:

Precision focuses on the accuracy of vibration area predictions. A high precision ensures that detected vibration areas truly correspond to actual vibration sources, reducing the misidentification of background noise or environmental disturbances as vibration events.

Recall emphasizes the model’s ability to capture all existing vibration areas. A higher recall means the system effectively detects weak or small-scale vibrations, which is critical for identifying subtle disturbances in underground power optical cables.

mAP@50 evaluates the correctness of vibration area localization when allowing a moderate overlap with ground truth (IoU = 50%). This metric is particularly relevant for practical applications where rough localization of vibration events is sufficient for early warning and monitoring.

mAP@50-95 provides a stricter evaluation by assessing localization accuracy across multiple IoU thresholds. This reflects the model’s capability to precisely delineate the boundaries of vibration areas, which is essential for distinguishing overlapping or closely spaced vibration events.

When evaluating models in vibration recognition, we also use Parameters to measure the model’s scale, and testing time to represent the average recognition time of the model. For performance evaluation, metrics such as Accuracy, Precision, Recall, and F1-score are adopted to assess each model’s recognition performance.

Experimental results

Results in the vibration area localization stage

To validate the localization effectiveness of PGSD-YOLO, we conduct ablation and comparison experiments, analyzing the results on the test set of the localization dataset. In the ablation experiments, we verify the improvement effects of each module by progressively incorporating PMSAM, GSConv with VoVGSCSP, and DySample. In the comparison experiments, we comprehensively compare PGSD-YOLO with YOLOv3-tiny, YOLOv5n, and YOLOv8n, among other lightweight YOLO models. The results show that PGSD-YOLO achieves optimal performance in localization, computational complexity, model parameters, and real-time capabilities.

Ablation experiment of PGSD-YOLO

We conducted ablation experiments of PGSD-YOLO based on YOLOv11n, combined with PSMSAM, GSConv, VoVGSCSP, and DySample, where GSVO represents GSConv and VoVGSCSP. The experimental results are shown in Table 5.

First, we use PSMSAM to improve C2PSA in the backbone network. Compared with YOLOv11n, the parameters of the improved model are reduced, and the amount of calculation remains basically unchanged. The precision, mAP@50, and mAP@50-95 are increased by 0.31%, 0.07%, and 0.59%, respectively, but the Recall is decreased by 0.33%, and the testing time is increased by 0.286ms. PSMSAM improves some of the performance of YOLOv11n by improving the PSA module and abandoning the cross-connection structure of the original model.

Next, we replace the Conv of the neck network with GSConv and C3k2 with VoVGSCSP. Experimental results show that GSConv and VoVGSCSP fully extract the deep features of PSMSAM. Compared with improving PSMSAM only in YOLOv11n, the parameters, computational complexity and testing time of the model are reduced, and the Precision, mAP@50, mAP@50-95 and Recall are improved. However, in comparison to YOLOv11n, the Recall is decreased by 0.12%.

The upsampling layer of YOLOv11n loses some information of the deep features, which is not conducive to the feature fusion in the neck network. Based on the improvement of PSMSAM and GSVO in YOLOv11n, the original upsampling layer is replaced by DySample. Experimental results show that although some indicators of PGSD-YOLO are not as good as those of the other ablation models, all performance indicators of PGSD-YOLO are superior to the original YOLOv11n model.Experimental results show that although some indicators of PGSD-YOLO are not as good as those of the other ablation models, all performance indicators of PGSD-YOLO are superior to the original YOLOv11n model. The Precision, Recall, mAP@50, and mAP@50-95 are increased by 0.35%, 0.93%, 0.13%, and 1.13%, respectively. The computational complexity, computational amount, and testing time of PGSD-YOLO are all decreased.

Performance analysis of PGSD-YOLO

We select lightweight models of the YOLO series to verify the effectiveness of PGSD-YOLO. These models include YOLOv3-tiny6, YOLOv5-tiny40, YOLOv7-tiny41, YOLOv8n42, YOLOv8n-CBAM8, YOLOv9n43, YOLOv10n44, and YOLOv11n. The experiments are carried out under the same conditions. Among them, YOLOv3-tiny and YOLOv8n-CBAM have already been applied to single laying scenarios for perimeter security applications, while the remaining models are general object detection models that have not been applied to vibration area localization tasks. The results are shown in Table 6.

The backbone network of YOLOv5n is simplified to CSPDarknet-tiny, significantly reducing the number of layers and the quantity of residual blocks. The basic spatial pyramid pooling module of the SPPCSPC is only retained by YOLOv7-tiny, reducing the level of feature fusion. Although the real-time requirements of the vibration area localization is met by the YOLOv5-tiny and YOLOv7-tiny, the performance metrics are significantly lower than that of PGSD-YOLO. Compared with YOLOv5-tiny, the Precision, Recall, mAP@50, and mAP@50-95 of PGSD-YOLO are increased by 1.65%, 2.1%, 1.02%, and 1.81%, respectively. Compared with YOLOv7-tiny, the Precision, Recall, mAP@50, and mAP@50-95 of PGSD-YOLO are increased by 3.85%, 6.17%, 4.08%, and 7.5%, respectively.

The network structure of YOLOv3-tiny is relatively larger and more complex compared to other YOLO models. Moreover, the multi-scale vibration space-time images cannot be fully adapted to by the fixed parameters of the anchor frames. More computing resources are needed for vibration area localization, and the real-time capabilities is poor. Compared with PGSD-YOLO, the GFLOPS, parameters, and test time of YOLOv3-tiny are increased by 12.9, 9.57 M, and 2.319 ms, respectively.

The deep features of SPPF in YOLOv8n and YOLOv8n-CBAM are obtained through multi-scale pooling. However, repetitive information may be contained in the features of different scales, resulting in feature redundancy. When applied directly to the neck network, the performance of vibration area localization will be affected.Compared with PGSD-YOLO, the Precision, Recall, mAP@50, and mAP@50-95 of YOLOv8n are decreased by 1.29%, 0.94%, 0.16%, and 0.89%, respectively. The Precision, Recall, mAP@50, and mAP@50-95 of YOLOv8n-CBAM are decreased by 2.38%, 1.12%, 0.64%, and 1.33%, respectively.

GELAN is utilized as a part of the backbone network by YOLOv9n, replacing the traditional convolutional layers and residual blocks, to enhance the ability of feature extraction. However, GELAN adopts a multi-branch structure, which will significantly increase the computational cost and test time of YOLOv9n. Compared with PGSD-YOLO, the GFLOPS of the YOLOv9n model is increased by 6.0 and the testing time is increased by 4.603 ms.

PSA is adopted by YOLOv10n to further extract the deep features of SPPF, focusing on the key information of the features and reducing the interference of redundant information to improve the feature fusion efficiency. However, the multi-head attention mechanism adopted inside PSA will lead to the loss of some local detail information, which is not conducive to the vibration area localization. Compared with PGSD-YOLO, the Precision, Recall, mAP@50, and mAP@50-95 of YOLOv10n are decreased by 2.87%, 1.98%, 1.42%, and 3.82%, respectively.

The Precision, Recall, mAP@50, and mAP@50-95 of YOLOv3-tiny, YOLOv8n, YOLOv8n-CBAM, YOLOv9n, and YOLOv10n are compared, and PGSD-YOLO achieves the best performance across all metrics. In addition, PGSD-YOLO presents the lowest GFLOPS, parameter count, and testing time, thereby meeting the real-time requirements and offering low deployment complexity. Specifically, the total processing time for localizing one \(\phi\)-OTDR sample includes 27.30 ms for the combination of high-pass and low-pass filtering, 13.04 ms for converting the signal into a space-time image, and 6.817 ms testing time of PGSD-YOLO, summing to only 47.157 ms. Given that the acquisition time for one \(\phi\)-OTDR signal is 1000 ms, PGSD-YOLO can complete the entire localization process well before the next signal is acquired, which fully satisfies the real-time localization requirement. Overall, PGSD-YOLO demonstrates superior localization performance for underground power optical cable vibration signals collected by the \(\phi\)-OTDR system, along with strong computational efficiency.

To further assess the performance of PGSD-YOLO in vibration area localization, we compare its box loss, classification loss (cls_loss), Precision, Recall, mAP@50, and mAP@50-95 on the validation set with those of existing lightweight YOLO models, as illustrated in Fig 20. The box loss reflects the accuracy of the predicted bounding boxes of vibration areas compared to the ground truth, where a lower value indicates better spatial localization precision. The classification loss measures the model’s ability to correctly classify the laying scenario of the vibration area. As the number of iterations increases, PGSD-YOLO consistently achieves higher performance.

After the vibration signals collected in real time by the\(\phi\)-OTDR system in the underground power optical cable are preprocessed, the vibration area localization is carried out. Every laying scenario label in each image is correctly marked by PGSD-YOLO, and it can accurately locate the areas of different types of space-time images. Visualization results of some vibration area localization are shown in Fig. 21.

In the buried laying scenario, motorcycle appear in the space-time images as continuous high-frequency energy streaks with high intensity and wide coverage. The object boxes fully cover the high-frequency signal areas without interference from other noise. Striking signals are irregularly distributed, consisting of multiple short-duration high-energy shock waves with minimal background noise. The object box of striking can ensure complete signal coverage. Digging signals are represented in the space-time images as intermittent strong signals, similar in frequency to background noise but with higher energy. The model successfully filters out background noise and localizes the vibration signal areas. Platform cart signals are characterized by low-frequency, continuous energy streaks and low intensity. The object boxes fully annotate the low-frequency signal areas without missing critical information.

In the manhole laying scenario, drilling signals are concentrated in the center of the space-time images, appearing as continuous high-frequency energy streaks with moderate range. The object boxes accurately annotate the areas while avoiding background interference. Walking signals are intermittent, with sparse yet distinct energy streaks and minimal background noise. The object boxes fully annotate all intermittent signals without missing any major areas. Striking signals are scattered, characterized by multiple short-duration high-energy signal peaks, with background noise in the intervals. The model uses object boxes to cover all high-energy signal areas, unaffected by background noise. Falling object signals appear in the space-time images as continuous high-energy streaks that gradually decay. The object boxes cover the entire signal path from high to low energy, ensuring complete coverage of the vibration areas.

In summary, PGSD-YOLO demonstrates superior vibration area localization performance in both buried and manhole laying scenarios. The laying scenario labels and vibration area spatial points obtained by the model are used for vibration event recognition in a multi-scenario underground power optical cable.

Results in the vibration event recognition stage

To validate the effectiveness of 1DCNN-BiGRU-AFM for vibration event recognition in a multi-scenario underground power optical cable, we conduct ablation and comparison experiments, analyzing the results on the test set of the recognition dataset.

In the ablation experiments, we verify the improvement in recognition performance by progressively introducing the 1DCNN, BiGRU, and AFM modules. In the comparison experiments, we compare 1DCNN-BiGRU-AFM with other CNN models such as DenseNet-121 + TAM and LSTM + DWT + STFT + CNN, and Transformer models such as FL-SwinT-L and ViT-VSEC. The results show that 1DCNN-BiGRU-AFM achieves superior recognition performance and real-time capabilities in both buried and manhole laying scenarios.

Ablation experiment of 1DCNN-BiGRU-AFM

We conduct ablation experiments on 1DCNN-BiGRU-AFM using the recognition datasets from buried and manhole laying scenarios, with the experimental results shown in Tables 7 and 8. Input indicates whether time-domain or frequency-domain signals are included as input, with the selected signals being fed into 1DCNN. BiGRU denotes whether BiGRU is included. Concat represents the direct concatenation of features obtained from specific modules for each input, while AFM denotes the fusion of these features using the AFM module.

According to Models 1 and 3 in the two tables, using 1DCNN alone can only extract local features from either the time-domain or frequency-domain, resulting in the lowest Precision, Recall, and F1-score. This indicates that 1DCNN struggles to capture global relationships between features, leading to limited recognition performance.

According to Models 2 and 4 in the two tables, improvements in Precision, Recall, and F1-score are achieved by incorporating BiGRU, which enables dynamic changes and long-term dependencies in the time-domain or frequency-domain to be captured.In the buried laying scenario, compared to Model 1, Model 2 achieves improvements in Precision, Recall, and F1-score by 2.47%, 2.64%, and 2.48%, respectively. Compared to Model 3, Model 4 achieves increases in these metrics by 30.67%, 29.05%, and 30.89%, respectively.In the manhole laying scenario, compared to Model 1, Model 2 achieves improvements in Precision, Recall, and F1-score by 14.98%, 17.88%, and 23.46%, respectively. Similarly, compared to Model 3, Model 4 achieves increases in these metrics by 13.10%, 12.34%, and 15.70%, respectively.

By directly concatenating time-domain and frequency-domain features, the model can leverage the complementarity of both feature types, achieving better performance compared to using a single feature type. As shown in the data from the two tables, Precision, Recall, and F1-score are all superior to those of models with single-feature input.However, concatenation is a linear fusion method that fails to deeply capture the complex relationships between the two feature types, potentially resulting in partial information loss.

In order to achieve better results, direct concatenation is replaced with AFM, which employs alternating fusion and attention mechanisms to successfully capture the nonlinear relationships between time-domain and frequency-domain features, further enhancing performance.In the buried laying scenario, compared to Model 5, Model 6 achieves improvements in Precision, Recall, and F1-score by 5.85%, 5.84%, and 6.22%, respectively. In the manhole laying scenario, compared to Model 5, Precision, Recall, and F1-score for Model 6 are improved by 6.25%, 6.19%, and 6.27%, respectively.In both buried and manhole laying scenarios, the proposed 1DCNN-BiGRU-AFM achieves the highest Precision, Recall, and F1-score among all models.

Performance analysis of 1DCNN-BiGRU-AFM

We compare 1DCNN-BiGRU-AFM with Dense Net-121 + TAM45, LSTM + DWT + STFT + CNN46, FL-SwinT-L15, and ViT-VSEC47. The Performance (Accuracy and Loss) curves of these models on the validation set with increasing epochs are shown in Fig. 22. For 1DCNN-BiGRU-AFM, DenseNet-121 + TAM, and LSTM + DWT + STFT + CNN, the validation sets consist of time-domain signals from buried and manhole laying scenarios, while FL-SwinT-L and ViT-VSEC use space-time image recognition dataset from the same scenarios.

The 1DCNN-BiGRU-AFM model achieves high Accuracy with fewer epochs, demonstrating faster convergence during the early training phase compared to other models. This allows it to improve Accuracy in a shorter time, highlighting its efficiency in learning critical features of vibration signals. This efficiency reduces the time required for model training, making it particularly advantageous for real-time recognition of underground power optical cable vibration events. In contrast, other models, such as DenseNet-121 + TAM, LSTM + DWT + STFT + CNN, FL-SwinT-L, and ViT-VSEC, show slower Accuracy improvement during the initial training phase, requiring more epochs to reach comparable Accuracy levels.

The Loss curves of 1DCNN-BiGRU-AFM drops rapidly and stabilizes at an early epoch, demonstrating good convergence. Compared to other models, its Loss remains consistently low with minimal fluctuations, indicating strong stability. In contrast, other models exhibit larger Loss fluctuations or slower decline during training, whereas 1DCNN-BiGRU-AFM effectively captures key features and maintains a low validation Loss, showcasing superior recognition performance.

The CNN models utilize the dataset from the vibration event recognition stage. Among the CNN models, the recognition performance of 1DCNN-BiGRU-AFM is compared with LSTM + DWT + STFT + CNN and DenseNet-121 + TAM, as shown in Tables 9 and 10. The results indicate that 1DCNN-BiGRU-AFM achieves the highest Accuracy as well as the highest macro-average Precision, Recall, and F1-score. LSTM + DWT + STFT + CNN has lower parameters and testing time compared to 1DCNN-BiGRU-AFM in both buried and manhole laying scenarios. However, LSTM + DWT + STFT + CNN simply concatenates the features from the STFT, DWT, and LSTM-CNN paths before inputing them into the fully connected layer without deeply interacting with features from different paths. This model may result in certain critical features being underutilized. DenseNet-121 + TAM has significantly higher parameters and testing time than 1DCNN-BiGRU-AFM in both buried and manhole laying scenarios. This model convert the time-domain signals into images through STFT before recognition, which increases the preprocessing time. During recognition, each layer also needs to handle inputs from all preceding layers, significantly increasing storage requirements.

In Transformer models, this study classifies the vibration space-time images from the buried space-time image recognition dataset and the manhole space-time image recognition dataset. The recognition performance of 1DCNN-BiGRU-AFM is compared with ViT-VSEC and Swin Transformer, as shown in Tables 9 and 10. The results indicate that 1DCNN-BiGRU-AFM achieves the highest Accuracy as well as the highest macro-average Precision, Recall, and F1-score.

ViT-VSEC and Swin Transformer require image-based samples to achieve optimal recognition performance. However, these models have significantly larger parameters and testing times, which do not meet real-time requirements and result in higher deployment costs. Compared to 1DCNN-BiGRU-AFM, ViT-VSEC has an additional 49.9 M parameters, with testing time increased by 2.15 ms in the buried laying scenario and by 2.07 ms in the manhole laying scenario. Swin Transformer adds 26.76 M parameters, with testing time increased by 2.54 ms in the buried laying scenario and by 2.27 ms in the manhole laying scenario.

In the buried laying scenario, due to the soil medium and casing protection, the crushing effects of motorcycles and handcarts are similar. In the manhole laying scenario, as both hook pulling and striking directly act on the underground power optical cable at the same frequency, they produce similar deformations. The vibration frequencies of these events have significant overlapping areas, resulting in similar frequency-domain features obtained through STFT and DWT.

The confusion matrices in the buried laying scenario for 1DCNN-BiGRU-AFM, LSTM + DWT + STFT + CNN, and DenseNet-121 + TAM are shown in Fig. 23. Compared with 1DCNN-BiGRU-AFM, the Precision, Recall and F1-score of LSTM + DWT + STFT + CNN for recognizing motorcycle are decreased by 18.50%, 11.24% and 15.05% respectively, and the Precision, Recall and F1-score for recognizing platform cart are decreased by 18.33%, 51.44% and 39.02% respectively. In DenseNet-121 + TAM, the Precision, Recall and F1-score for recognizing motorcycle are decreased by 15.12%, 14.21% and 14.67% respectively, and the Precision, Recall and F1-score for recognizing platform cart are decreased by 28.67%, 20.73% and 24.93% respectively. The confusion matrices in the manhole laying scenario for 1DCNN-BiGRU-AFM, LSTM + DWT + STFT + CNN, and DenseNet-121 + TAM are shown in Fig 24. Compared with 1DCNN-BiGRU-AFM, the Precision, Recall and F1-score of LSTM + DWT + STFT + CNN for recognizing hook pulling are decreased by 15.57%, 23.50% and 19.73% respectively, and the Precision, Recall and F1-score for recognizing striking are decreased by 21.57%, 14.16% and 18.03% respectively.

According to the confusion matrices for ViT-VSEC and Swin Transformer in Fig. 23, the Precision, Recall, and F1-score for recognizing vibration events such as digging, pickaxe, lead ball, and walking all reach 100%. From the confusion matrices for ViT-VSEC and Swin Transformer in Fig. 24, the Precision, Recall, and F1-score for recognizing vibration events such as electric drill, foreign object falling, background noise, climbing, and walking all exceed 97%. In the manhole laying scenario, ViT-VSEC and Swin Transformer exhibit excellent recognition performance, with the Precision, Recall, and F1-score for various vibration events being comparable to those of 1DCNN-BiGRU-AFM. In the buried laying scenario, ViT-VSEC and Swin Transformer demonstrate strong global dependency modeling capabilities when recognizing space-time images. However, for hammer drill in the buried scenario, the signals’ localized characteristics and prominent frequency-domain features make them overly dispersed and lack significant variation in the space-time images. This limits the models’ ability to effectively capture the features of hammer drill in space-time images.

In contrast, 1DCNN-BiGRU-AFM, through the extraction of both time-domain and frequency-domain features from time-domain signals, combined with BiGRU’s ability to capture long-term dependencies, is better suited for extracting the local features of hammer drill. Compared with 1DCNN-BiGRU-AFM, the Precision, Recall and F1-score of ViT-VSEC for recognizing hammer drill are decreased by 11.88%, 13.75% and 12.84% respectively. In Swin Transformer, the Precision, Recall and F1-score for recognizing hammer drill are decreased by 13.92%, 16.25% and 15.12% respectively.

Discussion of single-stage and two-stage recognition models

To comprehensively compare the performance of single-stage and two-stage vibration event recognition methods, we use the original \(1024 \times 781\) \(\phi\)-OTDR signals from the test set of the localization dataset. Each sample is annotated with vibration event labels. Since the background noise corresponds to non-vibration regions, event labels are not assigned to these samples. Therefore, to ensure a fair comparison, background noise is excluded from the recognition evaluation. The recognition results are evaluated by comparing the truth labels of each original \(\phi\)-OTDR signal with the predicted labels generated by the single-stage and two-stage vibration event recognition methods. Both recognition methods share the same preprocessing steps-Combination of High-pass and Low-pass Filtering and Conversion to space-time image-to ensure consistent input conditions.

The single-stage recognition models include YOLOv8n-CBAM8 and YOLOv3-tiny6, while the two-stage recognition model includes 1DCNN-BiGRU-AFM. YOLO models perform both localization and recognition on the localization dataset simultaneously, requiring additional computation for localization information. The recognition performance is shown in Tables 11. In contrast, 1DCNN-BiGRU-AFM performs recognition on time-domain signals from the recognition datasets for each laying scenario separately and its parameter and testing time of the 1DCNN-BiGRU-AFM include those of the PGSD-YOLO during the vibration area localization stage. The ressults are shown in Table 11.

Compared with 1DCNN-BiGRU-AFM, the parameters of YOLOv8n-CBAM are decreased by 0.24 M. The testing time for recognizing vibration events in the buried laying scenario and manhole laying scenario is decreased by 1.39 ms and 1.22 ms, respectively. Although the testing time and parameters of the two-stage method are higher than those of YOLOv8n-CBAM, they both meet the sampling frequency and model deployment conditions of the \(\phi\)-OTDR system. The parameters of YOLOv3-tiny is increased by 8.79 M. When the vibration events in the buried laying scenario are recognized, the testing time is increased by 0.76 ms. When the vibration events in the manhole laying scenario are recognized, the testing time is increased by 0.93 ms. YOLO models primarily distinguish vibration events in space-time images by analyzing spatial position changes. However, they are unable to directly handle the dynamic characteristics of time-domain signals and cannot capture the relationship between frequency-domain and time-domain variations. Additionally, since background noise is not labeled in the space-time images, there are no corresponding recognition metrics for it.

As shown in Tables 11, compared to YOLOv8n-CBAM and YOLOv3-tiny, the Precision, Recall, and F1-score for recognizing other vibration events, are all lower than those of 1DCNN-BiGRU-AFM. Therefore, the results obtained by single-stage recognition models are inferior to those achieved by two-stage recognition models.

Conclusion and future work

This paper proposes a method for vibration area localization and event recognition of the underground power optical cable across multiple laying scenarios. In the vibration area localization stage, PGSD-YOLO is proposed to localize vibration areas and obtain laying scenario labels. The extracted signals are segmented into time-domain signals. In the vibration event recognition stage, the extracted time-domain signals are recognized using 1DCNN-BiGRU-AFM based on the laying scenario labels. The rationality of the two models is validated in terms of real-time capabilities, deployment complexity, and performance. PGSD-YOLO, based on YOLOv11n, replaces specific modules with PSMSAM, GSConv, VoVGSCSP, and DySample. The improved PGSD-YO achieves the best performance in Precision, mAP@50, mAP@50-95, and Recall while maintaining low parameters, GFLOPs, and testing time.1DCNN-BiGRU-AFM extracts local features in the time and frequency-domains using 1DCNN, captures temporal dependencies with BiGRU, and enhances features and assigns weights through AFM. This model achieves the highest Precision, Recall, and F1-score in both buried and manhole laying scenarios while maintaining low parameter count and testing time. Finally, the comparison reveals that single-stage recognition models cannot achieve the performance of the two-stage recognition model, validating the superiority of our proposed localization-first and recognition-later method.

Future research will conduct in-depth studies on power optical cables in complex laying scenarios such as buried underground, manholes, cable trenches and overhead. In these new multiple scenarios, the frequency characteristics and variation laws of vibration signals are more complex, and may involve a variety of different vibration modes. For example, overhead cables may be affected by different types of vibration sources such as wind, lightning strikes, and bird activities. The frequency range, intensity and propagation mode of these vibration signals are different from those in underground power optical cables. In order to improve the adaptability and robustness of the model, future work will focus on the feature extraction and analysis of vibration signals in these complex scenarios and further optimize the model to achieve localization and recognition in complex laying scenarios.

Data availability

Data will be made available on request. For access, please visit E-mail(s): 1917224121@qq.com.

References

Yang, Y., Zhang, H. & Li, Y. Long-distance pipeline safety early warning: A distributed optical fiber sensing semi-supervised learning method. IEEE Sens. J. 21(17), 19453–19461. https://doi.org/10.1109/JSEN.2021.3087537 (2021).

Li, D. et al. Multi-source threatening event recognition scheme targeting drone intrusion in the fiber optic DAS system. IEEE Sens. J. https://doi.org/10.1109/JSEN.2024.3449440 (2024).