Abstract

The continued rise in global carbon dioxide (\(CO_2\)) emissions challenges international climate policy, particularly the goals of the Paris Agreement. This study forecasts \(CO_2\) emissions through 2030 for the eleven highest-emitting nations–China, the United States, India, Russia, Japan, Iran, Indonesia, Saudi Arabia, Canada, South Korea, and Germany–while assessing their progress toward Nationally Determined Contributions (NDCs). Using data from 1990 to 2023, we apply a robust data pipeline comprised of six machine learning models and sequential squeeze feature selection incorporating eleven economic, industrial, and energy consumption variables. We have modelled the scenario with an average prediction accuracy of 96.21%. Results indicate that Russia is on track to exceed its reduction targets, while Germany and the United States will fall slightly short. China, India, Japan, Canada, South Korea, and Indonesia are projected to miss their commitments by significant margins. At the same time, Iran and Saudi Arabia are expected to increase emissions rather than reduce them. These findings highlight the need for strengthened energy efficiency policies, expanded renewable energy adoption, enhanced carbon pricing mechanisms, and stricter regulatory enforcement. Emerging economies require international collaboration and investment to support low-carbon transitions. This study provides a data-driven assessment of emission trajectories, emphasizing the urgency of coordinated global action, technological innovation, and adaptive policy measures to align emissions with the 1.5\(^{\circ }{\hbox {C}}\) warming threshold. This work represents a novel integration of multivariate machine learning modelling, data-driven feature selection, and policy-oriented emission forecasts, establishing new methodological and empirical benchmarks in climate analytics.

Similar content being viewed by others

Introduction

Since the onset of the Industrial Revolution, fossil fuel combustion has led to a substantial rise in atmospheric greenhouse gas (GHG) concentrations, with carbon dioxide (\(CO_2\)) being a primary contributor. Consequently, global temperatures have been increasing unprecedentedly, with profound implications for the Earth’s climate and ecosystems.

Between 1990 and 2023, GHG concentrations increased by 51.5%, with \(CO_2\) contributing approximately 81% of this rise1, making it the dominant driver of anthropogenic global warming. The atmospheric accumulation of these gases is closely linked to the emissions of the world’s largest carbon-producing nations, predominantly industrialized countries and rapidly developing economies with high fossil fuel dependence. According to the 2024 Energy Institute Statistical Review of World Energy2, the top eleven \(CO_2\)-emitting countries in 2023 were China, the United States, India, Russia, Japan, Iran, Indonesia, Saudi Arabia, Canada, South Korea, and Germany, with China alone contributing 31.2% of global emissions. In this study, we focus on nations whose carbon emissions account for at least 1.5% of the global total, ensuring that the analysis captures the most significant contributors to worldwide emissions.

To curb global carbon emissions and mitigate climate change, world leaders convened for the first time at the Paris Climate Agreement, held in Paris from 30 November to 11 December 2015. The agreement established a collective commitment to limit the increase in global average temperature to well below \(2^o C\) above preindustrial levels, to restrict warming to \(1.5^o C\). Each participating country formulated its own Nationally Determined Contributions (NDCs) to meet this goal, describing the policies and actions it would take to support the global mitigation effort and customized to its national circumstances, as outlined in Table 2.

Despite global recognition of the importance of the Paris Agreement, progress remains uneven. Major emitters like China and India exhibit upward trends in emissions, threatening the collective goal of limiting global warming to \(1.5^o C\). A lack of robust and scalable forecasting models hinders the ability of policymakers to implement timely interventions.

The alarming increase in \(CO_2\) emissions has made it a critical subject for global researchers, policymakers, and environmentalists. Previous studies have explored that \(CO_2\) emission is influenced by multiple variables such as GDP3,4,5, energy consumption6,7,8,9,10, electric power consumption11, population3,12, industry13, manufacturing14,15, fertilizer consumption16, trade17, renewable electricity output18, renewable energy consumption4, domestic credit to private sector4, etc. In their study, Faruque et al.19 have summarised the correlation of \(CO_2\) emissions with multiple factors and the significance of considering the broad spectrum of variables that shape carbon emissions.

Y. Li13 proposed an innovative hybrid model integrating the Kalman Filter, Long Short-Term Memory, and Support Vector Machine (KLS) to predict China’s \(CO_2\) emissions. The model demonstrated high predictive accuracy, achieving a Mean Squared Error (MSE) of 0.0039 and a Mean Absolute Error (MAE) of 0.061. Although the study did not generate explicit forecasts for future emissions, it underscored the efficacy of combining multiple machine-learning techniques for time series prediction. The study uses a five-year fixed lag in the LSTM model to predict the next year’s \(CO_2\) emissions. This fixed window size may not be optimal for all periods and could reduce forecasting flexibility.

Y. Huang et al.20 employed Grey Relational Analysis (GRA) to identify 16 key factors influencing carbon emissions and utilized Principal Component Analysis (PCA) to minimize data redundancy. Their study found that LSTM outperformed both the Back Propagation Neural Network (BPNN) and Gaussian Process Regression (GPR) in predicting carbon emissions, highlighting the utility of PCA and LSTM in supporting emission reduction strategies in China. The study uses industrial structure, the proportion of thermal power generation and the energy consumption per unit of GDP as improved factors; after adding these factors, the LSTM predictions are closer to the true carbon emissions. As a result, they have suggested policy interventions focusing on these three factors rather than the original fifteen factors considered in the study.

J. Ofosu-Adarkwa et al.21 introduced a novel grey prediction model, V-GM(1, N), for forecasting \(CO_2\) emissions in the Chinese cement sector. The proposed approach achieved an impressive accuracy of 97.29% for data spanning 2005 to 2018, surpassing traditional forecasting techniques. This framework provides policymakers with a robust and cost-effective tool for reliable emissions forecasting and mitigation planning. The paper largely focuses on the cement sector of China for predicting models, missing out on the potential interaction between variables like economic growth, energy and industry-related variables.

M. Ahmed et al.3 employed an LSTM model to analyze carbon emissions in China and India from 1990 to 2014. The model yielded an MSE of 0.42 and a Mean Absolute Percentage Error (MAPE) of 6.52%. While the study did not include future projections, it demonstrated the effectiveness of LSTM in capturing complex patterns within emission datasets. The study uses a three-year fixed lag to predict the next year’s \(CO_2\) emissions. Moreover, the study uses data from 1990 to 2014, which may not fully capture recent economic and environmental policy changes in China and India.

S. Kumari et al.22 applied multiple machine learning techniques, including ARIMA, SARIMAX, and LSTM, to forecast \(CO_2\) emissions in India from 1980 to 2019. The LSTM model exhibited superior performance, achieving a MAPE of 3.101% and a Root Mean Squared Error (RMSE) of 60.635. Their projections suggest that India’s \(CO_2\) emissions could reach approximately five thousand metric tons by 2030, providing a critical forecast aligned with the country’s emission reduction targets. The paper focuses on univariate data for \(CO_2\) emissions without considering external factors such as population growth, economic growth, energy, etc.

L. Chang et al.23 utilized Projection Pursuit Regression (PPR) in combination with machine learning models, particularly Artificial Neural Networks (ANNs), to predict \(CO_2\) emissions in China from 1970 to 2021. Their ANN model achieved an RMSE of 0.206. The study’s findings indicated that, in the absence of significant policy interventions, emissions from building electricity consumption are projected to rise substantially by 2050, emphasizing the necessity of policy-driven climate action. Although the study is policy-oriented, it mostly includes policy directions on energy efficiency; it does not include specific policy scenarios in renewable energy policies and carbon taxes.

B. P. Chukwunonso et al.24 focused on predicting \(CO_2\) emissions in the United States using data from the U.S. Energy Information Administration (1973-2022). The study leveraged advanced neural networks, including Layered Recurrent Neural Networks (L-RNN), which achieved high predictive accuracy with MAPE (1.187), MSE (135.5669), and RMSE (11.6433). The study tests the model performance of \(CO_2\) emissions for 2011 to 2022; no actual forecast is reported in the study.

The study of M. Ahmed et al.25 investigated the energy consumption of China, India, the USA, and Russia and its trend in greenhouse gas emissions (\(CO_2\), methane, and Nitrous oxide (\(N_2O\)) using datasets spanning from 1980 to 2018 and then forecast the trend of greenhouse gas emissions in these countries from 2019 to 2023. The predictive results suggested LSTM is the best-performing model against SVM and ANN. The study has limitations in lag selection (a fixed 3-year lag for training the models ), model diversity, and short-term forecasting from 2019 to 2023; long-term forecasting would be more useful to policymakers.

C. Dong et al.4 examined the likelihood of achieving the Paris Agreement targets among the top ten \(CO_2\)-emitting countries, including China, the USA, India, Russia, Japan, and others. Using a combination of trend extrapolation models and BP neural networks, their analysis suggested that China, India, and Russia might meet their NDCs under specific conditions. In contrast, the USA, Japan, and other nations were at risk of failing to do so. The study underscored the urgent need for enhanced policy interventions to align emissions trajectories with global climate goals.

A. Mardani et al.26 employed dimensionality reduction techniques (Singular Value Decomposition), clustering (Self-Organizing Maps), and machine learning algorithms (ANFIS and ANN) to analyze emissions data of G20 countries. Their best-performing model achieved an MAE of 0.065. Despite the methodological sophistication, the study’s lack of actionable long-term forecasts limited its applicability in strategic climate planning.

Jena et al.12 applied Multilayer Artificial Neural Networks (MLANN) to assess emissions trajectories in the 17 top emitters. Their analysis revealed substantial disparities in compliance with climate commitments; while some European nations demonstrated alignment with climate goals, China and Saudi Arabia remained on high-emission trajectories. However, the temporal coverage of the dataset that ended in 2012 reduces its relevance for contemporary policy discourse.

X. Li27 discussed various approaches to \(CO_2\) emissions prediction in China, categorizing them into statistical and machine learning models. The study compared different models to determine the most accurate approach for near-real-time forecasting. LSTM was the best-performing model for near-real-time daily \(CO_2\) emissions prediction with an accuracy value of 98.44%. The study analyzed \(CO_2\) emissions in China by considering the sum of emissions from power, industry, ground transport, residential, domestic, and international aviation. The exclusion of crucial exogenous economic and energy consumption variables limited the model’s comprehensiveness. The focus on daily predictions may neglect long-term trends vital for effective policymaking.

Wang et al.28 proposed a novel two-stage forecasting framework, which first predicted independent variables and then forecast \(CO_2\) emissions using machine learning (ML) models, reducing errors caused by time lag. The study focuses on China and analyzes quarterly data from 1985 to 2020. It applies a sliding window method (lag = 3) to generate forecasting samples. Among the four two-stage ML models tested, SVR-ANN performed best, achieving the lowest root mean square error (RMSE) and mean absolute error (MAE) across different forecast horizons. The model significantly reduced forecasting errors compared to single-stage models. The study fixes the sliding window width at 3, meaning only the past three observations are used to predict the next period. Exploring different window sizes might yield better accuracy. Moreover, no policy directions are suggested in this study.

Table 1 provides a structured summary of recent key contributions to \(CO_2\) emissions forecasting, reflecting the evolution of modelling approaches–from univariate statistical methods to advanced machine learning and hybrid techniques. Many of these studies focus on a single country (often China or the U.S.) and use a narrow set of predictors. Several studies (e.g., Jena et al., Ahmed et al.) are limited in temporal scope or lack long-term forecasting. More recent studies (e.g., Magazzino et al., 2024; Amin et al., 2025) have emphasized the role of green investment and energy transition variables, which are partially captured in our feature set through renewable electricity output and industrial value added. Our study addresses these limitations using a multivariate ML framework across eleven high-emitting countries, extending the forecasting horizon to 2030 and incorporating a robust feature selection algorithm to enhance interpretability and accuracy.

A significant limitation in the existing literature is the predominant reliance on annual data for key variables, which is insufficient to effectively forecast \(CO_2\) emissions using deep learning models. Consequently, performance metrics such as the coefficient of determination (\(R^2\)) are often omitted, potentially concealing underlying model inefficiencies. In instances where RMSE and MAE are reported, these metrics are frequently computed on scaled data rather than raw observations, leading to overestimating model accuracy. Many studies focus narrowly on univariate emissions data or single sectors–such as the cement industry in China–thereby neglecting the dynamic interplay between broader economic, energy, and industrial variables. Some works attempt to enhance forecasting accuracy by incorporating improved factors like industrial structure, the proportion of thermal power generation, and energy consumption per unit of GDP. Using feature selection has yielded predictions that align more closely with true emissions; they ultimately guide policy recommendations toward these limited factors rather than addressing the full spectrum of influences originally considered. Additional methodological constraints further diminish the robustness of these studies. Using a fixed sliding window size may not be optimal across different periods, reducing forecasting flexibility. The temporal coverage of datasets, often ending in 2012 or 2014, limits the relevance of the findings in the context of recent economic transformations and evolving environmental policies in major economies such as China and India. Despite some studies offering broad policy recommendations focused primarily on energy efficiency, they overlook the integration of comprehensive external factors such as population growth, overall economic performance, and aggressive policy scenarios like renewable energy incentives or carbon taxation. This gap results in a dearth of targeted, country-specific measures essential for strategic, long-term climate planning. Collectively, these shortcomings highlight the urgent need for methodological advancements that embrace diverse data sources, flexible forecasting techniques, and actionable policy frameworks to better align emissions trajectories with global climate goals.

Despite considerable advancement, existing models often suffer from narrow feature selection, fixed lag structures, or limited temporal horizons, undermining predictive robustness. This study addresses these gaps through a multi-country, multivariate ML modelling framework enhanced by a robust feature selection algorithm (SSFS), thereby generating forecasts with high empirical accuracy and policy fidelity. This study seeks to address these gaps by forecasting \(CO_2\) emissions for the top eleven global emitters through 2030, employing advanced ML methodologies (in order to gain better performance on low data volume over deep learning models given the availability of annual time granularity data) to assess their progress toward meeting their NDCs under the Paris Agreement. In selecting predictor variables, the study draws on established literature linking macroeconomic activity (GDP, manufacturing, and industrial value added), demographic pressures (population growth), and energy-related factors (fossil fuel energy consumption, electric power use, renewable energy penetration) to \(CO_2\) emissions dynamics. Prior studies–such as Faruque et al.19, Li13, and Huang et al.20–concluded that the interplay of economic growth, technological structure, and energy mix drives emissions. The feature set considered in the study thus operationalizes these dimensions with historically available and policy-relevant data. Table 12 illustrates the literature-backed validation of the features selected for the study. Distinguished by its methodological rigour and comprehensive scope, this research provides a more precise and policy-relevant analysis than previous studies. More recent studies (e.g., Magazzino et al., 2024; Amin et al., 2025) have emphasized the role of green investment and energy transition variables, which are partially captured in our feature set through renewable electricity output and industrial value added.

The uniqueness and contributions of this study from the previous literature are outlined as follows:

-

1.

The study performs a multivariate analysis of \(CO_2\) emissions employing a rigorous data pipeline comprised of state-of-the-art ML models panel for time series analysis with a comprehensive feature engineering framework, including temporal, target-derived, and lagged features, to capture complex emission dynamics to provide a robust forecast that can lead to more informed policy and mitigation strategies.

-

2.

The study models the carbon emission of the top eleven emitters using the panel’s best-performing model, and the Support Vector Regression (SVR) model demonstrated the highest accuracy of \(99.75\%\) in predicting \(CO_2\) emissions for Canada with an average accuracy of \(96.21\%\) which is the highest among the known academic body of knowledge.

-

3.

The study integrates Sequential Squeeze Feature Selection (SSFS)29 within the data pipeline to achieve an average accuracy of 96.21%, which is \(16.38\%\) higher than the accuracy achieved on baseline data, and the result is statistically significant using Wilcoxon rank sum test.

-

4.

The study offers a critical tool for understanding and mitigating the challenges of global climate change. The study also provides the current status of \(CO_2\) emissions of these countries and actionable insights into policy interventions required to reduce emissions effectively.

To the best of our knowledge, this study is the first to holistically integrate methodological innovation, empirical breadth, and policy linkage in forecasting \(CO_2\) emissions for the top global emitters.

The manuscript is organized as follows: The Materials and Methods section outlines the study’s data sources and methodological framework. The Results section presents the key findings. The Discussion section provides an overall discussion of the study, including a comparative study of our research and Policy Directions, which explores policy implications for \(\hbox {CO}_2\) emission reduction. The Conclusion provides a summary and closing remarks on the study’s outcomes.

Materials and method

Data

The dataset for the eleven countries was obtained from the World Bank30, covering the period from 1990 to 2023. The dependent variable, \(\hbox {CO}_2\), represents total \(\hbox {CO}_2\) emissions per unit of GDP. A detailed dataset summary, including all independent variables, is presented in Table 3. Since the data are recorded annually, missing values were addressed using a three-year moving average.

Technical specification

The simulations conducted in this study were performed using Python version 3.11.1 and Scikit-learn version 1.3.2. Details regarding the technical specifications of the computing systems utilized for the computations are provided in Table 4.

Methodology

With the continuous rise in global \(\hbox {CO}_2\) emissions and their environmental consequences, this study aims to develop a robust forecasting framework, as illustrated in Figure 1, utilizing advanced machine learning techniques tailored for time series analysis. The objective is to provide accurate predictions of future \(\hbox {CO}_2\) emissions, leveraging eleven key economic, energy and industrial indicators from the world’s top eleven \(\hbox {CO}_2\)-emitting countries. The variables incorporated into the forecasting framework can be viewed as proxies for well-known drivers in the IPAT identity and Kaya decomposition: economic output (GDP), energy intensity (energy consumption per GDP), carbon intensity of energy (fossil vs. renewable consumption), and structural factors such as industrial and manufacturing output31,32. Fertilizer consumption is included as an indicator of agricultural industrialization, which has growing relevance in high-emitting developing economies. These features not only enhance the model’s predictive power but also align it with interpretive frameworks used in environmental and energy economics. A detailed explanation of these variables, their significance, and their role in the model is presented in the data section. This subsection further elaborates on data pre-processing strategies and methodological considerations that enhance the model’s accuracy and reliability.

The rest of this section presents the empirical framework of the study in a stepwise and accessible manner. We begin by outlining the data cleaning and variable construction. We then detail the feature engineering strategy, model selection process, and evaluation metrics. Special attention is paid to the rationale behind choosing specific statistical tests and machine learning models to ensure transparency and reproducibility.

Workflow of the study. The figure illustrates the methodological workflow of this study. Initially, data was gathered from the World Bank and refined by eliminating irrelevant entries. After cleaning, feature engineering was performed, and any missing values generated during this process were addressed, resulting in the final dataset. The next step involved data pre-processing, including scaling and train-test splitting, to prepare the dataset for machine learning models. Subsequently, the best-performing model was selected from a set of candidates using cross-validation over a predefined hyperparameter space. Lastly, Sequential Squeeze Feature Selection (SSFS) was applied to eliminate redundant features, ensuring optimal model performance.

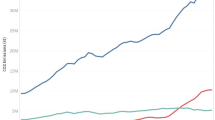

Global \(CO_2\) heat map of the base year (as per Table 9) vs. the forecasted year (2030). The figure illustrates the global heat map of carbon emission per GDP of the top eleven emitters of the base year (a) and forecast carbon emission by 2030 (b), which is, on average, \(96.21\%\) accurate. Russia is set to exceed its 2030 target with a 46.9% \(\hbox {CO}_2\) reduction from 1990 levels, while Germany and the USA will fall within 10% of their goals, cutting emissions by 61.76% and 44.33%, respectively. Canada, China, Japan, India, Indonesia, and South Korea are projected to miss their targets by over 10%, whereas Iran and Saudi Arabia will see rising emissions, with increases of 6.33% and 16.62%, respectively. The figure was generated using Python55 and Plotly56.

Data cleaning

The data obtained from the sources were mostly clean, but a few variables (fossil fuel energy consumption, electric power consumption, fertilizer consumption, renewable electricity output) had around \(2\%\) missing data, which were imputed using the moving average of the last three years. In case of data missing at the beginning of the time series, we have dropped the missing values; hence, the time scale of all the countries is not necessarily the same. We have observed 66.71% and 88.24% of missing values for renewable energy consumption (REC) and renewable electricity output (REO) at the beginning of the time series of Saudi Arabia. As a result, we have dropped the variables. For the same reason, we also have dropped the domestic credit to the private sector (DC) (58.82% missing data) for Indonesia. A graphical representation of all variables (Figure 3-14) of all eleven countries have been included in the Additional Information section. Descriptive statistics of data for all eleven countries are illustrated in Table 13 of the Additional Information section.

Feature engineering

Feature engineering in time series analysis involves creating or modifying features to enhance the predictive capabilities of machine learning models. This process is crucial for capturing the data’s underlying patterns and temporal dependencies. The feature engineering methods employed in this study can be classified into three main categories:

-

1.

Temporal Features: Temporal features are derived from the time component of the dataset. Since the data used in this study is recorded annually, the primary temporal feature considered is the year.

-

2.

Target-Derived Features: These features are generated based on the target variable and typically include lagged values, rolling and expanding window transformations, differencing, and statistical decompositions such as trend and seasonality extraction. Incorporating these features enhances predictive performance by leveraging past behaviours of the target variable, thereby improving forecast accuracy33,34. They aid in model interpretability by identifying influential past values, which is particularly useful for decision-makers. Rolling averages help smooth short-term fluctuations, whereas differencing makes the data stationary33. Seasonal decomposition is crucial in refining time series forecasting by separating data into different components, such as trend and seasonality, ultimately improving prediction accuracy34. For instance, the Seasonal Decomposition for Human Occupancy Counting (SD-HOC) model applies trend-seasonal decomposition with moving averages to enhance prediction accuracy in human occupancy forecasting35. The integration of seasonal decomposition in forecasting models allows for more precise estimations and informed decision-making. For seasonality decomposition we used additive model36 as illustrated in Equation (1).

$$\begin{aligned} y_t=Trend_t+Seasonailty_t+Residual_t \end{aligned}$$(1)The target-derived features employed in this study include:

-

Rolling window features: Mean and standard deviation.

-

Expanding window features: Mean and standard deviation.

-

Seasonal decomposition features: Trend and seasonality components.

-

Difference feature: First-order differencing of the target variable.

To capture both short- and long-term effects, window sizes ranging from 2 to 10 years were selected.

-

-

3.

Lagged features: Lagged features, representing past values of a time series, are fundamental in forecasting as they capture temporal dependencies. By incorporating these features, models can identify historical patterns to improve future predictions. However, including excessive lagged features can introduce redundancy, thereby reducing model efficiency and increasing computational overhead. Statistical methods such as Granger’s causality test were employed to ensure the selection of relevant lagged features. This test, proposed by Clive Granger, evaluates whether past values of one-time series contain significant predictive information for another37. While it does not establish true causality, it helps determine whether incorporating a particular lagged feature improves forecasting accuracy. The test is based on the following hypotheses:

-

a.

\(\mathbf {H_0:}\) Time series X does not Granger-cause time series Y.

-

b.

\(\mathbf {H_A:}\) Time series X Granger-causes time series Y.

-

Granger’s causality test is particularly useful in selecting the most relevant lagged features for the predictive model38, leading to a more efficient and generalizable model. To account for both short- and long-term influences on the target variable lags ranging from 1 to 6 were examined. Granger’s causality test was conducted on the 11 selected features at a \(5\%\) significance level. A summary of the number of lagged features chosen for each country is presented in Table 5, with the detailed test results available in Tables 14-24.

-

The Granger causality test was employed to select meaningful lagged features based on statistical dependency rather than arbitrary time lags. Although it does not establish causation in the philosophical sense, it determines whether the past values of one variable contain information that helps predict another. In this study, it ensures that only temporally predictive lags are retained, improving both accuracy and computational efficiency.”

The feature engineering process described earlier, especially the development of lagged features, has led to the occurrence of missing values. Typically, machine learning algorithms struggle to handle these missing values effectively. As a result, we have decided to eliminate the missing values from our dataset. While this decision shortens the time span of our data frame, it enhances the dataset by increasing the number of features, which may improve the performance of our machine learning models. Following the feature engineering process, the final feature set for each country comprises the following elements: original features, lagged features identified through Granger’s causality test, temporal features, rolling window features (window size:2-10), expanding window features, difference feature, and seasonal decomposition features. The rationale for selecting these features is elaborated upon in the corresponding paragraphs within the “Feature Engineering” subsection. The changes in the datasets post feature engineering regarding the number of features and data volume are well illustrated in Table 6. On average, a 282.64% increase in the number of features and a 190.72% increase in data points have been observed.

Data preprocessing

Considering that the dataset contains features with differing magnitudes, we apply normalization using a MinMax scaler, as described in Equation (2), to scale the values to the range [0, 1]. Due to its magnitude, this normalization process guarantees that no feature receives undue weight in the model training phase.

The final dataset is divided into three segments: \(70\%\) for the training set, \(10\%\) for the validation set, and \(20\%\) for the test set. The validation data is reserved for optimizing the model using SSFS.

Empirical forecasting equation

While the machine learning models used in this study do not estimate explicit structural equations, the final predictive framework may be conceptualized as a reduced-form empirical model of the following general structure:

where \(\widehat{CO_{2,t}^i}\) represents CO2 emissions per unit of GDP in country i at time t, \(X_{t-k}^{i}\) is a vector of lagged predictor variables selected through statistical and causal relevance (using Granger causality and SSFS), \(\theta\) denotes model parameters learned through supervised training, and \(\varepsilon _{t}^{i}\) captures unexplained variance.

While the functional form \(f(\cdot )\) is non-parametric and varies by algorithm, the core conceptual relationship reflects the economic-energy-environment nexus extensively discussed in the emissions literature.

This framing underscores that although ML approaches are data-driven, their architecture can still be interpreted through the lens of established environmental modelling frameworks. This interpretation is particularly useful when translating empirical results into policy directions.

Model selection by hyper parameter tuning

Once the final dataset is prepared, we conduct a grid search across a predefined hyperparameter space for each model illustrated in Figure 1. The optimal hyperparameters for each model are determined based on cross-validation scores obtained through five-fold time series cross-validation on the dataset. The models considered for this study include Decision Tree (DT), K Nearest Neighbors (KNN), Support Vector Regressor (SVR), Random Forest (RF), Gradient Boosting (GradBoost), and Extreme Gradient Boosting (XGBoost). A detailed description of the best model from each variant utilized in the study can be found in Table 25. The models selected–Decision Tree, KNN, SVR, Random Forest, Gradient Boosting, and XGBoost–are well-established in time-series regression and environmental forecasting due to their robustness in capturing non-linear relationships, ease of interpretability, and suitability for small-to-medium sized datasets (as is the case with annual data). Support Vector Regression (SVR), for example, is especially advantageous in high-dimensional spaces with limited data, offering strong regularization properties. XGBoost and Gradient Boosting are included for their ability to minimize overfitting via ensemble learning. The inclusion of diverse model types allows for comparative benchmarking across tree-based, kernel-based, and distance-based learners, ensuring robust selection of the best-performing model by country.

Subsequently, we fit the hyperparameter-tuned models to the training dataset and assess their performance on the test set to identify the final model. The evaluation metrics employed for this assessment are:

-

Root Mean Square Error, \(\textrm{RMSE}=\sqrt{\displaystyle \frac{1}{n} \sum _{t=1}^n\left( y_t-{\hat{y}}_t\right) ^2}\)

-

Mean Absolute Error, \(\textrm{MAE}=\displaystyle \frac{1}{n} \sum _{t=1}^n\left| y_t-{\hat{y}}_t\right|\)

-

Mean Absolute Percentage Error, \(\textrm{MAPE}=\displaystyle \frac{100 \%}{n} \sum _{t=1}^n\left| \displaystyle \frac{y_t-{\hat{y}}_t}{y_t}\right|\)

-

Coefficient of determination/R Squared, \(R^2=1-\displaystyle \frac{\displaystyle \sum _{t=1}^n\left( y_t-{\hat{y}}_t\right) ^2}{\displaystyle \sum _{t=1}^n\left( y_t-{\bar{y}}\right) ^2}\)

Sequential squeeze feature selection (SSFS)

Among various pre-processing steps, feature selection (FS) is an important pre-processing step of ML that prepares data before training any model39. Recently, SSFS has emerged as a notable advancement in the feature selection literature and has demonstrated strong performance in modelling Dengue propagation in Bangladesh29,36. Given the selection of a substantial number of features and the extensive feature engineering undertaken to capture temporal dependencies concerning the target variable while also addressing the limited data availability due to the annual frequency of observations. A robust feature selection algorithm is essential for eliminating redundant variables and enhancing model performance29.

The Sequential Squeeze Feature Selection (SSFS) method was selected over traditional sequential forward or backward selection algorithms because of its bidirectional nature and dynamic structure. SSFS is particularly suited to high-dimensional time-series datasets with engineered lagged features, where redundancy and collinearity can distort model performance. By assessing each feature’s contribution to predictive accuracy iteratively, the algorithm retains only those variables that materially improve model generalizability. Unlike Lasso or other penalized regression methods, SSFS provides direct, model-driven interpretability of feature importance29.

Let \(F = F_0 = \{f_i : i \in {\mathbb {N}}_{n(F)}\}\) represent the initial feature set, corresponding to the \(0^{th}\) iteration, where n(F) denotes the cardinality of the feature set F, and \({\mathbb {N}}_m\) is the initial segment of the natural numbers, defined as \({\mathbb {N}}_m = \{1,2,3,\dots ,m\}\). The feature set at the \(k^{th}\) iteration is given by \(F_k = \{f_i : i \in {\mathbb {N}}_{n(F)-k}\},\) where \(\forall i \in {\mathbb {N}}_{n(F)-k}\) and \(\forall k \in {\mathbb {N}}_{n(F)-1}\). The proposed accuracy-driven objective function for any feature \(f_i\) at the \(k^{th}\) iteration is defined as follows:

where \(A_{F_k}\) represents the accuracy of the machine learning model applied to the feature subset \(F_k\), and \(E_k\) denotes the set of features that have been eliminated up to the \(k^{th}\) iteration. Based on the objective function defined above, we introduce the following mathematical definition for a redundant feature:

Definition 1

(Redundant Features) For \(\epsilon > 0\), a feature \(f_i \in F\) is considered redundant if and only if

A well-known challenge in sequential feature selection methods is the nesting effect. The proposed Sequential Squeeze Feature Selection (SSFS) algorithm addresses this issue by allowing bidirectional movement of a feature \(f_i\) between the feature space \(F_k\) and the eliminated feature set \(E_k\)29. Since the algorithm is based on the formal definition of redundant features, unlike Sequential Forward Selection (SFS) and Sequential Backward Selection (SBS), the final number of selected features does not need to be predetermined. Instead, the selection process is entirely data- and model-driven29.

The algorithm employs both top-down and bottom-up approaches in a sequential manner to determine the final feature subset, hence the name Sequential Squeeze Feature Selection. The pseudocode for the proposed SSFS algorithm is provided in Algorithm 1.

The output of the custom objective function utilized in our approach can be reformulated to quantify the proportion of the model’s accuracy attributable to each feature. Let the SSFS algorithm run for a finite number of iterations, denoted as N, resulting in a final selected feature set \(F^* = F_N\). The contribution of an individual feature \(f_i \in F_N\) to the overall model accuracy, expressed as a percentage, is defined as follows:

To assess the efficacy of the feature selection method employed in this study, we will perform a Wilcoxon signed rank test to compare the model’s predictive accuracy before and after feature selection, thereby determining whether the observed improvement is statistically significant relative to the baseline model performance. The Wilcoxon signed-rank test, a non-parametric alternative to the paired t-test, is employed to assess whether the observed improvement in model accuracy after feature selection using SSFS is statistically significant. This test is particularly suitable when the differences between paired observations (in this case, model accuracies before and after SSFS) cannot be assumed to follow a normal distribution. By evaluating the magnitude and direction of these paired differences, the test determines whether the application of SSFS yields a consistent and statistically meaningful enhancement in predictive performance. The null and alternate hypotheses of the test are as follows:

-

\(\mathbf {H_0:}\) The two samples come from populations with identical distributions, implying no significant difference between them.

-

\(\mathbf {H_A:}\) The two samples come from populations from different distributions, indicating that one sample tends to have larger (or smaller) values than the other.

The test is widely used in various scientific domains, including bio-informatics, climate studies, and machine learning model evaluations, to assess whether observed differences in performance metrics, such as accuracy or error rates, are statistically significant.

To summarize, the empirical approach of this study involves:

-

Multivariate time series modelling of annual \(CO_2\) emissions across eleven countries using ML regression algorithms.

-

Construction of temporally dependent features and selection of informative lags using Granger causality.

-

Elimination of redundant predictors through SSFS.

-

Performance benchmarking across models using RMSE, MAE, MAPE, and \(R^2\).

-

Statistical significance of improvements is validated using the Wilcoxon test.

This pipeline balances predictive rigour with interpretability and is tailored for medium-sized datasets typical of international climate indicators.

Results

Feature selection

SSFS algorithm, on average, has provided \(16.38\%\) better performance in comparison to the baseline as depicted in Table 7. The findings have been found statistically significant by Wilcoxon rank sum test with test statistics \(\approx 0\), p-value \(=0.0009765625\) and degree of freedom \(=20\) comparing the accuracy of pre and post feature selection as illustrated in Table 7.

Feature engineering

Based on the SSFS algorithm, we derive the feature importance in terms of the model’s accuracy explained by each variable. Table 10 represents the most common important features for all countries except Iran and Saudi Arabia. Electric power consumption is the most significant and influential factor across seven nations (Canada, China, India, Iran, Japan, Russia, and the USA). Renewable energy consumption and renewable electricity output are crucial in six countries (China, Japan, Russia, the USA, Germany, and India). Meanwhile, fossil fuel energy consumption remains a key driver of emissions in five nations (China, Canada, Japan, Iran, and Saudi Arabia). These findings highlight that shifting towards clean energy and improving electricity efficiency is central to reducing \(CO_2\) emissions. GDP (Canada, China, Germany, Iran, Japan), population growth (Canada, China, India, the USA, South Korea), fertilizer consumption (Canada, Germany, India, Iran, Russia), and manufacturing (Canada, Indonesia, the USA, Saudi Arabia, South Korea) output are the most influential features in five countries, indicating that economic expansion and agricultural-industrial activities are key determinants of emission trends. Industrial value added is a significant factor in Canada, Germany and Russia.

Model performance

In our experiment, DT, KNN, SVR, RF, GradBoost, and XGBoost models have been used to forecast \(CO_2\) emissions of the top eleven countries of the world. Tables 26-29 illustrate all models’ accuracy, RMSE, MAPE and MAE values for the eleven top \(CO_2\) emitting countries. It has been observed from these tables that, in most cases, the SVR model outperforms the other models with an average accuracy of 95.19%. Table 8 depicts accuracy, RMSE, MAE and MAPE values of the best models of the world’s top eleven \(CO_2\) emitting countries. It is evident from Table 8 that SVR, GradBoost, KNN and XGBoost models achieved an accuracy exceeding 90% for all countries, except for Japan (88.10%), which is also very good. 96.21% is the average accuracy value achieved by the best-performing models across all countries. The values of accuracy demonstrate the strong predictive capabilities of these models to forecast \(CO_2\) emissions in the eleven countries.

Forecast

Building upon the previously applied feature engineering and data preprocessing techniques, we have constructed a forecasting dataset for 2024-2030. We have estimated their values for variables lacking future data, such as economic, industrial and energy consumption-related factors by averaging the corresponding data from the past three years. The remaining features were then derived using prior feature engineering methodologies. Utilizing this dataset and the optimal predictive model–trained on historical data–we have projected the \(CO_2\) emissions of various countries for the years 2024-2030, as depicted by the red lines in Figures 15-25. Table 9 outlines the forecast results and highlights the alignment of the results with the NDCs under the Paris Agreement. A pictorial representation of the table has been included in Fig. 2. According to the forecast, all countries except Iran and Saudi Arabia will achieve their NDC targets to some extent if they continue with the current policy to mitigate global warming and climate change. According to the forecast, Russia will achieve a \(46.9\%\) reduction in \(CO_2\) emissions from its 1990 levels, surpassing its target of \(30\%\) reduction by 2030. Germany and the USA are projected to reduce emissions by \(61.76\%\) and \(44.33\%\), respectively, from their base year, which is less than \(10\%\) short of achieving their target by 2030. Canada, China, Japan, India, Indonesia and South Korea will lag behind their targets with more than \(10\%\) of a shortage from their target. Unlike other countries, Iran and Saudi Arabia will remain far from their targets to achieve. According to the forecast, Iran’s emissions will increase by \(6.33\%\) rather than decrease, while Saudi Arab’s emissions will increase by \(16.62\%\) rather than decrease. Detailed forecast figures of all the top emitters have been portrayed in Figures 15-25.

Discussion

This study distinguishes itself from prior efforts not only by its high forecasting accuracy but also by the deployment of a rigorous feature optimization pipeline, demonstrating significant methodological progress in climate-oriented ML applications. The empirical findings presented in Tables 26-29 demonstrate that the Support Vector Regressor (SVR) model outperforms other machine learning approaches in forecasting \(CO_2\) emissions, achieving an average accuracy of 96.21%. SVR delivers the highest prediction accuracy for nearly all countries except Canada, Iran, and Japan, where alternative models–such as XGBoost, Gradient Boosting and KNN–show superior performance. The most influential predictors of \(CO_2\) emissions across nations include renewable energy consumption (REC), electric power consumption (EPC), GDP, population growth, fertilizer consumption, manufacturing output, and industrial activity.

Our projections reveal mixed progress among the world’s top \(CO_2\)-emitting countries toward their Paris Agreement targets. Russia appears to be on track to surpass its emission reduction commitments, while Germany and the United States have made significant strides but remain slightly below their targets. Conversely, China, India, Japan, Canada, South Korea, and Indonesia are projected to fall short by considerable margins. Iran and Saudi Arabia are anticipated to experience rising emissions rather than reductions. These insights underscore the urgency of policy interventions tailored to each country’s specific challenges.

Our results are consistent with, yet in some cases surpass, prior studies in predictive accuracy. For instance, Desai et al40 identified Random Forest (RF) as the best-performing model for Canada, achieving an accuracy of 83%. However, in our study, XGBoost demonstrates superior performance for Canada with an accuracy of 98.24%. Similarly, Jena et al.12 reported a MAPE of 2.9358 and an RMSE of 0.0277 for Canada using MLANN, whereas our XGBoost model achieves significantly lower error metrics (MAPE: 0.00420, RMSE: 0.00169).

For China, Li et al27 suggested LSTM as the best-performing model with an accuracy of 98.44%, while our study finds SVR to be more effective, achieving an accuracy of 99.13%. Wang et al.28 reported an RMSE of 0.0099 for the SVR-ANN model in China, whereas our study achieves a substantially lower RMSE of 0.00408.

For India, Ahmed et al.25 found that ANN performed best, achieving an RMSE of 0.008 and a MAPE of 0.705, while Jena et al.12 reported MAPE and RMSE values of 2.928 and 0.0235, respectively, for MLANN. In contrast, our SVR model significantly outperforms both, achieving MAPE: 0.00165, RMSE: 0.00239.

Similar improvements are observed for Iran, where Jena et al.12 reported a MAPE of 2.3610 and RMSE of 0.0277 using MLANN, whereas our Gradient Boosting model achieves MAPE: 0.00388 and RMSE: 0.00709. A comparable trend is found for Indonesia, where Jena et al.12 reported MAPE: 9.6898 and RMSE: 0.1077, while our SVR model achieves dramatically lower errors (MAPE: 0.00540, RMSE: 0.00357).

For Japan, Jena et al.12 reported a MAPE of 3.5206, while our study achieves a MAPE of 0.00819 with SVR. For Russia, Ahmed et al.25 found that SVM was the best-performing model with an RMSE of 0.0176, whereas our SVR model achieves an even lower RMSE of 0.00904.

Our models exhibit significant improvements for Saudi Arabia and South Korea as well. Jena et al.12 reported a MAPE of 5.9153 for Saudi Arabia using MLANN, whereas our SVR model reduces the error to MAPE: 0.01748. Similarly, for South Korea, where Jena et al.12 reported a MAPE of 2.4803, our SVR model achieves a MAPE of 0.00576.

Finally, for the United States, Jena et al.12 reported a MAPE of 2.7168, while our study achieves a MAPE of 0.01488, and B. P. Chukwunonso et al.24 reported a MAPE of 1.187, and RMSE of 11.6433, whereas our study achieves a MAPE of 0.01488 and RMSE of 0.00340, reaffirming the superior predictive performance of SVR.

Compared to Dong et al.4, which employed trend extrapolation and back-propagation neural networks to predict a 26.5-36.5% increase in emissions for the top ten emitters, our study offers a more hopeful outlook on emission trends while also providing a detailed, country-specific analysis that highlights both successes and ongoing challenges. Their analysis suggested that while China, India, and Russia might meet their targets under certain conditions, the USA, Japan, Germany, and South Korea were likely to fall short, with particularly severe emission trajectories projected for Saudi Arabia, Iran, and Indonesia. Our findings align with these conclusions but offer a more refined and data-driven assessment.

Ahmed et al.25 reported that between 2019 and 2023, China and India exhibited increasing trends in \(CO_2\), methane, and \(N_2O\) emissions, while the USA and Russia showed slowing trends. Our study confirms this pattern, with China and India expected to continue on a high-emission trajectory and the USA demonstrating moderate reductions. Similarly, Jena et al.12 forecast that from 2017 to 2019, China, India, Iran, Indonesia, Saudi Arabia, and South Korea would continue increasing emissions, whereas the USA and Japan were expected to achieve marginal reductions. Our results largely corroborate these trends but with more precise estimates.

The prominence of variables such as electric power consumption, fossil fuel use, and GDP in our model’s feature importance rankings reaffirms findings from earlier emissions studies. For instance, Jena et al.12 and Chukwunonso et al.24 identify these variables as core predictors of national emissions trajectories. Our inclusion of features such as renewable electricity output and fertilizer consumption–less common in prior literature–broadens the scope of emissions modeling to account for clean energy transitions and agro-industrial dynamics, especially in emerging economies.

These findings highlight the critical need for stronger policy enforcement, increased renewable energy adoption, and international collaboration, particularly for emerging economies facing financial and infrastructural constraints. Future work should focus on integrating socioeconomic factors and policy interventions into predictive models to enhance the accuracy and applicability of emission forecasts.

Policy directions

This section builds on the earlier empirical forecasts to draw actionable policy conclusions. Our ML framework not only identifies emission trends but also reveals the most influential drivers for each country–such as energy intensity, industrial output, and reliance on fossil fuels as illustrated in Table 11. These insights offer a robust, data-driven foundation for designing or adjusting national policies. Moreover, our comparative analysis allows us to derive tailored recommendations for countries at different economic development and technological transition stages. The forecasts generated by this novel ML framework provide a robust evidentiary basis for country-specific policy recommendations. Unlike coarse trend extrapolations, our model’s accuracy enables more precise diagnostics of policy effectiveness, as demonstrated in the case of Russia, the USA, and Germany. Russia, Germany, and the United States have made notable progress through the expansion of renewable energy, energy efficiency improvement, and energy sector reform, particularly in the electricity consumption sector, as well as strong policy frameworks. In the remainder of the policy direction section, we will provide suggestions based on the policy framework of the successful countries, redesigning them in the socio-economic context of the countries that need policy interventions to achieve their NDCs goals.

According to National Communication 841, greenhouse emissions of Russia were reduced by \(35.1\%\) in 2020 compared to 1990 levels. In 2021, \(38.3\%\) of electricity generation in Russia came from non-emission or low-emission sources, including renewables like hydropower, nuclear power, and others. The energy consumption per GDP of the Russian economy decreased by \(16\%\) in 2005-201542 due to energy efficiency and the use of clean technology in different sectors such as electricity, industry, and transport. The Russian government has approved a plan to support renewable energy from 2025 to 2035, investments in the construction of green stations will amount to 360 billion rubles42. Russia approved the National Low-Carbon Strategy in 2021, emphasizing energy efficiency, electric transport development, and modern solutions in agriculture and forestry43. Russia is focusing on increasing the share of clean energy in its mix, with 85% of its energy currently coming from low-emission sources such as natural gas, nuclear, and hydropower44. The country is investing in technologies for capturing, utilizing, and storing carbon dioxide and methane to reduce greenhouse gas emissions. The forecast results show that Russia’s measures to reduce carbon emissions are very effective. The continuity of current policies of Russia is essential to fulfil the target of zero carbon neutrality by 2060.

Germany’s \(CO_2\) emission decreased significantly (25%) in 2022, compared to 200045. Germany stands out as a global leader in renewable energy adoption, with 37.8% of electricity coming from renewables in 201846. Germany has also been called the world’s first major renewable energy economy with a \(17.6\%\) share of modern renewables in final energy consumption in 2021, a 37.6% increase compared to 200045. Policies such as the Renewable Energy Act (EEG), the Climate Action Law, the Energiewende Policy Framework, Carbon Pricing Mechanism and the National Coal Phase-out Strategy have played a pivotal role in reducing \(CO_2\) emission in Germany46. Based on our forecast, Germany will be short of \(3.24\%\) from their target by 2030. Germany needs to pay more attention to reducing fossil fuel consumption, increasing energy-efficient technology, maintaining grade stability, and expanding battery storage to support intermittent renewable. Leveraging digital technologies like AI-driven smart grids, and launching nationwide climate literacy and behavioral change campaigns are also crucial

The USA has already achieved its 2020 target of net \(CO_2\) emissions reductions in the range of \(17\%\) below 2005 levels47. Between 1990 and 2020, \(CO_2\) emissions from fossil fuel combustion decreased from 4,731.2 Mt \(CO_2\)e to 4,342.7 Mt \(CO_2\)e, an 8.2 percent total decrease47. US energy?related \(CO_2\) emissions declined by 3% in 2023 from the previous year, more than 80% of US energy-related \(CO_2\) emissions reductions in 2023 occurred in the electric power sector. The decline resulted from a modest reduction in electricity demand, which decreased by approximately 1% in 2023. Additionally, coal-based electric power generation dropped by 19%. Most of this generation was replaced by natural gas, which rose by 7% (113 TWh), and solar energy, which increased by 14% (21 TWh). The energy consumption per unit of GDP of the US economy decreased by 40% in 2005-2023, reflecting this country’s energy efficiency. In 2021, renewables (including wind, hydroelectric, solar, biomass, and geothermal energy) produced about 20 per cent of all electricity generated in the United States48, and renewable generation was approximately double what it was in 201049. The USA has implemented various policies and programs at the state, local, and tribal levels to combat climate change. For instance, the Clean Power Plan (CPP) (2015), American Jobs Plan (2021), Executive Orders on Climate Change (2021), Energy Independence and Security Act (2007), Carbon Pricing and Market Mechanisms, State-level renewable portfolio standards (RPS) and tax relief for renewable energy technologies50. Despite these gains, natural gas remains the dominant electricity source49, requiring further investments in offshore wind and green technology, grid modernization, and energy storage to meet the 50-52% emission reduction target by 2030.

Despite their relatively mature policy frameworks, countries like the USA and Germany must now transition from broad emissions reductions to more granular, sectoral regulation. For example, our model suggests electricity consumption remains a high-leverage variable in the US, indicating the need for deep retrofitting of buildings, electrification of heating, and enhanced grid resilience.

Our model identifies fossil fuel use and manufacturing intensity as dominant predictors in China and India. Targeted decarbonization in high-emission sectors–such as steel and cement–through industrial electrification and performance-based subsidies can offer high-impact results. Additionally, national-level renewable energy auctions and cross-border transmission infrastructure could enable more efficient clean energy integration

With a massive population of approximately 1.41 billion (as of 2023), China significantly contributes to \(CO_2\) emissions due to high energy consumption, urbanization, and industrialization. The country faces the challenge of balancing its economic growth with the need for emission reductions. To address this, China can expand the National Emissions Trading System (ETS)51 to cover a broader range of industrial sectors and implement stronger decarbonization measures in high-emission industries such as steel and cement. Adopting renewable energy and improving energy efficiency, as demonstrated by countries like the United States, Russia, and Germany, can help China achieve its emission reduction targets.

India, now the world’s most populous country with over 1.42 billion people (as of 2023) and an annual population growth rate of 0.8%, faces increasing \(CO_2\) emissions driven by high electricity demand, urbanization, and industrialization. To mitigate these trends, India can strengthen the Perform, Achieve, and Trade (PAT)52 scheme to promote industrial energy efficiency. Expanding family planning policies and urban sustainability initiatives can help manage the environmental impact of rapid population growth. Stricter fuel efficiency standards in the transportation sector can further reduce emissions. Like China, India can benefit from adopting renewable energy and energy efficiency strategies, following the examples of the United States, Russia, and Germany.

Japan is expected to reduce approximately 16% of its \(CO_2\) emissions from its base year emissions. As a developed economy, Japan must focus on reducing fossil fuel consumption, expanding renewable energy, increasing energy efficiency, and adopting green technology to meet its Paris Agreement targets. One key area for improvement is offshore wind energy, where Japan currently has only 1 GW of installed capacity, far below its 10 GW target by 2030. Japan has implemented a carbon tax of $3 per ton of \(CO_2\), which is significantly lower than the levels in Canada and the EU. To enhance its climate strategy, Japan should expand its carbon pricing mechanisms and strengthen efforts in renewable energy development to accelerate emissions reductions.

Like Japan, South Korea is also projected to reduce approximately 16% of its \(CO_2\) emissions from its base year levels. However, South Korea does not have a nationwide carbon tax. Instead, it operates the Korea Emissions Trading Scheme (K-ETS) as its primary carbon pricing mechanism. To meet its Paris Agreement commitments, South Korea should focus on reducing fossil fuel dependence, increasing renewable energy adoption, and improving energy efficiency. Implementing a nationwide carbon pricing mechanism and expanding emission trading mechanisms similar to those in the United States and Germany will be essential for achieving its 2030 climate targets.

The approach of Canada, which includes a steadily increasing carbon tax from 20 per tonne in 2019 to 170 per tonne by 203053, provides clear market signals to industries and households, incentivizing investments in energy efficiency and renewable technologies. To mitigate the target of the Paris Agreement by 2030, Canada should improve industrial and electricity energy efficiency more intensively. Expanding hydroelectric and wind energy projects can also help Canada meet its net-zero emissions target by 2050.

According to the Paris Agreement, Saudi Arabia aims to reduce emissions by 278 Mt\(CO_2\)e annually by 2030, with 2019 as the base year. But the recent trend of \(CO_2\) emissions in Saudi Arabia is increasing54. To fulfil its target by 2030, Saudi Arabia should reduce dependence on fossil fuel exports by diversifying into hydrogen and carbon capture technologies and implementing stronger electricity, building, and industrial energy efficiency regulations. Saudi Arabia should properly implement the Saudi Green Initiative (2021) with stricter renewable energy adoption targets.

Indonesia and Iran are developing nations, which face financial and infrastructural barriers to emissions reductions and require international support to transition to low-carbon pathways. These countries should focus on decentralized energy systems such as microgrids and mini-grids in rural areas to reduce dependency on diesel generators. Indonesia’s vast archipelago could benefit from community-based solar or wind projects. Iran can utilize its desert regions for large-scale solar farms. They can seek partnerships with developed countries for technology transfer in sectors like energy storage, carbon capture, and grid modernization. They can provide subsidies or tax incentives for adopting energy-efficient equipment in manufacturing and residential settings.

International funds such as the Green Climate Fund (GCF) need to be mobilized to finance renewable energy projects and energy-efficient infrastructure. Developed countries should provide grants or low-interest loans to support clean energy transitions to developing countries.

For nations like Saudi Arabia and Iran, where emissions are projected to increase, there is a pressing need to accelerate diversification strategies. The adoption of carbon capture, hydrogen fuel production, and grid modernization will require external technical and financial assistance, ideally through mechanisms such as the Green Climate Fund or bilateral climate partnerships.

By leveraging the predictive capacity of machine learning, this study offers a forward-looking, evidence-based perspective on how national policies can be prioritized and sequenced. Countries that fall short of their targets are not monolithic; they diverge by industrial structure, energy profile, and developmental context. Hence, policy interventions must be equally nuanced. Integrating empirical modelling into climate governance provides early warning signals and a diagnostic map for targeted adaptive policy design.

Conclusion

This study examines the likelihood of the top eleven carbon-emitting countries achieving their Paris Agreement and NDC targets based on emission trends from 1990 to 2023. We have considered eleven economic, industry and energy consumption variables to forecast carbon dioxide emissions for these countries. Using six widely adopted machine learning algorithms, feature selection, and the SSSF method, our analysis identifies SVR, XGBoost, GradBoost, and K-Nearest Neighbors as the most accurate models, achieving an average prediction accuracy of 96.21%. The efficacy of the SSFS method is evident, leading to an average accuracy improvement of 16.38% compared to the baseline models, a statistically significant enhancement confirmed through the Wilcoxon rank sum test (p = 0.00097, df = 20). Our forecasts show Russia will exceed its Paris Agreement target before 2030. The USA and Germany will fall short of their targets by less than 10%. China, India, Japan, Canada, South Korea, and Indonesia are expected to miss their targets by more than 10%. Iran and Saudi Arabia will likely continue on an increasing trajectory of \(CO_2\) emissions.

While several countries have implemented strong policy measures, such as Russia’s National Low-Carbon Strategy, Germany’s Renewable Energy Act (EEG), and the USA’s Clean Power Plan and State-level Renewable Portfolio Standards, some of these nations still face significant challenges to fulfilling their Paris Agreement target by 2030. Canada has introduced a steadily increasing carbon tax to reduce emissions but is still far from fulfilling its NDCs target. Canada should enhance industrial and electricity efficiency while expanding hydroelectric and wind energy to achieve its net-zero emissions target by 2050. India and China must strengthen industrial energy efficiency and expand renewable energy adoption, while Japan and South Korea must expand renewable energy adoption, carbon pricing mechanisms, and emissions trading systems. Saudi Arabia, Iran, Indonesia, and other emerging economies require international support, infrastructure investment, and financial mechanisms like the Green Climate Fund to facilitate low-carbon transitions. These findings highlight the need for stronger policy enforcement, increased investments in clean technology, and global cooperation to meet climate goals effectively.

While our study provides valuable insights into \(CO_2\) emission forecasting using machine learning models, several limitations must be acknowledged. The models employed, including SVR, XGBoost, and Gradient Boosting, do not inherently provide confidence or prediction intervals, making uncertainty quantification challenging. Traditional bootstrapping methods are unsuitable for time series data due to temporal dependencies, limiting standard approaches for estimating uncertainty. Our models rely on historical patterns, assuming their continuity, which may not account for external disruptions such as economic shocks, policy changes, or technological advancements. As machine learning models capture complex nonlinear relationships, their interpretability remains limited. Future research could explore hybrid models, probabilistic forecasting techniques, and exogenous event modelling to improve uncertainty estimation and predictive robustness.

Future research can explore sector-specific emissions forecasting to assess the impact of different industries on overall \(CO_2\) reduction targets. More advanced deep-learning techniques can be used to uncover intricate dynamics between the dependent and independent variables upon the availability of large-scale data, such as monthly data.

Additional information

Literature validation of feature selection (Table 12)

Data visualization (Figs. 3–14)

\(\mathbf {CO_2}\)

Domestic credit to the private sector

Electric power consumption

Fertilizer consumption

Fossil fuel energy consumption

Industry

GDP

Manufacturing

Population

Renewable energy consumption

Renewable energy output

Trade

Descriptive statistics (Table 13)

Granger causality result (Tables 14-24)

Canada

China

Germany

India

Indonesia

Iran

Japan

Russia

Saudi Arabia

South Korea

USA

Model hyper parameter tuning result (Table 25)

Evaluation metrics values of different models (Tables 26-29)

Results of forecasts (Figs. 15-25)

Canada

China

India

Indonesia

Iran

Japan

Germany

Russia

Saudi Arabia

South Korea

USA

Data availability

Data supporting the conclusions of this manuscript will be available from the corresponding author upon request.

References

World Meteorological Organization. Greenhouse gas concentrations surge again to new record in 2023. Accessed: 2025-02-02 (2023).

Energy Institute. Statistical review of world energy. Accessed: 2025-01-25. (2025).

Ahmed, M., Shuai, C. & Ahmed, M. Influencing factors of carbon emissions and their trends in china and india: a machine learning method. Environ. Sci. Pollut. Res. Int. 29, 48424–48437 (2022).

Dong, C., Dong, X., Jiang, Q., Dong, K. & Liu, G. What is the probability of achieving the carbon dioxide emission targets of the paris agreement? evidence from the top ten emitters. Sci. Total Environ. 622, 1294–1303 (2018).

Mutascu, M. \(\text{ Co}_2\) emissions in the usa: new insights based on ann approach. Environ. Sci. Pollut. Res. Int. 29, 68332–68356 (2022).

Ito, K. \(\text{ Co}_2\) emissions, renewable and non-renewable energy consumption, and economic growth: Evidence from panel data for developing countries. Int. Econ. 151, 1–6 (2017).

Magazzino, C., Madaleno, M., Waqas, M. & Leogrande, A. Exploring the determinants of methane emissions from a worldwide perspective using panel data and machine learning analyses. Environ. Pollut. 348, 123807 (2024).

Amin, A. et al. Driving sustainable development: The impact of energy transition, eco-innovation, mineral resources, and green growth on carbon emissions. Renew. Energy 238, 121879 (2025).

Magazzino, C., Cerulli, G., Haouas, I., Unuofin, J. O. & Sarkodie, S. A. The drivers of ghg emissions: A novel approach to estimate emissions using nonparametric analysis. Gondwana Res. 127, 4–21 (2024).

Magazzino, C., Cerulli, G., Shahzad, U. & Khan, S. The nexus between agricultural land use, urbanization, and greenhouse gas emissions: Novel implications from different stages of income levels. Atmospheric Pollut. Res. 14, 101846 (2023).

Bella, G., Massidda, C. & Mattana, P. The relationship among \(\text{ co}_2\) emissions, electricity power consumption and gdp in oecd countries. J. Policy Model. 36, 970–985 (2014).

Jena, P. R., Managi, S. & Majhi, B. Forecasting the \(\text{ co}_2\) emissions at the global level: A multilayer artificial neural network modelling. Energies 14, 6336 (2021).

Li, Y. Forecasting chinese carbon emissions based on a novel time series prediction method. Energy Sci. Eng. 8, 2274–2285 (2020).

Matsumoto, K. I. et al. Addressing key drivers of regional \(\text{ co}_2\) emissions of the manufacturing industry in japan. The Energy J. 40, 233–258 (2019).

Yan, X. & Fang, Y. P. \(\text{ Co}_2\) emissions and mitigation potential of the chinese manufacturing industry. J. Clean. Prod. 103, 759–773 (2015).

Rehman, A. et al. Carbonization and agricultural productivity in bhutan: Investigating the impact of crops production, fertilizer usage, and employment on \(\text{ co}_2\) emissions. J. Clean. Prod. 375, 134178 (2022).

Ansari, M. A., Haider, S. & Khan, N. A. Does trade openness affects global carbon dioxide emissions: evidence from the top \(\text{ co}_2\) emitters. Manag. Environ. Qual. An Int. J. 31, 32–53 (2020).

Mentel, U., Wolanin, E., Eshov, M. & Salahodjaev, R. Industrialization and \(\text{ co}_2\) emissions in sub-saharan africa: the mitigating role of renewable electricity. Energies 15, 946 (2022).

Faruque, M. O. et al. A comparative analysis to forecast carbon dioxide emissions. Energy Reports 8, 8046–8060 (2022).

Huang, Y., Shen, L. & Liu, H. Grey relational analysis, principal component analysis and forecasting of carbon emissions based on long short-term memory in china. J. Clean. Prod. 209, 415–423 (2019).

Ofosu-Adarkwa, J., Xie, N. & Javed, S. A. Forecasting \(\text{ co}_2\) emissions of china’s cement industry using a hybrid verhulst-gm (1, n) model and emissions’ technical conversion. Renew. Sustain. Energy. Rev. 130, 109945 (2020).

Kumari, S. & Singh, S. K. Machine learning-based time series models for effective \(\text{ co}_2\) emission prediction in india. Environ. Sci. Pollut. Res. Int. 30, 116601–116616 (2023).

Chang, L., Mohsin, M., Hasnaoui, A. & Taghizadeh-Hesary, F. Exploring carbon dioxide emissions forecasting in china: a policy-oriented perspective using projection pursuit regression and machine learning models. Technol. Forecast. Soc. Change 197, 122872 (2023).

Chukwunonso, B. P. et al. Predicting carbon dioxide emissions in the united states of america using machine learning algorithms. Environ. Sci. Pollut. Res. Int. 31, 33685–33707 (2024).

Ahmed, M., Shuai, C. & Ahmed, M. Analysis of energy consumption and greenhouse gas emissions trend in china, india, the usa, and russia. Int. J. Environ. Sci. Technol. 20, 2683–2698 (2023).

Mardani, A., Liao, H., Nilashi, M., Alrasheedi, M. & Cavallaro, F. A multi-stage method to predict carbon dioxide emissions using dimensionality reduction, clustering, and machine learning techniques. J. Clean. Prod. 275, 122942 (2020).

Li, X. & Zhang, X. A comparative study of statistical and machine learning models on near-real-time daily co2 emissions prediction. Environ. Sci. Pollut. Res. Int. 30, 117485–117502 (2023).

Wang, C., Li, M. & Yan, J. Forecasting carbon dioxide emissions: Application of a novel two-stage procedure based on machine learning models. J. Water Clim. Chang. 14, 477–493. https://doi.org/10.2166/wcc.2023.331 (2023).

Al Mobin, M. Forecasting dengue in bangladesh using meteorological variables with a novel feature selection approach. Sci. Rep. 14, 32073 (2024).

World Bank. World Bank DataBank. https://databank.worldbank.org/home.aspx. Accessed: 2025-03-10.

Yokobori, K. & Kaya, Y. Environment, energy and economy: Strategies for sustainability (BookwellPublications, Delhi, 1999).

Yamaji, K., Matsuhashi, R., Nagata, Y. & Kaya, Y. A study on economic measures for co2 reduction in japan. Energy policy 21, 123–132 (1993).

Feng, Y., Zhang, Y. & Wang, Y. Out-of-sample volatility prediction: Rolling window, expanding window, or both?. J. Forecast. https://doi.org/10.1002/for.3046 (2023).

Raghuvanshi, S. Assessing the impact of seasonal decomposition on the time series analysis accuracy: A comprehensive study. Int. J. for Res. Appl. Sci. Eng. Technol. https://doi.org/10.22214/ijraset.2024.61811 (2024).

Arief-Ang, I. B., Salim, F. D. & Hamilton, M. Sd-hoc: seasonal decomposition algorithm for mining lagged time series. https://doi.org/10.1007/978-981-13-0292-3_8 (2017).

Al Mobin, M. & Kamrujjaman, M. Downscaling epidemiological time series data for improving forecasting accuracy: An algorithmic approach. Plos one 18, e0295803 (2023).

Granger, C. W. Investigating causal relations by econometric models and cross-spectral methods. Econom. J. Econom.Soc. 424–438 (1969).

Sun, Y. et al. Using causal discovery for feature selection in multivariate numerical time series. Mach. Learn. 101, 377–395 (2015).

Begum, A. M., Mondal, M. R. H., Podder, P. & Kamruzzaman, J. Weighted rank difference ensemble: A new form of ensemble feature selection method for medical datasets. BioMedInformatics 4, 477–488 (2024).

Desai, A., Gandhi, S., Gupta, S., Shah, M. & Patel, S. Carbon emission prediction on the world bank dataset for canada. arXiv preprint arXiv:2211.17010 (2022).

Russian Federation. 8th national communication of the russian federation under the united nations framework convention on climate change. Accessed: 2025-02-01. (2020)

Siakwah, P., Ermolaeva, Y., Agyekum, B. & Personalities, C. S. Sustainable energy transition in russia and ghana within a multi-level perspective. Chang. Soc. Pers. 7, 165–185 (2023).

International Energy Agency. National low-carbon strategy (2020). Accessed: 2025-02-01.

Low-Carbon Power. Low-carbon energy in russia (2024). Accessed: 2025-02-01.

International Energy Agency. Germany - country profile (2024). Accessed: 2025-02-01.

Chen, C., Xue, B., Cai, G., Thomas, H. & Stückrad, S. Comparing the energy transitions in germany and china: Synergies and recommendations. Energy Reports 5, 1249–1260. https://doi.org/10.1016/j.egyr.2019.08.041 (2019).

United States of America. 8th national communication of the united states under the united nations framework convention on climate change (2019). Accessed: 2025-02-01.

U.S. Energy Information Administration. Carbon dioxide emissions from energy consumption (2024). Accessed: 2025-02-01.

U.S. Energy Information Administration. Electricity in the united states: Generation, capacity, and sales (2024). Accessed: 2025-02-01.

Fekete, H. et al. A review of successful climate change mitigation policies in major emitting economies and the potential of global replication. Renew. Sustain. Energy. Rev. 137, 110602. https://doi.org/10.1016/j.rser.2020.110602 (2021).

Government of the People’s Republic of China. The People’s Republic of China Fourth National Communication on Climate Change (2023). Submitted to the United Nations Framework Convention on Climate Change (UNFCCC) on December 29, 2023.

Government of India. Third national communication and initial adaptation communication to the united nations framework convention on climate change (2023). Submitted to the UNFCCC on December 9, 2023.