Abstract

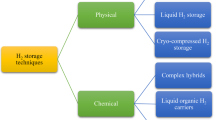

The global transition to clean energy has highlighted hydrogen (H2) as a sustainable fuel, with underground hydrogen storage (UHS) in geological formations emerging as a key solution. Accurately predicting fluid interactions, particularly interfacial tension (IFT), is critical for ensuring reservoir integrity and storage security in UHS. IFT is key in fluid behavior, influencing structural and residual trapping capacities. However, measuring IFT for H2–brine systems is challenging due to H2’s volatility and the complexity of reservoir conditions. This study applies machine learning (ML) techniques to predict IFT between H2 and brine across various salt types, concentrations, and gas compositions. A dataset was used with variables such as temperature, pressure, brine salinity, and gas composition (H2, CH4, CO2). Several ML models, including Random Forests (RF), Gradient Boosting Regressor (GBR), Extreme Gradient Boosting Regressor (XGBoost), Artificial Neural Networks (ANN), Decision Trees (DT), and Linear Regression (LR), were trained and evaluated. RF, GBR, and XGBoost achieved R2 values over 0.99 in training, 0.97 in testing, and all exceeded 0.975 in validation. These top models achieved RMSE values below 1.3 mN/m and MAPE values under 1.5%, confirming their high predictive accuracy. Residual frequency analysis and APRE results further confirmed these ensemble models’ low bias and high reliability, with error distributions centered near zero. DT performed slightly lower, with R2 values of 0.93, while LR struggled to model the non-linear behavior of IFT. A novel salt equivalency metric was introduced, transforming multiple salt variables into a single parameter and improving model generalization while maintaining high prediction accuracy (R2 = 0.98). Sensitivity analysis and SHAP (Shapley Additive Explanations) analysis revealed temperature as the dominant factor influencing IFT, followed by CO2 concentration and pressure, while divalent salts (CaCl2, MgCl2) exhibited a stronger impact than monovalent salts (NaCl, KCl). This study optimizes hydrogen storage by offering a generalized, high-accuracy ML model that captures nonlinear fluid interactions in H2–brine systems. Integrating real-world experimental data with ML-driven insights enhances reservoir simulation accuracy, improves hydrogen injection strategies, and supports the global transition toward sustainable energy storage solutions.

Similar content being viewed by others

Introduction

The worldwide shift towards cleaner energy sources has positioned hydrogen (H2) as a versatile and sustainable fuel alternative1,2. The significance of hydrogen in energy distribution and storage has increased as global initiatives aim to reduce reliance on fossil fuels. Underground Hydrogen Storage (UHS) within geological formations, particularly saline aquifers, represents one of the most promising methodologies for large-scale hydrogen storage2,3,4,5,6. This methodology capitalizes on the distinctive properties of these formations for efficient hydrogen storage, thereby achieving a balance between the supply and demand of renewable energy. UHS in saline aquifers has attracted considerable attention owing to its technical feasibility and economic viability. The effectiveness of UHS is significantly contingent upon a thorough understanding and management of fluid–fluid and fluid–rock interactions within the reservoir. The interfacial tension (IFT) between hydrogen and brine is critical in these processes’ interactions.

IFT significantly impacts how H2 behaves within the reservoir, influencing injectivity, capillary trapping, and overall storage efficiency. The IFT between H2 and brine influences the capillary forces that govern brine displacement by H2, which is critical for increasing storage capacity and ensuring long-term stability. Higher IFT values can improve capillary trapping, boosting H2 retention in the reservoir while potentially limiting recoverability during production. In contrast, lower IFT can improve H2 mobility and injectivity but may impair storage security by reducing capillary trapping and raising the likelihood of H2 migration7,8,9.

The interaction between H2 and brine generates complicated flow patterns that must be precisely modeled to maximize storage efficiency. Temperature, pressure, salinity, and gas impurities have a substantial influence on H2–brine IFT values, and storage capacity10,11,12,13,14,15. Chow et al.10 conducted IFT measurements for the deionized water/H2 system under varying pressures (0.5–45 MPa) and temperatures (298.15–448.15 K), also incorporating the effect of CO210. The study found that IFT decreased with increasing pressure and temperature and that adding CO2 reduced IFT between the gas and water phases even more. Janjua et al.15 extended these observations to H2–brine systems, finding a notable reduction in IFT with rising temperature and a slight decrease with increasing pressure, while higher salinity caused IFT to increase15. Alanazi et al.14 investigated the impact of CH4 on the IFT between H2 and brine, revealing that the IFT values for the H2–brine system exceeded those of the CH4-brine system. This suggests that using CH4 as a cushion gas may enhance the potential for hydrogen geo-storage by reducing the IFT14. Al-Mukainah et al.11 noted a marginal pressure-dependent decrease in IFT. In contrast, Mirchi et al.13 demonstrated that an increase in temperature or methane (CH4) concentration within the gas phase led to a further reduction in the H2–methane (CH4)/brine IFT11,13. Higgs et al.12 examined the effects of salinity and pressure, observing a decreasing trend in IFT with increasing pressure; however, they found no discernible correlation between salinity, particularly sodium chloride (NaCl), and IFT12.

IFT has conventionally been evaluated in laboratory settings using the pendant drop method. However, the extreme volatility of hydrogen (H2) and the complexities of replicating reservoir conditions make direct experimental testing exceptionally challenging. Laboratory measurements are time-consuming and costly, especially in high-pressure, high-temperature (HPHT) environments, and also prone to inconsistencies due to experimental sensitivity. Molecular dynamics (MD) simulations offer molecular-scale insights into interfacial behavior, yet they are computationally intensive and constrained to limited system sizes and short timescales. These limitations underscore the growing need for alternative predictive frameworks. ML models provide a data-driven solution that overcomes many challenges by learning complex relationships from existing data and offering fast, scalable, and accurate predictions across various conditions. Therefore, developing robust ML models is essential for enabling efficient IFT estimation in H2–brine systems, which directly impacts hydrogen storage design by influencing key factors such as injectivity, capillary trapping, and long-term migration risk. By enabling more accurate and efficient modeling of hydrogen–brine interfacial behavior, this work advances underground hydrogen storage technologies, which are essential for balancing seasonal energy supply and demand, integrating renewable energy sources, and accelerating the global shift toward a low-carbon hydrogen economy.

Recent advancements in machine learning, particularly hybrid and ensemble methods, have significantly improved the accuracy of predictions for applications like rock petrophysics properties prediction, carbon capture and CO2 sequestration in saline aquifers. These contributions highlight the growing role of machine learning (ML) in enhancing predictive accuracy and optimizing operational strategies in different applications16,17,18,19. Similarly, sophisticated modeling techniques have emerged as effective instruments for predicting IFT and enhancing ultra-high strength (UHS) performance. Hosseini and Leonenko20 established four intelligent models—Decision Trees (DT), Random Forests (RF), Support Vector Machines (SVM), and Multi-Layer Perceptron (MLP)—designed to forecast IFT based on input parameters such as pressure, temperature, and molality20. Their analysis highlighted the superior performance of the RF model, which achieved an R2 of 0.96, RMSE of 1.50, and AARD of 1.84%. The ensemble nature of RF, which excels at capturing complex data relationships, was credited for its enhanced predictive accuracy. Temperature was the most significant variable affecting IFT, followed by molality and pressure. Gbadamosi et al.21 proposed ML models, including Gaussian Process Regression (GPR), Elman Neural Network (ENN), and Logistic Regression, to predict IFT in H2–brine systems21. GPR outperformed others, yielding a mean absolute percentage error of 0.0512, making it the most reliable model for IFT prediction. Ng et al.22 employed ML methods, including genetic programming (GP), gradient boosting regressor (GBR), and a multi-layer perceptron (MLP) optimized with Levenberg–Marquardt and Adam algorithms, to model IFT22. The study’s findings demonstrated that the MLP-LMA model exhibited the highest level of accuracy, achieving a coefficient of determination (R2) of 0.9997 and an Average Absolute Percentage Relative Error (AAPRE) of 0.1907%. Kohzadvand et al.23 investigated the prediction of contact angles in H2/mineral/brine systems, employing models such as Multi-Layer Perceptron (MLP), Radial Basis Function (RBF), and Least Square Support Vector Machine (LSSVM)23. The LSSVM model outperformed the others, achieving an R2 of 0.994, RMSE of 1.61, and AARD of 1.69%.

Despite significant advances in experimental and computational methods, accurately predicting IFT in H2–brine systems under realistic subsurface conditions remains a complex challenge, especially due to hydrogen’s volatility and the variability in reservoir conditions such as pressure, temperature, salinity, and gas composition. Most existing models are either limited in scope or do not generalize well across different chemical environments and operational settings. To address this gap, this study develops a set of different ML models that incorporate a broad range of influencing parameters, including gas impurities (e.g., CH4, CO2) and diverse salt types. A key novelty of this work lies in introducing physically meaningful input transformations—such as salt equivalency and gas property generalization—which reduce input dimensionality while maintaining high model accuracy. This approach enhances model interpretability and applicability and supports robust IFT prediction across various hydrogen storage scenarios in saline aquifers. The following sections outline the complete workflow, beginning with the dataset description and methodology in “Methodology” Section, followed by model development and performance evaluation in “Results and discussion” Section. “Conclusions” Section discusses the sensitivity and interpretability analyses, Sect. 5 introduces the input parameter generalization strategy, and finally, Sect. 6 presents the key conclusions and implications of the study.

Methodology

Data collection and preparation

A diverse dataset was compiled from various literature sources, encompassing IFT measurements under varying pressure, temperature, salinity, and gas composition conditions10,11,12,13,14,15,24,25. A thorough data processing procedure ensured only relevant IFT measurements were included. To ensure data quality, all collected measurements underwent a QA/QC process, which included unit consistency checks, outlier removal, and duplicate filtering. Visual inspection and statistical diagnostics (e.g., skewness) were also employed to validate the integrity and distribution of the dataset. Table 1 provides a univariate statistical analysis of the input and output parameters from a dataset comprising around 500 data points. The temperature (T) ranges from 71.6 to 348.3°F, while pressure (P) varies between 14.5 psi and 6554 psi. The concentration of gas impurities, such as CO2 and CH4, spans from 0% (Pure H2 gas) to 100.0% for each. The total salinity is reflected by the molar concentrations of salts, including Na2SO4, NaCl, CaCl2, MgCl2, and KCl, with values ranging from 0.0 to 3.0 M for each salt type. The IFT ranges between 24.2 and 89.0 mN/m. The standard deviation for pressure and temperature are notably high at 1724 psi, and 51.6 °F, respectively, indicating significant variability in the pressure and temperature data. The skewness coefficient reveals that temperature and most salts exhibit a positive skew, suggesting a longer tail on the right side of the distribution. In contrast, the IFT has a slightly negative skew, indicating a distribution with a longer tail on the left.

Figure 1 illustrates the seaborn.pairplot, which depicts cross plots of the parameters relative to one another, with the diagonal component representing the individual data distributions. The last row emphasizes the correlation between IFT values and various properties. A pronounced negative correlation is identified between IFT, operating pressure, temperature, and impurities such as CH4 and CO2. Conversely, elevated salt concentrations positively correlate with IFT, as increased salt levels correspondingly enhance the IFT values. The diagonal plots delineate the distribution of each parameter, revealing that IFT approximates a normal distribution. In contrast, other parameters demonstrate slightly right-skewed distributions, as their positive skewness coefficients indicate.

The Pearson correlation coefficient (R) was calculated to analyze how the IFT value depends on the input parameters. R-values were derived using Eq. 1.

R = correlation coefficient, xi = sample input values, x̅ = mean of input values, yi = sample output values, y̅ = mean of output values.

Figure 2a illustrates a heat map displaying the correlation coefficients among all parameters. Meanwhile, Fig. 2b summarizes the correlation coefficients between IFT and the input parameters, revealing distinct strength and direction relationships. Among the salts, Na₂SO₄ (0.32), CaCl2 (0.33), and MgCl2 (0.32) exhibit high positive correlations, indicating that an increase in the concentration of these salts leads to an increase in IFT. NaCl (0.10) and KCl (0.11) have weaker positive correlations, indicating minimal IFT effects. Temperature (T) shows a strong negative correlation (− 0.60) with IFT, making it the most influential factor in reducing IFT, likely due to molecular agitation that disrupts cohesive forces. CO2 (− 0.47) and CH4 (− 0.27) also have negative correlations, with CO2 being more effective at reducing IFT, possibly due to its higher solubility. Pressure (P) exhibits a weaker negative correlation (− 0.24), suggesting only a slight reduction in IFT with increasing pressure. Overall, temperature and CO2 are key factors in reducing IFT, while salts like Na₂SO₄ and CaCl2 highly increase it. All input features were scaled using Min–Max normalization to ensure consistent input ranges and enhance training stability, particularly for neural network models.

Model development

Figure 3 outlines the process of developing ML models. It starts with Data Preparation, which entails gathering and processing relevant data to provide high-quality input for model development. This study employed a random subsampling cross-validation method, where the data is split randomly into training and testing sets with different ratios, ranging from 40/60 to 20/80, to assess model performance across various splits. The next phase is Model Training, where hyperparameters are fine-tuned to achieve optimal model accuracy. Subsequently, model testing occurs, allowing adjustments to the split ratio for further performance refinement. The Model Validation step uses 100 unseen data points to evaluate the model’s generalizability, ensuring it performs well on data not used during training. Additionally, to further confirm the effectiveness of the developed ML model, a tenfold cross-validation approach was utilized26,27,28. After validation, Sensitivity Analysis is conducted to understand the impact of different input variables on the model’s predictions. Finally, the process concludes with a step to generalize the input data, simplify the model, and generalize the input parameters so the model can be used in a wide range of input parameters.

The evaluation matrix of the model comprises the coefficient of determination (R2), Mean Absolute Percentage Error (MAPE), Average Percentage Relative Error (\(\text{APRE})\), and the root mean square error (RMSE). The calculations for R2 and RMSE can be conducted utilizing Eqs. 2 through 5.

SSE is the sum of residual squares, n is the number of data points, yi is the actual data values, \(\hat{y}_{i}\) is the predicted values and \(S_{YY}\) is the sum of data variation squares from the mean.

In pursuing high-accuracy prediction models for H2–brine IFT, a diverse set of ML algorithms was selected based on their proven efficacy in handling complex, nonlinear, and multivariate datasets. These models include Linear Regression (LR), DT, RF, GBR, Extreme Gradient Boosting (XGBoost), and Artificial Neural Networks (ANN). This selection balances interpretability and predictive power, comprehensively evaluating simple and advanced modeling techniques.

LR is a baseline model that offers a straightforward method for evaluating linear dependencies. Despite its limitations in modeling nonlinear relationships, it is useful for benchmarking and understanding the minimum complexity required to capture trends in the dataset.

DT are rule-based models that recursively partition data according to feature values. They provide transparency and interpretability, making them attractive for model-explainability applications. However, single DT are prone to overfitting, especially when the large feature space or interactions are complex. Ensemble methods were included to overcome these limitations. RF, introduced by Breiman29, builds multiple DT on bootstrapped samples and averages their outputs, reducing variance and improving robustness. RF is known for its stability, resistance to overfitting, ability to capture nonlinear interactions, and feature importance rankings.

GBR and XGBoost further enhance prediction by sequentially training trees on the residuals of previous ones. GBR is known for its performance in many structured data applications, while XGBoost incorporates regularization and parallel computing, making it both accurate and computationally efficient30. These models are well-suited for regression problems involving high-dimensional or correlated variables, such as brine salinity and gas composition.

ANNs, on the other hand, offer a powerful paradigm for capturing complex, highly nonlinear relationships through their layered architecture and activation functions31. ANNs have been widely adopted in subsurface modeling, including wettability, porosity prediction, and CO2 storage potential. Their flexibility allows them to learn intricate dependencies among variables like pressure, temperature, and impurity concentrations in fluid systems.

The combination of tree-based and neural models ensures a holistic comparison across model families, capturing both interpretable structure (in RF, DT) and deep pattern learning (in ANN). This methodological diversity increases the robustness of conclusions. It facilitates the identification of models best suited for real-world UHS scenarios, where physical interactions are often nonlinear and multivariate in nature.

Results and discussion

Linear regression model results (LR)

The LR model was applied to examine the linear relationship between input parameters and IFT. In Fig. 4a, a cross plot compares predicted IFT values from the LR model against the actual IFT values for both the training and testing datasets. Ideally, if the model were accurate, the points would align along the 45-degree line, representing perfect prediction. However, the plot shows a noticeable deviation from this line in both sets, with an R2 value of 0.76, indicating that the LR model only explains 76% of the variance in IFT, leaving significant error. This deviation suggests that the linear model fails to capture the complexity of the relationship between IFT, operating conditions, and gas composition.

Additionally, a residual analysis (shown in Fig. 4b) was performed by plotting the difference between actual and predicted IFT values. The residuals, ideally hovering around zero if the model is accurate, display substantial variation. The errors in predicted IFT reach up to 20 mN/m, with residual values frequently deviating from zero across different IFT values. This further confirms that the LR model struggles to accurately predict IFT, particularly due to the model’s oversimplification of non-linear interactions between the input variables and IFT. Consequently, these findings point toward the need for a more complex model that can better represent the underlying physics or non-linear patterns in the data.

Machine learning models results

Several ML models were developed to predict the IFT between brine and H2, including RF, DT, GBR, XGBoost, and ANN. Each model hyperparameters were tuned to optimize their performance, and the optimized hyperparameters are summarized in Table 2. Grid search method was utilized to optimize the hyperparameters for each ML model to enhance their performance. The grid search approach systematically explored a range of values for each hyperparameter and searched for the optimum hyperparameter combination that gave the highest performance. For the RF model, the optimal hyperparameters included using “sqrt” as the maximum feature selection method, a maximum depth of 25, and 125 estimators. The GBR model was optimized with a learning rate of 0.2, 50 estimators, and a subsample size of 0.5. The DT model achieved optimal performance with a maximum depth of 7 and the “sqrt” as the maximum feature. The ANN architecture consisted of a Sequential model with an explicit input layer, followed by three dense layers, each containing 50 neurons. The ‘relu’ activation function was used for the first and third layers, while ‘tanh’ was applied to the second layer. With a single neuron, the output layer was designed to predict IFT values. The data was split with a 70/30 training-to-testing ratio, and approximately 100 data points were set aside for validation purposes. Figure 5 shows the convergence plots for the RF, GBR, XGBoost, and ANN. It illustrates the training progression in terms of RMSE over iterations or epochs. For RF, the RMSE decreases sharply as the number of trees increases initially and then stabilizes, indicating that adding more trees beyond a certain point provides diminishing returns in terms of error reduction. The GBR plot shows a similar trend where early iterations significantly improve RMSE, which plateau as the number of boosting stages increases. The XGBoost convergence graph reveals a consistent decrease in training RMSE, showcasing effective learning and generalization over the iterations. However, the ANN plot displays a dramatic drop in training RMSE within the initial epochs, quickly converging to a stable low error rate, suggesting that the model rapidly captures the underlying patterns in the data.

Figure 6 demonstrates the actual versus predicted IFT values crossplots for the developed ML models of training, and testing datasets. RF, XGBoost, and GBR outperform the other models, achieving R2 values close to 0.996 during training and remaining high on the testing dataset at 0.970, 0.975, and 0.972, respectively. This indicates that these models are highly effective in capturing the underlying trends in the data, with minimal overfitting or loss of predictive power when exposed to new data. The ANN model shows a moderate performance, with an R2 of 0.975 during training and a slightly lower 0.960 on the testing data. While the drop is insignificant, it suggests that ANN is reasonably effective but performs slightly less than RF, XGBoost, and GBR. The DT model, however, shows clear signs of overfitting. While it achieves an R2 value of 0.991 in the training set, its performance drops significantly to 0.853 in the testing set. This discrepancy indicates that the DT model is fitting too closely to the training data and cannot generalize the prediction of the new data, a typical sign of overfitting.

The findings from both R2 and the cross-plots indicate that non-linear models, such as RF, GBR, and ANN, significantly outperformed the linear model (LR). RF, XGBoost and GBR showed the strongest predictive capabilities, with tightly clustered data points along the 45-degree line in the cross-plots, reflecting their ability to accurately predict the IFT as a function of the input parameters. The ANN’s architecture of dense layers with ‘relu’ and ‘tanh’ activations allowed it to capture complex non-linear relationships in the IFT data, but its cross-plot trends suggest that further tuning could reduce the observed residuals and improve prediction accuracy.

Models validation

The evaluation of various ML models on the validation dataset, which comprises 100 previously unseen data points, offers a more comprehensive insight into their generalization capabilities. Figure 7 compares each model’s predicted IFT values (represented by solid lines) against the actual IFT values (depicted as dots). Among the models, the RF, XGBoost, and GBR models demonstrated superior predictive power, closely aligning with the actual IFT values throughout the validation set. These models maintained strong performance, as indicated by their high R2 higher than 0.975, and low RMSE values less than 1.3 and similar MAPE values less than 1.5%, confirming their ability to generalize beyond the training and testing datasets accurately.

In contrast, although relatively accurate, the DT model exhibited signs of overfitting. This is evident from its slight misprediction of IFT values for a few data points in the validation set, likely due to its excessive focus on the training data patterns with R2 value of 0.870 and RMSE of 2.732 (MAPE of 2.7%), where the model struggles to capture the full variability in the unseen data, a direct consequence of overfitting.

While capturing the general trend of the data, the LR model failed to account for the variations in IFT values, resulting in lower accuracy. With an R2 of 0.760 and RMSE of 3.250 (MAPE of 3.4%), the LR model shows its limitations in handling complex, non-linear relationships present in the data.

The ANN demonstrated robust performance, with an R2 of 0.980 and RMSE of 2.074 (MAPE of 2%) in the validation dataset. While not as precise as RF and GBR, the ANN model still accurately captured the IFT behavior, offering good predictive capability, though it slightly underperformed compared to the ensemble methods.

Figure 8 summarizes the R2 and RMSE values across the training, testing, and validation datasets, highlighting the differences in model performance. RF, XGBoost and GBR models were the most reliable, accurately predicting the IFT values across all datasets, including the unseen validation set. While following the trend, the DT model struggled with overfitting, and the LR model missed much of the data’s variation. The ANN model provided solid performance, slightly trailing the RF and GBR models but still offering a reliable prediction of IFT values.

The superior performance of ensemble-based models such as RF, GBR, and XGBoost can be attributed to their ability to capture complex, nonlinear interactions among input variables and their robustness against overfitting. These models inherently leverage multiple decision trees and either bagging (RF) or boosting (GBR and XGBoost) strategies to reduce variance and bias, resulting in more stable and accurate predictions. Given the high-dimensional and heterogeneous nature of the dataset—characterized by variables such as pressure, temperature, multiple gas components, and varying salt types—the ability of these models to handle feature interactions and nonlinear relationships is critical. In contrast, simpler models like LR and DT lack the flexibility to model these complexities effectively, which explains their comparatively lower accuracy and wider error distributions. This reinforces the suitability of ensemble learning techniques for interfacial tension prediction in multiphase, multicomponent subsurface systems.

Furthermore, to evaluate the distribution of the residual (Actual—predicted values), Fig. 9 presents the APRE (Fig. 9a) and the residual frequency distribution (Fig. 9b) for the different ML models. APRE measures the average signed percentage difference between predicted and actual values, providing insight into model bias—whether a model tends to overestimate or underestimate the target variable. All models exhibit APRE values very close to zero (between approximately − 0.4% and + 0.1%) as shown in Fig. 9a, indicating that none of the models show significant bias reflecting good regression process except LR shows slightly −ve APRE value reflecting slight overestimation. Figure 9b shows all developed ML models’ normalized residual frequency distributions. Ideally, an unbiased model should have a histogram centered around zero and display a roughly normal distribution, reflecting balanced prediction errors and consistent performance. The RF, GBR, and XGBoost models exhibit sharply peaked, lightly tailed distributions centered at zero, indicating low bias and minimal error spread—consistent with their high accuracy and low variance reported in Fig. 8. The ANN residuals are also fairly centered but show a slightly broader spread, suggesting moderate variance. In contrast, LR and DT display more flattened and heavily tailed distributions, highlighting their greater sensitivity to outliers and lower predictive accuracy. These error patterns confirm that the ensemble models reduce mean error and control prediction variance more effectively, supporting their robustness for IFT prediction.

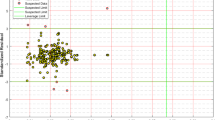

Identifying outlier data is a critical step in model validation, as it directly influences the reliability and generalization of any machine learning model. Outliers are defined as data points that deviate substantially from most observations. In large experimental datasets, such anomalies are common and, if left unchecked, can skew model performance evaluations. In the present study, the leverage method—a well-established statistical tool—was employed to identify potential outliers and define the applicability domain of each developed model. This method involves computing the Hat matrix to measure leverage and evaluating standardized residuals to detect deviations from experimental data. Points with leverage values greater than a critical threshold ℎ ∗ are influential, while standardized residuals beyond ± 3 denote statistically significant prediction errors32.

As shown in the combined William’s plot for the test set (Fig. 10), the majority of the model predictions fall within the defined applicability domain (leverage ≤ ℎ ∗ = 0.10 and ∣R∣ ≤ 3), reflecting strong predictive reliability. Ensemble-based models such as RF, GBR, and XGBoost demonstrate particularly tight clustering of residuals near zero and minimal high-leverage outliers. This indicates both high generalization capacity and statistical robustness of the developed models. The ANN also performs well, although with a slightly broader residual spread. In contrast, LR and DT show more frequent deviations, suggesting these simpler models may not adequately capture complex, nonlinear patterns in the IFT dataset. Only a few points (relative to the full test set) exceeded either the residual or leverage thresholds, which is consistent with experimental noise and expected variance. Thus, the plot confirms that the bulk of the predictions are statistically valid and fall within the model’s domain of applicability, reinforcing both the quality of the modeling and the consistency of the underlying experimental data.

To assess the model’s robustness, a tenfold cross-validation technique was implemented, advancing beyond the random subsampling method. This process divides the dataset into ten subsets (folds), using each as a validation set once, while the remaining nine train the model. Repeating this cycle ensures comprehensive evaluation across the dataset, reducing bias and enhancing insights into performance26,27,28.

The results of this rigorous cross-validation process are depicted in Fig. 11a, which presents a cross-plot of the predicted versus actual values for the validation set. As observed in the plot, the close alignment of all data points along the 45-degree line indicates excellent model performance. This alignment suggests that the predictions are accurate and consistent across different subsets of data, confirming the model’s strong generalization capabilities across the entire dataset. Figure 11b summarizes the R2 values obtained from each fold, consistently indicating high model performance. The R2 values ranged from 0.94 to 0.988, with an average of 0.975 and a standard deviation of 0.013, demonstrating the model’s effectiveness without any signs of overfitting. This meticulous approach to testing ensures the model’s robustness and ability to generalize across varied datasets.

Sensitivity analysis

The RF model was used for the sensitivity analysis of input parameters on IFT values. It predicted IFT between brine and H2 using random values for T, P, CO2, CH4, Na2SO4, NaCl, CaCl2, MgCl2, and KCl. Sobol sensitivity analysis assessed the influence of each parameter on predicted IFT, effectively quantifying first-order and total-order sensitivities. First-order indices (S1) indicate the direct effect of a single parameter on the output, while total-order indices (ST) measure the combined effect of a parameter, including its interactions with other parameters. This sensitivity analysis helps identify which input factors have the most significant impact on IFT predictions, guiding future experimental and modeling efforts.

Random values for the input parameters were generated within the minimum and maximum values for each parameter as indicated in Table 1. 10,000 realizations of IFT were estimated based on the different input parameters. The results indicate that temperature has the most significant influence on IFT, with a first-order Sobol index (S1) of approximately 0.5618, meaning that around 56% of the variance in the IFT can be attributed to temperature alone (Fig. 12). Pressure and CO2 concentration also exhibit moderate effects, with S1 values of 0.0888 and 0.1659, respectively. Smaller contributions come from CH4, Na2SO4, NaCl, CaCl2, and MgCl2, while KCl shows a low first-order effect, with a negative S1 value. Among the different salts, CaCl2 and MgCl2 showed the highest index, compared to the monovalent salts.

The total-order indices (ST) confirm these findings, showing that temperature’s total effect is 0.5965, and both CO2 and pressure contribute significantly with ST values of 0.2152 and 0.1280, respectively. The relatively small differences between S1 and ST for most parameters suggest minimal interactions between variables, except for CH4 and some of the salts, where the total-order effects are slightly larger, indicating some interaction with other variables. Overall, temperature, pressure, and CO2 concentration are the dominant factors influencing IFT in this system.

The correlation coefficient shown in Fig. 13 illustrates the linear relationship between IFT and various input parameters. Operating pressure and temperature, along with gas impurities such as CO2 or CH4, are associated with lower IFT values between brine and H2. Conversely, different salts generally increase IFT values, with divalent salts (MgCl2 and CaCl2) having a more pronounced effect compared to monovalent salts.

The cumulative frequency plot illustrates a clear correlation between IFT values and temperature, emphasizing the significant impact temperature has on IFT, as depicted in Fig. 14. When the temperature reaches 350°F, all potential IFT measurements remain below 60 mN/m, irrespective of other parameter variations. This indicates that IFT consistently decreases at elevated temperatures. Conversely, at temperatures under 100°F, over 20% of the IFT readings surpass 60 mN/m, reflecting a wider spectrum of higher IFT values at lower temperatures. This trend underscores the essential role temperature plays in influencing IFT behavior, with lower temperatures leading to markedly higher IFT values, highlighting its crucial role in regulating interfacial interactions in systems with brine and H2.

SHAP (SHapley Additive exPlanations) quantifies the individual contributions of each input parameter to a model’s predictions by calculating the marginal effects of features across all possible combinations of feature values. SHAP is a model-agnostic interpretability technique that originates from cooperative game theory. This comprehensive approach ensures a fair attribution of impact to each feature, effectively capturing both linear and nonlinear relationships and interactions between features.

SHAP values were calculated for each feature using a trained ML model. They were used to assess the global importance of features, as shown in the bar chart in Fig. 15. This chart indicates that temperature exhibits the highest average SHAP value, suggesting a significant influence on the model’s predictions. CO2 and CaCl2 also have substantial impacts. CH4 and MgCl2 contribute notably, though their effects are less pronounced than the top contributors. In contrast, Na2SO4, NaCl, and KCl demonstrate minimal influence on the model’s output.

The forced SHAP plot in Fig. 16 elaborates on how various parameters impact the model’s predictions across a specified range. In this visualization, parameters in blue reduce the IFT predictions, indicating a negative impact, whereas red parameters increase the IFT, suggesting a positive effect. Case a features high salinity, pressure, temperature, and CO2, with CaCl2, MgCl2, and Na2SO4 positively influencing the IFT, yet higher pressure and temperature alongside gas impurities push it down, balancing near the base case. Conversely, Case b, with lower input values, results in a prediction close to the base. Case c, showcasing high CO2 and pressure without salts, lowers the IFT to 52.4 mN/m, below the base of 66.3 mN/m. Case d adds high temperature to the mix in case c, pushing the IFT even lower to 37.6 mN/m. In cases e and f, increased salinity drives the IFT up to 70.24 mN/m in the absence of gas impurities, pressure, and high temperature.

SHAP forces plots for local interpretability, (a) high input parameter values, (b) low input parameters values, (c) gas impurities and pressure effect, (d) Temperature, Pressure, and gas impurities effects on IFT, (e) increasing the salinity in presence of CO2, and (f) increasing the salinity in absence of CO2.

The sensitivity analysis using the Random Forest (RF) model, based on Sobol indices, was conducted to quantify input parameters’ individual and interactive effects on IFT prediction. Temperature emerged as the most influential factor, followed by CO2 concentration and pressure, with both first-order and total-order indices confirming their dominant roles. These findings were further supported by correlation analysis and SHAP values, which consistently highlighted temperature as the leading contributor to model predictions. Beyond identifying key features, these results directly affect underground hydrogen storage (UHS) operations and experimental design. For instance, higher temperatures and elevated CO2 levels reduce IFT, enhancing hydrogen injectivity but potentially decreasing capillary trapping. Pressure also plays a crucial role in modulating fluid behavior and storage dynamics. From an operational standpoint, this insight can guide optimizing injection strategies and cushion gas composition to balance efficiency and containment. In laboratory settings, the results suggest that experimental efforts can be streamlined by focusing on these dominant variables, thus reducing testing complexity while capturing the most impactful conditions for UHS performance.

Generalize the input parameters

To simplify the model, in this section the input parameters were combined and replaced with equivalent parameters that reflect their impact. Initially, all salts were replaced with NaCl equivalent, which was calculated using the following equation.

where \(Mw_{salt}\), and \(Mw_{NaCl}\) are the molecular weight of the different salt type and the NaCl salt, respectively. Having the salt equivalent instead of the individual salt, highlights the impact of the salt type and the total salinity simultaneously. Hence, the dependence of the IFT on the salinity highly improved and the correlation coefficient between the NaCl equivalent increased to 0.6 compared to the low R values for the individual salts (0.1–0.33) as shown in Fig. 17. While for the gas composition, the overall specific gas gravity (\(\gamma_{g}\)) was used to reflect the composition of the gas stream, in addition to the pseudo-critical pressure and temperatures (Ppc, Tpc) were used. Similarly, the correlation coefficient between \(\gamma_{g}\), Ppc, Tpc, and IFT improved to − 0.56, − 0.49, and − 0.48, respectively, compared to R values less than 0.47 for the individual gas compositions.

RF model was developed using the newly derived input parameters. The results of this generalized model are illustrated in the accompanying figure, which shows performance across different datasets. The cross plots for the training and testing data sets reveal a near-perfect correlation between the actual and predicted IFT values, with R2 values of 0.99 for the training data and 0.98 for the testing data.

Additionally, Fig. 18 demonstrates the RF model’s ability to predict IFT values as a function of all parameters listed in Table 1 and the equivalent parameters used in this study. Both trends exhibit complete alignment, indicating that the equivalent parameters related to salinity and gas composition successfully capture the effects of varying salt types, concentrations, and gas compositions. This alignment confirms that these generalized parameters are reliable for representing the impact of different salts and gases on IFT. While simplifying the model inputs, the equivalent salt concentration metric may lose granularity when applied to other types of salts, which could impact the accuracy and generalizability of the results. As such, while the metric has proven effective for the salts tested, its application to other salts might require additional validation.

This study introduced ML models capable of predicting IFT values with high accuracy and speed. While experimental measurements also yield accurate results, they are constrained by the experimental limitations associated with high-pressure, high-temperature (HPHT) handling of H2, as well as potential human interaction effects. Furthermore, molecular dynamics (MD) simulations offer detailed insights at a molecular level. Still, they are restricted by substantial computational demands, limiting their use to smaller systems and shorter simulation times33,34. In contrast, ML models—particularly ensemble methods such as RF and Gradient Boosting—have shown great promise in managing large datasets and delivering rapid predictions across a wide range of reservoir conditions with significantly lower computational requirements. However, a primary limitation of these models is their potential lack of accuracy when extrapolating to new or untested conditions outside the range of the training data. Despite this, the models developed in this study offer significant potential for integration with reservoir simulation platforms and real-time monitoring systems. Their ability to generate fast, reliable IFT predictions can support dynamic scenario analysis, improve uncertainty quantification, and inform operational decisions in underground hydrogen storage (UHS) workflows. Moreover, when coupled with real-time field data, these models could enable adaptive control strategies, such as adjusting injection pressures or gas compositions, enhancing storage efficiency and safety.

Conclusions

This work uses machine learning (ML) techniques to improve the prediction of interfacial tension (IFT) in hydrogen–brine systems, addressing the complexities and limitations associated with existing experimental methods. The study created a generalized machine-learning model to estimate IFT under various conditions, such as temperature, pressure, brine salinity, and gas composition. The following are the main conclusions.

-

The Random Forests (RF), Gradient Boosting Regressor (GBR), and Extreme Gradient Boosting (XGBoost) models achieved superior predictive performance, with R2 values above 0.97, outperforming linear regression and decision trees models.

-

Residual frequency distribution plots and Average Percentage Relative Error (APRE) analysis confirmed that the ensemble models produced unbiased predictions with low variance and minimal bias, with residuals tightly centered around zero.

-

Sensitivity and SHAP analyses identified temperature as the most influential factor, significantly reducing IFT at higher values, while CO2 concentration and pressure also played critical roles.

-

Divalent salts (CaCl2, MgCl2) exhibited a more pronounced effect on increasing IFT than monovalent salts (NaCl, KCl), highlighting the importance of considering salt type variability in predictive models.

-

Introducing an equivalent salt concentration metric simplified the input parameters while retaining predictive accuracy, demonstrating its effectiveness in modeling the combined impact of different salt types on IFT.

The application of ML to forecast IFT in H2–brine systems is a noteworthy development in underground hydrogen storage (UHS) optimization. It provides a novel approach to boosting storage effectiveness and advancing sustainable energy solutions.

Data availability

Data is provided within the manuscript or supplementary information files.

Abbreviations

- ANN:

-

Artificial neural networks

- DT:

-

Decision trees

- GBR:

-

Gradient boosting regression

- IFT:

-

Interfacial tension

- H2 :

-

Hydrogen

- LR:

-

Linear regression

- RF:

-

Random forests

- RMSE:

-

Root mean square error

- ML:

-

Machine learning

- R2 :

-

Coefficient of determination

- NaCl, M:

-

Sodium chloride molar concentration

- T:

-

Operating temperature, F

- P:

-

Operating pressure, psi

- SSE :

-

Summation of residual squares

- N:

-

Number of data

- \(y_{i}\) :

-

Actual data values

- \(\hat{y}_{i}\) :

-

Predicted data values

- \(S_{YY}\) :

-

Summation of squares of data variation relative to the data mean value

- UHS:

-

Underground hydrogen storage

References

Aravindan, M. & Kumar, P. Hydrogen towards sustainable transition: A review of production, economic, environmental impact and scaling factors. Res. Eng. 20, 101456 (2023).

Cheekatamarla, P. Hydrogen and the global energy transition: Path to sustainability and adoption across all economic sectors. Energies 17(4), 807 (2024).

Jafari Raad, S. M., Leonenko, Y. & Hassanzadeh, H. Hydrogen storage in saline aquifers: Opportunities and challenges. Renew. Sustain. Energy Rev. 168, 112846 (2022).

Bai, M. et al. An overview of hydrogen underground storage technology and prospects in China. J. Petrol. Sci. Eng. 124, 132–136 (2014).

Aneke, M. & Wang, M. Energy storage technologies and real life applications: A state of the art review. Appl. Energy 179, 350–377 (2016).

Tarkowski, R. Underground hydrogen storage: Characteristics and prospects. Renew. Sustain. Energy Rev. 105, 86–94 (2019).

Behnamnia, M., Mozafari, N. & Dehghan Monfared, A. Rigorous hybrid machine learning approaches for interfacial tension modeling in brine-hydrogen/cushion gas systems: Implication for hydrogen geo-storage in the presence of cushion gas. J. Energy Stor. 73, 108995 (2023).

Dehury, R., Chowdhury, S. & Sangwai, J. S. Dynamics of hydrogen storage in subsurface saline aquifers: A computational and experimental pore-scale displacement study. Int. J. Hydrog. Energy 69, 817–836 (2024).

Zhou, J. D. & Kovscek, A. R. Hydrogen storage in saline aquifers: Experimental observations of viscous-dominated flow. in Paper Presented at the Paper Presented at the SPE Western Regional Meeting (2024). https://doi.org/10.2118/218944-MS

Chow, Y. T. F., Maitland, G. C. & Trusler, J. P. M. Interfacial tensions of (H2O + H2) and (H2O + CO2 + H2) systems at temperatures of (298–448) K and pressures up to 45 MPa. Fluid Phase Equilib. 475, 37–44 (2018).

Al-Mukainah, H., Al-Yaseri, A., Yekeen, N., Hamad, J. A. & Mahmoud, M. Wettability of shale–brine–H2 system and H2–brine interfacial tension for assessment of the sealing capacities of shale formations during underground hydrogen storage. Energy Rep. 8, 8830–8843 (2022).

Higgs, S. et al. In-situ hydrogen wettability characterisation for underground hydrogen storage. Int. J. Hydrog. Energy 47(26), 13062–13075 (2022).

Mirchi, V., Dejam, M. & Alvarado, V. Interfacial tension and contact angle measurements for hydrogen-methane mixtures/brine/oil-wet rocks at reservoir conditions. Int. J. Hydrog. Energy 47(82), 34963–34975 (2022).

Alanazi, A. et al. Influence of organics and gas mixing on hydrogen/brine and methane/brine wettability using Jordanian oil shale rocks: Implications for hydrogen geological storage. J. Energy Stor. 62, 106865 (2023).

Janjua, A. N., Ali, M., Murtaza, M., Patil, S. & Kamal, M. S. Effects of salinity, temperature, and pressure on H2–brine interfacial tension: Implications for underground hydrogen storage. J. Energy Stor. 95, 112510 (2024).

Al-Mudhafar, W. J., Rao, D. N., Srinivasan, S., Vo Thanh, H. & Al Lawe, E. M. Rapid evaluation and optimization of carbon dioxide-enhanced oil recovery using reduced-physics proxy models. Energy Sci. Eng. 10(10), 4112–4135 (2022).

Vo Thanh, H., Yasin, Q., Al-Mudhafar, W. J. & Lee, K.-K. Knowledge-based machine learning techniques for accurately predicting CO2 storage performance in underground saline aquifers. Appl. Energy 314, 118985 (2022).

Zhang, H. et al. Improving predictions of shale wettability using advanced machine learning techniques and nature-inspired methods: Implications for carbon capture utilization and storage. Sci. Total Environ. 877, 162944 (2023).

Ibrahim, A. F. Application of various machine learning techniques in predicting coal wettability for CO2 sequestration purpose. Int. J. Coal Geol. 252, 103951 (2022).

Hosseini, M. & Leonenko, Y. Prediction of hydrogen−brine interfacial tension at subsurface conditions: Implications for hydrogen geo-storage". Int. J. Hydrog. Energy 58, 485–494 (2024).

Gbadamosi, A. et al. New-generation machine learning models as prediction tools for modeling interfacial tension of hydrogen–brine system. Int. J. Hydrog. Energy 50, 1326–1337 (2024).

Ng, C. S. W., Djema, H., Nait Amar, M. & Jahanbani Ghahfarokhi, A. Modeling interfacial tension of the hydrogen–brine system using robust machine learning techniques: Implication for underground hydrogen storage. Int. J. Hydrog. Energy 47(93), 39595–39605 (2022).

Kohzadvand, K., Kouhi, M. M., Barati, A., Omrani, S. & Ghasemi, M. Prediction of interfacial wetting behavior of H2/mineral/brine: Implications for H2 geo-storage. J. Energy Stor. 72, 108567 (2023).

Hosseini, M., Fahimpour, J., Ali, M., Keshavarz, A. & Iglauer, S. H2–brine interfacial tension as a function of salinity, temperature, and pressure; implications for hydrogen geo-storage. J. Petrol. Sci. Eng. 213, 110441 (2022).

Isfehani, Z. D. et al. Interfacial tensions of (brine+ H2+ CO2) systems at gas geo-storage conditions. J. Mol. Liq. 374, 121279 (2023).

Rahimi, M. & Riahi, M. A. Reservoir facies classification based on random forest and geostatistics methods in an offshore oilfield. J. Appl. Geophys. 201, 104640 (2022).

Al-Mudhafar W. J. Incorporation of bootstrapping and cross-validation for efficient multivariate facies and petrophysical modeling. in Paper presented at the SPE Low Perm Symposium (2016). https://doi.org/10.2118/180277-MS

Wang, G., Ju, Y., Carr, T. R., Li, C. & Cheng, G. Application of artificial intelligence on black shale Lithofacies prediction in Marcellus shale, Appalachian Basin. in Paper Presented at the SPE/AAPG/SEG Unconventional Resources Technology Conference. https://doi.org/10.15530/URTEC-2014-1935021

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Chen, T. & Guestrin, C. Xgboost: A scalable tree boosting system. in Proceedings of the 22nd Acm Sigkdd International Conference on Knowledge Discovery and Data Mining 785–794 (2016).

Goodfellow, I., Bengio, Y., Courville, A. & Bengio, Y. Deep Learning (MIT Press, 2016).

Mehrjoo, H., Riazi, M., Rezaei, F., Ostadhassan, M. & Hemmati-Sarapardeh, A. Modeling interfacial tension of methane-brine systems at high pressure high temperature conditions. Geoenergy Sci. Eng. 242, 213258 (2024).

Lbadaoui-Darvas, M. et al. Molecular simulations of interfacial systems: Challenges, applications and future perspectives. Mol. Simul. 49(12), 1229–1266. https://doi.org/10.1080/08927022.2021.1980215 (2023).

Elgack, O. et al. Molecular dynamics simulation and machine learning-based analysis for predicting tensile properties of high-entropy FeNiCrCoCu alloys. J. Market. Res. 25, 5575–5585 (2023).

Acknowledgements

The author(s) would like to acknowledge the support provided by the King Fahd University of Petroleum & Minerals (KFUPM) Consortium for funding this work through project No. H2FC2314 from H2 Future Consortium, KFUPM.

Author information

Authors and Affiliations

Contributions

AI participated and completed all aspects of the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ibrahim, A.F. Advanced generalized machine learning models for predicting hydrogen–brine interfacial tension in underground hydrogen storage systems. Sci Rep 15, 18972 (2025). https://doi.org/10.1038/s41598-025-02304-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-02304-4