Abstract

Stroke is a neurological complication that can occur in patients admitted to the intensive care unit (ICU) for non-neurological conditions, leading to increased mortality and prolonged hospital stays. The incidence of stroke in ICU settings is notably higher than in the general population, and delays in diagnosis can lead to irreversible neurological damage. Early diagnosis of stroke is critical to protect brain tissue and treat neurological deficits. Therefore, we developed a machine learning model to diagnose stroke in patients with acute neurological manifestations in the ICU. We retrospectively collected data on patients’ underlying diseases, blood coagulation tests, procedures, and medications before neurological symptom onset from 206 patients at the Chungbuk National University Hospital ICU (July 2020–July 2022) and 45 patients at Chungnam National University Hospital (July 2020–March 2023). We trained the model using the Categorical Boosting (CatBoost) algorithm, with Bayesian optimization for hyperparameter selection and k-fold cross-validation to mitigate overfitting, and analyzed model-feature relationships with SHapley Additive exPlanations (SHAP) values. Internal model validation yielded an average accuracy of 0.7560, sensitivity of 0.8959, specificity of 0.7000, and area under the receiver operating characteristic curve (AUROC) of 0.8201. External validation yielded an accuracy of 0.7778, sensitivity of 0.7500, specificity of 0.7931, and an AUROC of 0.7328. These results demonstrated the model’s effectiveness in diagnosing stroke in non-neurological ICU patients with acute neurological manifestations using their electronic health records, making it valuable for the early detection of stroke in ICU patients.


Introduction

In the intensive care unit (ICU), more than 12% of patients hospitalized for non-neurological conditions develop neurological complications (hypertensive encephalopathy, hypoxic brain injury, metabolic encephalopathy, meningitis, mononeuropathy, seizure, status epilepticus, ischemic stroke and hemorrhagic stroke) during treatment1,2. These complications include altered mental status, hemiparesis or weakness of the extremities, seizures, changes in pupil size or pupillary light reflexes (PLR), and aphasia3,4. Neurological events can be attributed to metabolic causes, neurological injuries, epilepsy, sepsis, and stroke5,6. In particular, patients with stroke have nearly double the risk of ICU mortality, 2.5 times the length of ICU stay, and nearly double the overall length of hospitalization compared with patients without neurological complications7.

The stroke incidence rate in the ICU is 1.2%, which is significantly higher than that in the general population (232 per 100,000 person-years)8. However, even when neurological manifestations occur, brain imaging is often delayed because the medical equipment in use prevents timely scanning. Additionally, medications such as sedatives may obscure neurological manifestations, leading to delays in stroke detection3. Consequently, brain cells deprived of blood and oxygen die, leading to permanent neurological deficits and brain death. Moreover, a poor neurologic prognosis after a stroke can influence the decision to withdraw life-sustaining therapy (WLST)9.

According to ICU practice guidelines10, neurological evaluations, including the Glasgow Coma Scale, pupil size, PLR, and muscle strength (Medical Research Council scale), should be performed and documented by nurses every 1–2 h. For patients under sedation, it is recommended that the sedative be temporarily withdrawn to perform neurological evaluations to monitor the neurological status7. In case of any abnormal neurological findings during routine assessments, nurses notify the clinicians that the patient has a neurologic event.

Identifying the onset of stroke is critical for protecting the surviving brain tissue and restoring neurological function11. Diagnosis typically involves magnetic resonance imaging (MRI) and computed tomography (CT). However, imaging is often delayed owing to challenges such as differentiating between sedative-induced mental state alterations and those caused by a stroke. Mechanical ventilation, metabolic encephalopathy, unstable vital signs, extracorporeal membrane oxygenation (ECMO) application, and the unavailability of neurological tests further contribute to these delays3.

When patients are managed by a specialist neurocritical care team, as in the neuro-ICU, the risks of death and neurological deficits are reportedly reduced, even if the stroke occurs in the ICU12. Consequently, many patients with neurological pathologies will have a more informed view of their situation and better knowledge of the treatments required, and good functional outcomes can be predicted more accurately, thereby avoiding unnecessary treatments13. Surprisingly, the brain, the most complex organ in the body, is frequently the most undermonitored during ICU treatments. The increasing availability of non-invasive monitoring options presents a great opportunity to integrate neurocritical care concepts into general ICU care14,15.

Recent advancements have led to the development of clinical decision support systems that incorporate machine learning techniques. Retrospectively collected medical data are increasingly used for epidemiology, prognosis, and patient condition assessment16. Therefore, we aimed to develop a machine learning model by analyzing vital signs and the clinical data recorded before the onset of neurological symptoms. The training cohort of this study included only patients with non-neurological diseases, and the model was designed to support clinicians’ decision-making in cases where brain imaging is delayed for any patient who has acute neurological manifestations in the ICU.

Study population and dataset

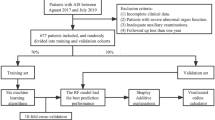

In total, 746 patients were admitted to the ICU for non-neurological diseases during our study period; among these, 206 were included and 540 were excluded based on predefined criteria. Inclusion and exclusion criteria were applied to ensure the selection of a cohort relevant to our study objectives and to maintain the integrity and reliability of the research findings. The specific inclusion criteria were: (a) age 18 years or older, (b) non-neurological patients admitted to the ICU, (c) patients without acute stroke before admission, and (d) patients who underwent brain imaging after the onset of neurological symptoms.

The exclusion criteria were: (a) age less than 18 years (n = 13), (b) no brain imaging results in the ICU (n = 287), (c) no acute neurological symptoms in the ICU (n = 91), (d) acute ischemic or hemorrhagic lesions detected on brain imaging in the emergency room or at a previous hospital (n = 99), (e) neurological symptoms occurred before admission to the ICU (emergency room, hospital before transfer, or during transport) (n = 40), (f) cardiac arrest without any additional neurological symptoms in the ICU (n = 3), (g) microhemorrhage after hypoxic brain injury (n = 5), and (h) patients with unknown timing of neurologic symptoms (n = 2).

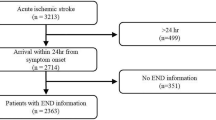

The internal dataset comprised 206 patients, with 60 classified as stroke cases and 146 as non-stroke cases (Fig. 1). There were six missing values in the entire dataset, which were replaced with the median value. Figure 2 illustrates the process of obtaining the external dataset, which included 45 patients (16 stroke and 29 non-stroke cases) with no missing values. The baseline characteristics of the 206 patients included in this study are presented in Table 2. The individuals included in our study had a diagnosis of hemorrhagic or ischemic stroke verified using MRI or CT scans.

According to analytical studies17,18,19,20, the underlying diseases relevant to the risk of a stroke occurring in non-neurological patients are coronary artery diseases (CADs), including myocardial infarction and angina, diabetes mellitus, and atrial fibrillation. Diagnostic blood tests included platelet (PLT) counts and international normalized ratios (INRs)21,22,23,24. Procedural risk factors included ECMO, cardiovascular surgery such as percutaneous coronary intervention (PCI), mechanical ventilation (MV), and renal replacement therapy25. The use of antiaggregants and anticoagulants was also classified as a risk factor26,27, as shown in Table 1. Our study included 18 categorical and 4 numerical features that were carefully selected based on their importance, as reported in previous studies28,29.

Machine learning approaches to stroke diagnosis

To diagnose stroke, we compared the performance of three models: Categorical Boosting (CatBoost)30, support vector machine31, and logistic regression32. After evaluating these models, we selected CatBoost, which demonstrated the best performance, as the final model for stroke prediction. CatBoost is a gradient-boosting algorithm that is particularly effective in handling categorical features. This algorithm employs a breadth-first-search method to construct symmetric tree structures and utilizes ordered target encoding to prevent prediction shifts and target leakage. The use of oblivious trees, which apply the same splitting criterion to every node at a given level of the tree, and permutation-based gradient calculations also help to prevent overfitting.

Bayesian optimization was employed to select the optimal hyperparameters33. This process uses Bayes’ theorem to identify the optimal values for the objective function34. We utilized the BayesSearchCV algorithm from the Scikit-Optimize library for this purpose35. The selected hyperparameters are listed in Table 3.

Specifically, “Border_count” represents the number of divisions for numerical features, assisting in converting continuous features into categorical forms for model processing. “Iterations” indicates the number of trees the model constructs, which is a key factor in the model’s complexity and diagnostic power. “Depth” specifies the tree depth of the model and influences the level of detail in the model’s learned patterns. “L2_leaf_reg” refers to the coefficient in the cost function for L2 regularization, which reduces overfitting by penalizing large weights. “Learning_rate” indicates the speed at which the model updates weights after each training iteration; a lower learning rate can lead to more accurate learning, albeit at a slower pace. “Eval_metric,” the evaluation metric used for validation data, was set to the area under the receiver operating characteristic curve (AUROC) for our model. This metric effectively measures the ability of the model to differentiate between classes, which is crucial for an accurate stroke diagnosis.

Performance evaluation

To evaluate the performance of our model, we used four evaluation metrics: accuracy, sensitivity, specificity, and the AUROC36,37. The evaluation metrics were calculated using the following formulae:

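\[\text{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN},\]

\[\text{Sensitivity}=\frac{TP}{TP+FN},\]

\[\text{Specificity}=\frac{TN}{TN+FP}.\]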

Here, TP, FP, TN, and FN represent the numbers of true positives, false positives, true negatives, and false negatives, respectively.

The accuracy measures the overall correctness of the model’s predictions. The sensitivity measures the true positive rate, indicating the model’s ability to identify the positive cases. Specificity measures the true negative rate, indicating the model’s ability to identify the negative cases. AUROC is the area under the ROC curve, which plots the true positive rate against the false positive rate at various threshold settings. AUROC ranges from 0 to 1, with higher values indicating a better-performing model.
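As a worked check of these definitions, the following Python sketch computes the three threshold-dependent metrics from confusion-matrix counts. The counts used (TP = 12, FN = 4, TN = 23, FP = 6) are not reported directly in the text; they are inferred here from the external cohort size (16 stroke and 29 non-stroke cases) and the reported external sensitivity and specificity. The function name is illustrative.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, sensitivity, and specificity from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
    }


# Counts inferred (assumption) from the external cohort: 16 stroke, 29 non-stroke.
external = classification_metrics(tp=12, fp=6, tn=23, fn=4)
for name, value in external.items():
    print(f"{name}: {value:.4f}")
```

Rounded to four decimals, these counts give 0.7778, 0.7500, and 0.7931, which agree with the external validation figures reported in this paper.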

Methods for training and internal validation

To validate the reliability and consistency of our machine learning model, we conducted an internal validation using 4-fold cross-validation with the Chungbuk National University Hospital (CBNUH) dataset. We allocated 20% of the dataset for testing while preserving the proportion of patients with stroke. The remaining 80% was divided into four equal folds with an even distribution of stroke patients. In each iteration, one fold (25% of the remaining data) was used for validation and the other three (75%) for training.
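The splitting scheme can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: the function name, the random seed, and the round-robin fold assignment are assumptions, and only the class counts (60 stroke, 146 non-stroke) come from the text.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, n_folds=4, seed=0):
    """Return (test_indices, folds) with class proportions preserved.

    folds is a list of n_folds index lists drawn from the non-test data;
    each fold serves once as the validation set.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    test, folds = [], [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        # Deal the remaining indices round-robin so folds stay balanced.
        for position, index in enumerate(indices[n_test:]):
            folds[position % n_folds].append(index)
    return test, folds


# Cohort sizes from the text: 60 stroke (1) and 146 non-stroke (0) patients.
labels = [1] * 60 + [0] * 146
test, folds = stratified_split(labels)
for k, validation in enumerate(folds):
    train = [i for j, fold in enumerate(folds) if j != k for i in fold]
    n_stroke = sum(labels[i] for i in train)
    print(f"fold {k}: train={len(train)} (stroke={n_stroke}), val={len(validation)}")
```

Under these assumptions, every training split contains 36 stroke cases, and the number of non-stroke cases is 87 or 88 depending on which fold is held out for validation.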

Because our training dataset had a class imbalance (36 stroke and 87 non-stroke patients), we employed the synthetic minority oversampling technique (SMOTE) to augment the minority group38. Thus, we obtained a balanced training dataset with equal numbers of stroke and non-stroke patients, with 87 patients in each group.
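The core idea of SMOTE, interpolating between a minority-class sample and one of its nearest minority-class neighbours, can be sketched as below. This is a simplified standard-library illustration on made-up two-dimensional points, not the implementation used in the study. It treats all features as numerical, and how categorical features were handled during oversampling is not stated in the text. The function name, `k`, and the seed are assumptions.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Generate n_synthetic points by interpolating between minority-class
    samples and their k nearest minority-class neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # Nearest minority neighbours of the base point (excluding itself).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic


# Toy stand-in for the 36 stroke cases in a training split (values are made up).
toy_rng = random.Random(1)
stroke = [(toy_rng.gauss(0, 1), toy_rng.gauss(0, 1)) for _ in range(36)]
balanced_stroke = stroke + smote(stroke, n_synthetic=87 - len(stroke))
print(len(balanced_stroke))
```

Generating 87 − 36 = 51 synthetic cases brings the minority class up to the 87 cases of the majority class. Oversampling is applied to the training split only, so validation and test data remain untouched.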

For data standardization, we employed RobustScaler for numerical features such as prothrombin time (PT), PLT count, diastolic blood pressure (DBP), and respiratory rate (RR). This technique is particularly useful when the dataset contains outliers or is not normally distributed. The scaler centers and scales the data based on the median and interquartile range (IQR), using the following formula:

\[y=\frac{x-\text{median}(x)}{\text{IQR}},\]

where \(y\) is the standardized data, \(x\) is the raw data, and \(\text{IQR}\) is the difference between the 75th and 25th percentiles of the data. Because the median and the IQR, which measures the spread of the middle 50% of the data, are barely affected by extreme values, the scaled data are less sensitive to the presence of outliers. Therefore, robust scaling is particularly well-suited for handling data that are not normally distributed, ensuring more reliable scaling in diverse datasets.
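A small standard-library sketch of this scaling is shown below. The input values are hypothetical respiratory rates, and the function name is illustrative. The quartiles are computed with linear interpolation between data points, which is assumed here to match the percentile convention of the scaler used in the study.

```python
import statistics


def robust_scale(values):
    """Scale values as (x - median) / IQR, with IQR = Q3 - Q1."""
    median = statistics.median(values)
    # method="inclusive" interpolates linearly between data points,
    # treating the sample as containing its own minimum and maximum.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return [(x - median) / iqr for x in values]


# Hypothetical respiratory rates with one extreme value.
scaled_rr = robust_scale([12, 16, 18, 20, 60])
print(scaled_rr)  # [-1.5, -0.5, 0.0, 0.5, 10.5]
```

The extreme value of 60 maps to 10.5 but does not change how the other four values are scaled, because neither the median (18) nor the IQR (20 − 16 = 4) depends on it.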

For internal validation, we evaluated the performance of our machine learning model using the CBNUH dataset, making diagnoses based on an optimally determined threshold. During the training process, a threshold was determined for each fold in the cross-validation. We selected the optimal threshold as the one that maximized the sum of sensitivity and specificity. This approach balances correctly identifying true positives (sensitivity) against correctly identifying true negatives (specificity), which is crucial for accurately diagnosing stroke events in patients.
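Maximizing the sum of sensitivity and specificity is equivalent to maximizing Youden's J statistic (sensitivity + specificity − 1). The sketch below scans candidate cut-offs on made-up validation scores. The function name, the data, and the convention that a score at or above the threshold counts as positive are assumptions for illustration.

```python
def best_threshold(y_true, y_score):
    """Return the cut-off maximizing sensitivity + specificity.

    A case is predicted positive when its score is >= the threshold.
    """
    positives = sum(y_true)
    negatives = len(y_true) - positives
    best, best_sum = None, -1.0
    for threshold in sorted(set(y_score)):
        tp = sum(1 for y, s in zip(y_true, y_score) if y == 1 and s >= threshold)
        tn = sum(1 for y, s in zip(y_true, y_score) if y == 0 and s < threshold)
        total = tp / positives + tn / negatives
        if total > best_sum:  # ties keep the lowest threshold
            best, best_sum = threshold, total
    return best, best_sum


# Made-up validation labels and predicted probabilities.
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]
y_score = [0.10, 0.20, 0.30, 0.35, 0.40, 0.55, 0.60, 0.70, 0.80, 0.90]
threshold, total = best_threshold(y_true, y_score)
print(threshold, total)
```

On this toy data the selected cut-off is 0.40, where sensitivity is 1.0 and specificity is 0.8. Because ties are resolved in favour of the lowest threshold, the choice leans toward sensitivity, which is one reasonable convention for a screening tool.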

Method of external validation

To verify the reliability of our model, we evaluated its performance on the Chungnam National University Hospital (CNUH) dataset. For external validation, we used the same models, scaler, and thresholds as those determined in the training and internal validation processes. Because the optimal threshold differed between folds, we rescaled each fold’s predictions so that its optimal threshold corresponded to a value of 0.5. The predicted values were adjusted based on threshold scaling using the following formula:

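\[p'=0.5\times\frac{p}{t}\quad\text{if } p<t,\]

\[p'=0.5+0.5\times\frac{p-t}{1-t}\quad\text{if } p\ge t,\]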

where \(p\) is the prediction value, \(t\) is the optimal threshold for each fold, and \(p'\) is the scaled prediction value at the best threshold. The predictions were adjusted in the same proportion after adjusting the optimal threshold for each fold to scale to 0.5.
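One reading of this threshold adjustment is a piecewise-linear map that sends the fold threshold \(t\) to 0.5 while keeping 0 and 1 fixed. The Python sketch below assumes that form. The function name, the per-fold thresholds, and the predictions are hypothetical and are not taken from the study.

```python
def scale_to_half(p: float, t: float) -> float:
    """Map probability p so that the fold threshold t lands on 0.5.

    Values below t are stretched linearly onto [0, 0.5) and values at or
    above t onto [0.5, 1], preserving order. Requires 0 < t < 1.
    """
    if not 0.0 < t < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    if p < t:
        return 0.5 * p / t
    return 0.5 + 0.5 * (p - t) / (1.0 - t)


# Hypothetical per-fold thresholds and one patient's per-fold predictions.
thresholds = [0.30, 0.42, 0.25, 0.38]
predictions = [0.35, 0.40, 0.20, 0.50]
scaled = [scale_to_half(p, t) for p, t in zip(predictions, thresholds)]
ensemble = sum(scaled) / len(scaled)
print(scaled, ensemble)
```

After this rescaling, a prediction at a fold's own threshold maps to exactly 0.5, so the fold models share a common decision boundary and their scaled outputs can be averaged and compared against 0.5.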

Relative feature importance

We employed SHapley Additive exPlanations (SHAP)47 to analyze the relative importance of the input features used in our model. Originating from game theory, SHAP values provide a way to understand and explain the individual diagnoses made by the model. The diagnosis was deconstructed to demonstrate the impact of each contributing feature. Our analysis began with a global feature importance assessment that collectively examined the model’s interaction with all features. This was followed by an individual feature importance analysis, focusing on the impact of each feature on the model’s diagnosis for each patient. This approach provides a comprehensive understanding of the factors driving the decision-making process of the model.
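The game-theoretic idea behind SHAP can be illustrated with an exact Shapley value computation on a toy model. The sketch below uses the brute-force definition (averaging marginal contributions over all feature orderings), not the tree-specific algorithm that SHAP libraries use in practice. The risk function, its coefficients, and the feature names are made up for illustration.

```python
from itertools import permutations


def shapley_values(features, value):
    """Exact Shapley values: average marginal contribution of each feature
    over all orderings in which features can be added to the coalition."""
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for ordering in orderings:
        coalition = set()
        for feature in ordering:
            before = value(frozenset(coalition))
            coalition.add(feature)
            totals[feature] += value(frozenset(coalition)) - before
    return {f: total / len(orderings) for f, total in totals.items()}


def toy_risk(present):
    """Made-up risk score: the output when only `present` features are known."""
    score = 0.2  # baseline
    if "motor_deficit" in present:
        score += 0.3
    if "anticoagulant" in present:
        score += 0.1
    if {"motor_deficit", "low_dbp"} <= present:
        score += 0.2  # interaction: only counts when both are present
    return score


phi = shapley_values(["motor_deficit", "anticoagulant", "low_dbp"], toy_risk)
print(phi)
```

In this toy case the interaction term is split equally between the two features involved, and the attributions sum to the difference between the full prediction (0.8) and the baseline (0.2). This additivity is the property that lets a single diagnosis be decomposed into per-feature contributions.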

Statistical analyses

Statistical analysis was performed to compare the stroke and non-stroke groups within our dataset and determine the relationship between each feature and the dependent variable (stroke/non-stroke). Numerical features were described using means and standard deviations, whereas categorical features were described using frequencies and proportions. For categorical features, we employed the chi-squared (χ2) test or Fisher’s exact test to assess the significance of associations39,40. The t-test was used for numerical features41.
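For a binary feature, the chi-squared test reduces to a 2 × 2 contingency table. The sketch below applies it to motor deficits, using the counts reported elsewhere in this paper (25 of 48 patients with motor deficits had a stroke; 60 of 206 patients overall had a stroke). It assumes that the 48 patients belong to the 206-patient internal cohort and omits the continuity correction; whether the study applied one is not stated. The function name is illustrative.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared test (no continuity correction) for the table
    [[a, b], [c, d]]; returns (statistic, p_value) with 1 degree of freedom."""
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    statistic = 0.0
    for observed, r, col in ((a, 0, 0), (b, 0, 1), (c, 1, 0), (d, 1, 1)):
        expected = rows[r] * cols[col] / n
        statistic += (observed - expected) ** 2 / expected
    # Survival function of the chi-squared distribution with 1 df.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Rows: motor deficit yes/no; columns: stroke/non-stroke.
# 25 of 48 and 60 of 206 come from the text; the other cells follow by subtraction.
statistic, p_value = chi_squared_2x2(25, 23, 35, 123)
print(f"chi2 = {statistic:.2f}, p = {p_value:.1e}")
```

Under these assumptions the p-value falls below 0.001, in line with the significance level reported for motor symptoms in the Results.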

Results

The CBNUH dataset included 206 patients, comprising 60 stroke and 146 non-stroke patients (Table 4). Most patients underwent MV, in contrast to the small number of patients who underwent ECMO. Altered mental status (AMS) was the most common neurological manifestation of stroke, followed by abnormal motor functions and PLRs. Motor symptoms were most strongly associated with stroke (p < 0.001), followed by CAD (p < 0.0449) as an underlying disease, ECMO (p < 0.0208) and PCI (p < 0.0467) as procedures, sedative use (p < 0.0373) as medication, and DBP (p < 0.0142) as a vital sign.

Internal validation

For internal validation of the model, we analyzed its performance using the ROC curves and confusion matrices based on 4-fold cross-validation (Fig. 3). The model achieved a maximum AUROC of 0.8583, with the red dots on the ROC curves representing the optimal thresholds. The confusion matrices provided quantitative metrics, with an average accuracy of 0.7560, sensitivity of 0.8959, specificity of 0.7000, and an AUROC of 0.8201 (Table 5).

External validation

For external validation of the model, we analyzed its performance using the ROC curves and confusion matrices. To evaluate the generalizability of the model across different datasets, the optimal threshold for each fold was scaled to 0.5. The scaled predictions were averaged to form the basis for the final predictions of the model. The model achieved an accuracy of 0.7778, sensitivity of 0.7500, specificity of 0.7931, and an AUROC of 0.7328. These results, detailed in Fig. 4 and Table 6, demonstrate the model’s performance on an external dataset, providing a realistic measure of its applicability in various clinical settings.

Feature importance using SHAP

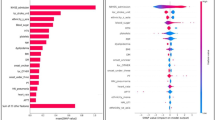

To better understand the importance of each feature in the model, we visualized the SHAP values42. Figure 5 (top) shows the summary plot of the SHAP values for the training dataset. A positive SHAP value indicates that a feature contributes toward a stroke diagnosis, whereas a negative SHAP value indicates that it contributes toward a non-stroke diagnosis. The feature values are visually represented by a color gradient, with red indicating higher values and blue indicating lower values.

Figure 5 (bottom) illustrates the absolute summary plot of the SHAP values for the training dataset. The absolute SHAP values indicate the degree of influence of each feature on the model diagnosis. Notably, the most influential features for stroke diagnosis were motor function, anticoagulant use, DBP, seizure presence, and RR in descending order of impact. SHAP analysis demonstrates the effect of various combinations of factors on the model outcome. For example, patients with low DBP and sedative use have a higher risk of stroke.

Additionally, we analyzed the importance of each feature for each patient. Figure 6 illustrates the SHAP force plots, where features that increase the risk of stroke are marked in red, and those that decrease the risk are shown in blue. The length of the colored segments indicates the magnitude of the impact of each feature on the diagnosis. For example, Fig. 6A shows a case in which the patient was correctly classified as non-stroke, exhibiting no motor symptoms, no anticoagulant or sedative use, and a normal DBP. By contrast, Fig. 6B and C illustrate cases in which patients were classified as stroke; the former shows motor dysfunction, AMS, and an abnormal PLR, and the latter presents motor symptoms and anticoagulant use post-ICU admission but no renal replacement therapy (RRT). Notably, the case in Fig. 6C had a DBP of 56 mm Hg and RR of 24 breaths/min at the onset of neurological symptoms. These results underscore the significance of factors such as neurological symptoms, underlying diseases, coagulation tests, and sedation in the diagnosis of stroke in ICU patients.

Discussion

We developed a machine learning model to diagnose stroke in non-neurological ICU patients with acute neurological manifestations. The developed model helps mitigate the difficulties in neurological assessment and improves patient prognoses. Although numerous studies have assessed the risk of stroke following specific disease conditions or surgeries, there is a paucity of systematic studies on the frequency of in-hospital stroke43,44. The opportunity to perform brain imaging, which serves as the confirmatory test for stroke, is limited owing to the equipment used in the ICU for patient treatment or unstable conditions. Effective evaluation of stroke in these situations is a critical challenge, and stroke has a massive impact on a patient’s WLST decisions and prognosis.

Our study represents a significant first step in developing a machine learning model for the early diagnosis of stroke in non-neurological ICU patients. While there are some limitations due to the relatively small sample size and the retrospective nature of the dataset, the study introduces a new approach to complement existing diagnostic tools in the ICU environment. In particular, it demonstrates the potential to improve the efficiency of patient care by leveraging clinical data for timely diagnosis of stroke, even in situations where brain imaging is delayed or unavailable. Although the model is still in its early stages, it demonstrates significant promise as a useful tool to augment clinical assessment and existing diagnostic methods.

We trained a CatBoost model using data from electronic health records (EHRs) for stroke diagnosis in ICU patients with neurological symptoms. This marks a significant departure from traditional imaging-heavy methods. Previous studies, such as Sheth et al.45, have primarily focused on imaging-based models for stroke detection; by contrast, our approach emphasizes the analysis of clinical symptoms and vital signs. Al-Mekhlafi et al.46 proposed early detection of stroke using AlexNet + SVM, achieving an AUROC of 0.99, primarily through image analysis. Wang et al.47 developed a model for automatically segmenting chronic stroke lesions on MRIs using the Deep Lesion Symmetry ConvNet. Subudhi et al.48 introduced an automated approach for detecting ischemic stroke on brain MRI using Delaunay Triangulation, achieving a dice similarity index of 0.94. These studies emphasize the prevailing focus on advanced imaging techniques for stroke detection. By prioritizing EHR data, we offer an alternative that can be particularly useful in situations where immediate imaging is not feasible, thereby widening the scope of early and efficient stroke detection in diverse clinical scenarios.

As evidenced by both internal and external validation, the model demonstrated robustness and reliability. These metrics are essential for clinical decision-making as they ensure the model’s proficiency in accurately identifying at-risk patients while minimizing false positives. Such capabilities are vital in a clinical setting where the balance between accuracy and timeliness of diagnosis can significantly impact patient outcomes. Furthermore, the SHAP analysis was instrumental in revealing the significance of certain features, such as neurological symptoms, underlying diseases, and blood coagulation tests in the model’s diagnostic process. This insight not only enhances our understanding of the model’s functionality but also highlights the critical role played by these specific factors in stroke diagnoses. Understanding these relationships can guide future model refinement, particularly for fine-tuning feature selection and weighting.

In our study, the initial features included patient demographics, eight underlying diseases (hypertension, diabetes mellitus, hyperlipidemia, atrial fibrillation, cancer, cerebrovascular accident, coronary artery disease, valvular heart disease), six procedures (cardiovascular surgery, non-cardiovascular surgery, PCI, MV, RRT, ECMO), three blood coagulation tests (PT, aPTT, platelet count), four medications (antiaggregant, anticoagulant, vasopressor, sedative), and vital signs (systolic blood pressure, DBP, heart rate and RR), which are known risk factors from similar studies3,49,50,51,52,53,54,55,56,57,58,59,60. However, using all the features in the model to train and validate the model yielded unsatisfactory performance. Accordingly, we tested various combinations of collected features for both training and validation. Through this experimental approach, we found that our model exhibited the best performance with certain combinations, as listed in Table 1, which were used to develop a model for stroke diagnosis. By exploring the optimal combination of various features, we considered their correlations and importance. Although a prior history of stroke or diabetes mellitus is a well-established risk factor for stroke49,59, and admission diagnosis is known to be associated with stroke occurrence61,62, these features were excluded during the exploration of optimal combinations due to the decreased performance of our model when used as input features. Contrary to our initial expectations, only some of the features we selected improved model performance, while others did not. However, this does not mean that there is no association with stroke, and it cannot be ruled out that this may change with changes in the study design (more sample data, different ways of measuring the variables, etc.). While these features were excluded in our study, future research with an expanded sample size should further explore their potential associations. 
Furthermore, future research may consider incorporating a broader range of clinical indicators obtainable in the ICU, potentially identifying novel significant features. Such an expansion is expected to improve patient outcomes by improving the performance of the early stroke diagnostic model.

Many studies have demonstrated a significant association between motor deficits and stroke3,63,64. Furthermore, our model analysis shows that motor deficits have the greatest impact on the model’s predictions, which may make it seem as though our model predicts stroke based on motor deficits alone. However, in our cohort, only 25 of the 48 patients who presented with motor deficits were confirmed to have a stroke. These findings indicate that although motor deficits are a critical indicator, stroke is not the only disorder associated with motor deficits7. Therefore, relying on motor deficits alone is insufficient for reliably diagnosing stroke, underscoring the need for a comprehensive assessment incorporating additional features.

Limitations and future scope

This study had several limitations. First, being a retrospective study based on EHRs and considering only non-neurological ICU patients, it may not have included all the relevant information, such as that related to neurological evaluation or the onset of neurological symptoms. Second, the dataset employed for our model was relatively small compared with those used in existing studies65,66,67. A total of 746 patients were screened; 540 were excluded based on the exclusion criteria, and 206 patients were included. Third, the reason for patient admission was not included in the model training due to the constraints of the limited sample size. Finally, the initial design of our study was to predict stroke occurrence using vital signs at the time of neurologic manifestation, the previous 24 h, and the next 24 h, for a total of 49 time points. However, the model performed rather poorly when using time-series data; thus, the study was redesigned to exclude the time from hospitalization to the onset of neurological manifestations.

The prevalence of stroke in patients with acute neurological manifestations identified using brain imaging was high (29.12%). Patients in the ICU who did not exhibit neurological alterations because of sedation, medical procedures, or other underlying conditions but displayed imaging evidence of stroke were excluded from the study because of the challenge in determining the precise onset of neurological changes. The prevalence would be expected to be even greater if such asymptomatic patients were included; even without them, it was notably higher than that reported in a previous study8.

We envisage our model as a screening instrument for non-neurological ICU patients. The challenges in diagnosing stroke in ICU patients frequently lead to insufficient treatment (thrombolysis or thrombectomy for ischemic stroke and decompression surgery for hemorrhagic stroke). Our model can potentially help determine the correct treatment for the underlying patient condition, thereby improving the prognoses of ICU patients.

A further limitation is the small sample size and small validation cohort, which may reduce generalizability. However, the probability of stroke in non-neurological ICU patients is reported to be less than 9%3,7. This means that while our dataset is small compared to the datasets used in studies involving AI models on other topics, it is not small when restricted to non-neurological critically ill patients and only those with neurological symptoms. Nevertheless, we plan to increase the reliability and accuracy of the model through further analysis and refinement in future research. Specifically, we will focus on increasing the cohort size, incorporating factors not yet used in training, and including more detailed neurological examination findings to strengthen the model’s performance across diverse medical environments and datasets. The model is in the early stages and relies on specific features, which may limit its applicability in scenarios where such data are incomplete or unavailable. Future research involving larger and more diverse datasets and prospective data collection will be critical to refining the model and realizing its full potential to improve patient outcomes in the ICU.

Conclusions

Our study demonstrates the applicability of machine learning in the field of medical diagnostics, particularly for the early detection of strokes in ICU patients. The model was trained on data extracted from EHRs. In the internal validation, the model achieved an average accuracy of 0.7560, sensitivity of 0.8959, specificity of 0.7000, and an AUROC of 0.8201, demonstrating its ability to detect in-hospital stroke cases. In the external validation, the model achieved an accuracy of 0.7778, sensitivity of 0.7500, specificity of 0.7931, and an AUROC of 0.7328, demonstrating the feasibility of in-hospital stroke diagnosis using machine learning.

Data availability

The datasets used and analyzed in our study are not publicly available because of health privacy concerns but are available from the corresponding author upon reasonable request.

References

Ortega-Gutierrez, S. et al. Neurologic complications in non-neurological intensive care units. Neurologist 15, 254–267 (2009).

Bleck, T. P. et al. Neurologic complications of critical medical illnesses. Crit. Care Med. 21, 98–103 (1993).

Jo, S., Chang, J. Y., Jeong, S., Jeong, S. & Jeon, S. B. Newly developed stroke in patients admitted to non-neurological intensive care units. J. Neurol. 267, 2961–2970 (2020).

Howard, R. S. Neurological problems on the ICU. Clin. Med. 7, 148 (2007).

Davies, N. W., Sharief, M. K. & Howard, R. S. Infection-associated encephalopathies: their investigation, diagnosis, and treatment. J. Neurol. 253, 833–845 (2006).

Shorvon, S. The management of status epilepticus. J. Neurol. Neurosurg. Psychiatry 70, 22–27 (2001).

Rubinos, C. & Ruland, S. Neurologic complications in the intensive care unit. Curr. Neurol. Neurosci. Rep. 16, 1–18 (2016).

Park, H. et al. Comparison of the characteristics for in-hospital and out‐of‐hospital ischaemic strokes. Eur. J. Neurol. 16, 582–588 (2009).

Steinberg, A. et al. Frequency of withdrawal of life-sustaining therapy for perceived poor neurologic prognosis. Crit. Care Explor. 3, e0487 (2021).

Bersten, A. D. & Handy, J. Oh’s Intensive Care Manual E-Book (Elsevier Health Sciences, 2013).

Fransen, P. S. et al. MR CLEAN, a multicenter randomized clinical trial of endovascular treatment for acute ischemic stroke in the Netherlands: study protocol for a randomized controlled trial. Trials 15, 1–11 (2014).

Pham, X. et al. Association of Neurocritical care services with mortality and functional outcomes for adults with brain injury: a systematic review and meta-analysis. JAMA Neurol. (2022).

Finley Caulfield, A. et al. Prognostication of ICU patients by providers with and without neurocritical care training. Neurocrit. Care 37, 190–199 (2022).

Patel, B. K. et al. Effect of early mobilisation on long-term cognitive impairment in critical illness in the USA: a randomised controlled trial. Lancet Respir. Med. 11, 563–572 (2023).

Hodgson, C. L. et al. Early active mobilization during mechanical ventilation in the ICU. N. Engl. J. Med. 388, 572–573 (2022).

Halevy, A., Norvig, P. & Pereira, F. The unreasonable effectiveness of data. IEEE Intell. Syst. 24, 8–12 (2009).

Bucerius, J. et al. Stroke after cardiac surgery: a risk factor analysis of 16,184 consecutive adult patients. Ann. Thorac. Surg. 75, 472–478 (2003).

Boehme, A. K., Esenwa, C. & Elkind, M. S. Stroke risk factors, genetics, and prevention. Circ. Res. 120, 472–495 (2017).

Frontera, J. A. et al. Risk factors, mortality, and timing of ischemic and hemorrhagic stroke with left ventricular assist devices. J. Heart Lung Transplant. 36, 673–683 (2017).

Hankey, G. J. Population impact of potentially modifiable risk factors for stroke. Stroke 51, 719–728 (2020).

Kirkpatrick, A. C., Vincent, A. S., Dale, G. L. & Prodan, C. I. Increased platelet procoagulant potential predicts recurrent stroke and TIA after lacunar infarction. J. Thromb. Haemost. 18, 660–668 (2020).

Mayda-Domaç, F., Mısırlı, H. & Yılmaz, M. Prognostic role of mean platelet volume and platelet count in ischemic and hemorrhagic stroke. J. Stroke Cerebrovasc. Dis. 19, 66–72 (2010).

Karhausen, J. A. et al. Platelet counts and postoperative stroke after coronary artery bypass grafting surgery. Anesth. Analg. 125, 1129 (2017).

Prodan, C. I., Stoner, J. A., Cowan, L. D. & Dale, G. L. Higher coated-platelet levels are associated with stroke recurrence following nonlacunar brain infarction. J. Cereb. Blood Flow Metab. 33, 287–292 (2013).

Karhausen, J. A. et al. Platelet counts and postoperative stroke after coronary artery bypass grafting surgery. Anesth. Analg. 125, 1129 (2017).

Heuschmann, P. U. et al. Control of main risk factors after ischaemic stroke across Europe: data from the stroke-specific module of the EUROASPIRE III survey. Eur. J. Prev. Cardiol. 22, 1354–1362 (2015).

An, S. J., Kim, T. J. & Yoon, B.-W. Epidemiology, risk factors, and clinical features of intracerebral hemorrhage: an update. J. Stroke 19, 3 (2017).

Kerber, K. A., Brown, D. L., Lisabeth, L. D., Smith, M. A. & Morgenstern, L. B. Stroke among patients with dizziness, vertigo, and imbalance in the emergency department: a population-based study. Stroke 37, 2484–2487 (2006).

Süt, N. & Çelik, Y. Prediction of mortality in stroke patients using multilayer perceptron neural networks. Turk. J. Med. Sci. 42, 886–893 (2012).

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V. & Gulin, A. CatBoost: unbiased boosting with categorical features. Adv. Neural. Inf. Process. Syst. 31 (2018).

Hearst, M. A., Dumais, S. T., Osuna, E., Platt, J. & Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 13, 18–28 (1998).

Pregibon, D. Logistic regression diagnostics. Ann. Stat. 9, 705–724 (1981).

Snoek, J. et al. Practical Bayesian optimization of machine learning algorithms. Adv. Neural. Inf. Process. Syst. 25, 2951–2959 (2012).

Berrar, D. Bayes’ theorem and naive Bayes classifier. Encyclopedia Bioinf. Comput. Biology: ABC Bioinf. 403–412 (2018).

Head, T. et al. scikit-optimize/scikit-optimize (Zenodo, 2018). https://doi.org/10.5281/zenodo.1207017.

Hanley, J. A. & McNeil, B. J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 143, 29–36 (1982).

Hicks, S. A. et al. On evaluation metrics for medical applications of artificial intelligence. Sci. Rep. 12, 5979 (2022).

Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002).

McHugh, M. L. The chi-square test of independence. Biochemia Med. 23, 143–149 (2013).

Jung, S. H. Stratified Fisher’s exact test and its sample size calculation. Biom. J. 56, 129–140 (2014).

Kim, T. K. T test as a parametric statistic. Korean J. Anesthesiol. 68, 540–546 (2015).

Lundberg, S. M. & Lee, S. I. A unified approach to interpreting model predictions. Adv. Neural. Inf. Process. Syst. 30 (2017).

Kimura, K., Minematsu, K. & Yamaguchi, T. Characteristics of in-hospital onset ischemic stroke. Eur. Neurol. 55, 155–159 (2006).

Kelley, R. & Kovacs, A. Mechanism of in-hospital cerebral ischemia. Stroke 17, 430–433 (1986).

Sheth, S. A. et al. Machine learning–enabled automated determination of acute ischemic core from computed tomography angiography. Stroke 50, 3093–3100 (2019).

Al-Mekhlafi, Z. G. et al. Deep learning and machine learning for early detection of stroke and haemorrhage. Comput. Mater. Contin. 72, 775–796 (2022).

Wang, Y., Katsaggelos, A. K., Wang, X. & Parrish, T. B. in IEEE International Conference on Image Processing (ICIP) 111–115 (IEEE, 2016).

Subudhi, A., Acharya, U. R., Dash, M., Jena, S. & Sabut, S. Automated approach for detection of ischemic stroke using Delaunay Triangulation in brain MRI images. Comput. Biol. Med. 103, 116–129 (2018).

Bucerius, J. et al. Stroke after cardiac surgery: a risk factor analysis of 16,184 consecutive adult patients. Ann. Thorac. Surg. 75, 472–478 (2003).

Boehme, A. K., Esenwa, C. & Elkind, M. S. Stroke risk factors, genetics, and prevention. Circ. Res. 120, 472–495 (2017).

Frontera, J. A. et al. Risk factors, mortality, and timing of ischemic and hemorrhagic stroke with left ventricular assist devices. J. Heart Lung Transplant. 36, 673–683 (2017).

Hankey, G. J. Population impact of potentially modifiable risk factors for stroke. Stroke 51, 719–728 (2020).

Mayda-Domaç, F., Mısırlı, H. & Yılmaz, M. Prognostic role of mean platelet volume and platelet count in ischemic and hemorrhagic stroke. J. Stroke Cerebrovasc. Dis. 19, 66–72 (2010).

Karhausen, J. A. et al. Platelet counts and postoperative stroke after coronary artery bypass grafting surgery. Anesth. Analg. 125, 1129 (2017).

Kirkpatrick, A. C., Vincent, A. S., Dale, G. L. & Prodan, C. I. Increased platelet procoagulant potential predicts recurrent stroke and TIA after lacunar infarction. J. Thromb. Haemost. 18, 660–668 (2020).

Prodan, C. I., Stoner, J. A., Cowan, L. D. & Dale, G. L. Higher coated-platelet levels are associated with stroke recurrence following nonlacunar brain infarction. J. Cereb. Blood Flow Metab. 33, 287–292 (2013).

Heuschmann, P. U. et al. Control of main risk factors after ischaemic stroke across Europe: data from the stroke-specific module of the EUROASPIRE III survey. Eur. J. Prev. Cardiol. 22, 1354–1362 (2015).

An, S. J., Kim, T. J. & Yoon, B.-W. Epidemiology, risk factors, and clinical features of intracerebral hemorrhage: an update. J. Stroke 19, 3 (2017).

Kerber, K. A., Brown, D. L., Lisabeth, L. D., Smith, M. A. & Morgenstern, L. B. Stroke among patients with dizziness, vertigo, and imbalance in the emergency department: a population-based study. Stroke 37, 2484–2487 (2006).

Süt, N. & Çelik, Y. Prediction of mortality in stroke patients using multilayer perceptron neural networks. Turk. J. Med. Sci. 42, 886–893 (2012).

Blacker, D. J. In-hospital stroke. Lancet Neurol. 2, 741–746 (2003).

Vera, R. et al. In-hospital stroke: a multi‐centre prospective registry. Eur. J. Neurol. 18, 170–176 (2011).

Kothari, R. et al. Patients’ awareness of stroke signs, symptoms, and risk factors. Stroke 28, 1871–1875 (1997).

Beal, C. C. Gender and stroke symptoms: a review of the current literature. J. Neurosci. Nurs. 42, 80–87 (2010).

Jo, S., Chang, J. Y., Jeong, S., Jeong, S. & Jeon, S.-B. Newly developed stroke in patients admitted to non-neurological intensive care units. J. Neurol. 267, 2961–2970 (2020).

Park, H. et al. Comparison of the characteristics for in‐hospital and out‐of‐hospital ischaemic strokes. Eur. J. Neurol. 16, 582–588 (2009).

Kanda, M. et al. Effect of admission in the stroke care unit versus intensive care unit on in-hospital mortality in patients with acute ischemic stroke. BMC Neurol. 23, 402 (2023).

Acknowledgements

This research was supported by a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health and Welfare, Republic of Korea (grant number: HI21C1074070021).

Funding

This work was supported by the Bio&Medical Technology Development Program of the National Research Foundation (NRF) funded by the Korean government (MSIT) (No. RS-2024-00440787).

Author information

Contributions

SP and KSY contributed equally to the conception and design of the study; JWK and JYM contributed to data acquisition and analysis; and JBS and GHK contributed to model construction and analysis. JBS and JYM wrote the manuscript. All authors reviewed and edited the manuscript and approved the final version.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval and study design

This study was approved by the Institutional Review Boards (IRBs) of Chungbuk National University Hospital (CBNUH) and Chungnam National University Hospital (CNUH) (IRB No: 2021-02-034-001). All methods were carried out in accordance with the guidelines and regulations of the IRBs of CBNUH and CNUH. We utilized a comprehensive hospitalization dataset of patients admitted to CBNUH between July 2020 and July 2022.

Informed consent

The need for written informed consent was waived owing to the retrospective nature of the study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Maeng, J., Sung, J., Kim, GH. et al. Machine learning-based diagnostic model for stroke in non-neurological intensive care unit patients with acute neurological manifestations. Sci Rep 14, 29610 (2024). https://doi.org/10.1038/s41598-024-80792-6

DOI: https://doi.org/10.1038/s41598-024-80792-6