Abstract

This study aimed to analyze the value of a deep learning algorithm combined with magnetic resonance imaging (MRI) in the risk diagnosis and prognosis of endometrial cancer (EC). Based on the deep learning convolutional neural network (CNN) architecture residual network with 101 layers (ResNet-101), spatial attention and channel attention modules were introduced to optimize the model. MRI image data from 210 EC patients were retrospectively collected for model segmentation and reconstruction, with 140 cases as the test set and 70 cases as the validation set. The performance was compared with that of the traditional ResNet-101 model, the ResNet-101 model based on a spatial attention mechanism (SA-ResNet-101), and the ResNet-101 model based on a channel attention mechanism (CA-ResNet-101), using accuracy (AC), precision (PR), recall (RE), and F1 score as evaluation metrics. Among the 70 cases in the validation set, there were 45 cases of low-risk EC and 25 cases of high-risk EC. Receiver operating characteristic (ROC) curve analysis showed that the area under the curve (AUC) of the proposed model for the diagnosis of high-risk EC (0.918) was markedly larger than that of the traditional ResNet-101 (0.613), SA-ResNet-101 (0.760), and CA-ResNet-101 (0.758) models. The AC, PR, RE, and F1 values of the proposed model for the diagnosis of EC risk were significantly higher (P < 0.05). In the validation set, postoperative recurrence occurred in 13 cases and did not occur in 57 cases. ROC curve analysis showed that the AUC of the proposed model for postoperative recurrence prediction (0.926) was markedly larger than that of the traditional ResNet-101 (0.620), SA-ResNet-101 (0.729), and CA-ResNet-101 (0.767) models. The AC, PR, RE, and F1 values of the proposed model for postoperative recurrence prediction were significantly higher (P < 0.05).
The proposed model in this article, assisted by MRI, presented superior performance in diagnosing high-risk EC patients, with higher sensitivity (Sen) and specificity (Spe), and also demonstrated excellent predictive AC in postoperative recurrence prediction.

Introduction

EC is one of the most common malignant tumors in the female reproductive system, primarily originating from the endometrium and typically occurring in postmenopausal women1,2,3. The incidence of EC significantly increases with the age of women. The typical clinical manifestations of EC include abnormal uterine bleeding (especially postmenopausal bleeding), pelvic pain, and increased vaginal discharge4. Although these symptoms are common, they serve as important indicators in the early diagnosis of EC. EC of different types and grades varies in growth rate and invasiveness, with severe cases potentially spreading to surrounding tissues and distant organs, such as the fallopian tubes, ovaries, and peritoneum5. Epidemiological studies have shown that the incidence of EC is significantly higher in developed countries than in developing countries, which is closely related to population aging and the rising prevalence of chronic conditions such as obesity and diabetes. Additionally, long-term use of estrogen replacement therapy (ERT), infertility treatments, and genetic factors (such as a family history of EC) are also considered significant risk factors for the development of EC6.

Although the diagnosis of EC mainly relies on histopathological examination, its early symptoms lack Spe and are often mistaken for more common non-neoplastic diseases, such as endometrial hyperplasia or endometriosis7,8. Therefore, early recognition and differentiation of these symptoms, especially the diagnosis of postmenopausal bleeding, face significant challenges. Traditional imaging examinations such as ultrasound, CT, and magnetic resonance imaging (MRI) have limitations in the diagnosis of EC, often failing to clearly display the depth of tumor invasion and the spread to surrounding tissues, leading to missed diagnoses or misdiagnoses9,10,11,12. With the rapid development of artificial intelligence (AI) technology, its application in the field of medical imaging is increasingly becoming a focus. Especially in the differential diagnosis and prognostic prediction of EC, AI-assisted MRI technology shows great potential and value13,14. MRI, as a non-invasive, high-resolution imaging technique, has been widely used in the clinical diagnosis of EC. However, traditional MRI image interpretation relies on the experience and professional knowledge of clinical doctors, which poses a risk of subjectivity and misdiagnosis in complex cases15. The introduction of AI technology provides new possibilities for solving these problems, enabling AI to extract complex features from a large amount of imaging data through deep learning algorithms and big data analysis, thereby improving the diagnostic AC and efficiency of EC16,17.

This article aims to analyze the value of deep learning algorithms combined with MRI in the risk diagnosis of EC and the prediction of postoperative recurrence in patients. By optimizing the deep learning CNN architecture ResNet-101 and introducing spatial attention and channel attention modules, the aim is to enhance the diagnostic AC and PR for high-risk EC patients. The specific goal is to evaluate the performance of the improved model in the risk identification of EC and the prediction of postoperative recurrence, and to compare it with traditional models, in hopes of providing a more effective diagnostic tool for clinical practice.

Literature review

The application of AI technology in medical imaging has become increasingly widespread. Clinically, through machine learning and deep learning techniques, AI can identify disease characteristics, quantify lesion areas, and assist doctors in making more accurate diagnoses and treatment plans. To reduce the computational intensity and time consumption of existing algorithms for whole-slide tumor image analysis, Rong et al. (2023)18 used a histology-based detection method built on Yolo (HD-Yolo) and verified that HD-Yolo was superior to existing analysis methods in terms of nuclear detection, classification AC, and computational time for three types of tumor tissues. Shen et al. (2023)19 proposed a medical image segmentation algorithm based on deep neural network technology to address edge blurring and noise interference in medical image segmentation. This algorithm uses a U-Net-like backbone structure and obtains segmentation results through a decoder path with residual and convolutional structures. It was verified that the algorithm can effectively improve segmentation AC for medical images with complex shapes and adhesion between lesions and normal tissues. Zhang et al. (2023)20 designed an improved U-type network (BN-U-Net) algorithm and applied it to the segmentation of spinal MRI medical images in 22 research subjects. The results showed that the processing time of the BN-U-Net algorithm was markedly shorter than that of the fully convolutional network (FCN) and U-Net algorithms, while its AC, Sen, and Spe were higher, indicating that the algorithm markedly improved the quality of spinal MRI images through automatic segmentation. Gao et al. (2020)21 proposed a new method for identifying outliers in unbalanced datasets, using the concept of imaging complexity, enabling deep learning models to optimally learn the inherent imaging features related to a single class, thereby effectively capturing image complexity and enhancing feature learning.
Guan et al. (2023)22 proposed the collaborative integration of model-based learning and data-driven learning in three key components. The first component uses a linear vector space framework to capture the global dependencies of image features; the second component uses a deep network to learn the mapping from linear vector space to nonlinear manifolds; the third component uses a sparse model to capture local residual features. The model was evaluated using MRI data and was found to improve reconstruction in the presence of data perturbations and/or novel image features. Although hysteroscopy combined with endometrial biopsy is the gold standard for diagnosing endometrial lesions, the experience of physicians remains crucial for accurate diagnosis. Raimondo et al. (2024)23 developed a deep learning model to automatically detect and classify endometrial lesions in hysteroscopic images. The model was supplied with 1,500 images from 266 patients, and the results indicated that while the introduction of clinical data could slightly enhance the model’s diagnostic capability, the overall performance still needed improvement, suggesting that future studies should further optimize the model to enhance its practicality.

In addition, AI technology can not only assist in the diagnosis of patient conditions but also be applied to the prediction of patient prognosis. She et al. (2020)24 developed a deep learning survival neural network model based on non-small cell lung cancer (LC) case data and compared it with the tumor, node, and metastasis staging system for LC-specific survival. It was found that the deep learning survival neural network model was more promising than the tumor, node, and metastasis stage on the test dataset in predicting LC-specific survival rates. This novel analysis method can provide reliable individual survival information and treatment recommendations. Zhong et al. (2022)25 developed deep learning features for the prediction of metastasis and prognostic stratification of clinical stage I non-small cell LC and found that a higher deep learning score predicted poorer overall survival and relapse-free survival, suggesting that deep learning features could accurately predict the disease and stratify the prognosis of clinical stage I non-small cell LC. Dong et al. (2020)26 constructed a deep learning imaging nomogram based on CT images of 730 patients with locally advanced gastric cancer to preoperatively predict the number of lymph node metastases in patients. The validation found that the deep learning imaging nomogram had good discrimination for the number of lymph node metastases, markedly better than the conventionally used clinical N staging, tumor size, and clinical models, and was clearly associated with patient survival rates. Jiang et al. (2024)27 developed and externally validated a deep learning-based prognostic stratification system for automatically predicting the overall survival and cancer-specific survival of patients with resected colorectal cancer (CRC). It was found that the prognosis was worse and the disease-specific survival was shorter in the high-risk scoring group than in the low-risk scoring group.
It was ultimately proposed that attention-based unsupervised deep learning could robustly provide prognosis for the clinical outcomes of CRC patients, be promoted in different populations, and serve as a potential new prognostic tool in the clinical decision-making of CRC management. Jiang et al. (2022)28 conducted a retrospective analysis of two positron emission tomography (PET) datasets of diffuse large B-cell lymphoma. A 3D U-Net architecture was trained on patches randomly sampled from the patient’s PET images, and ultimately, an FCN model with a U-Net architecture was proposed. This model can accurately segment lymphoma lesions and provide quantitative information for accurately predicting tumor volume and prognosis in patients.

In summary, AI technology has made notable progress in medical image analysis and prognostic prediction, especially in tumor detection, image segmentation, and survival prediction. Existing studies have shown that AI technology can markedly improve diagnostic AC, reduce processing time, and provide more accurate prognostic assessments through deep learning and machine learning algorithms. However, the application of these technologies in EC still needs further exploration. This article aims to explore the application and value of AI-assisted MRI technology in the differential diagnosis and prognostic prediction of EC, in hopes of providing new ideas and methods for clinical diagnosis and treatment.

In this article, the PubMed and Google Scholar databases were used for literature retrieval, with keywords including “artificial intelligence”, “medical imaging”, “deep learning”, and “tumor detection”. The search covered the period from 2010 to 2024. Relevant studies were selected according to predefined inclusion and exclusion criteria, with a particular focus on the application of machine learning and deep learning techniques in medical imaging. The inclusion criteria encompassed published peer-reviewed studies whose subjects covered various tumor types, such as lung cancer, liver cancer, and breast cancer. For data extraction, information on model types, performance evaluation metrics (such as AC, Sen, and Spe), and application examples was collected from each study. In summary, this article aims to comprehensively collate the current progress of AI technology in medical imaging to guide subsequent research and clinical practice.

Research mode

Model construction

An image processing model based on a deep learning residual network was constructed. Based on ResNet-10129, an attention mechanism was introduced into the model to improve its focus on the region of interest. The attention module includes spatial attention and channel attention, which are combined to improve the feature representation ability of the model. The overall running framework of the model is illustrated in Fig. 1.

To make the model pay more attention to the important channel features in the feature map tensor, a channel attention module is added to each node of the ResNet-101 architecture to obtain a new residual module (Fig. 2). The operation steps of this channel attention residual module are as follows. First, the image features are processed by global average pooling, which compresses the generated feature maps. Then, two fully connected layers are used to model the relationship between the channels, so that the output weights match the input feature channels in number. Finally, the Sigmoid activation function is used to normalize the weights, and the resulting weights represent the attention level of each feature channel.

\(Z\) represents the attention weight, \(L\) represents the input feature, \(L^{*}\) represents the weighted feature, \(\phi(\cdot)\) represents the ReLU activation function, \(M\) denotes the multi-layer perceptron, \(\text{Average pooling}(L)\) denotes global average pooling of the input feature \(L\), and \(\text{Maximum pool}(L)\) denotes global maximum pooling of the input feature \(L\).
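The channel attention step can be illustrated with a minimal NumPy sketch. It assumes a CBAM-style formulation in which a shared two-layer perceptron M is applied to both the average-pooled and max-pooled descriptors, the two outputs are summed, and a Sigmoid produces the weight Z. The function names, random weights, bias-free layers, and reduction ratio of 16 are illustrative assumptions and not the authors' implementation.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(L, W1, W2):
    """Return (Z, L_star) for a feature map L of shape (H, W, C).

    W1 with shape (C, C // r) and W2 with shape (C // r, C) are the weights
    of the shared two-layer perceptron M (hypothetical; biases omitted).
    """
    avg_desc = L.mean(axis=(0, 1))  # global average pooling -> (C,)
    max_desc = L.max(axis=(0, 1))   # global maximum pooling -> (C,)

    def M(v):
        # ReLU between the two fully connected layers
        return np.maximum(v @ W1, 0.0) @ W2

    Z = sigmoid(M(avg_desc) + M(max_desc))  # one weight per channel
    L_star = L * Z                          # broadcast over H and W
    return Z, L_star


# Illustrative input: an 8 x 8 feature map with 64 channels, reduction ratio 16
rng = np.random.default_rng(0)
C, r = 64, 16
L = rng.standard_normal((8, 8, C))
W1 = rng.standard_normal((C, C // r)) * 0.1
W2 = rng.standard_normal((C // r, C)) * 0.1
Z, L_star = channel_attention(L, W1, W2)
```

Each entry of Z lies between 0 and 1 and rescales one channel of L, so channels judged informative are preserved while the others are attenuated.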

The application of spatial attention mechanism can improve the model’s attention to important spatial information. The spatial attention module is added to each node of the ResNet-101 architecture to obtain a new spatial attention residual module (Fig. 3). The specific operation steps are as follows: The input features are processed using max pooling and global average pooling to obtain two channel descriptions. The two channel descriptions are jointly applied to transform the double-layer feature map into a single-layer feature map. After a 5 × 5 convolutional operation, the Sigmoid activation function is used to obtain the weight coefficients.
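The spatial attention steps can be sketched in NumPy as follows. The sketch assumes that the two descriptions are obtained by pooling along the channel axis, that they are concatenated into a two-layer map, and that a single 5 × 5 kernel with zero padding reduces them to one layer. The helper names and random kernel are hypothetical, and the naive loop stands in for a framework convolution layer.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2d_same(x, kernel):
    """Naive zero-padded convolution of x (H, W, C_in) with kernel (k, k, C_in).

    Computed as cross-correlation, as deep learning frameworks do. Returns (H, W).
    """
    k = kernel.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    H, W, _ = x.shape
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            out[i, j] = np.sum(xp[i:i + k, j:j + k, :] * kernel)
    return out


def spatial_attention(L, kernel):
    """Return (weights, weighted_features) for a feature map L of shape (H, W, C)."""
    avg_map = L.mean(axis=2, keepdims=True)  # average pooling over channels
    max_map = L.max(axis=2, keepdims=True)   # max pooling over channels
    stacked = np.concatenate([avg_map, max_map], axis=2)  # double-layer map
    weights = sigmoid(conv2d_same(stacked, kernel))       # single-layer map
    return weights, L * weights[..., None]


# Illustrative input: a 16 x 16 feature map with 32 channels
rng = np.random.default_rng(0)
L = rng.standard_normal((16, 16, 32))
kernel = rng.standard_normal((5, 5, 2)) * 0.1
weights, L_weighted = spatial_attention(L, kernel)
```

The resulting weight map has one coefficient per spatial position, shared by all channels at that position.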

Because a serial structure stacks more nonlinear activation functions, better non-residual blocks can be obtained. Therefore, the spatial attention module and the channel attention module are arranged in series in the model. This arrangement allows the model to compute both the information of different channels in the feature map and the local spatial information of each channel, thereby enhancing its ability to learn image features. In this way, an improved ResNet-101 model based on spatial attention and channel attention mechanisms was obtained.

Evaluation indicators of the model

AC, PR, RE, and F1 were used to evaluate the diagnostic performance of the model. The traditional ResNet-101, SA-ResNet-101, and CA-ResNet-101 models were introduced for comparison. ROC curve analysis was used to assess the performance of each model in EC risk diagnosis and in the prediction of patient prognosis.

TP denotes true positive, FN denotes false negative, TN denotes true negative, and FP denotes false positive.
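The four metrics follow from these counts through their standard definitions: AC = (TP + TN) / (TP + FN + TN + FP), PR = TP / (TP + FP), RE = TP / (TP + FN), and F1 = 2 · PR · RE / (PR + RE). A short Python sketch is given below. The function name and the confusion counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp, fn, tn, fp):
    """Compute AC, PR, RE, and F1 from confusion-matrix counts."""
    ac = (tp + tn) / (tp + fn + tn + fp)
    pr = tp / (tp + fp) if (tp + fp) else 0.0
    re = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * pr * re / (pr + re) if (pr + re) else 0.0
    return {"AC": ac, "PR": pr, "RE": re, "F1": f1}


# Hypothetical counts for a 70-case set with 25 positive (high-risk) cases
m = classification_metrics(tp=20, fn=5, tn=40, fp=5)
```

Because F1 is the harmonic mean of PR and RE, it drops sharply when either one is low, which makes it informative for imbalanced classes.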

Datasets collection

Retrospectively, 210 patients with EC who underwent pelvic MRI examinations at the imaging center of XXX Hospital from January 2021 to May 2024 were included as study samples. Among them, 140 cases were used as the test set, and 70 cases as the validation set. All patients were pathologically confirmed, and information such as basic patient data (Table 1), imaging pictures, and postoperative recurrence was collected, taking whether the patient has a recurrence after surgery as the endpoint event. According to the ESMO-ESTRO-ESP guidelines, patients were divided into low-risk EC and high-risk EC.

The patients’ MRI images were transferred to the workstation, where image processing algorithms were used to segment and reconstruct the images.

Inclusion criteria: (1) Patients diagnosed with EC; (2) Age between 30 and 64 years; (3) Patients who underwent pelvic MRI within the specified time and had complete imaging data; (4) Complete clinical information, including basic data, imaging pictures, postoperative recurrence status, etc.

Exclusion criteria: (1) Patients with other malignant tumors; (2) Substandard MRI image quality (such as motion artifacts, severe noise); (3) Incomplete follow-up data.

Experimental environment

The experiments were primarily conducted under the TensorFlow deep learning framework, accelerated by GPU. The models and training code were written in Python version 3.6, with the integrated development environment being PyCharm. The configuration was as follows: the graphics card was NVIDIA GeForce RTX 2080 Ti, with 64GB of memory, a central processor of AMD Ryzen Threadripper 2950X, and the system was Windows 10.

Parameters setting

The model parameters were as follows: the convolutional kernel size was 5 × 5, the convolution operation step was 1, the filter size was 5 × 5, the depth was set to 101 layers, the scaling factor was 16, the regularization parameter was 2, and the initial learning rate was 0.01.

Statistical processing

SPSS 22.0 statistical software was employed. Quantitative data conforming to a normal distribution were presented as mean ± standard deviation (\(\overline{x}\) ± s), quantitative data that did not conform to a normal distribution were expressed using the median and interquartile range, and categorical data were expressed using frequency and percentage (%). Non-normally distributed quantitative data were analyzed by the Mann-Whitney test, normally distributed quantitative data by one-way ANOVA, and categorical data by the chi-square test. The diagnostic performance of each model was assessed by plotting the ROC curves, and the AUC was calculated to compare the Sen and Spe of different models. A two-tailed P < 0.05 was considered statistically significant. The statistical analysis results indicated that the improved deep learning model demonstrated statistically significant advantages in the diagnosis of high-risk EC patients and in the prediction of postoperative recurrence.
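The relationship between the AUC, Sen, and Spe can be illustrated in plain Python. The sketch computes the AUC through its rank interpretation, that is, the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, and computes Sen and Spe at a single threshold. The labels, scores, threshold, and function names are hypothetical and do not come from the study data or from SPSS.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive-negative pairs ranked correctly (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sen_spe(labels, scores, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if y == 1 and s >= threshold)
    fn = sum(1 for y, s in pairs if y == 1 and s < threshold)
    tn = sum(1 for y, s in pairs if y == 0 and s < threshold)
    fp = sum(1 for y, s in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical model outputs: 1 = high-risk, 0 = low-risk
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
auc = roc_auc(labels, scores)
sen, spe = sen_spe(labels, scores, threshold=0.5)
```

Each threshold gives one (Sen, Spe) point on the ROC curve, whereas the AUC summarizes discrimination across all thresholds.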

Performance evaluation

Imaging data of cases

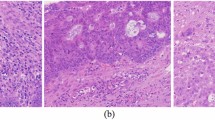

In Fig. 4, an MRI image of a female patient showed low signal intensity on T1WI, elevated signal intensity on T2WI, and increased signal on DWI, with the endometrium being abnormally thickened. The pathological results indicated that the patient had endometrioid carcinoma, which was moderately to highly differentiated, and the depth of cancer cell infiltration was less than half the thickness of the myometrium, also involving the cervical canal.

Figure 5 presents the MRI image of a female patient with moderate signal on T1WI, high signal on T2WI, high signal on DWI, and abnormal thickening of the endometrium. The pathological results suggested that the patient had endometrial adenocarcinoma, moderately or highly differentiated, and that the depth of infiltration of cancer cells was greater than half of the thickness of the myometrium.

Diagnostic performance of different models for EC

In the validation set, there were 45 cases of low-risk EC and 25 cases of high-risk EC. According to the ROC curve analysis (Fig. 6), the AUC of the proposed model was 0.918, the SA-ResNet-101 model was 0.760, the CA-ResNet-101 model was 0.758, and the traditional ResNet-101 model was 0.613. The AUC of the proposed model for the diagnosis of high-risk EC was markedly larger.

Further comparison of the evaluation indicators showed that the AC, PR, RE, and F1 values of the proposed model were significantly higher than those of the other three models (P < 0.05) (Fig. 7).

Diagnostic performance of different models for patient prognosis

After follow-up data collation, among the 70 patients in the validation set, 13 cases (9 at the primary site, 3 in lymph nodes, and 1 in the abdominal cavity) were observed to have recurrence after surgery, while 57 cases (34 at the primary site, 11 in lymph nodes, 8 in the cervix, and 4 in the abdominal cavity) did not experience recurrence. Figure 8 illustrates that the AUC of the proposed model was 0.926, the SA-ResNet-101 model was 0.729, the CA-ResNet-101 model was 0.767, and the traditional ResNet-101 model was 0.620. The AUC of the proposed model for postoperative recurrence prediction of patients was markedly larger.

Figure 9 illustrates that the AC, PR, RE, and F1 values of the proposed model for postoperative recurrence prediction were significantly higher than those of the other three models (P < 0.05).

Discussion

In this article, a deep learning-based model was developed, which, combined with MRI, successfully enhanced the diagnostic AC and predictive ability for postoperative recurrence of EC. By incorporating spatial attention and channel attention modules, the improved model demonstrated significantly higher Sen and Spe in identifying high-risk EC patients compared to the traditional ResNet-101 model and other comparative models. Furthermore, in predicting postoperative recurrence, the improved model also showed higher AC, PR, RE, and F1 score. These results indicate that deep learning technology holds significant potential in improving the diagnosis and management of EC.

The early diagnosis of EC is primarily challenging due to its nonspecific symptoms, such as abnormal bleeding and menstrual irregularities, which are often confused with other gynecological diseases30,31. Although imaging examinations like ultrasound, CT, and MRI are widely used, they have limited Sen for small or locally invasive tumors. Confirmation of diagnosis typically relies on histopathological examination, but the process is often complex due to the diversity of pathological types and grading32,33. Therefore, integrating clinical manifestations, imaging, and pathological results is crucial for accurate diagnosis. Deep learning image segmentation algorithms can precisely identify key structures in medical imaging, providing quantitative analysis and diagnostic support for physicians34.

Based on this, this article used ResNet-101 as the foundation and introduced spatial attention and channel attention modules for optimization, allowing the model to calculate the information of different channels in the feature map and the local spatial information of each channel at the same time. In the past, deep learning models often needed to process a large amount of feature information when dealing with complex tasks. Traditional CNNs such as ResNet-101 usually weight and process the features of each channel globally when dealing with feature maps, without fully considering the interaction between different channels and local spatial information35. The introduction of spatial attention and channel attention modules enables the model to be more flexible and accurate when learning features, effectively improving the model’s ability to learn complex features and relationships36. To further analyze the performance of the model, 210 EC patients were retrospectively included as study samples. Among the 70 cases in the validation set, there were 45 cases of low-risk EC and 25 cases of high-risk EC. ROC curve analysis showed that the AUC of the proposed model (0.918) for the diagnosis of high-risk EC was markedly larger than that of the traditional ResNet-101 model (0.613), the SA-ResNet-101 model (0.760), and the CA-ResNet-101 model (0.758). This is similar to the research results of Men et al. (2018)37, indicating that the proposed model has better Sen and Spe in the diagnosis of high-risk EC and can more effectively identify high-risk patients, which is of great significance for clinical decision-making and patient management. The study by Bús et al. (2021)38, based on data from patients who underwent radical hysterectomy, analyzed the reliability of preoperative MRI in the staging of early EC.
They found that conventional MRI had a low Sen but high Spe in EC staging, emphasizing the importance of combining other imaging methods for more accurate assessment. However, the model proposed in this article showed significantly higher AC, PR, RE, and F1 values in EC risk diagnosis than traditional methods (P < 0.05), which differs from the conclusions of Bús et al. This discrepancy may be attributed to several reasons: firstly, the model in this article incorporated deep learning technology, leveraging improved feature extraction and analysis capabilities to more accurately capture the risk characteristics of EC. Secondly, the MRI method in the study by Bús et al. may not have fully utilized the latest imaging processing technologies, leading to its insufficient Sen. Therefore, the advantage of the improved model in this article lies in its comprehensive performance and discriminative ability, which makes it perform more outstandingly in the diagnosis of high-risk EC.

The prediction of postoperative recurrence probability is meaningful for improving patient prognosis. By integrating pathological factors, molecular markers, imaging examinations, hematological indicators, and individual characteristics, it is possible to identify the risk of recurrence early and adjust follow-up and treatment plans in a timely manner, thereby improving the survival rate and quality of life (QoL) of patients39,40. Eriksson et al. (2021)41 used ProMisE for preoperative tumor recurrence classification and prediction in women with EC. Compared with the ESMO classification, the combination of demographic characteristics, ultrasound examination results, and ProMisE subtypes had better preoperative predictive ability for tumor recurrence or progression, supporting its application in preoperative risk stratification of women with EC. In this article, there were 13 cases of postoperative recurrence and 57 cases without recurrence in the validation set. The AUC of the proposed model (0.926) for predicting postoperative recurrence was markedly larger than that of the traditional ResNet-101 model (0.620), the SA-ResNet-101 model (0.729), and the CA-ResNet-101 model (0.767). This is similar to the aforementioned research results, suggesting that the improved model has better Sen and Spe in predicting postoperative recurrence and can more effectively identify patients at high risk of recurrence. It can provide a more accurate basis for clinical decision-making, optimize treatment and follow-up plans, and improve patient prognosis42. In addition, the AC, PR, RE, and F1 values of the proposed model for predicting postoperative recurrence were significantly higher than those of the other three models (P < 0.05). This high predictive AC can help doctors intervene earlier in patients at high risk of recurrence, provide personalized treatment strategies, and reduce the risk of postoperative recurrence and progression. However, the F1 value of the improved model was relatively low.
The reason for this may be the balance problem between PR and RE in the model, which may lead to insufficient performance of the model in identifying specific categories or situations. Therefore, future improvements should focus on optimizing the model’s training data, adjusting the model’s threshold, or further optimizing the model’s deep learning structure to improve its performance in complex medical images.

Conclusion

Research contribution

By using ResNet-101 and introducing spatial attention and channel attention modules, the diagnostic and postoperative recurrence prediction capabilities for high-risk EC patients have been successfully enhanced. Compared with traditional models, the improved model shows superior performance in diagnosing high-risk EC in the validation set, with higher Sen and Spe, and also shows excellent predictive AC in postoperative recurrence prediction. In summary, the improved model can not only help doctors discover and diagnose high-risk EC patients earlier but also effectively predict the risk of postoperative recurrence, providing a scientific basis for the formulation of personalized treatment and follow-up plans, and helping to improve patients’ survival rates and QoL. These findings emphasize the potential and importance of deep learning image segmentation algorithms in the field of medical imaging, and further verification and promotion of the application of this technology should be carried out in future research and clinical practice.

Future works and research limitations

Although the improved deep learning model has performed well in the diagnosis and postoperative recurrence prediction of EC, there are still some deficiencies and directions for future improvement.

Firstly, the sample size of this article is relatively small, especially for the validation set of postoperative recurrence prediction. This may affect the model’s extensive applicability and generalization ability, and it is necessary to expand the sample size in the future to verify the stability and reliability of the model. Secondly, although spatial attention and channel attention modules have been introduced, further optimization of the model’s deep learning architecture and parameter settings is still needed to improve the model’s Sen and Spe when dealing with complex medical images and pathological features. In addition, this article mainly relies on medical imaging and pathological results as the main basis for model training and verification, and in the future, more clinical data and molecular marker information can be integrated to establish a more comprehensive and multi-level prediction model. The dataset lacked specific information on the location of recurrences (such as local regions or distant metastases), and this limitation may restrict a comprehensive understanding of the recurrence prediction performance.

Future research directions include further optimizing the algorithms and architecture of deep learning models and exploring multi-modal data fusion methods, such as combining imaging, molecular biology, and clinical features, to enhance the comprehensive diagnostic ability for EC. In addition, applying remote monitoring technology and big data analysis to achieve real-time tracking and prediction of long-term follow-up and treatment effects will help the development and promotion of personalized medicine. Finally, to enhance the completeness and AC of the study, future research should consider collecting and analyzing detailed data on the location of recurrences to further assess the impact of different recurrence sites on the performance of predictive models. This will aid in formulating more precise clinical decisions and personalized treatment plans.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author Xinyu Qi on reasonable request via e-mail 13094781739@163.com.

References

Crosbie, E. J. et al. Endometrial cancer. Lancet 399(10333), 1412–1428 (2022).

Nees, L. K. et al. Endometrial hyperplasia as a risk factor of endometrial cancer. Arch. Gynecol. Obstet. 306(2), 407–421 (2022).

Wang, X., Glubb, D. M. & O’Mara, T. A. Dietary factors and endometrial cancer risk: a Mendelian randomization study. Nutrients 15(3), 603 (2023).

Zhao, S. et al. Endometrial cancer in Lynch syndrome. Int. J. Cancer 150(1), 7–17 (2022).

Sbarra, M., Lupinelli, M., Brook, O. R., Venkatesan, A. M. & Nougaret, S. Imaging of endometrial cancer. Radiol. Clin. N. Am. 61(4), 609–625 (2023).

Karpel, H. C., Slomovitz, B., Coleman, R. L. & Pothuri, B. Treatment options for molecular subtypes of endometrial cancer in 2023. Curr. Opin. Obstet. Gynecol. 35(3), 270–278 (2023).

Marín-Jiménez, J. A., García-Mulero, S., Matías-Guiu, X. & Piulats, J. M. Facts and hopes in immunotherapy of endometrial cancer. Clin. Cancer Res. 28(22), 4849–4860 (2022).

Karpel, H., Slomovitz, B., Coleman, R. L. & Pothuri, B. Biomarker-driven therapy in endometrial cancer. Int. J. Gynecol. Cancer 33(3), 343–350 (2023).

Jamieson, A. & McAlpine, J. N. Molecular profiling of endometrial cancer from TCGA to clinical practice. J. Natl. Compr. Cancer Netw. 21(2), 210–216 (2023).

Tronconi, F. et al. Advanced and recurrent endometrial cancer: state of the art and future perspectives. Crit. Rev. Oncol. Hematol. 180, 103851 (2022).

Banz-Jansen, C., Helweg, L. P. & Kaltschmidt, B. Endometrial cancer stem cells: where do we stand and where should we go? Int. J. Mol. Sci. 23(6), 3412 (2022).

Maheshwari, E. et al. Update on MRI in evaluation and treatment of endometrial cancer. Radiographics 42(7), 2112–2130 (2022).

Oaknin, A. et al. Endometrial cancer: ESMO Clinical Practice Guideline for diagnosis, treatment and follow-up. Ann. Oncol. 33(9), 860–877 (2022).

Shen, Y., Yang, W., Liu, J. & Zhang, Y. Minimally invasive approaches for the early detection of endometrial cancer. Mol. Cancer 22(1), 53. https://doi.org/10.1186/s12943-023-01757-3 (2023). Erratum in: Mol. Cancer 22(1), 76 (2023).

Kuhn, T. M., Dhanani, S. & Ahmad, S. An overview of endometrial cancer with novel therapeutic strategies. Curr. Oncol. 30(9), 7904–7919 (2023).

Yang, Y., Wu, S. F. & Bao, W. Molecular subtypes of endometrial cancer: implications for adjuvant treatment strategies. Int. J. Gynaecol. Obstet. 164(2), 436–459 (2024).

Salinas, L. et al. Goniotomy using the kahook dual blade in severe and refractory glaucoma: 6-month outcomes. J. Glaucoma 27(10), 849–855 (2018).

Rong, R. et al. A deep learning approach for histology-based nucleus segmentation and tumor microenvironment characterization. Mod. Pathol. 36(8), 100196 (2023).

Shen, T., Huang, F. & Zhang, X. CT medical image segmentation algorithm based on deep learning technology. Math. Biosci. Eng. 20(6), 10954–10976 (2023).

Zhang, Q. et al. Spine medical image segmentation based on deep learning. J. Healthc. Eng. 2021, 1917946. https://doi.org/10.1155/2021/1917946 (2021). Retraction in: J. Healthc. Eng. 2023, 9810950 (2021).

Gao, L., Zhang, L., Liu, C. & Wu, S. Handling imbalanced medical image data: a deep-learning-based one-class classification approach. Artif. Intell. Med. 108, 101935 (2020).

Guan, Y. et al. Subspace model-assisted deep learning for improved image reconstruction. IEEE Trans. Med. Imaging 42(12), 3833–3846 (2023).

Raimondo, D. et al. Detection and classification of hysteroscopic images using deep learning. Cancers (Basel) 16(7), 1315 (2024).

She, Y. et al. Development and validation of a deep learning model for non-small cell lung cancer survival. JAMA Netw. Open 3(6), e205842 (2020).

Zhong, Y. et al. Deep learning for prediction of N2 metastasis and survival for clinical stage I non-small cell lung cancer. Radiology 302(1), 200–211 (2022).

Dong, D. et al. Deep learning radiomic nomogram can predict the number of lymph node metastasis in locally advanced gastric cancer: an international multicenter study. Ann. Oncol. 31(7), 912–920 (2020).

Jiang, X. et al. End-to-end prognostication in colorectal cancer by deep learning: a retrospective, multicentre study. Lancet Dig. Health 6(1), e33–e43 (2024).

Jiang, C. et al. Deep learning-based tumour segmentation and total metabolic tumour volume prediction in the prognosis of diffuse large B-cell lymphoma patients in 3D FDG-PET images. Eur. Radiol. 32(7), 4801–4812 (2022).

Üreten, K., Maraş, Y., Duran, S. & Gök, K. Deep learning methods in the diagnosis of sacroiliitis from plain pelvic radiographs. Mod. Rheumatol. 33(1), 202–206 (2023).

Liu, M. Z. et al. Weakly supervised deep learning approach to breast MRI assessment. Acad. Radiol. 29(Suppl 1), S166–S172 (2022).

Sharma, N. et al. UMobileNetV2 model for semantic segmentation of gastrointestinal tract in MRI scans. PLoS ONE 19(5), e0302880 (2024).

Azam, H. et al. Fully automated skull stripping from brain magnetic resonance images using mask RCNN-based deep learning neural networks. Brain Sci. 13(9), 1255 (2023).

Cekic, E., Pinar, E., Pinar, M. & Dagcinar, A. Deep learning-assisted segmentation and classification of brain tumor types on magnetic resonance and surgical microscope images. World Neurosurg. 182, e196–e204 (2024).

Lefebvre, T. L. et al. Development and validation of multiparametric MRI-based radiomics models for preoperative risk stratification of endometrial cancer. Radiology 305(2), 375–386 (2022).

Zhao, M. et al. MRI-based radiomics nomogram for the preoperative prediction of deep myometrial invasion of FIGO stage I endometrial carcinoma. Med. Phys. 49(10), 6505–6516 (2022).

Ironi, G. et al. Hybrid PET/MRI in staging endometrial cancer: diagnostic and predictive value in a prospective cohort. Clin. Nucl. Med. 47(3), e221–e229 (2022).

Men, K. et al. Cascaded atrous convolution and spatial pyramid pooling for more accurate tumor target segmentation for rectal cancer radiotherapy. Phys. Med. Biol. 63(18), 185016 (2018).

Bús, D., Nagy, G., Póka, R. & Vajda, G. Clinical impact of preoperative magnetic resonance imaging in the evaluation of myometrial infiltration and lymph-node metastases in stage I endometrial cancer. Pathol. Oncol. Res. 27, 611088 (2021).

Kim, J. Y. Mixed endometrial stromal and smooth muscle tumor of the uterus with unusual morphologic features in a 35-year-old nulliparous woman: a case report. Am. J. Case Rep. 23, e935944 (2022).

Iida, T. et al. Small cell neuroendocrine carcinoma of the endometrium with difficulty identifying the original site in the Uterus. Tokai J. Exp. Clin. Med. 45(4), 156–161 (2020).

Eriksson, L. S. E. et al. Combination of proactive molecular risk classifier for endometrial cancer (ProMisE) with sonographic and demographic characteristics in preoperative prediction of recurrence or progression of endometrial cancer. Ultrasound Obstet. Gynecol. 58(3), 457–468 (2021).

Asami, Y. et al. Predictive model for the preoperative assessment and prognostic modeling of lymph node metastasis in endometrial cancer. Sci. Rep. 12(1), 19004 (2022).

Author information

Contributions

Xinyu Qi: Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing, visualization, supervision, project administration, funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The study involving human participants was reviewed and approved by the Medical School, Taizhou University Ethics Committee (Approval Number: 2022.954521). The participants provided written informed consent to participate in this study. All methods were performed in accordance with relevant guidelines and regulations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qi, X. Artificial intelligence-assisted magnetic resonance imaging technology in the differential diagnosis and prognosis prediction of endometrial cancer. Sci Rep 14, 26878 (2024). https://doi.org/10.1038/s41598-024-78081-3
