Abstract

With the rapidly increasing amount of materials data being generated in a variety of projects, efficient and accurate classification of atomistic structures is essential. A current barrier to effective database queries lies in the often ambiguous, inconsistent, or completely missing classification of existing data, highlighting the need for standardized, automated, and verifiable classification methods. This work proposes a robust solution for identifying and classifying a wide spectrum of materials through an iterative technique called symmetry-based clustering (SBC). Because SBC is not a machine-learning-based method, it requires no prior training. Instead, it identifies clusters in atomistic systems by automatically recognizing common unit cells. We demonstrate the potential of SBC to provide automated, reliable classification and to reveal well-known symmetry properties of various materials. Even noisy systems are shown to be classifiable, demonstrating the suitability of our algorithm for real-world data. The software implementation is provided in the open-source Python package MatID, exploiting synergies with popular atomic-structure manipulation libraries and extending the accessibility of those libraries through the NOMAD platform.


Introduction

Materials science plays a pivotal role in advancing technology and addressing global challenges by discovering novel materials with tailored properties and functionalities. With the ever-expanding materials data collections1,2,3,4,5,6,7,8,9,10,11 driven by pioneering projects, efficient identification and classification of structures is essential for effective searches and for guiding materials design and optimization. Since the properties of a material depend very sensitively on its structure, targeted analyses require properly tagged database entries, ideally based on a standardized classification system. However, achieving consistent classifications is a challenge. Many datasets have no classification at all, and when they do, they often rely on custom or unspecified definitions, leading to inconsistency and ambiguity. Therefore, automated and verifiable methods for analyzing and categorizing atomistic structures are crucial for handling not only the vast and heterogeneous data from atomistic calculations but also the wealth of experimental data that is becoming increasingly available.

Libraries like ASE12 and pymatgen13 provide valuable routines for automating various tasks, including surface creation, generating crystal structures with desired symmetry properties, constructing supercells for adsorbate systems, and more. In contrast, our focus is on solving the inverse problem of reconstructing fundamental building blocks from a given structure. Previous efforts in the automatic classification of atomistic structures have focused on specific domains, such as defect detection14,15,16,17,18, identification of local structural environments in molecular systems19, or low-dimensional components in layered solids20. Machine-learning-based approaches also cover a wide range of related applications. These include deep neural networks for the classification of crystal structures21,22, of the microstructure of low-carbon steel23, or of X-ray powder diffraction patterns24. Often, specific materials like metals and oxides, or hierarchical structures like zeolites, are the focus of dedicated studies25. For example, an automated metallographic image-segmentation method using an ensemble of modified U-Nets for determining morphological features of microstructures in metals has been proposed26. The detection of porosity defects in aluminum alloys using convolutional neural networks has also been explored27. Furthermore, perovskite crystal structures have been classified using the Light Gradient Boosting Machine (LightGBM) algorithm, predicting structures based on features like electronegativity and bond lengths28. Similarly, a layered-crystal determination program based on the topology-scaling algorithm has identified layered di-metal chalcogenides (LDCs) with various structures and properties, including band-gap layered semiconductors and magnetic materials29.

Notably, many of the machine-learning-based methods discussed above share a limitation: while they perform well within specific material domains, their general applicability across a diverse spectrum of materials or structures remains an open issue. The Inorganic Crystal Structure Database alone currently contains 290 000 entries, with about 16 000 structures added each year30. In this vast and rapidly expanding materials space, developing a comprehensive classification scheme that relies on a specific training set presents significant challenges. Because such models struggle to generalize beyond their initial training data, they would require frequent retraining to stay relevant. While retraining itself can nowadays be relatively straightforward, repeatedly curating new training sets and retraining the models can quickly become impractical.

In this work, we pursue a general approach for material classification. Our goal is not only to detect particular regions in a very broad range of multi-phase materials, but also to describe them comprehensively using established symmetry concepts. To this end, we present a new iterative technique called symmetry-based clustering (SBC). It extends the principles established in previous work31, which focused on classifying and analyzing the topology of surfaces and 2D materials. This previous method was able to handle outlier atoms like adsorbates or defects, but was limited to identifying only one distinct component per structure. Our approach goes beyond this by decomposing complex atomistic structures into multiple distinct components based on their symmetry properties. Unlike the work of Ref. 31, SBC aims at identifying all meaningful clusters of atoms within a structure, enabling a comprehensive understanding of its composition. Additionally, SBC facilitates symmetry analysis for each identified component by providing information about the unit cell from which a cluster originates. This process is illustrated in Fig. 1. Importantly, it eliminates the need for training data or predefined search patterns, and it also makes it possible to link components in multi-phase structures to known entries in materials databases through the symmetry analysis of the unit cells involved.

In the following sections, we describe the underlying principles and extensions with respect to previous efforts. The presented results offer insight into the strengths and limitations of the algorithm. Our discussion encompasses implications for materials science and future research directions, showing how SBC can accelerate materials discovery and design by automating the classification of materials and providing valuable guidance in a data-driven materials landscape. The clustering tools are implemented in the newest version of the Python package MatID https://github.com/nomad-coe/matid, which is part of the NOMAD data platform2. In addition, MatID can be integrated with ASE12, a widely used library for manipulating atomic structures.

Methods

Symmetry-based clustering

The basis for SBC is provided by a tool developed by Himanen and coworkers31, which finds regions in an atomistic structure that exhibit repeating patterns. Starting from an atom of choice, it searches its neighborhood for repetitions of the same atomic species in order to construct the most likely unit cell. If such a unit cell is found, all atoms that can be replicated by repetition of this unit cell are assigned to a single region, which is then returned. More information about this procedure can be found in Ref. 31. In the SBC approach, we apply this algorithm iteratively until all atoms in the structure have been visited and no new regions can be found. Importantly, we also post-process these regions to resolve overlaps between clusters and to exclude non-bonded atoms from the clusters. A sketch of the different steps is shown in Fig. 2, and they can be summarized as follows:

1. Initial cluster detection: In this first step, we iteratively assign a unit-cell type to repeating patterns until all atoms in the system have been examined. This provides an initial guess for the clusters, see step 1 in Fig. 2. A detailed description of the pattern identification procedure can be found in Ref. 31.

2. Merging clusters: If two clusters have large overlaps, this indicates that the same region has been found more than once with slightly different sets of atoms. This can be seen in Fig. 2, where the yellow cluster is contained entirely within the blue cluster. Such overlap may occur due to structural imperfections, since atoms may be assigned to different clusters depending on where the search starts. To deal with this, the algorithm merges these overlapping clusters, see step 2 in Fig. 2.

3. Cluster assignment: After merging, there may still be unresolved smaller overlaps between clusters. This can be seen in Fig. 2, where some atoms are assigned to both the blue and red clusters. These small overlaps occur near the interface between two separate clusters, and unlike for large overlaps, merging is no longer sensible. To ensure that every atom is allocated to a single cluster, we resolve such situations by assigning each shared atom to the cluster that has more atoms in its immediate neighborhood, see step 3 in Fig. 2. In cases where ambiguities still persist, the final assignment is made randomly to avoid introducing bias. Although this choice is random, the result is reproducible because a fixed random seed is used.

4. Connectivity check: The final stage eliminates “dangling” atoms by assessing connectivity through interatomic distances. Atoms separated by distances greater than a typical bond length for their atomic species, as determined by the covalent radii32, are considered not chemically bonded, as illustrated in step 4 of Fig. 2. This step is vital because the periodic search can identify matches that fit the unit-cell pattern without being chemically bonded, which would result in incorrectly identified clusters.
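The four steps above can be sketched in plain Python and NumPy as follows. This is a minimal illustration under stated assumptions, not the MatID implementation: `find_region` is a hypothetical stand-in for the region finder of Ref. 31, the overlap measure and its 0.5 threshold, the neighborhood radius, and the bond-length scale factor of 1.2 are illustrative choices, only atoms owned by a single cluster vote in step 3, and all distances are Cartesian distances without periodic boundary conditions.

```python
import numpy as np


def initial_clusters(n_atoms, find_region):
    """Step 1: seed the hypothetical region finder from every unvisited atom."""
    visited, clusters = set(), []
    for seed_atom in range(n_atoms):
        if seed_atom in visited:
            continue
        region = set(find_region(seed_atom))
        visited |= region | {seed_atom}
        if region:
            clusters.append(region)
    return clusters


def merge_clusters(clusters, overlap_threshold=0.5):
    """Step 2: merge pairs whose overlap, relative to the smaller cluster, is large."""
    merged = [set(c) for c in clusters if c]
    changed = True
    while changed:
        changed = False
        for i in range(len(merged)):
            for j in range(i + 1, len(merged)):
                shared = len(merged[i] & merged[j])
                if shared / min(len(merged[i]), len(merged[j])) > overlap_threshold:
                    other = merged.pop(j)  # j > i, so index i stays valid
                    merged[i] = merged[i] | other
                    changed = True
                    break
            if changed:
                break
    return merged


def resolve_overlaps(clusters, positions, radius, seed=0):
    """Step 3: give each shared atom to the cluster with most nearby members."""
    rng = np.random.default_rng(seed)  # fixed seed -> reproducible tie-breaking
    candidates = {}
    for k, cluster in enumerate(clusters):
        for atom in cluster:
            candidates.setdefault(atom, []).append(k)
    # Atoms claimed by exactly one cluster keep their assignment and act as voters.
    owner = {a: ks[0] for a, ks in candidates.items() if len(ks) == 1}
    resolved = [set() for _ in clusters]
    for atom, k in owner.items():
        resolved[k].add(atom)
    for atom in sorted(a for a, ks in candidates.items() if len(ks) > 1):
        dist = np.linalg.norm(positions - positions[atom], axis=1)
        nearby = [int(j) for j in np.flatnonzero(dist < radius) if int(j) in owner]
        votes = [sum(owner[j] == k for j in nearby) for k in candidates[atom]]
        best = [k for k, v in zip(candidates[atom], votes) if v == max(votes)]
        resolved[int(rng.choice(best))].add(atom)  # random only if tied
    return resolved


def remove_dangling(clusters, positions, radii, scale=1.2):
    """Step 4: drop atoms with no bonded partner inside their own cluster."""
    cleaned = []
    for cluster in clusters:
        idx = np.array(sorted(cluster), dtype=int)
        if idx.size == 0:
            cleaned.append(set())
            continue
        pos = positions[idx]
        dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
        bond_length = scale * (radii[idx][:, None] + radii[idx][None, :])
        bonded = (dist <= bond_length) & ~np.eye(idx.size, dtype=bool)
        cleaned.append({int(a) for a, ok in zip(idx, bonded.any(axis=1)) if ok})
    return cleaned
```

With ASE, per-atom covalent radii for `remove_dangling` could be taken from `ase.data.covalent_radii[atoms.numbers]`.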

After these steps, the remaining clusters are reported back to the user, together with the underlying unit cell from which each cluster is constructed. The returned unit cell is typically a primitive cell of some kind, but can also come in other shapes. For more details, see the Methods section in Ref. 31. To better understand the underlying material, it is common to perform symmetry analysis on the returned unit cell, e.g., to extract the conventional cell or other symmetry properties. The MatID package also provides tools for such analysis based on the Python library spglib33.

Benchmark datasets

To test the SBC algorithm, we first create two reference datasets. The first dataset consists of stacked structures of surfaces and 2D materials built from the unit cells shown in Fig. 3. Each layer has an in-plane size of 12 Å × 12 Å. In the vertical direction, we create all possible combinations of two- and three-layer structures using the five different surface slabs and five different 2D materials. The surface slabs are constructed from bulk materials with a thickness of at least three unit-cell layers, which is sufficient for them to be considered surfaces rather than 2D materials. In total, this amounts to 1100 structures with 3200 layers. The interlayer distances are estimated from the van der Waals radii34,35,36 of the involved chemical species. An example of such a stacked structure is shown on the left-hand side of Fig. 4.
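The stated totals can be checked with a few lines of Python. The sketch assumes, as one reading consistent with the numbers above, that stacks are ordered from bottom to top and that a layer type may appear more than once in a stack; with ten layer types this gives 10² two-layer and 10³ three-layer stacks. The layer labels are hypothetical placeholders for the slabs and 2D materials of Fig. 3.

```python
from itertools import product

# Hypothetical placeholder labels for the five surface slabs and five 2D materials.
LAYER_TYPES = [f"surface_{i}" for i in range(1, 6)] + [f"2d_{i}" for i in range(1, 6)]


def enumerate_stacks(layer_types, stack_sizes=(2, 3)):
    """All ordered stacks (repetition allowed) with the given numbers of layers."""
    return [stack for size in stack_sizes for stack in product(layer_types, repeat=size)]


stacks = enumerate_stacks(LAYER_TYPES)
n_structures = len(stacks)
n_layers = sum(len(stack) for stack in stacks)
print(n_structures, n_layers)  # 1100 3200
```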

The second dataset consists of grain boundaries composed of two differently oriented grains of the same bulk material. They are built from the unit cells shown in the bottom row of Fig. 3. For each material, we created five different grain orientations, resulting in a total of 100 structures. Each grain has a size of 12 Å × 12 Å in the plane of the grain boundary. The minimum thickness of the grains is 12 Å. If the combined thickness of three unit cells exceeds this minimum, the total thickness of these three unit cells then defines the vertical dimension. An example of such a grain boundary is illustrated in Fig. 4 on the right-hand side.
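One way to express the thickness rule for the grains is sketched below. Beyond what is stated above, it assumes that each grain consists of whole unit cells stacked along the boundary normal with repeat length `c` (in Å), so that when three cells are thinner than 12 Å, the smallest number of whole cells reaching 12 Å is used. `grain_thickness` is a hypothetical helper.

```python
import math


def grain_thickness(c, min_thickness=12.0, min_cells=3):
    """Return (number of unit cells, thickness in Å) for one grain.

    c: repeat length of the unit cell along the grain-boundary normal (Å).
    At least `min_cells` cells are used; if they are thinner than
    `min_thickness`, whole cells are added until the minimum is reached.
    """
    n_cells = max(min_cells, math.ceil(min_thickness / c))
    return n_cells, n_cells * c


print(grain_thickness(5.0))  # (3, 15.0): three cells already exceed 12 Å
print(grain_thickness(3.0))  # (4, 12.0): four cells are needed to reach 12 Å
```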

In real-world scenarios, perfectly symmetric positioning of atoms is often unattainable, and the algorithm’s robustness against noise becomes crucial. SBC includes a position tolerance (PT) parameter that defines the radius of the sphere within which the search for an expected atomic position is conducted. To measure how robust SBC is against noise, and to identify optimal choices for the PT, we add controlled noise to the test systems.

The test systems are perturbed by displacing each atom by a fixed length in a uniformly random direction, referred to as noise in the following discussion. The effect of different noise levels is illustrated in Fig. 5, which shows systems with varying degrees of noise and the corresponding clustering outcome. For small noise values, the algorithm handles the perturbations well and captures all cluster atoms. However, as the noise level increases, the algorithm faces challenges in accurately classifying these distorted patterns.

For a fixed position tolerance of PT = 0.4 Å, noise of 0 Å (top) and 0.9 Å (bottom) is added. The larger image shows the input, i.e., the original graphene structure, while the smaller image represents the outcome generated by the SBC algorithm, with atoms belonging to the found cluster shown in red.
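The noise model described above, a displacement of fixed length in a uniformly random direction for every atom, can be sketched with NumPy. Normalizing isotropic Gaussian vectors is a standard way to sample directions uniformly on the unit sphere; the function name and the `rng` argument are illustrative and not part of MatID.

```python
import numpy as np


def add_fixed_length_noise(positions, length, rng=None):
    """Displace every atom by exactly `length` (Å) in a uniformly random direction."""
    rng = np.random.default_rng(rng)
    positions = np.asarray(positions, dtype=float)
    # Isotropic Gaussian vectors, normalized to unit length, are uniform on the sphere.
    directions = rng.normal(size=positions.shape)
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    return positions + length * directions
```

With an ASE `Atoms` object, this could be applied as `atoms.set_positions(add_fixed_length_noise(atoms.get_positions(), 0.9, rng=0))`. ASE's built-in `Atoms.rattle` draws Gaussian displacements per Cartesian component instead, so it does not produce displacements of a fixed length.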

Performance metrics

The performance of the SBC is measured using three key metrics:

1. Accuracy: An atom is correctly clustered if it is assigned to the cluster built from the unit cell that was used to generate it. We define accuracy as the average percentage of correctly clustered atoms over the entire dataset, i.e., it indicates how many of the atoms can be expected to be handled correctly by SBC. Ideally, it should be maximized. This criterion is specific to these datasets, which are designed to evaluate the algorithm’s performance using known unit cells. In real-world scenarios, the unit cell used to generate a system is often unknown.

2. False-negative rate: Average percentage of atoms that are found not to belong to any cluster. Since in the reference data each atom belongs to a cluster, these cases represent scenarios where SBC fails to recognize that an atom is part of a cluster.

3. False-positive rate: Average percentage of atoms assigned either to a wrong cluster or to a cluster that is not supposed to exist. A high false-positive rate indicates a tendency of the algorithm to find incorrect patterns in the structure and is typically more problematic than a high false-negative rate.

Because every atom is either correctly clustered, unassigned, or wrongly assigned, these three metrics sum up to 100%, and the percentages are calculated with respect to the system size. In this work, we assume that the original unit cell is the only correct choice and do not explore alternative interpretations. However, it is worth noting that, for example, hexagonal graphite can be viewed either as being built from a single unit cell with space group 194, or as a structure containing several individual graphene layers.
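For a single system, the three metrics can be computed from per-atom labels as in the sketch below. It assumes that each SBC cluster has already been matched to one of the reference unit-cell labels (or to a label that does not occur in the reference, for a spurious cluster), and that unassigned atoms are marked with `None`; this matching step and the function name are hypothetical. Averaging the returned percentages over all systems gives the dataset-level metrics.

```python
def clustering_metrics(reference, predicted):
    """Accuracy, false-negative and false-positive rates (in %) for one system.

    reference[i]: label of the unit cell that generated atom i.
    predicted[i]: reference label matched to atom i's SBC cluster, or None
                  if atom i was not assigned to any cluster.
    """
    n = len(reference)
    correct = sum(p is not None and p == r for r, p in zip(reference, predicted))
    false_negative = sum(p is None for p in predicted)
    # Every remaining atom sits in a wrong or non-existent cluster.
    false_positive = n - correct - false_negative
    return {
        "accuracy": 100.0 * correct / n,
        "false_negative_rate": 100.0 * false_negative / n,
        "false_positive_rate": 100.0 * false_positive / n,
    }


print(clustering_metrics(["A", "A", "B", "B"], ["A", None, "A", "B"]))
# {'accuracy': 50.0, 'false_negative_rate': 25.0, 'false_positive_rate': 25.0}
```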

Results

Stacked 2D structures

Figure 6 shows, for the dataset of stacked 2D structures, how the three metrics (accuracy, false-positive rate, and false-negative rate) evolve with increasing noise level for different PT values. The average metrics are displayed together with their standard deviation over 10 different runs, in which the clustering was started at different random positions. The results demonstrate that SBC achieves near 100% accuracy for low-noise systems, i.e., when the PT is set to a correspondingly small value, and the small standard deviation shows that the starting location does not significantly affect the clustering results. Since many real-world structures, such as those that have undergone geometry optimization, do not exhibit such perfect symmetry, we focus here on the regions where additional noise has been added, and on how the choice of the PT affects the accuracy. The false-negative rate converges to zero as the PT is increased, regardless of how much noise is present. However, this is balanced by an increase in the false-positive rate, indicating that the algorithm starts to find non-existent patterns when the tolerance is too large.

Figure 7 displays the accuracy-weighted average PT as a function of the noise coefficient, as measured by the displacement length in Å. Using the accuracy-weighted average gives more robust metrics compared to using the PT with maximum accuracy, since there are often multiple PT values that produce very similar results. This figure can help to determine a good PT, if an estimate of the noise can be made. It shows an approximately linear relationship between the tolerance value producing good accuracy and the expected noise level. The linear fit is y = 0.66x + 0.44 with an R² value of 0.99.
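The accuracy-weighted average PT and the linear fit can be reproduced along the lines of the following sketch. The weighting (sum of accuracy times PT, divided by the sum of accuracies) is our reading of "accuracy-weighted average"; the helper names are hypothetical, and the default slope and intercept of `suggest_pt` are the fit values quoted above for the stacked 2D structures.

```python
import numpy as np


def accuracy_weighted_pt(pt_values, accuracies):
    """Average of the PT values, each weighted by the accuracy it achieved."""
    pt = np.asarray(pt_values, dtype=float)
    acc = np.asarray(accuracies, dtype=float)
    return float(np.sum(acc * pt) / np.sum(acc))


def fit_pt_model(noise_levels, weighted_pts):
    """Least-squares line PT = slope * noise + intercept, plus its R² value."""
    x = np.asarray(noise_levels, dtype=float)
    y = np.asarray(weighted_pts, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    r2 = 1.0 - np.sum(residuals**2) / np.sum((y - y.mean()) ** 2)
    return float(slope), float(intercept), float(r2)


def suggest_pt(noise, slope=0.66, intercept=0.44):
    """Estimate a PT (Å) from an expected noise level (Å) with a linear fit."""
    return slope * noise + intercept


print(round(suggest_pt(0.18), 2))  # 0.56
```

For the grain-boundary fit, `suggest_pt(0.16, slope=0.67, intercept=0.43)` gives about 0.54 Å.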

Grain boundaries

Figures 8 and 9 show the same properties as Figs. 6 and 7, but for the grain-boundary dataset. The linear fit in Fig. 9 is y = 0.67x + 0.43 with an R² value of 0.99. Although there is a small difference in the linear fits, the general trend in both datasets is very similar, suggesting that estimates for the optimal PT may be transferable between datasets. In the grain-boundary example, the standard deviations are larger than for the stacked 2D structures. This shows that the point at which the clustering starts has a bigger impact, although the effects are still relatively small.

The linear fits for both datasets have a non-zero offset and a slope that is below 1, indicating that simply setting the PT to the same value as the expected noise may not yield optimal results. This can be partially explained by the Gaussian-like peak in false-positives, which appears close to the region where the noise coefficients are slightly above the position-tolerance values.

Figure 10 illustrates the two primary failure modes of the SBC method: false negatives and false positives. These examples underscore the challenge of determining the optimal PT in SBC. Each panel shows a scenario in which the SBC method fails to produce the expected result. The larger image displays the original system, while the inset in the bottom right corner depicts the clustering result, with unclustered atoms marked in gray. On the left, in a misclassified stacked structure, the lower layers are recognized and classified accurately, but the topmost layer is not. Due to a too small PT, one atom is incorrectly identified as an outlier (gray, false negative). Conversely, the example on the right illustrates a partially misclassified grain boundary, where a too large PT causes the clustering process to assign several atoms to the wrong cluster (false positive).

Larger images show the original systems, the insets the results of the clustering. Atoms assigned to a wrong cluster are encircled. In the example on the left, one atom in the top layer is not assigned to any cluster (false negative). In the example on the right, atoms at the grain boundary are assigned to the wrong cluster (false positive).

Real-world data

Two example structures from real-world data are examined to assess the applicability of the SBC algorithm. Both structures come from simulations where the structure has undergone geometry optimization. The first example is a three-layer stacked system with the chemical formula Ba32In8La8O116Sn22Ti8, extracted from the NOMAD dataset https://doi.org/10.17172/NOMAD/2022.01.11-137. The second example is a tungsten grain boundary from the NOMAD dataset https://doi.org/10.17172/NOMAD/2024.03.20-138, consisting of 258 W atoms.

In order to estimate a good PT for these structures, we need to quantify the noise that is present in the final geometries. This will allow us to use the fits from Figs. 7 and 9 to identify a PT value giving optimal clustering results. We start out by comparing the final optimized structure to the initial structure built from perfectly symmetric components. This initial structure may be available as the first step of the geometry optimization, or may be manually recreated. Directly calculating the displacement of atoms between these two geometries is not an effective measure of noise, as this method would incorrectly interpret the translation of the entire structure as noise. Instead, we calculate how much the six nearest neighbors of each atom have been displaced on average. This gives a reasonable localized measure and can be used to estimate the noise of individual atoms by multiplying with a factor of 3/4 as shown in the Supplementary Information. Figures 11 and 12 show how the noise estimated in this way is distributed in both structures.
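A minimal NumPy sketch of this neighbor-based noise estimate is shown below. It rests on our reading of the procedure, which may differ in detail from the implementation used here: the six nearest neighbors are taken from the initial structure, each neighbor's displacement is measured relative to the displacement of the central atom (so that a rigid translation of the whole structure contributes nothing), and periodic boundary conditions are ignored. The factor 3/4 is consistent with the fixed-length noise model: if two atoms are displaced by the same length a in independent, uniformly random directions, the expected length of their relative displacement is 4a/3. The function name is hypothetical.

```python
import numpy as np


def estimate_atomic_noise(initial, final, n_neighbors=6, factor=0.75):
    """Per-atom noise estimate (Å) from an initial and a relaxed geometry.

    For each atom, average the length of its nearest neighbors' displacements
    relative to its own displacement, then scale by `factor`.
    """
    initial = np.asarray(initial, dtype=float)
    final = np.asarray(final, dtype=float)
    # Nearest neighbors are determined in the initial (ideal) structure.
    dist = np.linalg.norm(initial[:, None, :] - initial[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)
    neighbors = np.argsort(dist, axis=1)[:, :n_neighbors]
    displacement = final - initial
    # Relative displacements cancel any rigid translation of the structure.
    relative = displacement[neighbors] - displacement[:, None, :]
    return factor * np.linalg.norm(relative, axis=-1).mean(axis=1)
```

Taking the mean of the returned per-atom values gives a single noise estimate for a structure.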

As the noise distributions show, realistic systems tend not to have uniform noise, whereas SBC is currently designed to use a single position tolerance value throughout the clustering process. For this analysis, we simply use the mean noise. It should be noted that a detailed noise analysis often cannot be performed for each individual structure, either for computational reasons or because the starting configuration is unknown. In these cases, one can often still produce meaningful clustering with SBC by using the default PT value, or by trying a range of PT values to identify the one that yields the most consistent and reliable results.

The mean noise for the stacked system is 0.18 Å, and with the linear fit from Fig. 7 we estimate the ideal PT to be PT = 0.66 × 0.18 Å + 0.44 Å ≈ 0.56 Å. Figure 13 shows the result of the clustering with this estimate. The algorithm successfully identifies two layers but fails to correctly identify the middle layer built from cubic lanthanum indium oxide (LaInO₃), where the noise is much higher than the mean value. An incorrect unit cell is identified for this middle layer (false positive), which also leads to some of the atoms in that layer not being assigned to any cluster (false negative). Symmetry analysis of the unit cells found by SBC reveals that the two successfully identified layers consist of cubic barium titanate (BaTiO₃) and cubic barium stannate (BaSnO₃), both with space group 221.

Atoms are colored according to their atom species (top panel and insets) or according to their cluster affiliation, using an estimated PT of 0.56 Å (bottom panel). The insets below the bottom panel show the identified conventional cells. The SBC algorithm correctly identifies cubic barium stannate (BaSnO₃) on the left and cubic barium titanate (BaTiO₃) on the right, but identifies the wrong unit cell and misses some atoms in the middle layer.

The mean noise for the grain boundary is 0.16 Å, and with the linear fit from Fig. 9 we estimate the ideal PT to be PT = 0.67 × 0.16 Å + 0.43 Å ≈ 0.54 Å. Figure 14 shows the result of the clustering with this estimate. The algorithm accurately identifies the two grains, but near the interface it is unclear, even upon human inspection, whether the atoms with the highest noise can be attributed to a specific cluster. By performing symmetry analysis on the unit cells identified by SBC, we find that both grains are built from bcc tungsten (space group 229).

We note that in both examples, the geometry optimization produces displacements from the original ideal structures, especially near the interface where two different clusters meet. Atoms displaced from their expected positions by more than the selected PT often end up unassigned after the SBC procedure and may even be difficult for a human to classify.

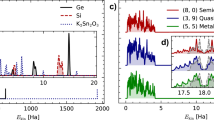

Comparison with machine-learning-based classification

To assess how the SBC compares with machine-learning-based approaches, we compare it with ARISE, which is a crystal-structure identification method based on Bayesian deep learning22. This method has been trained on more than 100 crystal structures and can identify local regions in a structure or provide a global classification for a monocrystalline structure. Notably, it also returns the prediction confidence as a percentage, which can help in interpreting the results. It should be noted that unlike SBC, ARISE does not consider the atomic species for classification, but only the geometry.

We use two example structures, namely fcc Cu (space group 225) and α-NaFeO2 (space group 166), shown in Fig. 15. The fcc structure is widely prevalent and is included in the ARISE training set, while α-NaFeO2 is chosen to represent a more complex unit cell and is notably absent from the ARISE training set. For SBC, we report the space group number of the largest identified cluster and the percentage of the entire structure that the cluster occupies. For ARISE, we report the most probable space group number along with its certainty. To also compare how the two methods deal with noise, we show the results for different noise levels. Both methods are used with their built-in default settings, and the results of the comparison are shown in Table 1.

While these two methods are not directly comparable, the inconsistent results generated by ARISE for α-NaFeO2 underscore a key limitation of machine-learning-based approaches, namely their limited ability to generalize beyond the training set. The fcc structure, which was included in the ARISE training set, is handled well by both methods, even in the presence of noise.

Computational performance

The scalability of the algorithm is assessed by measuring the total elapsed computational time and peak memory usage for finding all clusters. The reported peak memory usage includes only allocations made by the SBC algorithm; any initial memory allocations from the Python interpreter and the loaded dependencies are ignored. The measurements were performed with the default settings on a laptop with a 6-core, 2.6 GHz processor and 16 GB of RAM, which is fairly typical for modern hardware. Although the SBC algorithm itself does not explicitly use any form of parallelization, it relies heavily on the NumPy library39 for linear algebra, which can use multiple cores.

The measurements are made with two types of systems, crystalline (ordered) and amorphous (disordered), which represent two extremes, with most real-life structures falling somewhere in between. Both types of systems are constructed using a cubic simulation cell with periodic boundary conditions and the same density. Using the same density is important for a fair comparison, because the effective search space the algorithm has to go through is determined by how many atoms lie within a fixed search radius, which can extend beyond the periodic boundaries.

The accuracy of the clustering during these performance tests is not tracked in detail, as accuracy is analyzed in the preceding sections on stacked 2D structures and grain boundaries. The accuracy is assumed to be independent of the system size. This assumption is supported by checking that in the ordered systems all atoms belong to the same cluster constructed from the correct conventional cell, resulting in 100% accuracy. In disordered systems, it is not generally possible to assert specific clustering results because of spurious false positives that naturally arise from the random structures.

The result of the computational performance analysis is shown in Fig. 16. Both the elapsed time and the peak memory usage show non-linear behavior for both types of systems, with disordered structures having worse scaling behavior. The non-linear scaling is partially explained by the calculation of pairwise atomic distances, the computation and storage of which scale as \(\mathcal{O}(n^2)\) with system size n. In disordered structures, SBC has to attempt to initialize the cluster search from multiple starting points, which explains the worse scaling behavior. A detailed analysis of the analytical computational and memory complexity is not performed here, but will be part of future optimization efforts.

Overall, the results show that SBC is not yet scalable to very large structures due to the non-linear scaling of both memory and computational time. The scaling behavior is expected to improve as more efficient methods for calculating and storing the interatomic distances are implemented in the codebase and as the computationally intensive bottlenecks of the program are moved to a precompiled C++ extension.
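To make the scaling argument concrete: a dense float64 matrix of all pairwise distances for n atoms occupies 8n² bytes, which is already 0.8 GB for 10 000 atoms. A common way to avoid this is a cell list, which bins atoms into cubes with edge length equal to the cutoff and compares only atoms in neighboring cells, so that at fixed density the work and memory grow roughly linearly with n. The sketch below illustrates this general technique for a non-periodic structure; it is not how MatID currently computes distances, and the function name is hypothetical.

```python
from collections import defaultdict
from itertools import product

import numpy as np


def neighbor_pairs(positions, cutoff):
    """Return all index pairs (i, j), i < j, within `cutoff` of each other (no PBC)."""
    pos = np.asarray(positions, dtype=float)
    cells = defaultdict(list)
    # Bin atoms into cubic cells whose edge length equals the cutoff.
    for i, key in enumerate(np.floor(pos / cutoff).astype(int)):
        cells[tuple(int(k) for k in key)].append(i)
    pairs = set()
    for key, members in cells.items():
        # Any partner within the cutoff lies in this cell or one of its 26 neighbors.
        for offset in product((-1, 0, 1), repeat=3):
            others = cells.get(tuple(k + o for k, o in zip(key, offset)), [])
            for i in members:
                for j in others:
                    if i < j and np.linalg.norm(pos[i] - pos[j]) <= cutoff:
                        pairs.add((i, j))
    return pairs
```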

Discussion

The symmetry-based clustering algorithm represents an advancement in identifying and classifying complex atomistic structures. By leveraging symmetry properties, the SBC effectively identifies and categorizes distinct components within these structures. Through evaluation metrics including accuracy, false-positive rate, and false-negative rate, we demonstrate the algorithm’s effectiveness on two distinct datasets: one consisting of stacked surfaces and the other of grain boundaries. Our results showcase the high accuracy and robustness of the SBC across various noise levels and position tolerances, particularly in handling structures with noise and defects. As such, the SBC algorithm holds significant implications for materials science and related fields. By providing precise labeling of the components within arbitrary atomistic structures, this method enhances the value of structural data. Researchers can now efficiently target specific structures in search queries, streamlining the exploration of vast materials datasets. Furthermore, the automated approach offers several advantages over manual labeling. It ensures reproducibility and delivers consistent results, eliminating the inherent subjectivity that may arise from human interpretation. Notably, this method stands out from existing approaches by its versatility. It is not confined to a particular domain of materials and does not rely on prescribed search patterns or extensive training. This adaptability makes it a valuable tool across diverse materials research applications.

Despite the significant advances made with the new SBC algorithm, there are several avenues for improvement and future research. While SBC demonstrates strong performance on synthetic datasets, its evaluation on diverse real-world materials remains limited. Expanding the dataset used for validation and testing could help to assess its generalizability and applicability to real-world scenarios. A notable challenge is the selection of the position tolerance (PT) parameter. Because the optimal PT value can vary significantly between different structures, and even locally within a single structure, parameter selection can be challenging for users. The use of a single PT value across an entire structure does not account for variable noise distributions within systems, and regions with higher noise or imperfections may suffer from misclassification. Developing automated, adaptive mechanisms for PT determination could enhance both accuracy and usability, especially for large, diverse datasets. Additionally, the algorithm’s computational time and memory usage scale non-linearly with system size, limiting its use in large-scale or high-throughput studies. Atomistic simulations of surfaces, interfaces, heterostructures, etc., may easily involve thousands of atoms or more, and ongoing efforts are directed at enhancing the algorithm’s scalability to enable the study of such larger systems.

In conclusion, the new clustering algorithm represents a significant advancement in the identification and classification of structures in materials data. It demonstrates high accuracy while also highlighting challenges in handling noise and disordered structures. This study provides valuable insights into the performance and limitations of the algorithm, guiding future research directions to enhance its robustness, scalability, and applicability. By addressing these challenges, the SBC algorithm holds great potential to contribute to accelerated materials discovery and design, facilitating the development of novel materials with tailored properties.

Data availability

The structure data and topologies used in the analysis of 2D stacked structures and grain boundaries are available as .xyz and JSON files on Zenodo under DOI 10.5281/zenodo.14604736.

References

Jain, A. et al. The Materials Project: A materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Scheidgen, M. et al. NOMAD: A distributed web-based platform for managing materials science research data. J. Open Source Softw. 8, 5388 (2023).

Curtarolo, S. et al. AFLOWLIB.ORG: A distributed materials properties repository from high-throughput ab initio calculations. Comput. Mater. Sci. 58, 227–235 (2012).

Materials Cloud (2023). https://www.materialscloud.org/home.

Open Materials Database (2023). https://openmaterialsdb.se/.

NIMS Materials Database (MatNavi) (2023). https://mits.nims.go.jp/.

NREL MatDB (2023). https://materials.nrel.gov/.

Computational Materials Repository (2023). https://cmr.fysik.dtu.dk/.

Theoretical Crystallography Open Database (2023). http://www.crystallography.net/tcod/.

The Electronic Structure Project (2023). http://gurka.fysik.uu.se/ESP/.

Computational 2D Materials Database (C2DB) (2023). https://cmr.fysik.dtu.dk/c2db/c2db.html.

Larsen, A. H. et al. The atomic simulation environment – a Python library for working with atoms. J. Phys.: Condens. Matter 29, 273002 (2017).

Ong, S. P. et al. Python materials genomics (pymatgen): A robust, open-source Python library for materials analysis. Comput. Mater. Sci. 68, 314–319 (2013).

Stukowski, A. & Albe, K. Dislocation detection algorithm for atomistic simulations. Model. Simul. Mater. Sci. Eng. 18, 025016 (2010).

Ackland, G. J. & Jones, A. P. Applications of local crystal structure measures in experiment and simulation. Phys. Rev. B 73, 054104 (2006).

Stukowski, A. Structure identification methods for atomistic simulations of crystalline materials. Model. Simul. Mater. Sci. Eng. 20, 045021 (2012).

Stukowski, A. Dislocation Analysis Tool for Atomistic Simulations, 1545–1558 (Springer International Publishing, Cham, 2020). https://doi.org/10.1007/978-3-319-44677-6_20.

Goryaeva, A. M. et al. Reinforcing materials modelling by encoding the structures of defects in crystalline solids into distortion scores. Nat. Commun. 11, 4691 (2020).

DeFever, R. S., Targonski, C., Hall, S. W., Smith, M. C. & Sarupria, S. A generalized deep learning approach for local structure identification in molecular simulations. Chem. Sci. 10, 7503–7515 (2019).

Ashton, M., Paul, J., Sinnott, S. B. & Hennig, R. G. Topology-scaling identification of layered solids and stable exfoliated 2D materials. Phys. Rev. Lett. 118, 106101 (2017).

Ziletti, A., Kumar, D., Scheffler, M. & Ghiringhelli, L. M. Insightful classification of crystal structures using deep learning. Nat. Commun. 9, 2775 (2018).

Leitherer, A., Ziletti, A. & Ghiringhelli, L. M. Robust recognition and exploratory analysis of crystal structures via Bayesian deep learning. Nat. Commun. 12, 6234 (2021).

Azimi, S. M., Britz, D., Engstler, M., Fritz, M. & Mücklich, F. Advanced steel microstructural classification by deep learning methods. Sci. Rep. 8, 2128 (2018).

Park, W. B. et al. Classification of crystal structure using a convolutional neural network. IUCrJ 4, 486–494 (2017).

Banik, S. et al. CEGANN: Crystal edge graph attention neural network for multiscale classification of materials environment. npj Comput. Mater. 9, 23 (2023).

Biswas, M. et al. Microstructural segmentation using a union of attention guided U-Net models with different color transformed images. Sci. Rep. 13, 5737 (2023).

Nikolić, F., Štajduhar, I. & Čanađija, M. Casting defects detection in aluminum alloys using deep learning: a classification approach. Int. J. Metalcast. 17, 386–398 (2023).

Behara, S., Poonawala, T. & Thomas, T. Crystal structure classification in ABO3 perovskites via machine learning. Comput. Mater. Sci. 188, 110191 (2021).

Wei, J., Wang, C., Zhang, T., Dai, C.-M. & Chen, S. High-throughput screening and classification of layered di-metal chalcogenides. Nanoscale 11, 13924–13933 (2019).

Inorganic Crystal Structure Database – ICSD (2024). https://www.fiz-karlsruhe.de/en/produkte-und-dienstleistungen/inorganic-crystal-structure-database-icsd.

Himanen, L., Rinke, P. & Foster, A. S. Materials structure genealogy and high-throughput topological classification of surfaces and 2D materials. npj Comput. Mater. 4, 52 (2018).

Cordero, B. et al. Covalent radii revisited. Dalton Trans. 2832–2838 (2008). https://doi.org/10.1039/B801115J.

Togo, A. & Tanaka, I. Spglib: a software library for crystal symmetry search. https://github.com/spglib/spglib (2018). arXiv:1808.01590.

Bondi, A. van der Waals volumes and radii. J. Phys. Chem. 68, 441–451 (1964).

Mantina, M., Chamberlin, A. C., Valero, R., Cramer, C. J. & Truhlar, D. G. Consistent van der Waals radii for the whole main group. J. Phys. Chem. A 113, 5806–5812 (2009).

Batsanov, S. S. Van der Waals radii of elements. Inorg. Mater. 37, 871–885 (2001).

Fang, L., Aggoune, W., Ren, W. & Draxl, C. How a ferroelectric layer can tune a two-dimensional electron gas at the interface of LaInO3 and BaSnO3: A first-principles study. ACS Appl. Mater. Interfaces 15, 11314–11323 (2023).

Scheiber, D., Razumovskiy, V. I., Puschnig, P., Pippan, R. & Romaner, L. Ab initio description of segregation and cohesion of grain boundaries in W–25at.% Re alloys. Acta Mater. 88, 180–189 (2015).

Harris, C. R. et al. Array programming with NumPy. Nature 585, 357–362 (2020).

Acknowledgements

This work received funding from the German Research Foundation (DFG) through the NFDI consortium FAIRmat, project 460197019. We thank Nathan Daelman and Ahmed Mansour for valuable feedback on the manuscript. We acknowledge support by the Open Access Publication Fund of Humboldt-Universität zu Berlin.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

T.D. generated the datasets, conducted the analysis, and generated the figures. L.H. contributed to and supervised the development of the algorithm. M.S. and C.D. provided supervision throughout the project. All authors contributed to the writing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

41524_2024_1498_MOESM1_ESM.pdf: Supplementary Information to: Automated identification of bulk structures, two-dimensional materials, and interfaces using symmetry-based clustering

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Denell, T., Himanen, L., Scheidgen, M. et al. Automated identification of bulk structures, two-dimensional materials, and interfaces using symmetry-based clustering. npj Comput Mater 11, 25 (2025). https://doi.org/10.1038/s41524-024-01498-x

DOI: https://doi.org/10.1038/s41524-024-01498-x