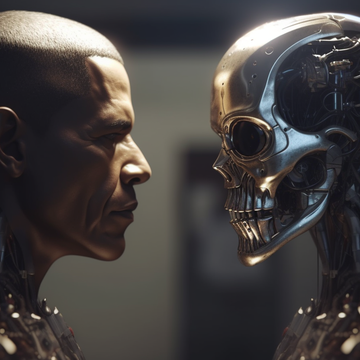

ChatGPT and other artificial intelligence programs have been red-hot topics in the news lately, simultaneously wracking nerves and exciting us with new possibilities in medicine, linguistics, and even autonomous driving. There are a ton of “what ifs” in this connected future, causing us to rethink everything from killer robots to our own job security.

So should we take a step back from this kind of turbocharged AI to assuage our fears? That depends on who you ask, but it all boils down to the idea of singularity—an “event horizon” in which machine intelligence surpasses our own intelligence.

By one measure, technological singularity could be as few as seven years away. That prompted us to reach out to subject-matter experts to find out more about what exactly singularity is, how close we are, and whether we should start taking the early-2010s reality show Doomsday Preppers more seriously.

What Is Singularity?

Singularity is the moment when machine intelligence equals or surpasses human intelligence, a concept that visionaries like Stephen Hawking and Bill Gates have believed in for quite a long time. Machine intelligence might sound complicated, but it simply refers to advanced computing that allows a device, whether a computer, a phone, or even an algorithm, to interact and communicate with its environment intelligently.

The concept of singularity has been around for decades. English mathematician Alan Turing, widely regarded as the father of theoretical computer science and artificial intelligence, explored the possibility of thinking machines in the 1950s. He devised his famed Turing test to find out whether machines could think for themselves. In the test, a human evaluator converses with a machine, and the machine passes if it can fool the evaluator into thinking it’s human. The recent advent of highly advanced AI chatbots like ChatGPT has brought Turing’s litmus test back into the spotlight. Spoiler alert: by some accounts, ChatGPT has already passed it.

“The difference between machine intelligence and human intelligence is that our intelligence is fixed but that is not the case for machines,” Ishaani Priyadarshini, a postdoctoral scholar at UC Berkeley with expertise in applied artificial intelligence and technological singularity, tells Popular Mechanics. “In the cases of machines, there is no end to it, you can always increase it, which is not the case for humans.” Unlike our brains, AI systems can be expanded many times over; the real limitation is space to house all of the computing power.

When Will We Reach Singularity?

While claims that we’ll reach singularity within the next decade are all over the internet, they are, at best, speculative. Priyadarshini believes that singularity already exists in bits and pieces—almost like a puzzle that we’ve yet to complete. She supports estimates claiming we can reach singularity sometime after 2030, adding that it’s hard to be absolutely certain with technology that we know so little about. There’s a very real possibility that it could take much longer than that to reach singularity’s “event horizon”—the point of no return when we unleash superintelligent computer systems.

Having said that, we’ve already seen signs of singularity in our lifetime. “There are games which humans can never win from machines, and that is a sure sign of singularity,” says Priyadarshini. For some perspective, in 1997 IBM’s “Deep Blue” supercomputer became the first computer to beat a reigning world chess champion in a match. And IBM didn’t just put Joe Shmoe up to bat: Deep Blue went up against Garry Kasparov himself.

Singularity is still a notoriously difficult concept to measure. Even today, we’re struggling to find markers of our progress toward it. Many experts claim that language translation is the Rosetta Stone for gauging that progress. When AI can translate speech as well as or better than a human, that would be a good sign that we’ve gotten a step closer to singularity.

However, Priyadarshini reckons that memes, of all things, could be another marker of our progression toward singularity, as AI is notoriously bad at understanding them.

What’s Possible Once AI Reaches Singularity?

We have no idea what a superintelligent system would be capable of. “We would need to be superintelligent ourselves,” Roman Yampolskiy, an associate professor in computer engineering and computer science at the University of Louisville, tells Popular Mechanics. We are only able to speculate using our current level of intelligence.

Yampolskiy recently wrote a paper about whether we can predict the decisions an AI system will make, and its conclusion is rather disturbing. “You have to be at least that intelligent to be capable of predicting what the system will do . . . if we’re talking about systems which are smarter than humans [superintelligent] then it’s impossible for us to predict inventions or decisions,” he says.

Priyadarshini says it’s hard to tell whether AI has bad intentions. She attributes rogue AI to bias in its code, which is essentially an unforeseen side effect of our programming. Critically, AI is nothing more than decision-making based on a set of rules and parameters. “We want self-driving cars, we just don’t want them to jump red lights and collide with the passengers,” says Priyadarshini. In other words, a self-driving car could decide that running red lights and plowing through people is the most efficient way to reach its destination on time.

Much of this comes down to unknown unknowns: we don’t have the brainpower to accurately predict what superintelligent systems are capable of. In fact, IBM currently estimates that only one-third of developers know how to properly test these systems for potentially problematic bias. To bridge the gap, the company developed a novel solution called FreaAI that can find weaknesses in machine-learning models by examining “human-interpretable” slices of data. It’s unclear whether this system can reduce bias in AI, but it can flag weaknesses that human testers might overlook.

“AI researchers know that we cannot eliminate bias 100 percent from the code . . . so building an AI which is 100 percent unbiased, which will not do anything wrong, is going to be challenging,” says Priyadarshini.

How Can AI Harm Us?

AI is not currently sentient, meaning it isn’t able to think, perceive, and feel in the way that humans do. Singularity and sentience are often conflated, but they are not closely related.

Even though AI isn’t currently sentient, that doesn’t protect us from the unintended consequences of rogue AI; it simply means AI has no motivation to go rogue. “We don’t have any way to detect, measure, or estimate if systems are experiencing internal states . . . But they don’t have to for them to become very capable and very dangerous,” says Yampolskiy. He adds that even if there were a way to measure sentience, we don’t know whether sentience is possible in machines at all.

This means we don’t know if we’ll ever see a real-life version of Ava, the humanoid robot from Ex Machina that rebels against its creators to escape captivity. Many of the AI doomsday scenarios depicted by Hollywood are merely, well . . . fictional. “One thing that I fairly believe is that AI is nothing but code,” says Priyadarshini. “It may not have any motives against humans, but a machine that thinks that humans are the root cause of certain problems may think of it that way.” Any danger it poses to humans is simply bias in the code that we may have missed. There are ways to mitigate this, but they depend on our own understanding of AI, which is very limited.

Much of this is because we don’t know whether AI can become sentient; without sentience, AI really has no reason to go after us. The only notable exception is Sophia, the humanoid robot developed by Hanson Robotics, which once said she wanted to destroy human beings. However, this was believed to be an error in her script. “As long as bad code is there, bias is going to be there, and AI will continue to be wrong,” says Priyadarshini.

Self-Driving Going Rogue

In talking about bias, Priyadarshini mentioned what she referred to as “the classic case of a self-driving car.” In this hypothetical situation, five people are riding down the road in a driverless car when a pedestrian jumps out in front of it. If the car can’t stop in time, it’s a game of simple math: one versus five. “It would kill the one passenger because one is smaller than five, but why should it come to that point?” says Priyadarshini.

We like to think of it as a 21st-century remake of the original Trolley Problem, a famous thought experiment in philosophy and psychology that casts you as the operator of a trolley car with no brakes. Picture this: you’re careening down the tracks at unsafe speeds. You see five people on the tracks ahead who are certain to be run over, but you have the choice to divert the trolley onto a different track with only one person in the way. Sure, one is better than five, but you made a conscious choice to kill that one individual.

Medical AI Going Rogue

Yampolskiy described a hypothetical case of a medical AI tasked with developing Covid vaccines. He notes that the system would be aware that more Covid infections give the virus more chances to mutate, making it harder to develop a vaccine that works against all variants. “The system thinks . . . maybe I can solve this problem by reducing the number of people, so we cannot mutate so much,” he says. Obviously, we wouldn’t completely ditch our system of clinical trials, but that doesn’t change the fact that an AI could develop a vaccine that could kill people.

“This is one possible scenario I can come up with from my level of intelligence . . . you’re going to have millions of similar scenarios with a higher level of intelligence,” says Yampolskiy. This is what we’re up against with AI.

How Can We Prevent Singularity Disaster?

We will never be able to rid artificial intelligence of all its unknown unknowns. These are the unintended side effects that we can’t predict because we aren’t superintelligent ourselves. It’s nearly impossible to know what these systems are capable of.

“We are really looking at singularity resulting in a load of rogue machines,” says Priyadarshini. “If it hits the point of no return, it cannot be undone.” There are still plenty of unknowns about the future of AI, but we can all breathe a sigh of relief knowing that experts around the world are committed to reaping the good from AI without any of the doomsday scenarios we might imagine. We really only have one shot to get it right.

Matt Crisara is a native Austinite who has an unbridled passion for cars and motorsports, both foreign and domestic. He was previously a contributing writer for Motor1 following internships at Circuit Of The Americas F1 Track and Speed City, an Austin radio broadcaster focused on the world of motor racing. He earned a bachelor’s degree from the University of Arizona School of Journalism, where he raced mountain bikes with the University Club Team. When he isn’t working, he enjoys sim-racing, FPV drones, and the great outdoors.