Abstract

The World Health Organization predicts that by 2030, depression will be the most common mental disorder, significantly affecting individuals, families, and society. Speech is a sensitive indicator: physiological and cognitive changes produce noticeable acoustic changes, which makes speech a crucial behavioral marker for detecting depression. However, existing studies often overlook the need to separate speaker-related and emotion-related features in speech when recognizing depression. To tackle this challenge, we propose a Mixture-of-Experts (MoE) method that integrates speaker-related and emotion-related features for depression recognition. Our approach first pre-trains a Time Delay Neural Network (TDNN) on a large-scale speaker recognition dataset as a speaker-related feature extractor and, in parallel, pre-trains a speaker’s emotion-related feature extractor on a speech emotion dataset. We then apply transfer learning to extract both kinds of features from a depression dataset and fuse them. Finally, a multi-source domain adaptation algorithm trains the MoE model for depression recognition. Experimental results show that our method achieves 74.3% accuracy on a self-built Chinese localized depression dataset and a mean absolute error (MAE) of 6.32 on the AVEC2014 dataset, outperforming state-of-the-art deep learning methods that use speech features. Our approach also performs well on both Chinese and English speech datasets, suggesting that it is robust to cultural and linguistic variation.


Introduction

As societal competition intensifies, individuals increasingly face stressors related to life, education, emotions, and employment, contributing to a rise in psychological problems. If left unaddressed, these problems can escalate into severe mood disorders, of which depression is among the most prevalent. The World Health Organization forecasts that by 2030, depression is likely to become the leading mental health condition, posing significant challenges for individuals, families, and society as a whole1. In severe cases, it is associated with an increased risk of suicide. Early detection and intervention are therefore crucial for effective treatment. However, many patients do not receive a timely diagnosis because there are far too few clinicians relative to the number of patients worldwide. Currently, the diagnosis of depression relies largely on patient self-reports, the clinical judgment of psychiatrists, and standardized assessment tools2. These approaches are inherently subjective and limited3, which makes them susceptible to bias4; for example, patients may unintentionally downplay or conceal their symptoms, leading to inaccurate diagnoses. This situation highlights the urgent need for accurate, practical, and objective quantitative indicators to help clinicians diagnose depression more precisely5. Closing this gap could improve clinical outcomes and the overall quality of mental health care.

Speech reflects an individual’s unique identity and conveys many emotional nuances. In recent years, speech has attracted growing interest as a tool for automatic depression prediction5. Depressed individuals often display distinct speech patterns that differentiate them from non-depressed individuals, prompting researchers to extract a variety of acoustic features to improve depression detection6,7. However, the vocal anatomy of some non-depressed individuals can produce speech that closely resembles the characteristics typically associated with depression8. In addition, a speaker’s voice develops unique traits shaped by environmental factors and interpersonal interactions9, and these traits may likewise resemble features of depressive speech. Moreover, research indicates that the emotional experiences of non-depressed individuals, particularly during fleeting moments of low mood or sadness, can closely resemble those of clinical depression: the voices of depressed individuals often sound like those of people who perceive themselves as sad or unfortunate, typically with a somber tone. Because non-verbal vocal expressions of emotion vary considerably across individuals and contexts10, it is crucial to isolate vocal features specifically linked to depression from those driven by personal traits and transient emotional states. Addressing this challenge could substantially improve the identification of vocal characteristics unique to depressed patients, yet it remains insufficiently explored in current deep learning frameworks for speech-based depression recognition.

Recent advances in deep learning have prompted a significant shift in depression classification and prediction from traditional machine learning methods to deep learning approaches11,12. However, privacy concerns surrounding sensitive depression-related data limit the availability of adequate datasets, and the resulting small sample sizes can cause deep models to overfit. Transfer learning from models pre-trained on large datasets has emerged as an effective strategy for mitigating overfitting when data are limited13. A significant issue, however, is that pre-training datasets often differ from those used in depression recognition. Consequently, feature sets or models that perform well on one dataset may transfer poorly to others, particularly when the data were generated differently14. As a result, cross-corpus evaluations of depression recognition frequently yield performance close to random chance on mismatched datasets15,16,17. This issue has not been thoroughly investigated in existing deep learning models for speech-based depression recognition. Addressing it could improve the ability of deep learning approaches to identify and classify depression accurately across datasets.

To tackle these challenges, this paper presents a Mixture of Experts (MoE)-based approach that jointly exploits speaker individuality and emotional features for depression recognition. We evaluated the method on a self-constructed localized Chinese depression dataset and the publicly available AVEC2014 dataset. The results indicate that the approach performs well on both the Chinese and English datasets, suggesting that it can bridge cultural differences associated with different languages.

Methods

This paper introduces a framework for speech-based depression recognition based on a Mixture of Experts (MoE) model that combines speaker-related and emotion-related features, as illustrated in Figure 1. The framework has two main components: depression feature extraction and recognition model training. A Time Delay Neural Network (TDNN)18 is first pre-trained on a large-scale speaker recognition dataset to extract speaker-related features. In parallel, a separate speaker’s emotion-related feature extractor is pre-trained on an extensive speech emotion dataset. We then use transfer learning on the depression dataset to extract and fuse the speaker-related and emotion-related features of individuals with depression. Finally, a multi-source domain adaptation algorithm is used to train the MoE model for depression recognition.

Extracting speaker-related features of depressive patients

Speaker-related features can be extracted using TDNNs or their variants, such as ECAPA-TDNN19. Although ECAPA-TDNN offers significant advantages over the traditional TDNN in speaker recognition, these gains come primarily from incorporating computer vision techniques into the network. For sensitive and limited datasets, such as those used for depression analysis, this approach may not be feasible or broadly applicable in real-world settings. Moreover, the main objective of this study is to highlight the importance of both speaker-related and emotion-related features in depression recognition and to show how their integration improves recognition performance. This distinguishes our work from conventional speaker recognition, where extracting optimal speaker features is the primary goal. We therefore developed a traditional TDNN-based feature extractor that generates x-vector features20 for individual speakers, which serve as the speaker-related features, as illustrated in Figure 2. The extractor has three components: an Encoder Network that extracts frame-level features from the 40-dimensional Fbank features computed for each frame of the input speech; a Global Temporal Pooling Layer that aggregates the frame-level features into a single vector per utterance, capturing the overall representation of the speech; and a Feedforward Classification Network that maps the pooled vector to posterior probabilities over the training speakers. The x-vector is taken from the output of the first affine transformation after the pooling layer and provides the speaker-related features for individuals with depression.
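The Global Temporal Pooling Layer is what lets utterances of any length map to one fixed-size vector. Assuming it is statistics pooling, as in the standard x-vector recipe and consistent with the mean-and-standard-deviation pooling described in the training details, a minimal pure-Python sketch looks as follows. The toy 2-dimensional frames stand in for the real 1,500-dimensional frame-level outputs, and the function name is illustrative.

```python
import math
from typing import List


def statistics_pooling(frames: List[List[float]]) -> List[float]:
    """Pool a T x D matrix of frame-level features into one 2D-dimensional
    vector: per-dimension mean followed by per-dimension standard deviation."""
    if not frames:
        raise ValueError("need at least one frame")
    num_frames = len(frames)
    dim = len(frames[0])
    means = [sum(f[d] for f in frames) / num_frames for d in range(dim)]
    # Population standard deviation of each dimension over time
    stds = [
        math.sqrt(sum((f[d] - means[d]) ** 2 for f in frames) / num_frames)
        for d in range(dim)
    ]
    return means + stds


# Utterances of different lengths yield vectors of the same size.
short_utt = [[1.0, 2.0], [3.0, 2.0]]
long_utt = [[0.0, 1.0], [2.0, 1.0], [4.0, 1.0], [6.0, 1.0]]
print(statistics_pooling(short_utt))      # [2.0, 2.0, 1.0, 0.0]
print(len(statistics_pooling(long_utt)))  # 4
```

Because the output size depends only on D, the affine layer that produces the x-vector can have a fixed input dimension regardless of utterance duration.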

Extracting speaker’s emotion-related features of depressive patients

The speaker’s emotion-related features of individuals with depression are extracted through transfer learning from a pre-trained speech emotion classifier. We used ResNet-50 as the speech emotion classifier, pre-trained on a comprehensive emotional speech dataset. The network consists of an image input layer, a convolutional layer for feature extraction, a max pooling layer, four convolutional residual modules, an average pooling layer, and a fully connected layer. Spectrograms computed from speech serve as the input images. By transferring the pre-trained ResNet-50 with the dataset referenced in AVEC2014, we obtained a speaker’s emotion-related feature extractor tailored to depression recognition. We then applied it to a self-constructed Chinese local depression dataset (CLDD)21, used as an out-of-domain test set, to extract the corresponding emotional features. The detailed procedure is illustrated in Figure 3.

Mixture of experts model

After extracting the speaker-related and emotion-related features of individuals with depression, we recognize depression with a Mixture of Experts (MoE)22. Following the methodology described in reference23, we use the MoE to model multiple source domains: each expert is a classifier for one specific domain, which enhances the model’s ability to capture diverse characteristics. To assess how much each expert should contribute to a given target sample, we use the Mahalanobis distance. Finally, the predictions of all experts are aggregated through a weighted sum to produce the depression prediction. The MoE classification model is presented in Figure 4.

The speaker-related features \(F_{1}\) and emotion-related features \(F_{2}\) of individuals with depression are mapped into a shared latent representation E(x) by an encoder E built on a convolutional neural network. The shared representation E(x) is fed to n expert classifiers, each of which outputs posterior class probabilities for its own domain. In addition, a gating network G computes a weight for each expert from the current input, yielding a weight vector. The final prediction is the weighted sum of the outputs of the expert models.

For sample x drawn from the depression dataset, the final prediction result is computed as the weighted sum of the posterior probabilities generated by n expert models across various classes, as illustrated in Equation 1.
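Written with the symbols defined in the next paragraph, Equation 1 takes the following form. This is a rendering inferred from the text (the subscript "moe" is our label, and the sum-to-one condition reflects the normalization described for Equation 4); the published notation may differ.

\[
p_{\mathrm{moe}}(y \mid x) = \sum_{i=1}^{n} w(x, s_i)\, p^{s_i}(y \mid x),
\qquad \sum_{i=1}^{n} w(x, s_i) = 1
\]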

where \(p^{s_i}\) is the posterior probability distribution produced for sample x by the i-th expert, \(p^{s_i}(y|x)=softmax(W^{s_i}E(x))\), and \(W^{s_i}\) denotes the output-layer weights of the i-th expert network. The weight \(w(x,s_i)\) is a metric function that measures how much trust sample x places in expert \(s_i\). It is computed from the Mahalanobis distance between the sample x and the i-th domain \(s_i\), as shown in Equation 2.
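Using the mean vector \(\mu^{s_i}\) and the matrix \(M=UU^{\top}\) defined below, the Mahalanobis distance of Equation 2 can be written as follows. The square root follows the usual Mahalanobis convention and is our assumption about the exact form; the second equality, which holds for any \(M=UU^{\top}\), shows that the distance is an ordinary Euclidean norm after projecting onto r dimensions, so M is positive semidefinite by construction.

\[
d(x, s_i) = \sqrt{\big(E(x) - \mu^{s_i}\big)^{\top} M \,\big(E(x) - \mu^{s_i}\big)}
          = \big\lVert U^{\top}\big(E(x) - \mu^{s_i}\big) \big\rVert_2
\]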

where \(\mu ^{s_i}\) is the mean feature vector of the i-th expert’s domain \(s_i\), and M is a positive semidefinite matrix learned during training and parameterized as \(M=\textrm{UU}^\textrm{T}\) with \(U\in \mathbb {R}^{h\times r}\), where h is the dimensionality of the hidden layer and r is a hyperparameter that controls the rank of M. In a binary classification task, however, \(\mu ^{s_i}\) may lie close to the decision boundary, so proximity to \(\mu ^{s_i}\) alone does not imply a confident prediction.

Given the distance d, a confidence value for each expert can be computed as \(e(x,s_i)=f(d(x,s_i))\). If f simply decreases with distance, a sample close to \(\mu ^{s_i}\) receives high confidence even when \(\mu ^{s_i}\) lies near the decision boundary, which can contradict the actual situation. To address this, we adopt the maximum cluster discrepancy method24, which defines the confidence value from the difference between the distances of sample x to the two classes of the i-th expert \(s_i\), as shown in Equation 3.
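A form of Equation 3 consistent with this description is the absolute difference between the distances from x to the positive-class and negative-class means of expert \(s_i\), each computed with the same metric M as in Equation 2. This is our reconstruction from the text, not necessarily the exact published expression.

\[
e(x, s_i) = \big|\, d(x, s_i^{+}) - d(x, s_i^{-}) \,\big|
\]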

where \(s_i^+\) and \(s_i^-\) denote the positive and negative sample spaces of expert \(s_i\), respectively.

When the sample x is located far from \(s_i\) or near the class boundary, it is assigned a lower confidence value. Conversely, if the sample x is closer to a specific class within \(s_i\) than to others, that expert receives a higher confidence value. The final metric \(w(x,s_i)\) is obtained by normalizing the confidence values, as expressed in Equation 4.
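The gating logic can be illustrated end to end on toy numbers. This sketch assumes the normalization of Equation 4 is a softmax over the confidence values, \(w(x,s_i)=\exp(e(x,s_i))/\sum_j \exp(e(x,s_j))\), as in common multi-source MoE formulations, and that the confidence is the absolute difference of class-mean distances. The class means, posteriors, U, and all function names are hypothetical illustrations, not the paper's code.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical expert description: (positive-class mean, negative-class mean,
# posterior p^{s_i}(y|x) that this expert produced for the current sample).
Expert = Tuple[Sequence[float], Sequence[float], Sequence[float]]


def mahalanobis(z: Sequence[float], mu: Sequence[float],
                U: Sequence[Sequence[float]]) -> float:
    """sqrt((z - mu)^T U U^T (z - mu)) = ||U^T (z - mu)||_2; U is hidden_dim x r."""
    diff = [a - b for a, b in zip(z, mu)]
    rank = len(U[0])
    proj = [sum(U[j][k] * diff[j] for j in range(len(diff))) for k in range(rank)]
    return math.sqrt(sum(p * p for p in proj))


def confidence(z, mu_pos, mu_neg, U) -> float:
    """|d(x, s+) - d(x, s-)|: large when x is clearly nearer one class."""
    return abs(mahalanobis(z, mu_pos, U) - mahalanobis(z, mu_neg, U))


def softmax(values: Sequence[float]) -> List[float]:
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def moe_predict(z, experts: Sequence[Expert], U):
    """Return (weights w(x, s_i), combined posterior sum_i w_i * p^{s_i})."""
    weights = softmax([confidence(z, mp, mn, U) for mp, mn, _ in experts])
    n_classes = len(experts[0][2])
    combined = [sum(w * post[c] for w, (_, _, post) in zip(weights, experts))
                for c in range(n_classes)]
    return weights, combined


# Toy example: 2-D latent space and U = identity (full rank, r = 2).
U = [[1.0, 0.0], [0.0, 1.0]]
z = [1.0, 0.0]  # E(x) for one sample
experts = [
    ([1.0, 0.0], [-1.0, 0.0], [0.9, 0.1]),  # x lies on this expert's positive mean
    ([0.0, 1.0], [0.0, -1.0], [0.5, 0.5]),  # x is equidistant from both classes
]
weights, combined = moe_predict(z, experts, U)
print([round(w, 3) for w in weights])   # [0.881, 0.119]
print([round(p, 3) for p in combined])  # [0.852, 0.148]
```

The second expert receives low weight because x sits on its class boundary, which is exactly the case the confidence definition is meant to handle.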

We used the data from the five stimulus tasks of the CLDD as five source domains and trained one expert model per domain. Each source domain is held out in turn as the target (the “source-target”), while the remaining domains serve as sources (the “source-source”). This setup yields five (source-source, source-target) training pairs for domain adaptation learning. By learning the metric weights w from these pairs, the loss function for training the Mixture of Experts (MoE) network is defined as shown in Equation 5.
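The pair construction can be sketched as a leave-one-domain-out loop. The objective shown is a negative log-likelihood of the held-out domain under the MoE posterior built from the remaining experts; this is an assumed form of the kind used in multi-source MoE training, and Equation 5 may contain additional terms. Task names and the toy predictor are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def meta_training_pairs(domains: Sequence[str]) -> List[Tuple[List[str], str]]:
    """Hold out each source domain in turn as the 'source-target'; the
    remaining domains form the 'source-source' set for that pair."""
    return [([d for d in domains if d != target], target) for target in domains]


def moe_nll(target_samples: Sequence[Tuple[object, int]],
            predict_fn: Callable[[object], Sequence[float]]) -> float:
    """Negative log-likelihood of held-out (x, y) samples, where predict_fn
    returns the MoE posterior built only from the source-source experts."""
    return -sum(math.log(predict_fn(x)[y]) for x, y in target_samples)


# Hypothetical placeholder names for the five CLDD stimulus tasks.
tasks = ["task1", "task2", "task3", "task4", "task5"]
pairs = meta_training_pairs(tasks)
print(len(pairs))  # 5
print(pairs[0])    # (['task2', 'task3', 'task4', 'task5'], 'task1')


def toy_predict(x: object) -> List[float]:
    """Stand-in predictor that ignores x and returns a fixed posterior."""
    return [0.8, 0.2]


print(round(moe_nll([(None, 0), (None, 1)], toy_predict), 4))  # 1.8326
```

In training, the total loss would sum such terms over all five pairs, so every expert is repeatedly scored on a domain it was not trained on.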

Experiments

Experimental data

This paper uses three types of datasets, for speaker recognition, emotional speech recognition, and depression recognition, as summarized in Table 1. The speaker recognition dataset was used to pre-train the speaker-related feature extractor, and the emotional speech dataset was used to develop the speaker’s emotion-related feature extractor. We chose an emotional speech dataset covering three languages, in which professional actors expressed various emotions using the same text, and selected only the corpora expressing happiness and sadness. The speech data from the three languages were combined into a single dataset for pre-training the speech emotion classifier. The depression recognition datasets, namely the dataset referenced in AVEC201425 and the CLDD, were used for transfer learning to extract the speaker-related and emotion-related features of individuals with depression.

Following Russell’s core affect theory, which quantifies emotions along the orthogonal axes of arousal and valence26, we regard these two dimensions as integral to assessing depression. Accordingly, this paper groups the speech emotions in the two depression datasets into two classes: negative valence, representing sadness, and positive valence, representing happiness.

For all datasets except AVEC2014, we split the data by subject, allocating 70% of subjects for training, 10% for validation, and 20% for testing. This ensures subject independence: no individual appears in both the training and test sets.
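Subject independence means the split is drawn over subject identifiers rather than over recordings. A minimal sketch, assuming each recording carries a subject ID; the function name, the IDs, and the fixed seed are illustrative.

```python
import random
from typing import Dict, List, Sequence


def split_by_subject(subject_ids: Sequence[str],
                     fractions=(0.7, 0.1, 0.2),
                     seed: int = 0) -> Dict[str, List[str]]:
    """Assign whole subjects (never individual recordings) to train/val/test,
    so no subject's recordings appear in more than one partition."""
    subjects = sorted(set(subject_ids))    # one entry per subject
    random.Random(seed).shuffle(subjects)  # reproducible shuffle
    n = len(subjects)
    n_train = round(fractions[0] * n)
    n_val = round(fractions[1] * n)
    return {
        "train": subjects[:n_train],
        "val": subjects[n_train:n_train + n_val],
        "test": subjects[n_train + n_val:],
    }


# 20 hypothetical subjects with 3 recordings each.
recordings = [f"s{i:02d}" for i in range(20) for _ in range(3)]
split = split_by_subject(recordings)
print({k: len(v) for k, v in split.items()})  # {'train': 14, 'val': 2, 'test': 4}
```

Recordings are then routed to a partition by looking up their subject ID, so all recordings of a person land together.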

The proposed method was ultimately validated on the CLDD, which includes 286 subjects: 79 male and 79 female patients with depression, and 64 male and 64 female normal controls. All participants provided informed consent, and measures were taken to ensure data protection and privacy. To promote fairness, we first balanced the screened data by gender so that male and female participants were equally represented. We also matched participants by educational background (e.g., primary school to junior high school) while keeping age differences within three years. Table 2 provides detailed demographic information for these subjects. Speech data were collected while subjects completed five stimulus tasks designed to evoke three different emotional valences, and each individual contributed 21 audio files27.

Table 3 presents detailed statistics of the data, showing that audio durations vary considerably across tasks. For analysis, the dataset was partitioned by subject into 80% for training, 10% for validation, and 10% for testing.

Data preprocessing

We performed two preprocessing steps on the experimental data.

Shallow feature extraction from the speech samples across all datasets

We selected Fbank and spectrogram as the shallow features of the speech signal. For the extraction of Fbank features, a Hamming window was used as the window function, with a frame length of 25 milliseconds and a frameshift of 10 milliseconds. From each speech frame, forty-dimensional Fbank features were extracted. Once the Fbank features for all frames were obtained, a 3-second sliding window was employed to perform mean normalization on these features. To calculate the spectrogram, a 1024-point Fast Fourier Transform (FFT) was applied to each speech frame, and the FFT energy spectra from all frames of a single utterance were concatenated to create the final spectrogram.

For spectrogram-based emotion feature extraction, both the number and the size of the spectrograms can significantly influence the quality of the extracted feature representations. Because utterance durations vary, the number of samples obtained per utterance is unbalanced; to address this, we propose a dynamic adaptive spectrogram cropping method.

The procedure begins by computing the spectrogram of an utterance. A 1024-point FFT yields 512 frequency bins along the vertical axis, so the spectrogram has size \(512 \times n_{\text {frame}}\), where \(n_{\text {frame}}\) is the total number of frames. The vertical axis is then compressed to 256 by averaging adjacent bins, and the 32 bins with the lowest energy are discarded, giving a spectrogram of size \(224 \times n_{\text {frame}}\).
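The frequency-axis reduction (512 to 256 to 224 rows) can be sketched directly. This sketch assumes that "lowest energy" is measured as the mean value of each averaged row over all frames and that the surviving rows keep their original frequency order; in typical speech these rows are often the highest-frequency bins, but that is not guaranteed. The function name is illustrative.

```python
from typing import List

Spectrogram = List[List[float]]  # rows = frequency bins, columns = frames


def compress_frequency_axis(spec: Spectrogram, n_drop: int = 32) -> Spectrogram:
    """Average adjacent frequency rows (512 -> 256), then drop the n_drop rows
    with the lowest mean energy (256 -> 224), keeping frequency order."""
    if len(spec) % 2:
        raise ValueError("expected an even number of frequency rows")
    pooled = [
        [(a + b) / 2 for a, b in zip(spec[i], spec[i + 1])]
        for i in range(0, len(spec), 2)
    ]
    mean_energy = [sum(row) / len(row) for row in pooled]
    lowest = set(sorted(range(len(pooled)), key=lambda i: mean_energy[i])[:n_drop])
    return [row for i, row in enumerate(pooled) if i not in lowest]


# Toy spectrogram: 512 bins x 5 frames, energy decreasing with frequency.
spec = [[float(512 - f)] * 5 for f in range(512)]
out = compress_frequency_axis(spec)
print(len(out), len(out[0]))  # 224 5
```

The result has 224 rows, matching the 224-pixel side length that ResNet-50 conventionally expects.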

Because speech duration varies, dynamic adaptive cropping is applied along the horizontal (time) axis of the spectrogram. The specific calculations for this adaptive cropping method are defined in Equation (6).

where x is the clipping interval and N is the number of cropped spectrograms.
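As a purely hypothetical illustration of how an interval x and a count N can be tied to utterance length (not necessarily the rule in Equation (6)), one option fixes N crops of width 224 per utterance and sets x = (n_frame - 224)/(N - 1), so that the crops evenly span the utterance and longer utterances get wider spacing rather than more samples. The function name and this rule are assumptions.

```python
from typing import List


def crop_starts(n_frame: int, n_crops: int, width: int = 224) -> List[int]:
    """Hypothetical adaptive cropping: a fixed number of crops per utterance,
    evenly spaced so the first starts at frame 0 and the last ends at the
    final frame. The clipping interval grows with utterance length."""
    if n_frame < width:
        raise ValueError("utterance shorter than one crop")
    if n_crops < 1:
        raise ValueError("need at least one crop")
    if n_crops == 1:
        return [0]
    interval = (n_frame - width) / (n_crops - 1)  # clipping interval x
    return [round(k * interval) for k in range(n_crops)]


print(crop_starts(524, 4))   # [0, 100, 200, 300]
print(crop_starts(1124, 4))  # [0, 300, 600, 900]
```

Under this rule, every utterance contributes the same number of 224 x 224 crops, which balances sample counts across speakers with different speaking durations.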

Definition and selection of emotional labels and emotional speech from the depression dataset

In the AVEC 2014 dataset, 23 evaluators continuously annotated valence and arousal for each audio recording, with values normalized to the range [-1, 1]. Each recording is therefore associated with continuous valence and arousal values28.

Using the two-dimensional Valence-Arousal (VA) emotional model, each speech segment is assigned to one of four quadrants: the first quadrant (Valence > 0, Arousal > 0), the second quadrant (Valence < 0, Arousal > 0), the third quadrant (Valence < 0, Arousal < 0), and the fourth quadrant (Valence > 0, Arousal < 0). The emotional label of a segment is the quadrant into which the majority (60%) of its valence and arousal values fall.
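The labeling rule can be sketched as follows, assuming "majority (60%)" means at least 60% of a segment's annotation points, and that points lying exactly on an axis belong to no quadrant but still count toward the total. The mapping from quadrants to the two valence classes (happy for positive valence, sad for negative) follows the valence grouping stated earlier and is our assumption about how the two steps combine.

```python
from collections import Counter
from typing import List, Optional, Tuple


def quadrant(valence: float, arousal: float) -> Optional[int]:
    """VA quadrant 1-4 as defined in the text; None for points on an axis."""
    if valence > 0 and arousal > 0:
        return 1
    if valence < 0 and arousal > 0:
        return 2
    if valence < 0 and arousal < 0:
        return 3
    if valence > 0 and arousal < 0:
        return 4
    return None


def segment_label(trace: List[Tuple[float, float]], threshold: float = 0.6) -> Optional[int]:
    """Quadrant holding at least `threshold` of a segment's (valence, arousal)
    points, or None when no quadrant reaches the threshold."""
    if not trace:
        return None
    counts = Counter(quadrant(v, a) for v, a in trace)
    counts.pop(None, None)  # on-axis points count toward the total only
    if not counts:
        return None
    best, n = counts.most_common(1)[0]
    return best if n / len(trace) >= threshold else None


# Hypothetical mapping to the two valence classes used in this paper.
VALENCE_CLASS = {1: "happy", 4: "happy", 2: "sad", 3: "sad"}

trace = [(-0.5, -0.2)] * 7 + [(0.3, 0.4)] * 3
print(segment_label(trace), VALENCE_CLASS[segment_label(trace)])  # 3 sad
```

Segments without a clear majority return None and would be excluded from the emotion corpus.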

In the AVEC 2014 dataset, each participant was recorded one to four times, with a two-week interval between recordings. Because this study focuses on a cross-sectional analysis of depression, we used only each participant’s first recording. In total, 259 speech samples were collected from 259 participants across the “Freeform” and “Northwind” tasks, 132 labeled happy and 127 labeled sad.

Model training

We compared the traditional TDNN and ECAPA-TDNN as speaker-related feature extractors, with particular emphasis on inference time and parameter count; the results are presented in Table 4. Under identical hardware conditions, the traditional TDNN has the fewest parameters and the fastest inference, which makes it suitable for clinical applications in environments with limited computational resources.

The speaker-related feature extractor is implemented using the nnet3 neural network library from the Kaldi speech recognition toolkit29, training a TDNN on 3,684 speakers from the training set. Each training sample consists of approximately 3 seconds of Fbank features and corresponding speaker labels.

The TDNN architecture consists of three time-delay layers and two frame-level fully connected layers; the parameter configurations are listed in Table 5. The time-delay layers are 1D dilated convolutions with kernel sizes of 5, 3, and 3 and dilation factors of 1, 2, and 3, respectively. All layers have 512 channels except the last fully connected layer, which has 1,500 output channels. The statistics pooling layer computes the mean and standard deviation over the T frames, producing a 1,500-dimensional vector for each statistic. These vectors are concatenated into a 3,000-dimensional representation, which passes through two feedforward affine layers to yield a 128-dimensional embedding, followed by a softmax output layer.
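The temporal context implied by these delay-layer settings can be checked with a short calculation. Assuming stride 1 and that the frame-level fully connected layers (kernel size 1) add no context, each output frame sees 1 + sum((k - 1) * d) input frames; with the 25 ms window and 10 ms shift used for the Fbank features, that corresponds to a fixed span of audio. The function names are illustrative.

```python
from typing import Sequence


def receptive_field(kernels: Sequence[int], dilations: Sequence[int]) -> int:
    """Input frames seen by one output frame of stacked stride-1 dilated 1D convs."""
    return 1 + sum((k - 1) * d for k, d in zip(kernels, dilations))


def context_ms(frames: int, frame_ms: float = 25.0, shift_ms: float = 10.0) -> float:
    """Duration of audio covered by `frames` consecutive analysis frames."""
    return (frames - 1) * shift_ms + frame_ms


rf = receptive_field([5, 3, 3], [1, 2, 3])
print(rf, context_ms(rf))  # 15 165.0
```

So each frame-level feature summarizes roughly 165 ms of speech before statistics pooling aggregates over the whole utterance.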

The ReLU activation function is used throughout the model. The network is trained for 21 epochs with mini-batch stochastic optimization (batch size 64) using the Adam optimizer, with an initial learning rate of 0.001 and a decay rate of 0.3. After training, the x-vector is extracted from the output of the sixth affine layer (embedding 6).

Using the emotional labels defined in Section “Definition and selection of emotional labels and emotional speech from the depression dataset”, we first pre-trained ResNet-50 on the three emotional corpora. We then used the dataset referenced in AVEC2014 to transfer the learned emotional features to depressed patients, yielding an emotion classifier tailored to this population. Finally, the CLDD served as an external test set for feature extraction.

Training used a batch size of 110 for 60 epochs of mini-batch stochastic optimization with the Adam optimizer, using the default values \(\beta _{1}=0.9\), \(\beta _{2}=0.999\), and \(\varepsilon =10^{-8}\). The initial learning rate was 0.001 with a decay rate of 0.1, and a dropout rate of 0.5 was applied to all fully connected layers to prevent overfitting.

The encoder E, which fuses the speaker-related (personalized) features of depressed patients with their speech emotion features, consists of two convolutional layers. The first convolutional layer has 48 kernels of size \(3 \times 3\) with a stride of 4 and padding of 2, followed by a max pooling layer with a \(3 \times 3\) kernel and a stride of 2.

The second convolutional layer contains 128 convolutional kernels of size \(5 \times 5\), with a stride of 1 and padding of 2, followed by another max pooling layer with the same kernel size of \(3 \times 3\) and a stride of 2.
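The input size of encoder E is not given here, so this sketch uses hypothetical input sizes only to show how the layer settings determine the spatial output size, via the standard formula floor((n + 2p - k) / s) + 1. It assumes square inputs and unpadded max pooling; the function names are illustrative.

```python
def out_size(n: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer (floor mode, no dilation)."""
    return (n + 2 * padding - kernel) // stride + 1


def encoder_output_size(n: int) -> int:
    """Side length after the two conv + max-pool stages of encoder E (square input)."""
    n = out_size(n, kernel=3, stride=4, padding=2)  # conv1: 48 kernels, 3x3, stride 4, pad 2
    n = out_size(n, kernel=3, stride=2)             # max pool 3x3, stride 2 (no padding assumed)
    n = out_size(n, kernel=5, stride=1, padding=2)  # conv2: 128 kernels, 5x5, stride 1, pad 2
    n = out_size(n, kernel=3, stride=2)             # max pool 3x3, stride 2 (no padding assumed)
    return n


side = encoder_output_size(64)  # hypothetical 64 x 64 input
print(side, 128 * side * side)  # 3 1152
```

The stride-4 first layer does most of the downsampling; the second convolution preserves size because its padding of 2 exactly offsets the 5 x 5 kernel.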

The expert model is a deep neural network (DNN) with three hidden layers, each containing 1,024 neurons. To mitigate the risk of overfitting, a dropout rate of 0.5 is applied to all hidden layers.

Performance evaluation metrics

We assessed the proposed model’s performance across two target tasks: classification and regression. For the classification task, we utilized accuracy (ACC), precision (\(P_C\)), and recall (\(R_C\)) to evaluate the model’s predictive performance. For the regression task, we employed Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) as evaluation metrics.
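For reference, the evaluation metrics can be computed as below. This sketch treats the depressed class as positive for \(P_C\) and \(R_C\) (an assumption; per-class or averaged variants are also common), and in the regression setting the targets would be depression severity scores such as the BDI-II scores used in AVEC2014. The labels and scores are toy values.

```python
import math
from typing import Sequence, Tuple


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                           positive: int = 1) -> Tuple[float, float, float]:
    """Accuracy, precision, and recall, with `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    acc = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return acc, precision, recall


def regression_metrics(y_true: Sequence[float],
                       y_pred: Sequence[float]) -> Tuple[float, float]:
    """Mean absolute error and root mean square error."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse


# 1 = depressed, 0 = control (toy labels)
print(classification_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
# (0.6, 0.6666666666666666, 0.6666666666666666)
print(regression_metrics([10, 20, 30], [12, 18, 30]))  # MAE = 4/3, RMSE = sqrt(8/3)
```

RMSE penalizes large errors more heavily than MAE, so reporting both indicates whether errors are spread evenly or dominated by a few outliers.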

Results

Recognition performance on the Chinese localized depression dataset

We evaluate the proposed method from the perspectives of both speech feature extraction and predictive modeling. In addition, we perform cross-linguistic validation on the dataset referenced in AVEC2014 and compare the results against other state-of-the-art approaches.

On the CLDD, predictive performance is assessed using ACC, \(P_C\), and \(R_C\); on the AVEC2014 dataset, it is measured using MAE and RMSE.

Performance analysis of feature extraction

We extracted five distinct features and compared their recognition performance on the CLDD. The first, \(f_{SR-ResNet}\), consists of speaker-related features extracted directly from the spectrograms of the depression data with a pre-trained ResNet-50 model30. The second, \(f_{SER-ResNet}\), consists of the speaker’s emotion-related features extracted from the same spectrograms with the same pre-trained ResNet-50 model30. The third, \(f_{SR-TDNN}\), was obtained by training a TDNN-embedding-based speaker-related feature extractor on a speaker identification corpus and then applying it, out of domain, to the depression data. The fourth, \(f_{SER-tl}\), was obtained by pre-training a speech emotion recognition (SER) model based on ResNet-50, transferring it with the dataset referenced in AVEC2014 to create a speaker’s emotion-related feature extractor, and applying that extractor to the depression data. The fifth, \(f_{share(SR-SER)}\), is a fused representation produced by training an encoder to map \(f_{SR-TDNN}\) and \(f_{SER-tl}\) into a shared feature space. Each feature set was fed into a softmax layer for classification, and the experimental results are presented in Table 6.

Table 6 shows that features extracted with pre-trained feature extractors can effectively recognize depression. Fusing the speaker-related and emotion-related features markedly improves recognition performance compared with using either feature set alone, likely because the fused features account for both the subjects’ individual speaker traits and their emotional characteristics.

Moreover, the speaker’s emotion-related features extracted from pre-trained models generally outperform the speaker-related features, suggesting that the emotional information in depressed patients’ speech is more discriminative between the two groups. In addition, features from extractors pre-trained on speaker recognition and speech emotion datasets significantly outperform those extracted directly from pre-trained ResNet models. This indicates that directly transferring pre-trained models is not very effective when the transfer data are small in volume and low in similarity, whereas pre-training on larger datasets with higher similarity yields significantly better results.

Figure 5 presents the confusion matrices of the various deep features on the test set, showing that \(f_{share(SR-SER)}\) achieves the best classification performance. The deep features also show substantial discriminative power for recognizing depressed individuals, with the speaker’s emotion-related features being especially discriminative. This further suggests that the speaker’s emotion-related features capture larger depression-related differences than the speaker-related features.

Performance analysis of model structures

We compared a simple classification model with the proposed mixture-of-experts multi-source domain adaptation classification model on the CLDD. The MoE approach combines the speaker-related and emotion-related features across multiple sub-tasks through ensemble learning. Because the MoE model requires a fused feature set, we did not train it on individual features; its results are therefore reported only for the fused features and are not compared with the other four feature sets. Accordingly, the simple classification model, denoted \(M_{sof}\), feeds the \(f_{share(SR-SER)}\) features into a softmax layer, whereas the MoE multi-source domain adaptation model, denoted \(M_{moe}\), outputs a weighted sum of the predicted probabilities of all expert models based on \(f_{share(SR-SER)}\).

The experimental results in Table 7 indicate that the \(M_{moe}\) classification model demonstrates superior classification performance. This suggests that the combined contributions of the speaker-related and emotion-related features, obtained through ensemble learning from five expert models, effectively enhance depression recognition capabilities.

Figure 6 presents the confusion matrices of the proposed model and the simple classification model on the test set. Both models show relatively limited discriminative ability for non-depressed individuals but distinguish depressed individuals more clearly. Overall, \(M_{moe}\) offers a promising approach for depression detection in real-world settings.

Performance analysis in different stimulation tasks

The results above indicate that recognition performance is highest when the \(f_{share(SR-SER)}\) features are used with the \(M_{moe}\) model. We therefore evaluated the recognition accuracy of the \(f_{share(SR-SER)} + M_{moe}\) model on each stimulation task and compared it with that of the \(f_{share(SR-SER)} + M_{sof}\) model, as illustrated in Figure 7.

The results show that the proposed method \(f_{share(SR-SER)} + M_{moe}\) achieves the best recognition performance on all five stimulation tasks, with an average accuracy of 73% and the highest accuracy, 74.3%, on the interview task. This may be related to the nature of the interview task: its content is likely to evoke stronger emotions in depressed patients, and it provides a larger volume of speech data.

In terms of overall recognition performance, and despite the imbalanced data distribution across the five tasks, \(f_{share(SR-SER)} + M_{moe}\) achieves higher accuracy than \(f_{share(SR-SER)} + M_{sof}\). We attribute this improvement to the collaborative decision-making process, in which all five tasks contribute to the final recognition result by integrating their respective decision values. These findings suggest that the proposed method can accommodate heterogeneous tasks and imbalanced data distributions, thereby improving the model's generalization performance.

Performance analysis in different emotional valences

We further evaluated the recognition accuracy of the \(f_{share(SR-SER)} + M_{moe}\) model across different emotional valences, as illustrated in Figure 8. The model achieves its highest recognition accuracy for positive and negative emotions and its lowest for neutral emotions. This trend may be attributable to the speech emotion feature extractor having been trained on corpora of happy and sad expressions, which strengthens the model's ability to detect happiness and sadness.

In addition, patients with varying degrees of depression typically exhibit negative, sad emotional symptoms31. Negative emotional valence is therefore often more representative of the characteristics of depressed patients, which may explain the relatively high recognition accuracy on tasks that elicit negative emotions.

Recognition performance on the AVEC dataset

We further validated the proposed method on the AVEC2014 depression dataset. The speaker's emotion-related feature extractor for depression was pre-trained on the CLDD. We then used 300 speech recordings from AVEC2014 as an external test set and extracted speaker-related and emotion-related depression features from them with the two feature extractors.

Using RMSE and MAE as evaluation metrics, we compared the predictive performance of our method against baseline models and other state-of-the-art approaches that rely exclusively on the audio modality. Table 8 summarizes the results together with the speech features and models used by each existing method.
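For reference, the two regression metrics used in Table 8 can be computed as follows. This is a minimal sketch assuming that ground truth and predictions are per-recording BDI-II scores (the AVEC2014 depression label); the function names are illustrative.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error between true and predicted BDI-II scores."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error between true and predicted BDI-II scores."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))
```

For example, with true scores `[10, 20, 30, 40]` and predictions `[12, 18, 33, 40]`, `mae` returns 1.75 and `rmse` returns about 2.06. Because RMSE squares the errors before averaging, it is never smaller than MAE and penalizes occasional large errors more heavily.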

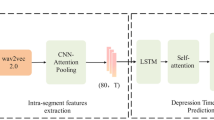

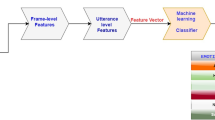

The results in Table 8 indicate that depression prediction based on deep features and models substantially outperforms traditional machine learning models that use handcrafted features. Studies36,37,38,39 predict depression by extracting various speech features, both handcrafted and deep, and constructing hybrid models with different architectures, such as DCNN36, CNN+LSTM37, CNN+LSTM+Attention38, and DCNN+Self-Attention39.

The study30 shares similarities with our proposed method: both approaches extract features from two independent sub-tasks, speaker recognition (SR) and speech emotion recognition (SER), and train a hierarchical model for depression detection based on the coordinated information from these features. However, our method outperforms that study for two main reasons.

First, the study30 extracts deep features by fine-tuning only the fully connected layers of pre-trained ResNet models on the AVEC dataset. Given the limited amount of depression data and its low similarity to the data used to pre-train the ResNet models, this straightforward fine-tuning yields less accurate SR and SER features.

Second, integrating SR and SER for depression recognition is essentially a multi-task learning problem. Many deep multi-task learning models are sensitive to differences in data distribution and to inter-task correlations, and intrinsic conflicts between tasks can degrade prediction on the target task, especially when model parameters are extensively shared. The study30 does not address this issue. In contrast, our method trains on datasets specific to the SR and SER tasks and then applies transfer learning to the target-task dataset while accounting for the relationships between the source and target tasks. As a result, our method achieves better performance and generalizes well.
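One common way for a multi-source domain adaptation model to account for source-target relationships is to weight each source-domain expert by how close its feature distribution is to the target domain. The sketch below illustrates that idea with a linear-kernel maximum mean discrepancy (the squared distance between domain means) and a softmax over negative distances. This is a swapped-in, simplified technique shown for illustration only; it is not claimed to be the weighting used in this study, and `linear_mmd`, `domain_weights`, and `temperature` are hypothetical names.

```python
import numpy as np

def linear_mmd(source_feats, target_feats):
    """Squared distance between domain feature means (MMD with a linear kernel)."""
    diff = source_feats.mean(axis=0) - target_feats.mean(axis=0)
    return float(diff @ diff)

def domain_weights(source_feat_list, target_feats, temperature=1.0):
    """Hypothetical helper: weight each source-domain expert by its closeness
    to the target domain via a softmax over negative linear-MMD distances."""
    d = np.array([linear_mmd(s, target_feats) for s in source_feat_list])
    z = -d / temperature
    z -= z.max()            # shift for numerical stability; weights unchanged
    w = np.exp(z)
    return w / w.sum()      # normalised weights, one per source domain
```

With target features drawn around zero and two source domains, one centered at zero and one shifted by 3 in every dimension, nearly all of the weight goes to the nearby source. Such weights could, for instance, be combined with a gating network, but that design choice is an assumption.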

Notably, the audio-visual multimodal method of study41, the winning entry of the AVEC 2014 challenge, does not outperform our audio-only method. This further supports the effectiveness of our approach.

In addition, we pre-trained on large-scale speaker recognition and speech emotion datasets to help the model cope with language and cultural differences. These datasets cover speakers of diverse backgrounds and languages, including German, English, and Chinese. We then applied transfer learning to extract relevant features from the depression datasets, improving the model's adaptability to previously unseen data.

Conclusion

This paper presents a Mixture-of-Experts (MoE) multi-source domain adaptation classification model for depression recognition that integrates speaker-related and emotion-related features. We used a speaker recognition method to build a speaker-related feature extractor and applied transfer learning to a speech emotion recognition model to build a depression-related speech emotion feature extractor. These two feature types were then fused using the MoE model. The experimental results demonstrate the model's effectiveness in recognizing depression and its ability to cope with linguistic and cultural differences.

Current AI technologies demand substantial computing power, which complicates their practical deployment. Although our model performs well in academic experiments, it faces significant challenges related to computational requirements, privacy protection, and continuous adaptation in real-world scenarios. To address these concerns, in future work we will investigate integrating edge computing and incremental learning, along with automated model updates, to improve the model's performance in real-world applications. We also plan to apply differential privacy techniques during data processing and model training to protect user privacy, especially for sensitive health information. By introducing calibrated noise during data collection and processing, differential privacy protects users' data from exposure while aiming to preserve the model's overall performance.
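The conclusion mentions adding noise for differential privacy without specifying a mechanism. As a minimal illustration, the sketch below applies the textbook Laplace mechanism to the mean of a bounded per-speaker statistic (for example, a clipped acoustic feature). The function name and bounds are hypothetical; protecting model training itself would typically use a different mechanism, such as DP-SGD (per-example gradient clipping plus Gaussian noise), which this sketch does not implement.

```python
import numpy as np

def private_mean(values, lower, upper, epsilon, rng=None):
    """epsilon-differentially private mean of bounded values (Laplace mechanism).

    Assumes the number of records n is public and that neighbouring datasets
    differ by replacing one record. Each value is clipped to [lower, upper],
    so one replacement moves the mean by at most (upper - lower) / n; Laplace
    noise with scale sensitivity / epsilon is then added.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.clip(np.asarray(values, dtype=float), lower, upper)
    sensitivity = (upper - lower) / len(x)
    return float(x.mean() + rng.laplace(0.0, sensitivity / epsilon))
```

Smaller `epsilon` means stronger privacy but larger noise; with 10,000 clipped values in [0, 1] and `epsilon = 1`, the noise scale is only 1e-4, which illustrates how larger cohorts reduce the accuracy cost of privacy.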

Data availability

The datasets used and analyzed in this study include the Aidatatang_1505zh speaker recognition dataset, the speech emotion datasets CREMA-D, EMO-DB, and CASIA, and two depression datasets, AVEC2014 and CLDD. The Aidatatang_1505zh dataset is available at https://github.com/anshuiyin/aidatatang_1505zh. The CREMA-D dataset can be accessed at https://github.com/CheyneyComputerScience/CREMA-D. The EMO-DB dataset is available at http://emodb.bilderbar.info/docu/#emodb. The CASIA dataset is found at https://pan.baidu.com/s/1cnnKrYQDheNfoEhcDoShyA (Extraction code: vk36). The CLDD and AVEC2014 datasets used in this study can be obtained from the corresponding author upon reasonable request.

References

Mathers, C. D. & Loncar, D. Projections of global mortality and burden of disease from 2002 to 2030. PLOS Medicine 3, 1–20. https://doi.org/10.1371/journal.pmed.0030442 (2006).

Cummins, N. et al. A review of depression and suicide risk assessment using speech analysis. Speech Communication 71, 10–49 (2015).

Dong, Y. & Yang, X. A hierarchical depression detection model based on vocal and emotional cues. Neurocomputing 441, 279–290 (2021).

World Health Organization. Depression and other common mental disorders: global health estimates (World Health Organization, Geneva, 2017).

Low, D. M., Bentley, K. H. & Ghosh, S. S. Automated assessment of psychiatric disorders using speech: A systematic review. Laryngoscope Investigative Otolaryngology 5, 96–116 (2020).

Cummins, N. et al. A review of depression and suicide risk assessment using speech analysis. Speech Communication 71, 10–49 (2015).

Asgari, M., Shafran, I. & Sheeber, L. B. Inferring clinical depression from speech and spoken utterances. In 2014 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), 1–5 (IEEE, 2014).

Wessman, A. Mood and personality (Holt, Rinehart and Winston, 1966).

Dehak, N., Kenny, P. J., Dehak, R., Dumouchel, P. & Ouellet, P. Front-end factor analysis for speaker verification. IEEE Transactions on Audio, Speech, and Language Processing 19, 788–798 (2010).

Barrett, L. F. Are emotions natural kinds? Perspectives on Psychological Science 1, 28–58 (2006).

Vázquez-Romero, A. & Gallardo-Antolín, A. Automatic detection of depression in speech using ensemble convolutional neural networks. Entropy 22, 688 (2020).

Wu, P. et al. Automatic depression recognition by intelligent speech signal processing: A systematic survey. CAAI Transactions on Intelligence Technology 8, 701–711 (2023).

Jan, A., Meng, H., Gaus, Y. F. B. A. & Zhang, F. Artificial intelligent system for automatic depression level analysis through visual and vocal expressions. IEEE Transactions on Cognitive and Developmental Systems 10, 668–680 (2017).

Gideon, J., McInnis, M. G. & Provost, E. M. Improving cross-corpus speech emotion recognition with adversarial discriminative domain generalization (ADDoG). IEEE Transactions on Affective Computing 12, 1055–1068 (2019).

Huang, Z. et al. Domain adaptation for enhancing speech-based depression detection in natural environmental conditions using dilated CNNs. In INTERSPEECH, 4561–4565 (2020).

Feraru, S. M., Schuller, D. et al. Cross-language acoustic emotion recognition: An overview and some tendencies. In 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), 125–131 (IEEE, 2015).

Huang, Z. et al. Domain adaptation for enhancing speech-based depression detection in natural environmental conditions using dilated CNNs. In INTERSPEECH, 4561–4565 (2020).

Waibel, A., Hanazawa, T., Hinton, G., Shikano, K. & Lang, K. J. Phoneme recognition using time-delay neural networks. IEEE Transactions on Acoustics, Speech, and Signal Processing 37, 328–339 (1989).

Desplanques, B., Thienpondt, J. & Demuynck, K. ECAPA-TDNN: Emphasized channel attention, propagation and aggregation in TDNN based speaker verification. arXiv preprint arXiv:2005.07143 (2020).

Snyder, D., Garcia-Romero, D., Sell, G., Povey, D. & Khudanpur, S. X-vectors: Robust DNN embeddings for speaker recognition. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 5329–5333 (IEEE, 2018).

Cai, H. et al. MODMA dataset: A multi-modal open dataset for mental-disorder analysis. arXiv preprint arXiv:2002.09283 (2020).

Jacobs, R. A., Jordan, M. I., Nowlan, S. J. & Hinton, G. E. Adaptive mixtures of local experts. Neural Computation 3, 79–87 (1991).

Zhao, S., Li, B., Xu, P. & Keutzer, K. Multi-source domain adaptation in the deep learning era: A systematic survey. arXiv preprint arXiv:2002.12169 (2020).

Ruder, S., Ghaffari, P. & Breslin, J. G. Knowledge adaptation: Teaching to adapt. arXiv preprint arXiv:1702.02052 (2017).

Valstar, M. et al. AVEC 2014: 3D dimensional affect and depression recognition challenge. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, 3–10 (2014).

Ringeval, F. et al. AVEC 2019 workshop and challenge: State-of-mind, detecting depression with AI, and cross-cultural affect recognition. In Proceedings of the 9th International on Audio/Visual Emotion Challenge and Workshop, 3–12 (2019).

Guo, W., Yang, H., Liu, Z., Xu, Y. & Hu, B. Deep neural networks for depression recognition based on 2D and 3D facial expressions under emotional stimulus tasks. Frontiers in Neuroscience 15, 609760 (2021).

Valstar, M. et al. AVEC 2013: The continuous audio/visual emotion and depression recognition challenge. In Proceedings of the 3rd ACM International Workshop on Audio/Visual Emotion Challenge, 3–10 (2013).

Povey, D. et al. The Kaldi speech recognition toolkit. In IEEE 2011 Workshop on Automatic Speech Recognition and Understanding (IEEE Signal Processing Society, 2011).

Dong, Y. & Yang, X. A hierarchical depression detection model based on vocal and emotional cues. Neurocomputing 441, 279–290 (2021).

Beck, A. T. & Alford, B. A. Depression: Causes and Treatment (University of Pennsylvania Press, Philadelphia, 2009).

Valstar, M. et al. AVEC 2014: 3D dimensional affect and depression recognition challenge. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, 3–10 (2014).

Senoussaoui, M., Sarria-Paja, M., Santos, J. F. & Falk, T. H. Model fusion for multimodal depression classification and level detection. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, 57–63 (2014).

Senoussaoui, M., Sarria-Paja, M., Santos, J. F. & Falk, T. H. Model fusion for multimodal depression classification and level detection. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, 57–63 (2014).

Jan, A., Meng, H., Gaus, Y. F. B. A. & Zhang, F. Artificial intelligent system for automatic depression level analysis through visual and vocal expressions. IEEE Transactions on Cognitive and Developmental Systems 10, 668–680 (2017).

He, L. & Cao, C. Automated depression analysis using convolutional neural networks from speech. Journal of Biomedical Informatics 83, 103–111 (2018).

Niu, M., Tao, J., Liu, B. & Fan, C. Automatic depression level detection via ℓp-norm pooling. In Proc. INTERSPEECH, 4559–4563 (Graz, Austria, 2019).

Niu, M., Tao, J., Liu, B., Huang, J. & Lian, Z. Multimodal spatiotemporal representation for automatic depression level detection. IEEE Transactions on Affective Computing 14, 294–307 (2020).

Zhao, Z. et al. Hybrid network feature extraction for depression assessment from speech. Neurocomputing (2020).

Niu, M., Liu, B., Tao, J. & Li, Q. A time-frequency channel attention and vectorization network for automatic depression level prediction. Neurocomputing 450, 208–218 (2021).

Williamson, J. R., Quatieri, T. F., Helfer, B. S., Ciccarelli, G. & Mehta, D. D. Vocal and facial biomarkers of depression based on motor incoordination and timing. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, 65–72 (2014).

Acknowledgements

The authors thank all participants, and Director Wang and Director Ding of Tianshui Third People's Hospital for clinical diagnosis. The research leading to these results was partly funded by the National Natural Science Foundation of China (Grant Nos. 62267008 and 62067008).

Author information

Contributions

Weitong Guo contributed to data curation, formal analysis, investigation, methodology, writing of the original draft, and review and editing. Qian He contributed to data curation, formal analysis, investigation, and writing of the original draft. Ziyu Lin contributed to data curation, formal analysis, and investigation. Xiaolong Bu, Ziyang Wang and Dong Li contributed to the experimental paradigm design, methodology, visualization, and resources. Hongwu Yang contributed to data curation, funding acquisition, investigation, project administration, resources, and review and editing. All authors reviewed the manuscript.

Ethics declarations

Competing interests

We declare that we have no financial or personal relationships with other people or organizations that could inappropriately influence our work, and that we have no professional or other personal interest of any nature or kind in any product, service, and/or company that could be construed as influencing the position presented in, or the review of, this manuscript.

Ethics approval and consent to participate

This study was conducted in accordance with the ethical standards specified by the Tianshui Third People’s Hospital in Gansu Province, and all procedures involving human participants were approved by the Tianshui Third People’s Hospital in Gansu Province. Written informed consent was obtained from all individual participants included in the study. For participants under the age of 18, written informed consent was also obtained from a parent or legal guardian.

Consent for publication

Participants were informed that their data would be used for research purposes and that results might be published. We have taken measures to ensure that participants’ identities are not disclosed in any published materials, including using anonymization techniques and removing personally identifiable information.

Compliance with ethical standards

The authors declare no conflicts of interest related to this research. This study complies with the current ethical standards for research involving human participants, as outlined in the Declaration of Helsinki. We have adhered to best practices in data protection, ensuring that all personal data collected is stored securely and access is restricted to authorized personnel only.

Risk assessment and mitigation

Prior to the commencement of the study, a thorough risk assessment was conducted to identify potential harm to participants. Based on this assessment, mitigation strategies were implemented to minimize risks. For example, participants were provided with detailed information about the study’s procedures, potential risks, and their rights as research subjects. Participants were also allowed to withdraw from the study at any time without penalty.

Data privacy and security

All data collected during the study was stored in a secure, password-protected database accessible only to authorized researchers. The data was anonymized to protect participants’ identities, and all personal identifiers were removed before analysis. Measures were taken to ensure that the data was handled in accordance with local data protection laws and regulations.

Fairness, accountability, and transparency

We acknowledge the importance of fairness, accountability, and transparency in AI research. Therefore, we have taken steps to ensure that our algorithms and models are designed to minimize bias and promote equitable outcomes. This includes conducting bias audits, implementing fairness constraints, and making our research methods and results publicly available to facilitate reproducibility and scrutiny by the wider research community.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Guo, W., He, Q., Lin, Z. et al. Enhancing depression recognition through a mixed expert model by integrating speaker-related and emotion-related features. Sci Rep 15, 4064 (2025). https://doi.org/10.1038/s41598-025-88313-9
