Text classification with Multi-Armed Bandit

What is a Multi-Armed Bandit?

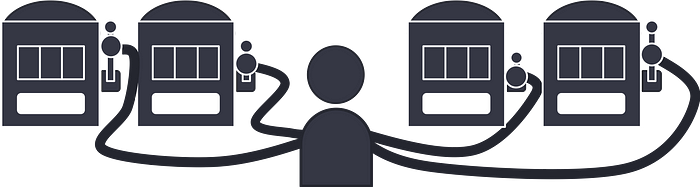

The Multi-Armed Bandit (MAB) is a classic reinforcement learning problem that captures the trade-off between exploration and exploitation in decision-making. The name comes from a gambling scenario in which a gambler must decide which of several slot machines, or “arms,” to play. Each arm pays out a reward drawn from an unknown probability distribution, and the gambler’s goal is to maximize the total reward over a given number of rounds.

In a MAB setting, each arm represents a decision that can be taken, and the reward corresponds to some measure of performance or utility. A bandit algorithm must balance exploring different arms to learn their expected rewards against exploiting what it has already learned to pick arms that are likely to pay well. In each round, the algorithm selects an arm according to some policy, observes a reward, and updates its estimate of that arm’s expected reward.
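The select, observe, update cycle described above can be sketched with simulated slot machines. This is a minimal illustration, not a reference implementation: the payout probabilities, the `epsilon_greedy` function name, and the exploration rate of 0.1 are all assumptions chosen for the example.

```python
import random

def epsilon_greedy(true_probs, rounds=5000, epsilon=0.1, seed=0):
    """Simulate epsilon-greedy on Bernoulli arms; return (counts, estimates)."""
    rng = random.Random(seed)
    n_arms = len(true_probs)
    counts = [0] * n_arms        # how often each arm was pulled
    estimates = [0.0] * n_arms   # running mean reward per arm

    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick a random arm
        else:
            # exploit: pick the arm with the highest estimate so far
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # The reward is 1 with the arm's (hidden) payout probability, else 0
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean: new = old + (reward - old) / n
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates

# Assumed payout probabilities for three hypothetical arms
counts, estimates = epsilon_greedy([0.1, 0.5, 0.9])
best_arm = max(range(len(counts)), key=lambda a: counts[a])
print("pulls per arm:", counts)
print("estimated rewards:", [round(v, 2) for v in estimates])
print("most-pulled arm:", best_arm)
```

Over enough rounds, most pulls should concentrate on the arm with the highest payout probability, while the small exploration rate keeps the estimates for the other arms from going stale.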

There are many algorithms for the Multi-Armed Bandit problem, each with a different policy for balancing exploration and exploitation. Common ones include epsilon-greedy, which picks a random arm with a small probability and the best-known arm otherwise; softmax, which picks arms with probability increasing in their estimated reward; and upper-confidence-bound (UCB), which favors arms whose estimates are still uncertain. The choice of algorithm depends on the specific problem and the desired exploration-exploitation trade-off.
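To make the difference between these policies concrete, here is a hedged sketch of the selection step for two of them. It uses the common UCB1 form of the confidence bound and a Boltzmann-style softmax; the function names and the default temperature are assumptions made for illustration.

```python
import math
import random

def ucb1_select(counts, estimates):
    """Pick the arm with the highest upper confidence bound (UCB1 form)."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm  # play every arm once before using the bound
    total = sum(counts)
    scores = [
        est + math.sqrt(2.0 * math.log(total) / n)  # estimate + exploration bonus
        for est, n in zip(estimates, counts)
    ]
    return max(range(len(scores)), key=lambda a: scores[a])

def softmax_probs(estimates, temperature=0.1):
    """Turn reward estimates into selection probabilities."""
    top = max(estimates)  # subtract the max for numerical stability
    weights = [math.exp((e - top) / temperature) for e in estimates]
    norm = sum(weights)
    return [w / norm for w in weights]

def softmax_select(estimates, temperature=0.1, rng=random):
    """Sample an arm index according to the softmax probabilities."""
    probs = softmax_probs(estimates, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# A rarely tried arm gets a large bonus, so UCB1 prefers it here
print(ucb1_select(counts=[100, 1], estimates=[0.5, 0.4]))
print(softmax_probs([0.2, 0.8]))
```

A lower temperature makes softmax behave more greedily, while a higher one spreads the probability more evenly across arms. UCB1 has no such knob in this form: its exploration bonus shrinks on its own as an arm is played more often.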

Text classification using Multi-Armed Bandit

Text classification with a multi-armed bandit is a machine learning approach for improving the performance of a text classification system over time. The idea is to treat each candidate classifier as an arm and to balance exploring the different classifiers against exploiting the one that has performed best so far.

Here’s a general outline of how text classification with a multi-armed bandit could be implemented:

- Preprocessing: Clean the text data, convert it into numerical representations (e.g., bag-of-words or TF-IDF vectors), and split the data into training and testing sets.

- Define the classifiers: Choose a set of classifiers that you want to use, such as support vector machine (SVM), k-nearest neighbors (KNN), or decision tree, and initialize their parameters.

- Initialize the multi-armed bandit algorithm: Choose a multi-armed bandit algorithm, such as Upper Confidence Bound (UCB) or Thompson Sampling, and initialize its parameters, such as the number of arms (one per classifier), the exploration rate, and the initial reward estimates.

- Loop through the text data: In each iteration, select an arm using the multi-armed bandit algorithm and train the corresponding classifier on the training data. Evaluate the classifier on held-out data (ideally a validation split, so that the test set stays untouched for the final evaluation) and update the reward estimate for the selected arm.

- Update the parameters: Update the parameters of the multi-armed bandit algorithm and the classifiers based on the rewards obtained in the previous step.

- Repeat: Repeat the loop and update steps for a specified number of iterations or until a stopping criterion is met.

- Select the best classifier: Select the classifier that performed best overall and use it for future predictions.
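The outline above can be sketched end to end in a few dozen lines. Everything here is an assumption made for illustration: the toy corpus is invented, the three classifiers are tiny pure-Python stand-ins rather than SVM, KNN, or decision-tree implementations, the bandit uses the UCB1 form of the bound, and the reward is accuracy on a small held-out split after training on a bootstrap sample.

```python
import math
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration only.
DATA = [
    ("the team won the match", "sports"),
    ("a late goal decided the game", "sports"),
    ("the coach praised the players", "sports"),
    ("fans cheered the winning team", "sports"),
    ("the striker scored a goal", "sports"),
    ("the match ended in a draw", "sports"),
    ("the new phone has a fast chip", "tech"),
    ("the laptop runs the latest software", "tech"),
    ("developers released a software update", "tech"),
    ("the chip improves battery life", "tech"),
    ("the app update fixed a bug", "tech"),
    ("engineers tested the new laptop", "tech"),
]

def tokenize(text):
    return text.lower().split()

class MajorityClass:
    """Baseline arm: always predicts the most frequent training label."""
    def fit(self, docs):
        self.label = Counter(lbl for _, lbl in docs).most_common(1)[0][0]
    def predict(self, text):
        return self.label

class NaiveBayes:
    """Multinomial naive Bayes over bag-of-words counts, add-one smoothing."""
    def fit(self, docs):
        self.word_counts = defaultdict(Counter)
        self.doc_counts = Counter()
        for text, lbl in docs:
            self.doc_counts[lbl] += 1
            self.word_counts[lbl].update(tokenize(text))
        self.vocab_size = len({w for c in self.word_counts.values() for w in c})
    def predict(self, text):
        def score(lbl):
            total = sum(self.word_counts[lbl].values()) + self.vocab_size
            s = math.log(self.doc_counts[lbl])  # unnormalized class prior
            for w in tokenize(text):
                s += math.log((self.word_counts[lbl][w] + 1) / total)
            return s
        return max(self.doc_counts, key=score)

class NearestNeighbor:
    """1-nearest-neighbor arm using word-overlap (Jaccard) similarity."""
    def fit(self, docs):
        self.docs = [(set(tokenize(t)), lbl) for t, lbl in docs]
    def predict(self, text):
        words = set(tokenize(text))
        def sim(item):
            return len(words & item[0]) / len(words | item[0])
        return max(self.docs, key=sim)[1]

def run_bandit(rounds=200, seed=0):
    rng = random.Random(seed)
    docs = DATA[:]
    rng.shuffle(docs)
    train, held_out = docs[:8], docs[8:]
    arms = [MajorityClass(), NaiveBayes(), NearestNeighbor()]
    counts = [0] * len(arms)        # pulls per classifier
    estimates = [0.0] * len(arms)   # running mean reward per classifier
    for t in range(rounds):
        if t < len(arms):
            arm = t  # try every classifier once first
        else:
            total = sum(counts)
            arm = max(range(len(arms)), key=lambda a: estimates[a]
                      + math.sqrt(2 * math.log(total) / counts[a]))
        # Train the chosen classifier on a bootstrap sample of the training set
        sample = [rng.choice(train) for _ in train]
        arms[arm].fit(sample)
        # Reward: accuracy of the chosen classifier on the held-out documents
        correct = sum(arms[arm].predict(x) == y for x, y in held_out)
        reward = correct / len(held_out)
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    best = max(range(len(arms)), key=lambda a: estimates[a])
    return type(arms[best]).__name__, counts, estimates

best_name, counts, estimates = run_bandit()
print("selected classifier:", best_name)
print("pulls:", counts, "mean rewards:", [round(e, 2) for e in estimates])
```

Training on a bootstrap sample is what makes the reward vary from round to round in this sketch; with a fixed training set and a deterministic classifier, every pull of an arm would return the same accuracy and a single evaluation per classifier would be enough. On a corpus this small the outcome is mostly noise, so the sketch shows the mechanics only.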

Both the choice of multi-armed bandit algorithm and the choice of classifiers can have a significant impact on the performance of the text classification system. It is therefore worth evaluating different algorithms carefully and tuning their parameters to obtain the best results.
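As one way to compare bandit algorithms, here is a hedged sketch of Thompson Sampling, the other algorithm named in the outline, in its Beta-Bernoulli form. It assumes a binary reward (for example, 1 if a classifier labels a document correctly and 0 otherwise); the success probabilities and function names are made up for the simulation.

```python
import random

def thompson_select(successes, failures, rng=random):
    """Sample a plausible success rate per arm from Beta(s+1, f+1); pick the max."""
    samples = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
    return max(range(len(samples)), key=lambda a: samples[a])

def simulate_thompson(true_probs, rounds=3000, seed=0):
    """Run Thompson Sampling on simulated arms with binary rewards."""
    rng = random.Random(seed)
    successes = [0] * len(true_probs)
    failures = [0] * len(true_probs)
    for _ in range(rounds):
        arm = thompson_select(successes, failures, rng)
        # Binary reward, e.g. "the chosen classifier got this document right"
        if rng.random() < true_probs[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return successes, failures

# Assumed per-classifier accuracies for three hypothetical arms
successes, failures = simulate_thompson([0.3, 0.5, 0.8])
pulls = [s + f for s, f in zip(successes, failures)]
print("pulls per arm:", pulls)
```

Unlike epsilon-greedy, this approach has no exploration rate to tune: arms with few observations have wide Beta distributions and are sampled optimistically from time to time, and that exploration fades as evidence accumulates.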