In the ever-evolving landscape of Machine Learning, scaling plays a pivotal role in refining the performance and robustness of models. Among the multitude of techniques available to enhance the efficacy of Machine Learning algorithms, feature scaling stands out as a fundamental process. In this comprehensive article, we delve into the depths of feature scaling in Machine Learning, uncovering its importance, methods, and advantages while showcasing practical examples using Python.

Understanding Feature Scaling in Machine Learning:

Feature scaling is the process of normalizing or standardizing the numerical features in a dataset so that they are on a similar scale. It matters because many Machine Learning algorithms perform better when features are approximately on the same scale.

Let’s give a simplified explanation for this:

Let’s say that in a dataset, we have the weight of men ranging from 15 kg to 50 kg. Min-max scaling maps this range onto the interval from 0 to 1, where 0 corresponds to the lowest value (15 kg) and 1 to the highest (50 kg).
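To make the weight example concrete, here is a minimal sketch of min-max scaling in plain Python. The individual weights and the helper name `min_max_scale` are hypothetical, chosen only so that the range runs from 15 kg to 50 kg.

```python
def min_max_scale(values):
    """Map values linearly onto [0, 1] using their own minimum and maximum."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max scaling is undefined when all values are equal")
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical weights in kilograms, spanning the 15 kg to 50 kg range.
weights_kg = [15, 22, 32.5, 50]
scaled = min_max_scale(weights_kg)
print(scaled)  # [0.0, 0.2, 0.5, 1.0]
```

The lowest weight maps to 0, the highest to 1, and everything else lands proportionally in between.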

Key Attributes of Feature Scaling in Machine Learning:

1. Uniform Feature Magnitudes for Optimal Learning

At the heart of scaling lies the principle of achieving uniformity in feature magnitudes. Scaling ensures that all features contribute proportionally to the learning process, preventing any one feature from dominating the algorithm’s behavior. This feature equality fosters an environment where the algorithm can discern patterns and relationships accurately across all dimensions of the data.

2. Algorithm Sensitivity Harmonization

Machine Learning algorithms often hinge on distance calculations or similarity measures. Features with divergent scales can distort these calculations, leading to biased outcomes. Scaling steps in as a guardian, harmonizing the scales and ensuring that algorithms treat each feature fairly. This harmonization is particularly critical in algorithms such as k-Nearest Neighbors and Support Vector Machines, where distances dictate decisions.
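The effect on distance calculations can be sketched with three made-up people described by age and income. The data, the assumed feature ranges (`AGE_RANGE`, `INCOME_RANGE`) and the helper names are all hypothetical; the point is only that the nearest neighbour of A changes once both features share a scale.

```python
import math

# Hypothetical records: (age in years, income in dollars).
people = {"A": (25, 50_000), "B": (60, 50_500), "C": (26, 52_000)}

# Assumed known ranges for each feature, used for min-max scaling.
AGE_RANGE = (20, 70)
INCOME_RANGE = (20_000, 120_000)


def scale(point):
    """Min-max scale one (age, income) pair using the assumed ranges."""
    age, income = point
    return (
        (age - AGE_RANGE[0]) / (AGE_RANGE[1] - AGE_RANGE[0]),
        (income - INCOME_RANGE[0]) / (INCOME_RANGE[1] - INCOME_RANGE[0]),
    )


def nearest_to_a(points):
    """Return the label of the point closest to A by Euclidean distance."""
    others = {label: p for label, p in points.items() if label != "A"}
    return min(others, key=lambda label: math.dist(points["A"], others[label]))


nearest_raw = nearest_to_a(people)
nearest_scaled = nearest_to_a({label: scale(p) for label, p in people.items()})
print(nearest_raw, nearest_scaled)  # B C
```

On the raw data, income differences swamp the 35-year age gap, so B looks closest to A. After scaling, C, who is nearly the same age and has a similar income, becomes the nearest neighbour.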

3. Convergence Enhancement and Faster Optimization

Scaling expedites the convergence of optimization algorithms. Techniques like gradient descent converge faster when operating on features with standardized scales. The even playing field offered by scaling empowers optimization routines to traverse the solution space efficiently, reducing the time and iterations required for convergence.
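As a rough illustration of the convergence claim, the sketch below runs plain gradient descent on a tiny noise-free linear regression, once with features that are only centered and once with standardized features. The data, the step-size rule (one over the trace of the Hessian) and the stopping tolerance are assumptions of this example, not a recommended training setup.

```python
import statistics


def center(values):
    mean = statistics.fmean(values)
    return [v - mean for v in values]


def standardize(values):
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def gd_steps(x1, x2, y, tol=1e-9, max_steps=1_000_000):
    """Count gradient-descent steps until half the mean squared error is below tol."""
    n = len(y)
    # Step size 1 / trace(Hessian): a simple, always-stable choice (assumption).
    lr = 1.0 / (sum(a * a for a in x1) / n + sum(b * b for b in x2) / n)
    w1 = w2 = 0.0
    for step in range(max_steps):
        residuals = [w1 * a + w2 * b - t for a, b, t in zip(x1, x2, y)]
        loss = 0.5 * sum(r * r for r in residuals) / n
        if loss < tol:
            return step
        w1 -= lr * sum(r * a for r, a in zip(residuals, x1)) / n
        w2 -= lr * sum(r * b for r, b in zip(residuals, x2)) / n
    return max_steps


# Hypothetical grid data: x1 spans 0..4, x2 spans 0..40 (ten times larger).
raw_x1 = [float(i % 5) for i in range(25)]
raw_x2 = [10.0 * (i // 5) for i in range(25)]
target = center([3.0 * a + 0.5 * b for a, b in zip(raw_x1, raw_x2)])

steps_unscaled = gd_steps(center(raw_x1), center(raw_x2), target)
steps_scaled = gd_steps(standardize(raw_x1), standardize(raw_x2), target)
print(steps_unscaled, steps_scaled)
```

Both runs fit the same targets to the same loss threshold; only the feature scales differ. The standardized run should need far fewer steps, because the loss surface is no longer stretched along the large-scale feature.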

4. Robustness Against Outliers

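Scaling changes how outliers affect a model, but the effect depends on the method. Min-max normalization is highly sensitive to outliers, because a single extreme value sets the range and compresses every other value into a narrow band. Standardization is often considered less affected since it does not bound values to a fixed range, yet the mean and standard deviation it relies on are still pulled by extreme values. When outliers are a concern, inspect or treat them before scaling rather than relying on scaling alone to tame them.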
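As a caveat worth checking, the sketch below applies both min-max normalization and standardization to a small made-up sample containing one extreme value. The data and helper names are hypothetical. It suggests that an outlier still shifts the mean and standard deviation, so standardization softens its influence rather than removing it.

```python
import statistics


def min_max_scale(values):
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(v - mean) / std for v in values]


# Hypothetical sample: five ordinary values and one outlier at the end.
values = [10, 12, 14, 16, 18, 1000]

normalized = min_max_scale(values)
standardized = standardize(values)

# How much of the scaled range is left for the five ordinary values?
inlier_spread_minmax = max(normalized[:-1]) - min(normalized[:-1])
inlier_spread_zscore = max(standardized[:-1]) - min(standardized[:-1])
outlier_z = standardized[-1]

print(inlier_spread_minmax, inlier_spread_zscore, outlier_z)
```

In both cases the ordinary values end up squeezed close together, while the outlier remains more than two standard deviations from the mean after standardization.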

5. Model Interpretability and Transparency

Scaled features facilitate model interpretability. The common scale allows practitioners to discern the impact of each feature on predictions more clearly. Interpretable models build trust, enabling stakeholders to understand the rationale behind decisions and glean actionable insights from the model’s outputs.

6. Effective Regularization and Complexity Control

Scaling lays the foundation for effective regularization. Techniques like L1 and L2 regularization, designed to manage model complexity, penalize the magnitude of the model's coefficients, and those magnitudes depend on the units of each feature. Scaling ensures that regularization operates uniformly across all features, preventing any single feature from dominating the regularization process.
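The dependence of regularization on feature units can be sketched with one-feature ridge (L2) regression, which has a simple closed-form solution when no intercept is fitted. The data, the penalty strength `lam` and the function names are hypothetical; the no-intercept setup is an assumption made to keep the example short.

```python
import math
import statistics


def ridge_weight(x, y, lam):
    """Closed-form ridge solution for a single feature and no intercept."""
    return sum(a * b for a, b in zip(x, y)) / (sum(a * a for a in x) + lam)


def standardize(values):
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


distance_m = [1.0, 2.0, 3.0, 4.0]               # hypothetical feature in metres
distance_km = [d / 1000 for d in distance_m]    # the same feature in kilometres
y = [2.0, 4.0, 6.0, 8.0]                        # target: y = 2 * distance_m
lam = 10.0                                      # same penalty in both fits

w_m = ridge_weight(distance_m, y, lam)
w_km = ridge_weight(distance_km, y, lam)

# Prediction for the last sample (true value 8.0) under each choice of units.
pred_m = w_m * distance_m[-1]
pred_km = w_km * distance_km[-1]
print(pred_m, pred_km)

# After standardization the two versions of the feature are identical.
same_after_scaling = all(
    math.isclose(a, b, abs_tol=1e-9)
    for a, b in zip(standardize(distance_m), standardize(distance_km))
)
print(same_after_scaling)
```

With the same penalty, the fit in metres is shrunk moderately while the fit in kilometres is shrunk almost to zero, even though the underlying information is identical. Standardizing first removes this dependence on units.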

7. Stable and Consistent Model Behavior

Scaled features promote stability and consistency in model behavior. When input data exhibits varied scales, small changes in one feature might lead to erratic shifts in model predictions. Scaling mitigates this instability, resulting in more dependable and predictable model outcomes.

8. Reduction of Dimensionality Bias

In high-dimensional datasets, features with larger scales can inadvertently receive higher importance during dimensionality reduction techniques like Principal Component Analysis (PCA). Scaling prevents this bias, allowing PCA to capture the true variance in the data and extract meaningful components.
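The PCA bias can be sketched on two-dimensional data, where the first principal component follows from the closed-form eigen-decomposition of the 2x2 covariance matrix. The data and function names are hypothetical, and the closed form used here assumes the two features are correlated (non-zero covariance).

```python
import math
import statistics


def standardize(values):
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def first_component(xs, ys):
    """Unit-length first principal axis of 2-D data (assumes non-zero covariance)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    a = statistics.pvariance(xs)
    c = statistics.pvariance(ys)
    b = statistics.fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    # Largest eigenvalue of the covariance matrix [[a, b], [b, c]].
    lam = (a + c) / 2 + math.hypot((a - c) / 2, b)
    # A matching eigenvector is (b, lam - a); normalize it to unit length.
    vx, vy = b, lam - a
    norm = math.hypot(vx, vy)
    return vx / norm, vy / norm


# Hypothetical correlated features on very different scales.
small = [1.0, 2.0, 3.0, 4.0, 5.0]
large = [1100.0, 1900.0, 3200.0, 3900.0, 5100.0]

pc_raw = first_component(small, large)
pc_scaled = first_component(standardize(small), standardize(large))
print(pc_raw, pc_scaled)
```

On the raw data the first component points almost entirely along the large-scale feature. After standardization both features load on it about equally, which reflects their strong correlation rather than their units.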

Key Application:

The application of feature scaling is rooted in several key reasons:

- Alleviating Algorithm Sensitivity

Many Machine Learning algorithms are sensitive to the scale of input features. Without scaling, algorithms such as k-Nearest Neighbors (k-NN), Support Vector Machines (SVMs), and clustering techniques might not give accurate results as they calculate distances or similarities between data points.

- Accelerating Convergence

Feature scaling can significantly speed up the convergence of iterative optimization algorithms, such as gradient descent. By normalizing or standardizing features, the optimization process becomes more stable and efficient.

- Enhancing Model Performance

Scaling helps improve the performance of models by ensuring that no single feature dominates the learning process. This balance in influence contributes to more accurate predictions.

Advantages of Feature Scaling:

The advantages of employing feature scaling are multifaceted:

- Enhanced Model Convergence and Efficiency

Feature scaling paves the path to faster and more efficient convergence during the training of Machine Learning algorithms. Optimization techniques, such as gradient descent, tend to converge more swiftly when features are on a comparable scale. Scaling assists these algorithms in navigating the optimization landscape more effectively, thereby reducing the time required for model training.

- Algorithm Sensitivity Mitigation

Many Machine Learning algorithms rely on distance calculations or similarity metrics. Features with differing scales can unduly influence these calculations, potentially leading to suboptimal results. By scaling features to a standardized range, we mitigate the risk of certain features overpowering others and ensure that the algorithm treats all features equitably, thus enhancing the overall performance and fairness of the model.

- Robustness to Outliers

Scaling techniques, particularly standardization, are often preferred when data contains outliers, which can disproportionately impact algorithms like linear regression. Standardization does not squeeze the remaining values into a fixed range the way min-max normalization does. However, the mean and standard deviation are themselves influenced by extreme values, so outliers should still be examined rather than assumed to be neutralized.

- Equitable Feature Contributions

Feature scaling levels the playing field for all features in a dataset. When features have disparate scales, those with larger scales may dominate the learning process and overshadow the contributions of other features. Scaling ensures that each feature contributes proportionally to the model’s performance, promoting a balanced and accurate representation of the data.

- Improved Model Interpretability

Scaled features lead to improved model interpretability. When features are on a common scale, it becomes easier to understand and compare their respective contributions to predictions. This interpretability is particularly valuable in scenarios where explaining model outputs is essential for decision-making.

- Optimized Regularization Performance

Regularization techniques, such as L1 and L2 regularization, are more effective when features are scaled. Scaling prevents any single feature from dominating the regularization process, allowing these techniques to apply the desired level of constraint to all features uniformly. As a result, the model achieves better control over complexity, leading to improved generalization.

- Stable Model Behavior

Feature scaling promotes stable and consistent model behavior. With scaled features, the model’s predictions become less sensitive to variations in the input data, reducing the likelihood of erratic outputs. This stability enhances the model’s reliability and trustworthiness.

Methods of Scaling in Machine Learning:

Two prominent methods of scaling are Normalization and Standardization:

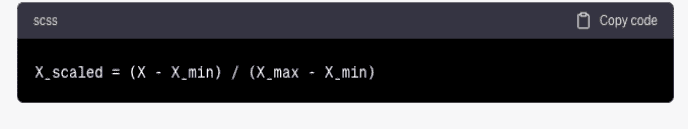

- Feature Scaling Data Normalization: Min-Max Scaling

Normalization transforms features to a range between 0 and 1. The formula for normalization is:

$$X_{\text{scaled}} = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

where X is the original feature value, X_min is the minimum value of the feature, and X_max is the maximum value of the feature.

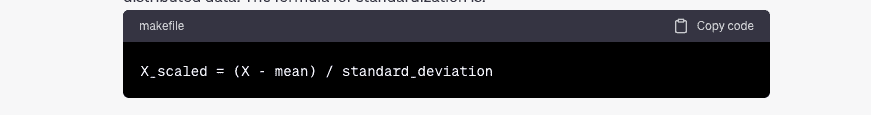

- Feature Scaling Data Standardization: Z-Score Scaling

Standardization scales each feature to have a mean of 0 and a standard deviation of 1. The formula for standardization is:

$$X_{\text{scaled}} = \frac{X - \operatorname{mean}(X)}{\operatorname{std}(X)}$$

where X is the original feature value, mean(X) is the mean of the feature, and std(X) is the standard deviation of the feature.
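The standardization formula can be sketched in a few lines of plain Python. The sample values and the helper name `z_score_scale` are hypothetical, and the population standard deviation is an assumption of this sketch (it is also the convention scikit-learn's `StandardScaler` follows).

```python
import statistics


def z_score_scale(values):
    """Standardize values to mean 0 and (population) standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("standardization is undefined for a constant feature")
    return [(v - mean) / std for v in values]


# Hypothetical feature values with mean 5 and standard deviation 2.
values = [2, 4, 4, 4, 5, 5, 7, 9]
z_scores = z_score_scale(values)
print(z_scores)  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

Each result reads as "how many standard deviations from the mean", so a value of 9 becomes 2.0 and a value of 2 becomes -1.5.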

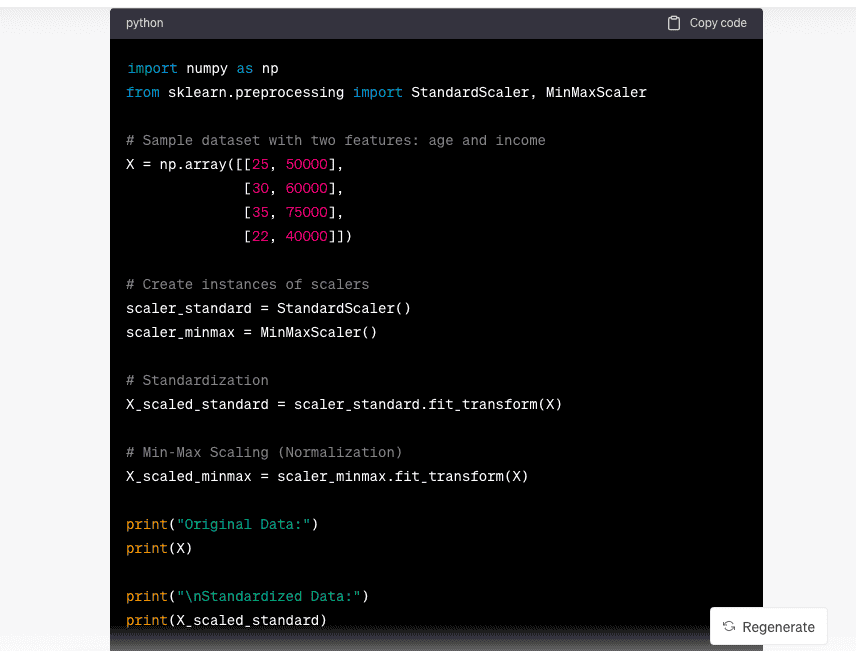

Feature Scaling in Python:

Feature scaling in Machine Learning refers to the process of standardizing or normalizing the numerical features in a dataset so that they are on a similar scale. Scaling is essential because many Machine Learning algorithms perform better when features are approximately on the same scale. In Python, the scikit-learn library provides ready-made scalers for both normalization and standardization.
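A minimal sketch with scikit-learn might look like the following. The age and income values are hypothetical. `MinMaxScaler` and `StandardScaler` come from `sklearn.preprocessing`; fitting on the training data only and reusing the fitted scaler on new data is a common practice that avoids leaking information from the test set.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical data: columns are age (years) and income (dollars).
X_train = np.array(
    [[25, 50_000], [35, 64_000], [45, 58_000], [55, 120_000]], dtype=float
)
X_test = np.array([[30, 70_000]], dtype=float)

# Normalization: each training column is mapped onto [0, 1].
min_max = MinMaxScaler()
X_train_norm = min_max.fit_transform(X_train)
X_test_norm = min_max.transform(X_test)  # reuse the training min and max

# Standardization: each training column gets mean 0 and standard deviation 1.
standard = StandardScaler()
X_train_std = standard.fit_transform(X_train)
X_test_std = standard.transform(X_test)  # reuse the training mean and std

print(X_train_norm)
print(X_train_std)
```

Both scalers work column by column, so age and income are each scaled using their own statistics.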

Embracing the Significance of Scaling: The Path to Model Excellence:

Feature scaling, the unsung hero of Machine Learning preprocessing, plays an integral role in shaping model performance and robustness. By harmonizing feature scales, normalization and standardization empower Machine Learning algorithms to operate optimally, laying the groundwork for more accurate predictions and data-driven insights.

Armed with an understanding of feature scaling methods and their implications, you are poised to unlock the full potential of your Machine Learning endeavors and propel your models to new heights of excellence.

The Way Ahead

With all this and much more, Machine Learning is a powerful technology. Having expertise in this domain will give you an edge over your competitors. To start your learning journey in Machine Learning, you can opt for a free course in ML. This course will help in developing your fundamentals in ML, and later you can pursue a full-time course to further sharpen your skills.
