A Beginner’s Guide to Data Science

How I learned to stop worrying and love the field

This blog covers all the core themes to starting your career in data science:

🧭 Exploration vs Exploitation

🤓 Getting a theoretical edge

💼 Building your professional portfolio

🔥 Learning how to tell stories with data

❤️ Getting the pulse of the community

The demand for data scientists has been steadily rising in the past decade, with industries like retail, medicine and finance claiming the lion’s share. Based on current predictions (enabled by data science), this trend will continue, as more and more industries shift towards data-driven and automated solutions.

This frenzy has not lowered the entry barrier for a career in data science. Solid theoretical background in statistics and machine learning, experience with state-of-the-art deep learning algorithms, expert command of tools for data pre-processing, database management and visualisation, creativity and story-telling abilities, communication and team-building skills, familiarity with the industry. A typical job description in data science seems to be describing a Renaissance polymath, rather than a fresh graduate in the twenty-first century job market.

High expectations may be particularly daunting for someone about to start their career.

How can you be certain that you possess the skills necessary for a smooth and fruitful career while avoiding spending more time and effort than necessary?

🧭 Exploration vs Exploitation

At its core, this question features the, famous in the machine learning community, exploration versus exploitation dilemma. Whenever faced with a new problem, a learner, be it a human, animal or AI algorithm, needs to divide their efforts between two tasks: explore the world, in order to collect all the information necessary and, exploit their knowledge by using it to solve the problem. The learner’s dilemma is then continuously present on their training path: how do you know if this is the right moment to stop exploring and take your chances at the world?

This post aims to provide an overview of learning tools that are worthy to be part of your path towards becoming a data scientist.

This path aims towards two, equally important objectives: getting the skills most desired in the foreseeable future (which is rather short in data science) and developing the abilities necessary to become independent and evolvable enough to adjust to changes that may come your way (with probability 99%).

The learning dilemma does not have a solution, especially for a field as changing and complex as that of data science. For this reason, this post does not feature a foolproof list of the (insert arbitrary number) steps that will make you a successful data scientist. Instead, we gauge the community to find the tools that have dominated it and its opinion on important open questions in the field.

Your data science career is your personal matter. However, it can be fun and helpful to ask your community: How did you go about this?

And then go your own way.

🤓 Getting a theoretical edge

Experience by itself teaches nothing…Without theory, experience has no meaning. Without theory, one has no questions to ask. Hence without theory there is no learning. — W. Edwards Deming

One of the realisations that one experiences when moving from learning to doing, is that data science is more about finding the right questions rather than the right answers.

While you have probably heard that getting your hands dirty with data from early on is important, you may may unaware of the practical effects of theory:

- A good grasp of theory allows you to know the questions meaningful to be asking. Choices in the data collection process and assumptions made by your statistical tools are acting as constraints on the answers within your grasp. Is the sample big enough to reach the desired confidence level? Does confounding in the dataset prohibit you from establishing correlation? Do the assumptions about the data distribution agree with the real distribution?

- Knowledge of the theoretical model behind a machine learning model can make tuning less frustrating and quicker. The deep learning community has many secrets, the most prominent probably being that 90% of training a machine learning model is spent on everything else but training.

Being able to foresee the feasibility of your plans and tuning your models intelligently rather than randomly e can save you, your employer and the environment, valuable resources. This is particularly important for data science, where timelessness and efficiency matter.

Theoretical knowledge is to be sought in two very different places, that can be used complementary, books and online courses.

📕 Books

Theoretical books are a good source of self-contained knowledge that you can study at your own pace. Here’ some that stand out in my readings:

- An Introduction to Statistical Learning is a great short beginner’s handbook. Do not feel obliged to through the programming examples in R though, as you may prefer other languages and books.

- The Elements of Statistical Learning offers a deeper dive into the field.

- To anyone working on deep learning, Deep Learning is a bible. Although the field is progressing quickly, the content in this book will offer you familiarity with all concepts necessary to understand the state-of-the-art.

Bonus point: well-established scientific books like the ones mentioned above have been made available for free by their authors in an online format.

👩🏫 Courses

Online courses can offer you the curriculum you are seeking. These are the ones I followed during my Master internship, the point at which I became keenly interested in machine learning:

- Andrew’s NG ML course on coursera, With close to 5 million students, this is the most cult online course in the field. Do not be appalled by the fact that all code is in Matlab; it can be easily transferred to other high-level languages and the important bit is that you get to implement the algorithms yourself.

- Caltech’s course Learning from Data by Yaser ABu Mostafa is a must for the theoretically inclined.

💼 Building your professional portfolio

If you are at this point on your learning path that you are wondering if it is time to start writing code, then it is.

Although theoretical knowledge is your foundation, it is effectively invisible to your prospective interviewers. Your professional portfolio is the means by which you can prove, both to you and your employer, that data science is rightfully your trade.

Building your portfolio is a continuous process, during which you will get to know, reject and learn to love different tools that the software community has built for supporting it self. While this list is open-ended, here’s a good starter kit:

🗃 Stay organised with version control

The software projects in your portfolio are less like the Gioconta and more like London’s Tower Bridge. They are not made to be placed somewhere and admired for the years to come: they are living artefacts that you (and hopefully other people) will keep supporting and extending when needs evolve.

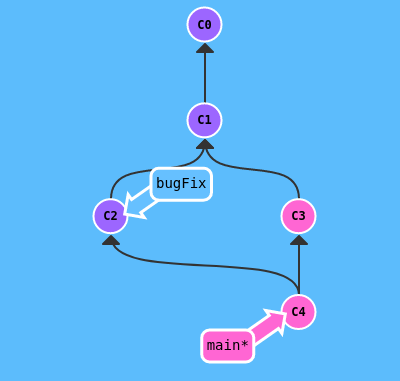

This need for continuous improvement and collaboration makes version control a necessity. While there are a couple of alternatives, Git is the most popular version control tool and undoubtedly the place to start.

Git is primarily used for coordinating work among multiple programmers working on the same project, but this does not make irrelevant for you portfolio if you are the sole contributor. As a PhD student working solo on most of my software projects, Git has proven invaluable in its ability to help me restore previous versions of files, keeping multiple versions and logging progress.

Disclaimer: version control can become quite complex. You certainly don’t need to master all its possibilities, but learning the basics from the start can be very useful. I recommend starting your journey with Git with learngitbranching, a graphical and intuitive walkthrough tutorial, and then jumping right on using it for your own projects.

🦚Become visible

Software development platforms originated as tools for the software community to develop and freely share code on the internet. For you, a profile in this kind of platform is important, as it serves as a pointer to all your software projects. On your CV, it is equally important to your email and more vital than your LinkedIn profile.

There’s a variety of platforms out there, the most popular being GitLab and GitHub. The former is up-and-coming and truly open-source, but GitHub is currently the most popular choice. This probably makes it the place to start, but it could a matter of months or years for this to change.

Bonus: along with your GitHub profile, you get free access to GitHub pages, a free hosting service for your website that many developers you as a blog to showcase their work.

📄 Find the right dataset

As with many other things like garbage and people, our society is very good at producing data, but not well-adapted enough to process them. This is not surprising, as in order to extract value from a dataset, one needs to take into account shortcomings and biases of the data collection process, handle missing entries and understand the features.

For this reason, places that curate and open-source datasets are valuable for the community:

- Kaggle is more of an establishment in data science rather than a mere repository, hosting numerous competitions and a blogpost. It’s definitely the place to start

- The UCI ML repository has a smaller but very well curated collection that may be under the radar of other developers.

For more dataset sources take a look at this curated list.

Datasets are the raw material of your projects. When picking one for your next project you may want to go through the following questions:

- Is it related to an industry field important to your job search? Adjusting the dataset to match the interests of your prospective employers may make you stand out.

- Does the subject excite you? Sometimes the best way to get your interviewers interested is to be yourself genuinely interested in the subject.

- Is the dataset information rich? Ultimately, the questions you can answer are constrained by the data. Datasets in the forms of multiple tables can help you be more creative in the questions you can ask compared to datasets that simply have a large number of examples.

🏛️ Stand the test of time

It takes all the running you can do, to keep in the same place. ― Lewis Carroll, Alice Through the Looking Glass

The world of software is constantly evolving, with new versions of libraries being released every few months and older versions becoming outdated. This means that a GitHub project that worked perfectly the day of your last commit, may give you an incomprehensible error the next time you try to run it.

How can you “freeze” all the dependencies around your own code so that one can easily run it no matter how much time has passed?

This is where environment management tools comes to play:

🏡 Environment management

Exclusively for python projects, CONDA makes a screenshot of a project’s packages and their versions in the form of a virtual environment. To use it, one needs to install all listed packages from scratch.

Docker wraps software in containers., which can be seen as independent virtual operating systems. Software in the container can be used without installation.

Docker seems to be the tool of choice for commercial purposes, but, wandering around research-oriented GitHub projects myself, I most often encounter CONDA environments. The choice is ultimately up to you and is not very crucial, as, ultimately, you will adjust to the tools of choice of your workplace.

👧🔥👦 Learning how to tell stories with data

“Data scientists are involved with gathering data, massaging it into a tractable form, making it tell its story, and presenting that story to others.”

— Mike Loukides

Your software projects do not speak for themselves. While they serve as a proof of your technical skills, they do not have the expressive power to convey the exciting messages you discovered in your dataset. Your future interviewers, blog readers and friends, are not software interpreters but people, which are notoriously known for being attracted to stories.

Luckily, a data science project has great story-telling potential. Regardless of the form you choose to present it, be it a report, a Jupyter notebook or a presentation, your story needs to revolve less around numbers and more around visuals.

You can see your visuals as belonging to one of these two broad categories:

- plots for displaying statistical analysis. In this case, the most important step is picking the type of plot, such as a pie chart or a bar plot. Making the right choice is surprisingly hard; it all boils down to figuring out what works for the type of data you have at hand, the message you want to convey and the type of audience. Execution is also important: there are many packages that automatically optimise for aesthetics, like seaborn, matplotlib and pyplot for Python. You may want to also checkout packages in R, a community primarily consisting of statisticians, that may offer you more out-of-the-box options.

- charts and diagrams. A variety of tools, like draw.io, Lucidchart and Inkscape can help you nail down that flow chart that will clearly explain your ML pipeline, without looking like it came from the ‘80s.

Bonus material: many things have been written about the power of visuals to influence readers. Take a look at the book How to lie with maps and listen to what the community has to say through blogposts.

❤️Getting the pulse of the community

Data science is one of those fields that tends to attract people passionate about their work. Beyond mastering current tools and solving real-world problems, we want to gauge the story behind the ideas and techniques.

Podcasts are a great way to get the pulse of the current culture. Some of my favourites are:

- Partially derivative focuses on statistics and data science.

- The Lex Fridman Podcast features conversations with amazing researchers in the field of AI and beyond.

- Brain Inspired is a neuroscience podcast, for those excited by the link between artificial and biological networks.

There’s many more out there so pick whatever puts a smile on your face during those long commuting hours.

Finally, in a field progressing at the dizzying speed that data science does, having a historical perspective is not just an intellectual luxury, but can help you tell the difference between passing trends and hype so that you can focus your development on what matters in the long-term.

Some of my personal favourite historical moments in AI are:

- Alan Turing asking in the ’50s if machines can think.

- Marvin Minsky attacking perceptrons in the 80s, a view believed to have contributed to the first AI winter.

- John Searle attacking Strong AI and giving us the Chinese experiment, which is used even today to talk about the limitations of AI.

- Yoshua’s Bengio keynote at Neurips 2020 that urged the AI community to move from System 1 to System 2 AI.

Applied Data Science Partners is a London based consultancy that implements end-to-end data science solutions for businesses, delivering measurable value. If you’re looking to do more with your data, please get in touch via our website. Follow us on LinkedIn for more AI and data science stories!