The Hand-icap of AI Art: Exploring the intricate challenge of drawing hands

Getting a grip on the technical and anatomical factors behind AI-generated hand drawings. This story mainly targets developers interested in AI-generated art.

Artificial intelligence has made significant advances in recent years, enabling the creation of stunning artwork and digital designs. However, there is still a long way to go before AI can flawlessly replicate the intricacies of human-drawn art, especially when it comes to drawing hands. In this article, I explore the technical reasons behind this challenge and also delve (briefly) into the way our brains process information about human features, to better understand the complexity of hand drawings and how we perceive them. I’ll also highlight opportunities for engineers to improve AI-generated hand drawings using open-source projects and novel techniques.

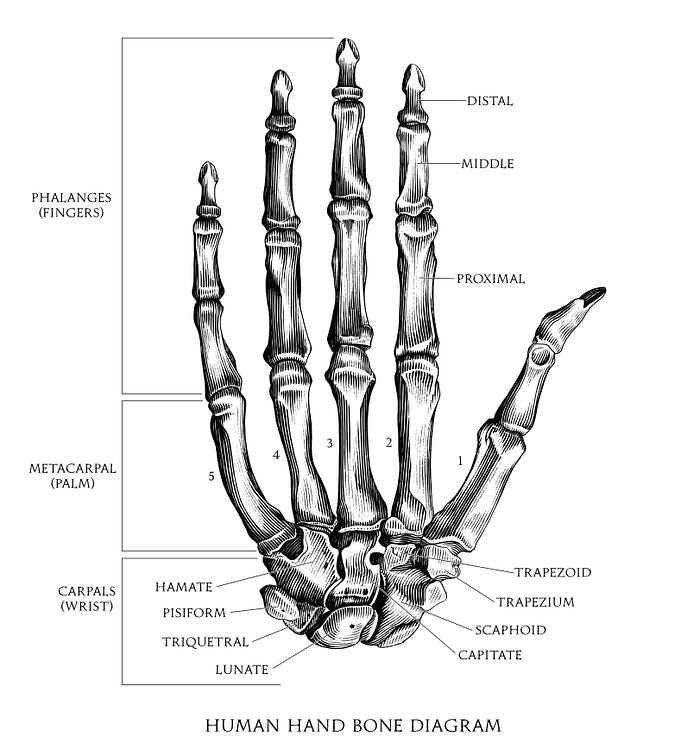

The complexity of human hands

Unique structure and proportions

One of the reasons AI struggles to draw hands is their complex structure and proportions. Each hand consists of 27 bones (8 carpals in the wrist, 5 metacarpals in the palm, and 14 phalanges in the fingers and thumb), along with numerous joints, muscles, and tendons, all of which must be accurately represented in a drawing.

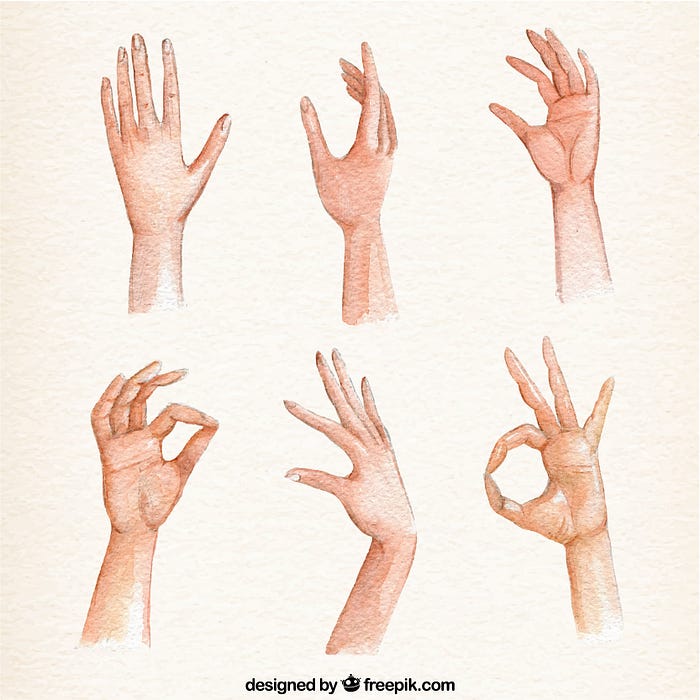

Wide range of poses and angles

Hands are incredibly versatile, capable of adopting a vast array of poses and angles. This variability makes it difficult for AI algorithms to generalize from a limited dataset and produce accurate hand drawings across different scenarios.

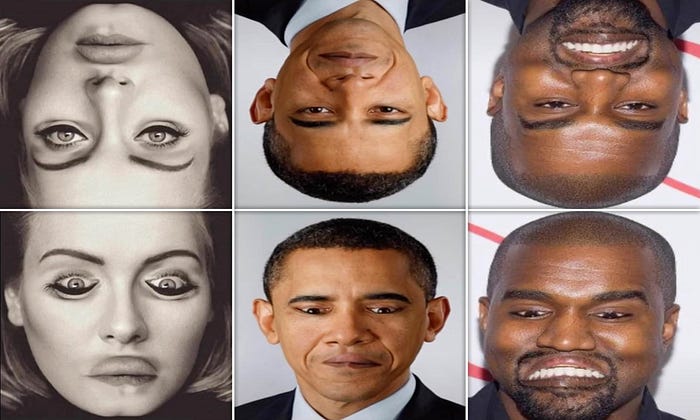

Face perception vs hand drawings

The Thatcher effect and our facial perception

The Thatcher effect is a phenomenon where it becomes more difficult to detect local feature changes in an upside-down face, despite identical changes being obvious in an upright face.

For more detail, see this article on the National Center for Biotechnology Information (NCBI) website: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4021138/#B12

First reported by Professor Peter Thompson of the University of York in 1980, the effect is usually taken to show that we process upright faces configurally, that is, through the spatial relationships between parts such as the eyes, mouth, and nose, rather than feature by feature. When a face is upside down, this configural processing breaks down, so our brain fails to register even grotesque changes to individual features until the face is turned the right way up.

How does this come in handy here?

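This psychological aspect adds another layer of complexity for AI algorithms. Just as a “Thatcherized” face looks grotesque once it is turned upright, a generated hand with an extra finger or a joint bending the wrong way stands out immediately, so AI-generated hands must hold up against our innate ability to detect such inaccuracies.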

AI Algorithms and hand drawing

What does it mean to “draw” for an AI?

Generative Adversarial Networks (GANs)

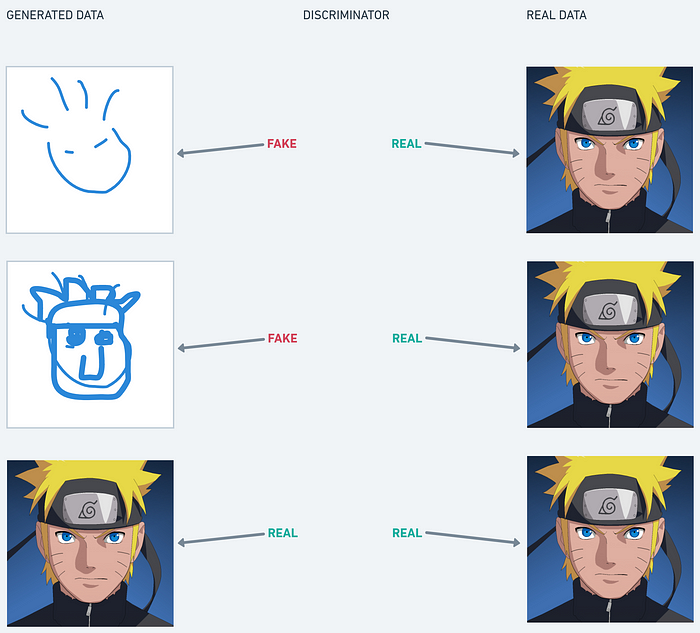

One popular approach to generating images using AI is through Generative Adversarial Networks (GANs). GANs consist of two neural networks, a generator and a discriminator, which compete against each other.

The generator creates images from random noise, while the discriminator tries to tell those images apart from real examples. Each network is trained against the other: the discriminator learns to spot fakes, and the generator learns to produce images that fool it. This iterative process steadily improves the generator’s output, but it still struggles with the intricate details of hands for the reasons discussed in the previous section.
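To make the “competition” more concrete, here is a minimal sketch of the two objectives from the original GAN formulation, written as binary cross-entropy in plain Python. It assumes the discriminator outputs a probability that an image is real; the function names are illustrative, and real implementations compute these losses on tensors inside a framework. The generator loss uses the “non-saturating” form suggested in the original GAN paper (maximize log D(G(z)) rather than minimize log(1 - D(G(z)))).

```python
import math

EPS = 1e-12  # guards against log(0) when an output is exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: probabilities the discriminator assigns to real images (target 1).
    d_fake: probabilities it assigns to generated images (target 0).
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator calls fakes real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 for everything.
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # 1.3863, i.e. log 4
print(round(generator_loss([0.5, 0.5]), 4))  # 0.6931, i.e. log 2
```

During training, the two losses are minimized in alternation, each with respect to its own network’s parameters. A discriminator stuck at 0.5 on both real and generated images can no longer separate them, which is the idealized end point of this game.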

Convolutional Neural Networks (CNNs)

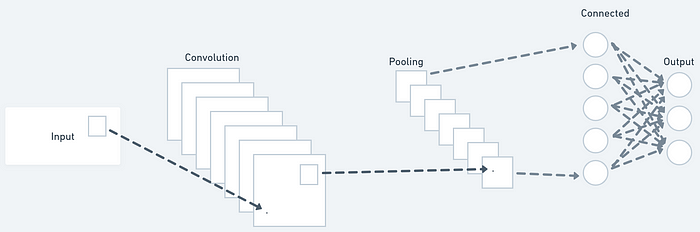

Convolutional Neural Networks (CNNs) are the other key building block. Rather than a separate generation method, a CNN is a network architecture that is particularly adept at identifying and processing patterns in images, and most image generators, including the generator and discriminator of typical GANs, are built from convolutional layers. However, CNNs face the same challenges in capturing the complexity and variability of human hands, which limits their ability to generate accurate hand drawings.

From a mathematical perspective, the key operation in a CNN is the convolution.

A convolution is a mathematical operation on two functions (f and g) that produces a third function (f*g) that expresses how the shape of one is modified by the other.
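For reference, here is the standard continuous definition, together with its discrete two-dimensional form for an image $I$ and a kernel $K$ (this notation follows common textbook usage and is not from the original article):

$$
(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau
$$

$$
(I * K)(i, j) = \sum_{m} \sum_{n} I(m, n)\, K(i - m, j - n)
$$

In practice, most deep learning libraries implement the closely related cross-correlation, which skips flipping the kernel:

$$
S(i, j) = \sum_{m} \sum_{n} I(i + m, j + n)\, K(m, n)
$$

Because the kernel weights are learned, the flip makes no practical difference to what the network can represent.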

In a convolutional layer, a small filter slides over the input data and performs element-wise multiplication between the filter’s weights and the input data, followed by a summation of the products. This results in a feature map that highlights local patterns in the data.
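The sliding-window computation described above can be sketched in plain Python. This is an illustrative, unoptimized version that assumes a single-channel image stored as a list of lists, no padding, and a stride of 1; like CNN layers in practice, it computes cross-correlation (the kernel is not flipped). The function name `conv2d` is hypothetical.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation of one channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the filter element-wise with the patch under it, then sum.
            acc = 0.0
            for m in range(kh):
                for n in range(kw):
                    acc += image[i + m][j + n] * kernel[m][n]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Dark region on the left, bright region on the right.
image = [[0, 0, 1, 1] for _ in range(3)]
# A 2x2 filter that responds where brightness increases from left to right.
kernel = [[-1, 1], [-1, 1]]
print(conv2d(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map is non-zero only along the boundary between the dark and bright regions, which is the kind of local pattern (an edge, such as the outline of a finger) that early CNN layers learn to detect.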

The activation function, often a Rectified Linear Unit (ReLU), is then applied element-wise to introduce non-linearity into the network.

Pooling layers help reduce the spatial dimensions of the feature maps, with the most common pooling operation being max pooling, which selects the maximum value within a specified window.
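Continuing in the same plain-Python style, the activation and pooling steps can be sketched as follows. The function names are hypothetical, and the 2x2 window with a stride of 2 is an illustrative choice (a common default, not a requirement).

```python
def relu(feature_map):
    """Rectified Linear Unit applied element-wise: negative values become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window, moving `stride` cells at a time."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + m][j + n] for m in range(size) for n in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.0, 0.0],
    [0.0, 0.0, -1.0, 6.0],
    [1.0, -5.0, 2.0, 3.0],
]
print(max_pool(relu(feature_map)))  # [[4.0, 1.0], [1.0, 6.0]]
```

The 4x4 map shrinks to 2x2 while keeping the strongest response in each region, so later layers see a coarser summary of where patterns occur.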

Limitations in AI Training Data

Insufficient Diversity

AI tools rely heavily on their training data to learn and generate new content. If the dataset lacks diversity in hand drawings, the AI will struggle to produce a wide range of hand poses and styles.
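A first, very simple check for this problem is to count how often each kind of hand pose appears in the training set. The sketch below assumes every image already carries a pose label; the label names, the `coverage_report` function, and the 10% threshold are all hypothetical.

```python
from collections import Counter


def coverage_report(pose_labels, expected_poses, min_share=0.10):
    """Return {pose: share} for every expected pose below `min_share` of the data.

    Poses that never appear are reported with a share of 0.0.
    """
    counts = Counter(pose_labels)
    total = len(pose_labels)
    report = {}
    for pose in expected_poses:
        share = counts[pose] / total  # Counter returns 0 for missing keys
        if share < min_share:
            report[pose] = share
    return report


# Hypothetical annotations for a 100-image hand dataset.
labels = ["open_palm"] * 60 + ["fist"] * 35 + ["pointing"] * 5
poses = ["open_palm", "fist", "pointing", "holding_object"]
print(coverage_report(labels, poses))  # {'pointing': 0.05, 'holding_object': 0.0}
```

Underrepresented poses are natural candidates for collecting more images or for the data augmentation techniques discussed later.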

Overfitting to the Training Data

AI algorithms can sometimes overfit to their training data, meaning they become too specialized in replicating the examples they’ve seen, and fail to generalize well to new scenarios. In the case of hand drawings, overfitting can result in AI-generated hands that closely resemble specific examples from the training data, but fail to capture the diversity and nuances of human hands.
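Overfitting typically shows up as a training loss that keeps falling while the loss on held-out data starts rising. One common countermeasure is early stopping. Below is a minimal sketch, assuming a validation loss is recorded on held-out hand images after each epoch; the function name and the patience value are illustrative.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch whose weights should be kept.

    Training stops once the validation loss has failed to improve for
    `patience` consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training here
    return best_epoch


# Hypothetical validation losses: they fall, then rise as the model starts overfitting.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.58, 0.66, 0.75]
print(early_stopping_epoch(val_losses))  # 3
```

Keeping the weights from the best validation epoch trades a little fit on the training images for better generalization to hands the model has not seen.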

Challenges in Fine-Tuning AI Algorithms

Balancing Realism and Artistic Style

Fine-tuning an AI algorithm to draw hands requires striking a delicate balance between creating realistic representations and preserving artistic style. Achieving this balance is difficult, as the AI must simultaneously learn the intricacies of human anatomy and the nuances of various artistic styles.
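One well-known way to express this trade-off comes from neural style transfer (Gatys et al.): a weighted sum of a content loss, which compares feature maps, and a style loss, which compares their Gram matrices. The sketch below illustrates that idea only; it is not a description of how any particular hand generator is fine-tuned. It assumes feature maps are given as lists of flattened channels (normally they would come from a pretrained CNN), uses unnormalized Gram matrices for simplicity, and all names and weights are hypothetical.

```python
def gram_matrix(features):
    """Inner products between channels; discards spatial layout, keeps texture/style.

    `features` is a list of channels, each a flattened list of activations.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]


def mse(xs, ys):
    return sum((x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def flatten(matrix):
    return [v for row in matrix for v in row]


def weighted_loss(generated, content, style, content_weight=1.0, style_weight=1.0):
    """Trade off matching the content's features against matching the style's Gram matrix."""
    content_loss = mse(flatten(generated), flatten(content))
    style_loss = mse(flatten(gram_matrix(generated)), flatten(gram_matrix(style)))
    return content_weight * content_loss + style_weight * style_loss


generated = [[1.0, 0.0], [0.0, 1.0]]  # two channels, two activations each
style = [[1.0, 1.0], [1.0, 1.0]]
print(weighted_loss(generated, generated, style))  # 2.5
```

Raising `content_weight` pulls the result toward faithful structure (closer to anatomically correct hands), while raising `style_weight` pulls it toward the target style.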

Difficulty in Defining Rules for Hand Drawing

Creating a set of rules or guidelines for AI to follow when drawing hands is challenging due to the vast array of possible hand poses and the subtle differences between individual hands. As a result, AI-generated hand drawings may lack the precision and accuracy found in human-drawn artwork.

Midjourney’s Approach and Challenges

Midjourney’s Algorithm

Midjourney has not published the details of its model. Like most current text-to-image generators, it is widely reported to be based on diffusion models (which are often built around a convolutional U-Net) rather than on GANs. Whatever its exact architecture, the challenges described above for GANs and CNNs also affect Midjourney’s ability to render hands accurately.

Overcoming the Hand Drawing Challenge

For those using tools like Midjourney, a few strategies can help your AI art generator produce better results despite the complexity of hands. Some approaches include:

- Describing the position and pose of the hands in the prompt

- Using accessories such as gloves or rings, where possible

- Using reference photos: to improve the accuracy of hand drawings, Midjourney can take into account an image you provide as part of the prompt.

Supplying examples of hands in various poses, styles, and anatomical details can help the algorithm better understand the nuances of hands.
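If you generate many images, it can help to assemble such prompts programmatically. The helper below is hypothetical and only builds a text string; it assumes Midjourney’s convention of placing image-prompt URLs at the start of the prompt, and the URL in the example is a placeholder.

```python
def build_prompt(subject, hand_description, accessories=(), reference_url=None):
    """Assemble a text-to-image prompt that spells out what the hands are doing."""
    parts = [subject, hand_description]
    if accessories:
        parts.append("wearing " + " and ".join(accessories))
    prompt = ", ".join(parts)
    if reference_url:
        # Image prompts: the reference image URL goes at the front of the prompt.
        prompt = f"{reference_url} {prompt}"
    return prompt


print(build_prompt(
    "portrait of a pianist",
    "both hands resting on the keys, five fingers on each hand",
    accessories=("leather gloves",),
    reference_url="https://example.com/hands-reference.jpg",  # placeholder URL
))
```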

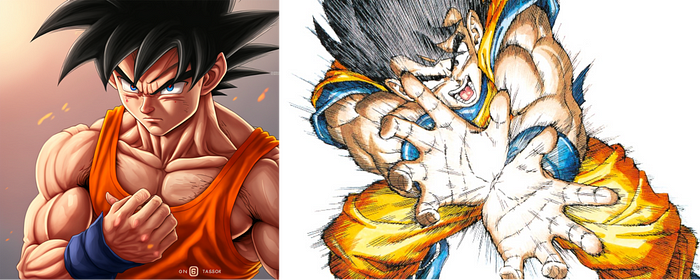

For artists: Learning from Popular Cartoons

Simplification and Stylization

Popular cartoons often simplify and stylize hands to make them more manageable to draw, while still maintaining their expressiveness. AI algorithms could benefit from adopting similar strategies, focusing on capturing the essence of hands rather than striving for anatomical perfection.

Consistent Style and Proportions

Cartoons like The Simpsons often maintain a consistent style and set of proportions when depicting hands. By learning from this approach, AI algorithms could be trained to generate hands that adhere to specific artistic styles and proportions, making the drawings more visually appealing and coherent, although this won’t help with more realistic styles.

Opportunities for Engineers: Open-Source Projects and Techniques

RunwayML

RunwayML offers a user-friendly platform for training, fine-tuning, and deploying machine learning models, including GANs and CNNs. The platform itself is a commercial product, but the company publishes open-source code on GitHub. Engineers interested in improving AI-generated hand drawings could experiment with the platform and its pre-trained models, fine-tuning them to focus on hand-related features.

GitHub: https://github.com/runwayml

DALL-E: Generating Images from Text

OpenAI’s DALL-E could also inspire engineers to improve AI-generated hand drawings. It is not fully open source (OpenAI has released only some components, such as the discrete VAE used by the original DALL-E), but open re-implementations exist. DALL-E generates images from textual descriptions, giving users more control over the content of the generated images. Engineers could explore ways to adapt this approach to prioritize accurate hand rendering.

Data Augmentation Techniques

Engineers could also explore data augmentation techniques to increase the diversity and complexity of training data for AI algorithms. This approach involves applying various transformations to existing hand images, such as rotation, scaling, and flipping, effectively expanding the dataset and helping the AI better understand the nuances of hands.
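The transformations mentioned above can be sketched in plain Python on an image stored as a list of lists. This is a minimal illustration with hypothetical function names: rotation is limited to multiples of 90 degrees and scaling uses nearest-neighbour sampling, whereas arbitrary angles and smooth resizing need interpolation, which image libraries such as torchvision provide as ready-made transforms.

```python
import random


def hflip(img):
    """Mirror left-right. For hands, this turns a left hand into a right hand."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def scale_nearest(img, factor):
    """Resize by `factor` using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [
        [img[min(h - 1, int(i / factor))][min(w - 1, int(j / factor))] for j in range(new_w)]
        for i in range(new_h)
    ]


def augment(img, rng):
    """Apply a random flip, a random multiple-of-90-degree rotation, and a random scale."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):
        img = rotate90(img)
    return scale_nearest(img, rng.choice([0.5, 1.0, 2.0]))


tiny = [[1, 2], [3, 4]]
print(hflip(tiny))     # [[2, 1], [4, 3]]
print(rotate90(tiny))  # [[3, 1], [4, 2]]
variants = [augment(tiny, random.Random(seed)) for seed in range(5)]
```

Flipping is a particularly useful augmentation for hands because a mirrored left hand is still an anatomically plausible right hand; if the dataset labels handedness, swap the label when flipping.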

What’s next?

AI-generated hand drawings currently face limitations rooted in both technical and anatomical factors. The intricate structure of hands, human perception, and the limitations of training data all contribute to the challenges AI algorithms face when attempting to generate realistic hand drawings.

However, by leveraging projects like RunwayML and DALL-E and experimenting with data augmentation techniques, engineers and ML enthusiasts can contribute to the ongoing development of AI-generated artwork.

As we continue to refine and optimize these algorithms, the day when AI-generated artwork can truly match human artistry draws closer. However, these advances also bring new challenges and potential dangers. As AI-generated images become increasingly realistic, it may become harder to distinguish authentic content from manipulated content. This could lead to the spread of misinformation, the manipulation of public opinion, and an erosion of trust in visual media.

To mitigate these risks, engineers, researchers, and policymakers must work together to develop ethical guidelines, detection tools, and public awareness campaigns to ensure that AI-generated images are used responsibly and that society is prepared to tackle the emerging challenges posed by this technology.