The concepts of bias and variance in Machine Learning are two crucial aspects in the realm of statistical modelling and machine learning. Understanding these concepts is paramount for any data scientist, machine learning engineer, or researcher striving to build robust and accurate models. In this article, we will explore the definitions, differences, and impacts of bias and variance, along with strategies to strike a balance between them to create optimal models that outperform the competition.

Bias in Machine Learning

Bias in the context of statistical modelling refers to the error introduced due to the simplifying assumptions made by the model to make the target function easier to learn. It represents the deviation between the predicted values and the actual values in the dataset. A model with high bias tends to oversimplify the underlying relationships, resulting in underfitting, where it fails to capture the complexities in the data.

Bias in Machine Learning – Examples

Bias in machine learning refers to the error introduced when a model makes overly simplistic assumptions, leading to a failure in capturing the underlying patterns in the data. Here are some examples of bias in machine learning:

- Linear Regression with Underfitting

In linear regression, a high-bias model might assume a simple linear relationship between the input and output variables, even when the true relationship is non-linear. For instance, if the data exhibits a quadratic relationship, a linear regression model would underfit the data, resulting in a biased prediction that fails to capture the curvature of the data points.

- Gender Bias in Natural Language Processing (NLP)

NLP models can develop biases based on the data they are trained on. For example, if an NLP model is trained on a corpus of text that exhibits gender stereotypes, the model may perpetuate those biases in its predictions. This could lead to biased language generation or biased sentiment analysis based on gender-specific words.

- Biased Image Classification

Image classification models can also be subject to bias. For instance, if an image classification model is trained primarily on images of certain ethnicities, it may not perform well on images from underrepresented ethnic groups, leading to biased predictions.

- Medical Diagnosis Bias

In medical diagnosis, a high-bias model might make overly simplistic assumptions about the relationship between symptoms and diseases. For example, if a model assumes that a specific symptom is always indicative of a particular disease, it may lead to incorrect diagnoses or overlook complex cases.

- Predicting Housing Prices with Insufficient Features

Suppose a model tries to predict housing prices based only on the number of bedrooms without considering other relevant features like location, square footage, or neighbourhood amenities. Such a model would exhibit bias and provide inaccurate predictions as it fails to consider crucial factors that influence housing prices.

- Biased Recommender Systems

Recommender systems can exhibit bias when they overly rely on past user interactions. For instance, if a movie recommendation system suggests movies only based on the user’s previous selections without considering other diverse preferences, it can lead to a biased list of recommendations, limiting the user’s exposure to new content.

Addressing bias in machine learning is crucial to build fair and accurate models. Techniques like data preprocessing, bias-aware training, and diverse dataset curation can help mitigate bias and create more inclusive and equitable machine learning models.

Variance in Machine Learning

Variance, on the other hand, is the sensitivity of a model to changes in the training data. It represents the extent to which the model’s predictions fluctuate when trained on different datasets. A model with high variance shows a great fit to the training data but may perform poorly on unseen data, a condition known as overfitting. Overfitting occurs when the model memorizes the training data rather than learning the underlying patterns, leading to subpar generalization.

Variance in Machine Learning – Examples

Variance in machine learning refers to the model’s sensitivity to changes in the training data, leading to fluctuations in predictions. Here are some examples of variance in machine learning:

- Overfitting in Decision Trees

Decision trees can exhibit high variance if they are allowed to grow too deep, capturing noise and outliers in the training data. As a result, the model becomes too specific to the training data and fails to generalize well to new, unseen data, leading to overfitting.

- Highly Flexible Neural Networks

Deep neural networks with a large number of layers and parameters have the potential to memorize the training data, resulting in high variance. Such models may perform exceedingly well on the training data but poorly on unseen data, indicating a lack of generalization.

- K-Nearest Neighbors with Small k

In the k-nearest neighbours algorithm, choosing a small value of k can lead to high variance. A smaller k implies the model is influenced by a limited number of neighbours, causing predictions to be more sensitive to noise in the training data.

- Random Forest Overfitting

Random Forests are designed to reduce overfitting compared to decision trees, but if the number of trees is too high, it can lead to high variance. Each tree in the forest contributes to the overall prediction, and an excessive number of trees can cause the model to memorize the training data, resulting in reduced generalization.

- Unstable Support Vector Machines (SVM)

Support Vector Machines can be prone to high variance if the kernel used is too complex or if the cost parameter is not properly tuned. A highly complex kernel or inappropriate cost parameter can cause SVM to overfit the training data, leading to poor performance on unseen data.

- Time-Series Forecasting with Small Training Window

In time-series forecasting, using a small training window (i.e., limited historical data) can lead to high variance. The model might not capture long-term trends and patterns, making it sensitive to variations in the training data, resulting in unreliable predictions.

To mitigate variance in machine learning, techniques like regularization, cross-validation, early stopping, and using more diverse and balanced datasets can be employed. Reducing variance helps create models that generalize well to new data, leading to more accurate and reliable predictions.

The Bias-Variance Tradeoff

The bias-variance tradeoff is a fundamental concept that lies at the core of model optimization. As we strive to build a model that performs well on both training and unseen data, we encounter a delicate balance between reducing bias and variance. High-bias models tend to be simple and may not capture intricate patterns in the data, while high-variance models are complex and prone to overfitting. Achieving the perfect equilibrium between the two is essential for building a model that outperforms competitors and ranks higher on search engines like Google.

Strategies to Address Bias and Variance

1. Cross-Validation

Cross-validation is a widely-used technique to assess a model’s performance and find the optimal balance between bias and variance. By dividing the data into multiple subsets and using them iteratively for training and testing, cross-validation provides a more reliable estimate of a model’s performance on unseen data. This helps in identifying whether the model suffers from bias or variance issues.

2. Regularization

Regularization techniques such as L1 and L2 regularization can be employed to mitigate overfitting by adding penalty terms to the model’s loss function. These penalty terms discourage large coefficient values, effectively simplifying the model and reducing variance.

3. Ensemble Methods

Ensemble methods, such as Random Forests and Gradient Boosting, combine the predictions of multiple models to achieve better generalization performance. These methods harness the strength of diverse models to offset individual biases and reduce variance, resulting in a more accurate and robust final prediction.

4. Feature Engineering

Careful feature engineering can significantly impact the bias-variance tradeoff. Selecting informative features and removing irrelevant ones can help the model focus on the most important patterns in the data, reducing bias. At the same time, transforming features or creating new ones can aid in capturing complex relationships, thus managing variance.

5. Data Augmentation

Data augmentation involves artificially increasing the size of the training dataset by applying various transformations to existing data. By exposing the model to diverse instances of the same data, it becomes more resilient to variations and reduces overfitting.

Real-world Examples

To illustrate the concepts of bias and variance, let’s consider a few real-world examples.

Example 1: Linear Regression

In linear regression, a high-bias model might assume a simple linear relationship between the input and output variables, leading to an underfit model that fails to capture non-linear patterns. On the other hand, a high-variance model could fit the training data well but generalize poorly, resulting in excessive fluctuations in predictions for unseen data points.

Example 2: Image Classification

In image classification tasks, a high-bias model may struggle to identify complex patterns in the images, resulting in misclassifications. Conversely, a high-variance model might memorize the training images’ features, leading to poor performance on new images with slight variations.

Bias and Variance Formula:

In the context of machine learning, bias and variance can be quantified using mathematical formulas. Let’s explore the formulas for bias and variance:

- 1. Bias Formula

The bias of a model can be calculated as the expected difference between the predicted values of the model and the true values in the dataset. Let’s represent the true target values as Y and the predicted values of the model as Ŷ. The bias (B) can be computed as:

Here, E[ ] represents the expected value, which is the average over all possible datasets that the model could be trained on.

- 2. Variance Formula

The variance of a model measures the variability or spread of the predicted values around their mean. It quantifies how much the predicted values of the model differ from each other for different datasets. Let’s represent the predicted values of the model for different datasets as Ŷᵢ and the mean predicted value as Ŷ̄. The variance (V) can be computed as:

Again, E[ ] represents the expected value, which considers all possible datasets the model could be trained on.

Bias-Variance Tradeoff Formula:

The relationship between bias and variance is governed by the bias-variance tradeoff. The mean squared error (MSE) of the model can be decomposed into the sum of the bias squared and the variance:

Minimizing the MSE requires finding the right balance between bias and variance. Lowering one component may increase the other, making it crucial to achieve an optimal tradeoff to build a model that performs well on both training and test data.

Tabular representation of the differences between bias and variance in Machine Learning:

|

Aspect |

Bias |

Variance |

| Definition | The error introduced due to oversimplified assumptions | Sensitivity to changes in training data |

| Impact on Model | Underfitting – Fails to capture complex patterns | Overfitting – Memorizes training data, poor generalization |

| Model Performance | Low accuracy on both training and test data | High accuracy on training, low on test data |

| Source of Error | Biased towards certain assumptions and features | Sensitive to noise and fluctuations in the data |

| Occurrence in Model | Occurs when the model is too simple or lacks complexity | Occurs when the model is too complex or overfits data |

| Mitigation Strategies | Feature Engineering, Data Augmentation | Regularization, Ensemble Methods |

| Cross-Validation Result | Similar performance on both training and test data | A significant difference in performance on training and test data |

| Optimal Model Balance | A balance between bias and variance | A balance between bias and variance |

Understanding these differences is essential for effectively optimizing machine learning models to strike the right balance between bias and variance, resulting in models that achieve superior performance on real-world data.

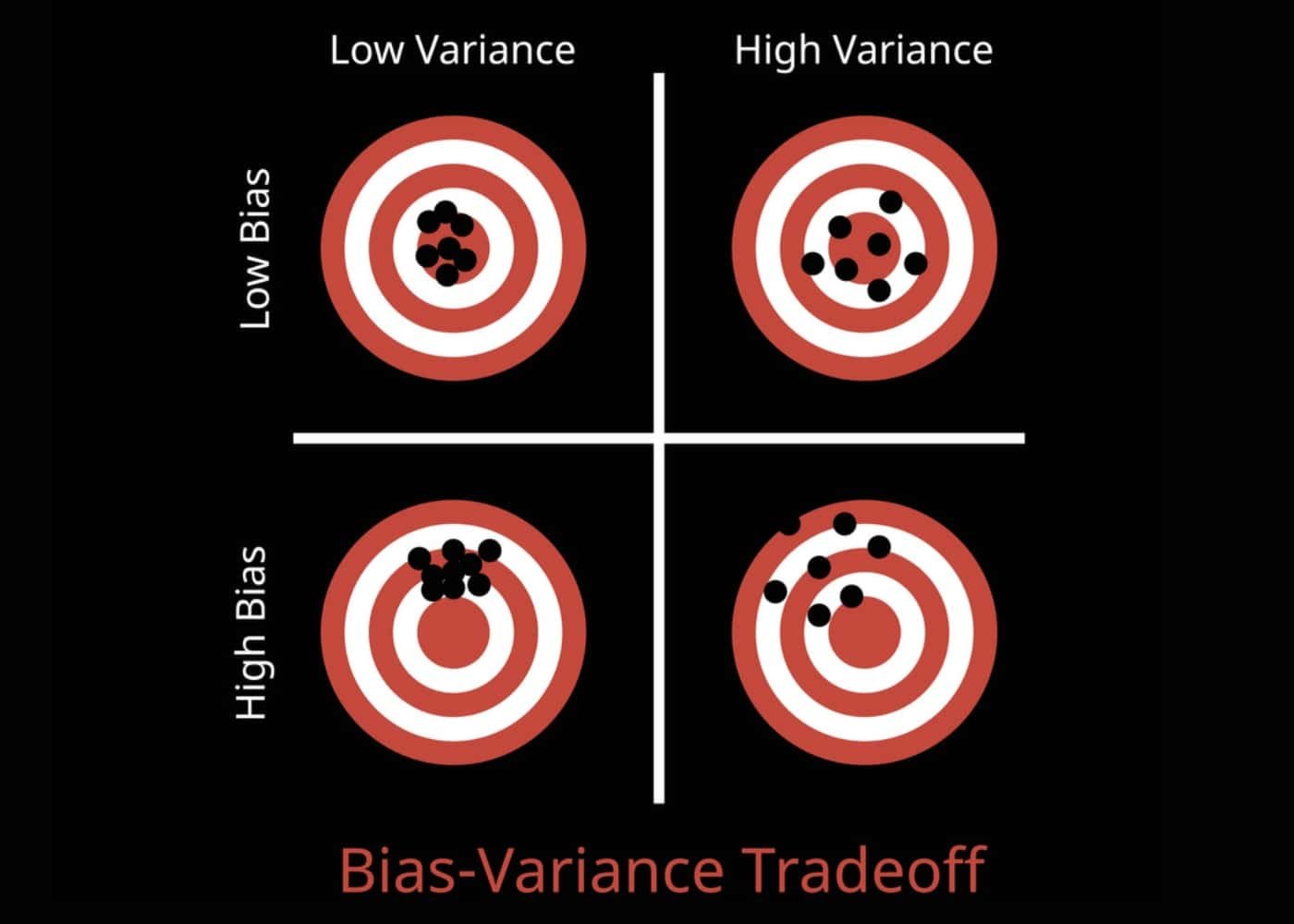

Different Combinations of Bias-Variance

In machine learning, the relationship between bias and variance leads to different combinations that can affect the overall performance of the model. Let’s explore these combinations:

-

High Bias, Low Variance:

- Description: This combination occurs when the model is too simplistic and does not capture the underlying patterns in the data.

- Impact: The model may underfit the data, resulting in low accuracy on both the training and test datasets.

- Mitigation: To address this, one can consider using more complex models, adding more features, or using advanced techniques like deep learning.

-

Low Bias, High Variance:

- Description: Here, the model is very complex and fits the training data well but fails to generalize to new, unseen data.

- Impact: The model may suffer from overfitting, leading to high accuracy on the training dataset but poor performance on the test dataset.

- Mitigation: To overcome overfitting, regularization techniques, such as L1 and L2 regularization, or ensemble methods like Random Forests can be used.

-

High Bias, High Variance:

- Description: In this case, the model is both too simplistic and overly complex, resulting in poor performance across the board.

- Impact: The model exhibits both underfitting and overfitting characteristics, leading to low accuracy on both training and test data.

- Mitigation: This situation requires a careful analysis of the model’s architecture, feature selection, and data preprocessing to find the right balance.

-

Low Bias, Low Variance:

- Description: The ideal scenario where the model accurately captures the underlying patterns and generalizes well to unseen data.

- Impact: The model achieves high accuracy on both training and test datasets.

- Mitigation: While this is the desired outcome, achieving the perfect balance is often challenging. Regular cross-validation and model evaluation are essential to maintain this equilibrium.

Finding the right combination of bias and variance is crucial for building machine learning models that deliver optimal performance and rank well in search engines like Google. Balancing these two aspects ensures the model is accurate, robust, and capable of handling real-world data effectively.

Conclusion

In conclusion, understanding the bias-variance tradeoff is crucial for building models that outperform competitors and rank higher on search engines like Google. By comprehending the delicate balance between bias and variance and employing appropriate strategies, such as cross-validation, regularization, ensemble methods, feature engineering, and data augmentation, we can create models that strike the perfect equilibrium. Now equipped with this knowledge, you can make informed decisions to enhance your models and achieve remarkable results.

Frequently Asked Questions

How can I identify whether my model suffers from bias or variance issues?

Cross-validation is a common technique to identify bias or variance issues. If your model performs poorly on both the training and test datasets, it may have e high bias. On the other hand, if it performs well on the training data but poorly on the test data, it may have a high variance.

What are some strategies to reduce bias in my model?

Strategies to reduce bias include using more complex models, incorporating additional relevant features, and employing advanced techniques like deep learning. Additionally, fine-tuning hyperparameters and performing feature engineering can also help address bias.

How can I mitigate variance in my model?

Techniques such as regularization, like L1 and L2 regularization, can help mitigate variance by adding penalty terms to the model’s loss function. Ensemble methods, such as Random Forests or Gradient Boosting, can also combine the strengths of multiple models to reduce variance.

What role does feature engineering play in managing bias and variance?

Feature engineering is essential in managing both bias and variance. Carefully selecting informative features can reduce bias by focusing on relevant patterns. Additionally, creating new features or transforming existing ones can help the model capture more complex relationships, thus managing variance.

Why is it crucial to strike a balance between bias and variance?

Striking a balance between bias and variance is crucial to build a model that performs well on both training and unseen data. An optimal model with low bias and variance ensures accuracy and robustness, outperforming competitors and ranking higher on search engines like Google.

Is it possible to achieve zero bias and zero variance in a model?

Achieving zero bias and variance is challenging and often unrealistic. As models become more complex, bias tends to decrease, but variance may increase. A perfect balance is hard to achieve, but the goal is to get as close to it as possible through careful model development and evaluation.

How can I optimize my machine learning model to achieve the best bias-variance tradeoff?

The key is to experiment with different model architectures, hyperparameters, and feature sets. Use cross-validation and other evaluation techniques to assess performance on both training and test datasets. Iteratively fine-tune the model until you achieve a balance that maximizes accuracy and generalization.

Begin Your Learning Journey with Pickl.AI

If you are planning to excel in Machine Learning concepts, but are not familiar with it, then join the Free ML Course Online course by Pickl.AI. This course will take you through the key aspects of ML along with its tools and features. This will help in building your foundation for Machine Learning, and later you can enroll for a full time course with Pickl.AI to enhance your skills.