Machine Learning models can leave you spellbound by their efficiency and proficiency. When you start exploring more about Machine Learning, you will come across the Gradient Boosting Algorithm. Basically, it is a powerful and versatile machine-learning algorithm that falls under the category of ensemble learning.

Before delving deeper into what Gradient Boosting is and where it is applied, it is important to understand the process of boosting.

Defining Boosting

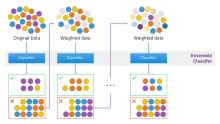

Boosting is an ensemble learning technique in machine learning where multiple weak models (often referred to as “learners” or “classifiers”) are combined to create a strong predictive model. Unlike bagging methods such as random forests, which train their models independently and in parallel, boosting trains these weak models sequentially, with each one building on the models before it.

The key idea behind boosting is that each new model concentrates on the cases the previous models handled poorly. AdaBoost, for example, gives more weight to instances that earlier iterations misclassified, while Gradient Boosting fits each new model to the errors that remain. This iterative process improves the overall performance of the ensemble by correcting the errors made by the previous models.

The final prediction is typically a weighted combination of the predictions from all the weak models, resulting in a powerful and accurate ensemble model.

Types of Boosting


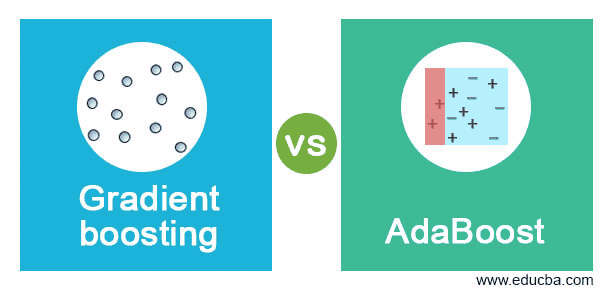

AdaBoost (Adaptive Boosting)

One of the first practical boosting algorithms, AdaBoost trains weak learners iteratively. After each round it increases the weights of the training instances the current learner misclassified and decreases the weights of the rest, and it gives each learner a say in the final vote based on its weighted error.
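A minimal from-scratch sketch of this reweighting loop (discrete AdaBoost), assuming binary labels in {-1, +1} and one-feature threshold "stumps" as the weak learners. The function names `fit_stump`, `stump_predict`, `adaboost`, and `adaboost_predict` are hypothetical illustrations, not a library API; the learner weight uses the classic formula α = ½ ln((1 − ε)/ε), where ε is the learner's weighted error.

```python
import numpy as np

def stump_predict(X, feature, threshold, polarity):
    """Predict +1 on one side of the threshold and -1 on the other."""
    return np.where(polarity * (X[:, feature] - threshold) >= 0, 1, -1)

def fit_stump(X, y, w):
    """Exhaustively search single-feature thresholds for the lowest weighted error."""
    best = None  # (weighted_error, feature, threshold, polarity)
    for feature in range(X.shape[1]):
        for threshold in np.unique(X[:, feature]):
            for polarity in (1, -1):
                pred = stump_predict(X, feature, threshold, polarity)
                err = w[pred != y].sum()
                if best is None or err < best[0]:
                    best = (err, feature, threshold, polarity)
    return best

def adaboost(X, y, n_rounds=10):
    """Discrete AdaBoost; y must contain labels -1 and +1."""
    w = np.full(len(y), 1.0 / len(y))          # start with uniform instance weights
    learners = []
    for _ in range(n_rounds):
        err, feature, threshold, polarity = fit_stump(X, y, w)
        err = max(err, 1e-10)                   # guard against log(1/0) for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)   # more accurate learners get a bigger say
        pred = stump_predict(X, feature, threshold, polarity)
        w = w * np.exp(-alpha * y * pred)       # raise misclassified, lower correctly classified
        w = w / w.sum()                         # renormalize to a distribution
        learners.append((alpha, feature, threshold, polarity))
    return learners

def adaboost_predict(X, learners):
    """Weighted majority vote: sign of the alpha-weighted sum of stump outputs."""
    score = sum(alpha * stump_predict(X, f, t, p) for alpha, f, t, p in learners)
    return np.where(score >= 0, 1, -1)

# Toy 1-D data that no single threshold can separate (+ + - - + +).
X = np.array([[1], [2], [3], [4], [5], [6]], dtype=float)
y = np.array([1, 1, -1, -1, 1, 1])
model = adaboost(X, y, n_rounds=3)
print((adaboost_predict(X, model) == y).mean())  # training accuracy
```

On this interval-shaped toy data a single stump misclassifies two points, but after a few rounds the reweighted stumps combine into a weighted vote that fits every training point.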


Gradient Boosting

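Gradient Boosting iteratively builds weak learners, usually decision trees, each fit to the residuals of the current ensemble’s predictions. More precisely, each new learner is fit to the negative gradient of a differentiable loss function with respect to the current predictions (for squared-error loss this is exactly the residual), so the procedure performs gradient descent on the loss, one learner at a time.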
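To make "residuals" and "gradient" concrete, the sketch below computes the negative gradient of three common losses at the current predictions f (often called pseudo-residuals). The helper name `negative_gradient` is hypothetical; it assumes labels in {0, 1} and log-odds predictions for the logistic case.

```python
import numpy as np

def negative_gradient(y, f, loss="squared"):
    """Pseudo-residuals: -dL/df for each instance, evaluated at the current predictions f."""
    if loss == "squared":    # L = 0.5 * (y - f)**2
        return y - f
    if loss == "absolute":   # L = |y - f|  (gradient taken as 0 where y == f)
        return np.sign(y - f)
    if loss == "logistic":   # y in {0, 1}, f is the log-odds, L is the log loss
        return y - 1.0 / (1.0 + np.exp(-f))
    raise ValueError(f"unknown loss: {loss}")

y = np.array([3.0, -1.0, 2.0])
f = np.array([2.5, 0.0, 2.0])
print(negative_gradient(y, f, "squared"))   # the ordinary residuals y - f
print(negative_gradient(y, f, "absolute"))  # only the sign of each residual
```

The absolute loss caps every pseudo-residual at ±1, which is one reason robust losses limit how strongly outliers pull on the next learner.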

Difference Between Gradient Boosting and AdaBoost

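| Aspect | AdaBoost | Gradient Boosting |
|---|---|---|
| Type | One of the earliest practical boosting algorithms | General boosting framework for any differentiable loss |
| Objective | Correct misclassified instances (can be viewed as minimizing an exponential loss) | Minimize a chosen loss function |
| Focus on | Instances misclassified by previous models | Residuals (negative gradients of the loss) of the current ensemble |
| Model Creation | Weighted majority vote of weak learners | Sum of weak learners, each scaled by the learning rate |
| Learning Rate | Each learner’s weight is computed from its weighted error; an optional learning rate can shrink it | Each learner’s contribution is multiplied by a small, fixed learning rate |
| Optimization Approach | Adjusts instance weights to shift focus | Fits each new learner to the negative gradient of the loss (gradient descent in function space) |
| Sensitivity to Noise | Sensitive, since noisy, misclassified points keep gaining weight | Can also fit noise; shrinkage and subsampling reduce this |
| Outliers | Strongly affected under the exponential loss | Squared error is sensitive; robust losses such as Huber or absolute error limit their influence |
| Overfitting | Can overfit noisy data | Can overfit without regularization such as shrinkage, subsampling, tree-depth limits, or early stopping |
| Common Variants | AdaBoost.M1, SAMME, Real AdaBoost | XGBoost, LightGBM, CatBoost |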

Exploring Gradient Boosting in Depth

Gradient Boosting improves its predictions step by step. In each iteration, a new weak learner addresses the shortcomings of its predecessors by fitting the residuals of the ensemble, that is, the errors in its current predictions.

By summing the scaled contributions of these individual models, Gradient Boosting builds a strong and accurate final predictor that applies to a wide range of regression and classification problems.
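The iteration can be written compactly. The sketch below follows the standard formulation due to Friedman, assuming a loss L, n training pairs (x_i, y_i), a weak learner h_m fit at round m, M rounds in total, and a learning rate ν in (0, 1]:

```latex
F_0(x) = \arg\min_{c} \sum_{i=1}^{n} L(y_i, c)

r_{im} = -\left[ \frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)} \right]_{F = F_{m-1}}, \qquad i = 1, \dots, n

h_m = \text{weak learner fit to } \{(x_i, r_{im})\}_{i=1}^{n}

F_m(x) = F_{m-1}(x) + \nu \, h_m(x), \qquad m = 1, \dots, M
```

For squared-error loss L(y, F) = ½(y - F)², the pseudo-residual simplifies to r_im = y_i - F_{m-1}(x_i), the ordinary residual. Friedman's original algorithm also computes a step size for each new learner by line search; here it is folded into h_m for simplicity.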

Step-by-Step Guide to the Gradient Boosting Algorithm

Initialization: Start with a basic model

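Gradient Boosting starts with a simple model, usually a constant value that minimizes the loss over the training data: the mean of the targets for squared-error regression, or the log-odds of the positive class for binary classification. Some implementations also accept an existing model as the starting point. This initial model sets the foundation for further improvement.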
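Which constant is "best" depends on the loss. A small sketch, with a hypothetical helper name `initial_prediction`, for three common losses:

```python
import numpy as np

def initial_prediction(y, loss="squared"):
    """Constant F0 minimizing the total training loss for a few common losses."""
    y = np.asarray(y, dtype=float)
    if loss == "squared":    # argmin_c sum (y_i - c)^2 is the mean
        return y.mean()
    if loss == "absolute":   # argmin_c sum |y_i - c| is the median
        return np.median(y)
    if loss == "logistic":   # y in {0, 1}; best constant log-odds is log(p / (1 - p))
        p = y.mean()         # assumes both classes are present, so 0 < p < 1
        return np.log(p / (1 - p))
    raise ValueError(f"unknown loss: {loss}")

print(initial_prediction([1, 2, 3, 10], "squared"))   # mean: 4.0
print(initial_prediction([1, 2, 3, 10], "absolute"))  # median: 2.5
print(initial_prediction([1, 1, 1, 0], "logistic"))   # log(3), about 1.0986
```

The single large target (10) pulls the squared-error starting point up to 4.0, while the absolute-error starting point stays at the median.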

Residual Calculation: Identify and quantify errors

For each training instance, compute the difference between the actual target value and the prediction of the current model. These differences, known as residuals, show where the current model falls short. For loss functions other than squared error, the negative gradient of the loss (often called a pseudo-residual) plays the same role.

Weak Learner Creation: Address model shortcomings

Build a weak learner, usually a shallow decision tree, that captures the patterns in the residuals. This new learner targets the errors that the current ensemble has not yet corrected, improving the ensemble’s predictions.

Weighted Contribution: Control learning from new models

Add the new weak learner to the ensemble, scaling its predictions by a learning rate, a value in (0, 1] such as 0.1. This shrinkage keeps any single model from dominating and helps reduce overfitting, at the cost of needing more iterations.
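A toy calculation shows what the learning rate ν controls. Under the idealized assumption that each weak learner reproduces the current residuals exactly (real trees only approximate them), squared-error residuals shrink by a factor of (1 - ν) per round: ν = 1 memorizes the training targets in one step, while a smaller ν approaches them gradually and leaves room to stop early. The function name `residual_norms` is hypothetical, and the model starts from zero rather than the mean for simplicity.

```python
import numpy as np

def residual_norms(y, learning_rate, n_rounds):
    """Training-residual norm per round under squared-error loss, assuming an idealized
    learner that reproduces the current residuals exactly (real trees only approximate them)."""
    f = np.zeros_like(y)                 # start from a zero prediction for simplicity
    norms = []
    for _ in range(n_rounds):
        residuals = y - f
        h = residuals                    # hypothetical "perfect" weak learner
        f = f + learning_rate * h        # shrunken update
        norms.append(float(np.linalg.norm(y - f)))
    return norms

y = np.array([1.0, 2.0, 2.0])            # residual norm starts at 3.0
for lr in (1.0, 0.5, 0.1):
    print(lr, residual_norms(y, lr, 3))
```

With ν = 0.5 the norm halves each round (1.5, 0.75, 0.375), and with ν = 0.1 it falls by only 10% per round, which is why small learning rates are paired with more iterations.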

Ensemble Formation: Update and enhance predictions

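Add the weighted predictions of the new weak learner to those of the existing ensemble. The residual calculation, weak-learner creation, and weighted update are then repeated for a fixed number of iterations or until performance on a validation set stops improving (early stopping), so the ensemble’s predictions improve with each round.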
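Putting the steps above together, here is a minimal from-scratch sketch for one-dimensional regression with squared-error loss, using least-squares decision stumps (one-split trees) as weak learners. The names `fit_stump` and `gradient_boost` and the defaults (100 rounds, learning rate 0.1) are illustrative assumptions, not any library's API; production libraries add deeper trees, many features, regularization, and early stopping.

```python
import numpy as np

def fit_stump(x, r):
    """Least-squares regression stump on a 1-D feature: one split, each leaf predicts a mean."""
    xs = np.unique(x)                       # assumes at least two distinct feature values
    best = None
    for t in (xs[:-1] + xs[1:]) / 2:        # midpoints between distinct feature values
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, lv, rv = best
    return lambda z: np.where(z <= t, lv, rv)

def gradient_boost(x, y, n_rounds=100, learning_rate=0.1):
    f0 = y.mean()                           # initialization: constant minimizing squared error
    pred = np.full_like(y, f0, dtype=float)
    learners = []
    for _ in range(n_rounds):
        residuals = y - pred                # residual calculation
        h = fit_stump(x, residuals)         # weak learner fit to the residuals
        pred = pred + learning_rate * h(x)  # weighted contribution added to the ensemble
        learners.append(h)

    def predict(z):
        return f0 + learning_rate * sum(h(z) for h in learners)
    return predict

x = np.linspace(0, 6, 50)
y = np.sin(x)
model = gradient_boost(x, y)
print(np.mean((y - model(x)) ** 2))  # training MSE, well below that of predicting the mean
```

Because each stump is a least-squares fit to the current residuals and the learning rate is at most 1, every round can only lower (or keep) the training error; a held-out validation set would be needed to decide when further rounds stop helping.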

5 Key Applications of the Gradient Boosting Algorithm

The Gradient Boosting algorithm has found its way into a plethora of domains due to its strong predictive performance and robustness. Here are five key applications where Gradient Boosting shines:

Financial Predictions

Gradient Boosting is widely used in the financial sector for tasks like stock price prediction, credit risk assessment, and fraud detection. Its ability to capture complex relationships in data makes it valuable for identifying patterns and trends in financial markets.

Image and Object Recognition

In computer vision, Gradient Boosting can be used for image classification and object detection, typically on features extracted from the images, although deep neural networks now dominate most image tasks. Boosting also has a long history in the field: the Viola-Jones face detector uses AdaBoost. Such models contribute to applications like medical imaging and security systems.

Healthcare and Medical Diagnosis

Healthcare professionals utilize Gradient Boosting for disease diagnosis, medical image analysis, and patient outcome prediction. By learning from medical data, the algorithm assists in identifying potential health risks, predicting disease progression, and supporting clinical decision-making.

Natural Language Processing (NLP)

Applied to text features such as bag-of-words or TF-IDF vectors, Gradient Boosting (GB) assists in sentiment analysis, text classification, and named entity recognition. It helps extract insights from text data, making it useful for applications such as social media sentiment analysis, customer reviews, and content categorization.

Anomaly Detection

Detecting anomalies in data is crucial across various domains, including cybersecurity, manufacturing, and industrial processes. Gradient Boosting can effectively identify abnormal patterns by learning from historical data, enabling early detection of unusual events or faults.

Closing Thoughts

Gradient Boosting’s versatility and adaptability have made it a go-to choice for complex, high-stakes tasks where accuracy and reliability are paramount, particularly on structured (tabular) data. Its applications span industries and continue to expand as researchers and practitioners find new ways to use it.

As the field of ML continues to grow, Gradient Boosting will likely see further refinement, and ML experts will play a pivotal role in it. To build expertise in ML, you can start with free ML courses.

These courses help build the fundamental concepts of Machine Learning. So don’t delay your learning: start exploring the growth opportunities in the ML domain.