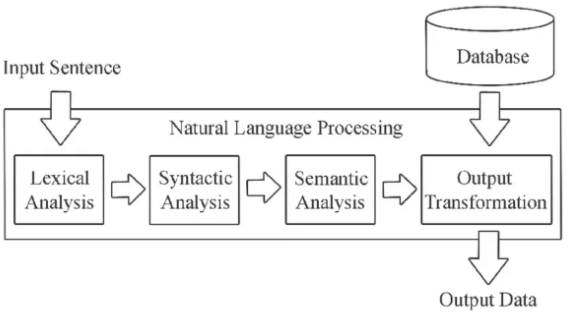

In machine learning and natural language processing (NLP), how embeddings are stored and queried has a direct effect on application performance.

Vector databases have therefore become a key tool for the efficient storage and retrieval of embeddings, providing infrastructure for managing the high-dimensional data generated by large language models.

This blog explores why vector databases matter. We will look at different types of vector databases and at their features and applications in large language model (LLM) scenarios, then walk through real-world case studies that show their impact across diverse applications.

Understanding Vector Databases and Their Significance

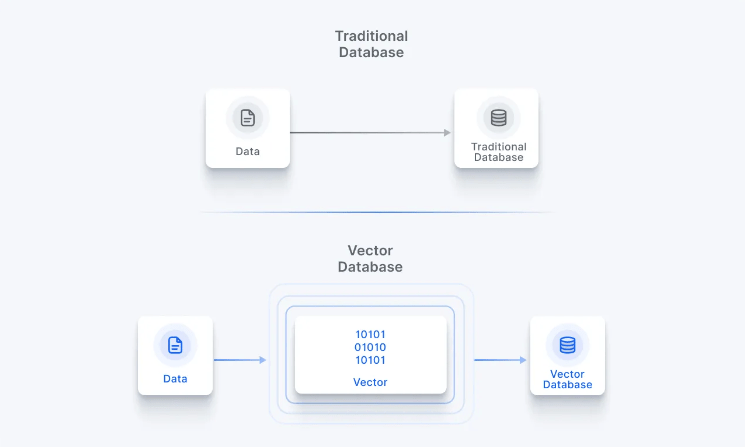

Vector databases are purpose-built platforms designed for the storage and retrieval of vector embeddings.

In NLP applications, these embeddings capture semantic and contextual relationships within large datasets. Traditional databases are built around exact-match and range lookups and struggle with similarity queries over high-dimensional embeddings, which is the workload vector databases are optimized for.

The uniqueness of vector databases lies in their tailored ability to efficiently manage complex data structures, a critical requirement for handling embeddings generated from large language models and other intricate machine learning models.

These databases provide an optimized solution for the demands of NLP tasks. As the boundaries of machine learning continue to be pushed, vector databases cater efficiently to the specific needs of high-dimensional vector storage and retrieval.
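To make the core operation concrete, here is a minimal sketch of similarity search over embeddings using only the Python standard library. The three-dimensional vectors and their labels are made-up toy values, and the exhaustive scan shown here is the baseline that vector databases improve on with specialized indexes, not how any particular product works internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query, vectors, k=2):
    """Return the keys of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, v), key) for key, v in vectors.items()]
    scored.sort(reverse=True)
    return [key for _, key in scored[:k]]

# Toy 3-dimensional "embeddings" (assumed values); real ones have
# hundreds or thousands of dimensions.
embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}

nearest = top_k([1.0, 0.0, 0.0], embeddings, k=2)
print(nearest)
```

The scan compares the query against every stored vector, so its cost grows linearly with the collection. That is the cost approximate indexes are designed to avoid.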

Exploring Different Types of Vector Databases and Their Features

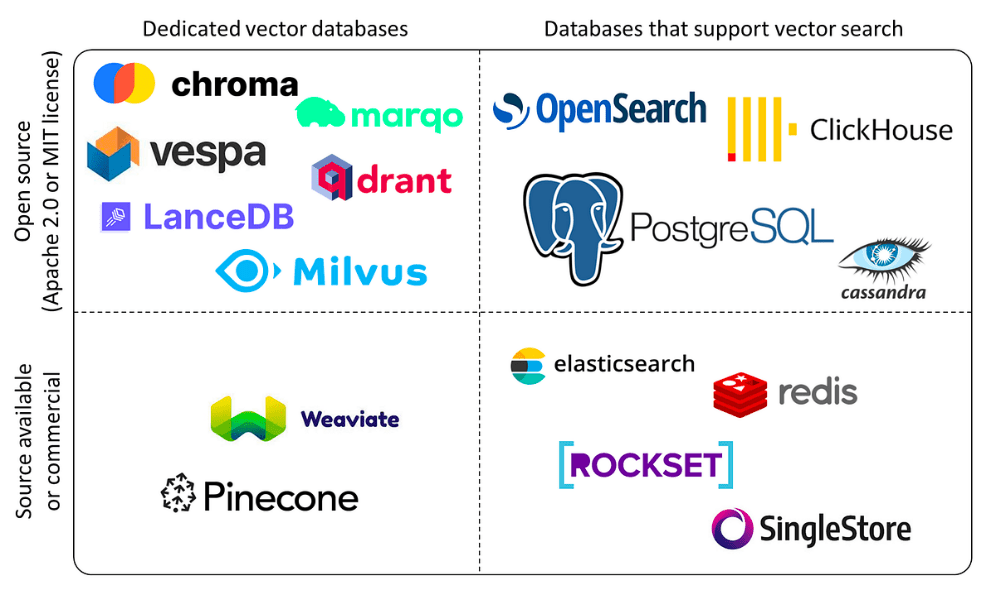

Vector databases come in several types, each with features suited to specific use cases.

Weaviate: Graph-Driven Semantic Understanding

Weaviate stands out for seamlessly blending graph database features with powerful vector search capabilities, making it an ideal choice for NLP applications requiring advanced semantic understanding and embedding exploration.

With a user-friendly RESTful API, client libraries, and a WebUI, Weaviate simplifies integration and management for developers. The API ensures standardized interactions, while client libraries abstract complexities, and the WebUI offers an intuitive graphical interface.

Weaviate’s cohesive approach empowers developers to leverage its capabilities effortlessly, making it a standout solution in the evolving landscape of data management for NLP.

DeepLake: Open-Source Scalability and Speed

DeepLake is an open-source tool for the efficient storage and retrieval of embeddings that prioritizes scalability and speed. With a distributed architecture and built-in support for horizontal scalability, DeepLake is well suited to managing vast NLP datasets.

Its implementation of an Approximate Nearest Neighbor (ANN) algorithm, based on the Product Quantization (PQ) method, enables rapid similarity searches. Because PQ compresses vectors, results are approximate: the method trades a small amount of accuracy for large gains in speed and memory.

DeepLake is meticulously designed to address the challenges of handling large-scale NLP data, offering a robust and high-performance solution for storage and retrieval tasks.
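The general idea behind Product Quantization can be sketched in a few lines of plain Python. This is an illustrative toy, not DeepLake's implementation: the helper names (`kmeans`, `train_pq`, `encode`, `decode`), the random data, and the parameter values are all assumptions chosen for readability.

```python
import random

def nearest(p, centroids):
    """Index of the centroid closest to p (squared Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])))

def kmeans(points, k, iters=10, seed=0):
    """Tiny k-means: returns k centroids for a list of equal-length points."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            buckets[nearest(p, centroids)].append(p)
        for i, bucket in enumerate(buckets):
            if bucket:  # keep the old centroid if a cluster went empty
                centroids[i] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids

def train_pq(vectors, num_subspaces, k):
    """Learn one codebook per slice; the dimension must divide evenly."""
    width = len(vectors[0]) // num_subspaces
    return [kmeans([v[s * width:(s + 1) * width] for v in vectors], k, seed=s)
            for s in range(num_subspaces)]

def encode(v, codebooks):
    """Replace each slice of v with the index of its nearest centroid."""
    width = len(v) // len(codebooks)
    return [nearest(v[s * width:(s + 1) * width], cb)
            for s, cb in enumerate(codebooks)]

def decode(codes, codebooks):
    """Rebuild an approximate vector from its centroid indices."""
    out = []
    for code, cb in zip(codes, codebooks):
        out.extend(cb[code])
    return out

rng = random.Random(42)
data = [[rng.random() for _ in range(8)] for _ in range(200)]  # toy vectors
codebooks = train_pq(data, num_subspaces=4, k=16)

codes = encode(data[0], codebooks)   # 4 small integers instead of 8 floats
approx = decode(codes, codebooks)    # lossy reconstruction
error = sum((a - b) ** 2 for a, b in zip(data[0], approx))
print(codes, error)
```

Each vector is stored as a handful of small integers, which is where the memory savings come from. The reconstruction error is the accuracy given up in exchange.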

Faiss by Facebook: High-Performance Similarity Search

Faiss is a similarity search library rather than a full database. It offers a range of optimized indexing methods for fast nearest-neighbor retrieval, supports GPU acceleration, and has a Python interface, which has made it a common building block in the vector database landscape.

This versatility enables seamless integration with NLP pipelines, enhancing its effectiveness across a wide spectrum of machine learning applications. Faiss stands out as a powerful tool, combining performance, flexibility, and ease of integration for robust similarity search capabilities in diverse use cases.

Milvus: Scaling Heights with Open-Source Flexibility

Milvus, an open-source tool, stands out for its emphasis on scalability and GPU acceleration, which makes it well suited to managing large NLP datasets. Milvus is designed to be distributed across multiple machines, making it ideal for handling massive amounts of data.

It integrates with popular libraries like Faiss, Annoy, and NMSLIB, giving developers more choices for organizing data and tuning the accuracy and efficiency of vector searches.

The diversity of vector databases gives developers a range of tools, each catering to specific requirements and use cases within NLP and machine learning.


Efficient Storage and Retrieval of Vector Embeddings for LLM Applications

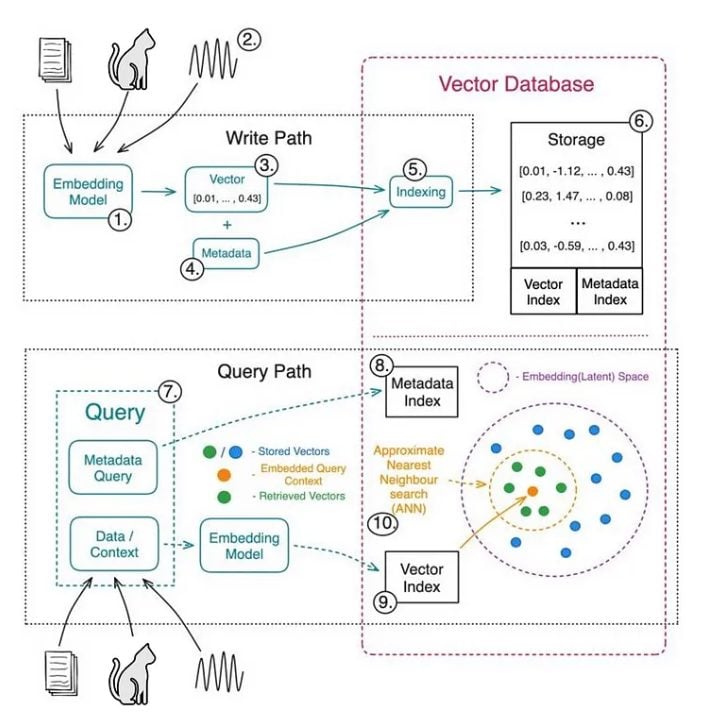

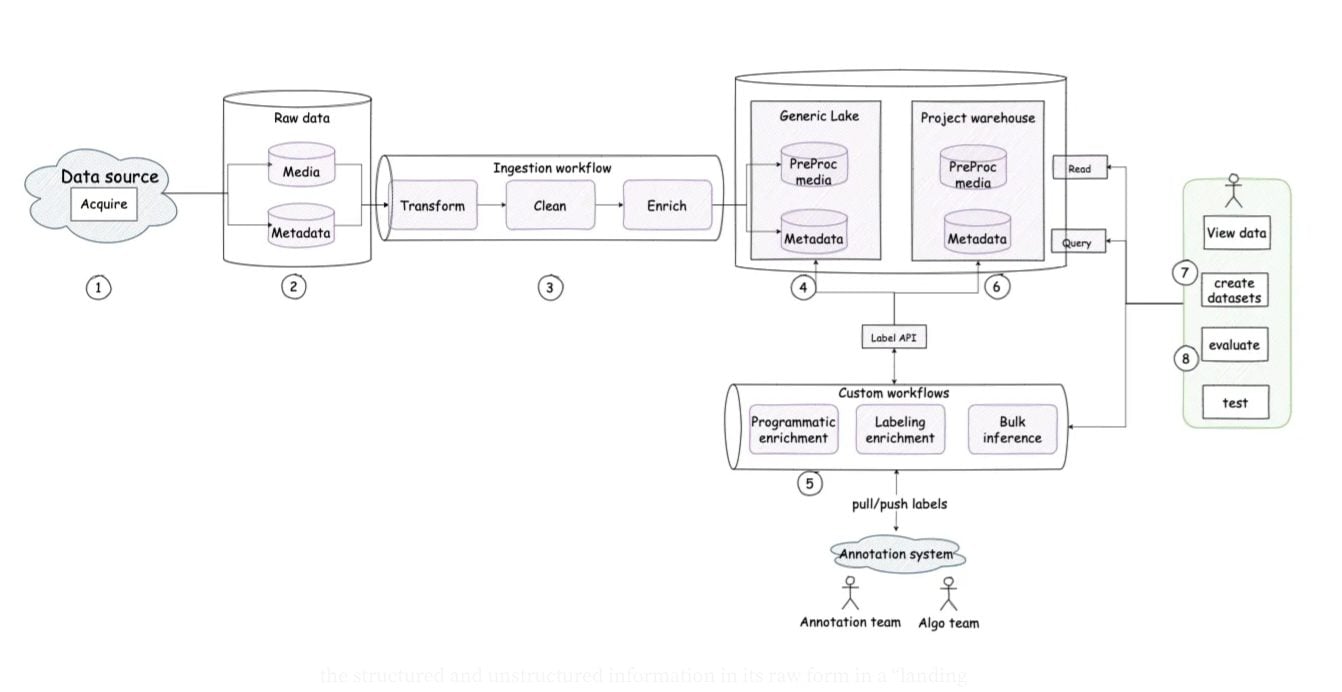

Using vector databases efficiently for embedding storage and retrieval in large language model (LLM) applications involves several considerations and steps that together determine performance.

Choosing the Right Database

The first step is selecting a vector database that matches the scalability, speed, and indexing requirements of the LLM project at hand.

The decision-making process involves a careful evaluation of the project’s intricacies, understanding the nuances of the data, and forecasting future scalability needs. The chosen vector database becomes the backbone, laying the groundwork for subsequent stages in the embedding storage and retrieval journey.
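A back-of-the-envelope size estimate is often a useful starting point when forecasting scalability needs. The sketch below assumes uncompressed 32-bit floats and ignores index overhead and metadata. The collection size and dimension are hypothetical figures chosen for illustration.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Storage for the raw vectors only (no index structures or metadata)."""
    return num_vectors * dimensions * bytes_per_value

# Hypothetical workload: 10 million embeddings of 768 dimensions each.
size_bytes = raw_vector_bytes(10_000_000, 768)
size_gib = size_bytes / 2**30
print(f"{size_gib:.1f} GiB")
```

An estimate like this helps decide early whether the vectors fit in memory on one machine, or whether compression or a distributed deployment should be part of the evaluation.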

Integration with NLP Pipelines

The RESTful APIs and client libraries that vector databases provide are the main route for integrating the chosen database into NLP frameworks and LLM applications.

At this stage the vector database becomes part of the larger ecosystem, with the RESTful APIs carrying communication between the database and the broader NLP infrastructure.

Optimizing Search Performance

Efficient storage and retrieval depend on optimizing search performance. Here, developers select and tune the indexing methods and GPU acceleration capabilities of the chosen vector database.

These optimizations are tailored to the demands of LLM applications so that vector searches are both accurate and fast.
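One widely used family of indexing methods is the inverted-file (IVF) index, which groups vectors into cells and searches only the cells nearest the query. The sketch below is a simplified illustration with hand-picked centroids. The class name `IVFIndex` and the parameter name `nprobe` are assumptions for this example, and real systems learn the centroids from data.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

class IVFIndex:
    """Inverted-file index: vectors are bucketed by nearest coarse centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = [[] for _ in centroids]

    def add(self, key, vector):
        cell = min(range(len(self.centroids)),
                   key=lambda i: sq_dist(vector, self.centroids[i]))
        self.lists[cell].append((key, vector))

    def search(self, query, k=1, nprobe=1):
        # Visit only the nprobe cells whose centroids are closest to the query.
        order = sorted(range(len(self.centroids)),
                       key=lambda i: sq_dist(query, self.centroids[i]))
        candidates = [item for cell in order[:nprobe] for item in self.lists[cell]]
        candidates.sort(key=lambda item: sq_dist(query, item[1]))
        return [key for key, _ in candidates[:k]]

index = IVFIndex(centroids=[[0.0, 0.0], [10.0, 0.0]])
index.add("a", [4.9, 0.0])   # lands in cell 0
index.add("b", [5.2, 0.0])   # lands in cell 1
index.add("c", [0.0, 0.0])   # lands in cell 0

query = [5.01, 0.0]          # closest centroid is cell 1, true neighbor is "a"
fast = index.search(query, k=1, nprobe=1)
thorough = index.search(query, k=1, nprobe=2)
print(fast, thorough)
```

With `nprobe=1` the search scans fewer vectors but misses the true nearest neighbor, which sits just across a cell boundary. Raising `nprobe` recovers it at the cost of more comparisons. Tuning this kind of parameter is the speed versus accuracy trade-off described above.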

Language-specific Indexing

Where LLM applications involve multilingual content, it becomes important to choose a vector database that supports language-specific indexing and retrieval. This reflects the range of languages the LLM is expected to handle.

Language-specific indexing lets queries be matched against content in the intended language, which leads to more accurate search results across different languages.
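One simple way to picture language-specific retrieval is to keep a separate partition per language and search only the partition that matches the query. This is a hedged sketch of that idea, not the mechanism of any particular product. The class `PartitionedStore`, the language codes, and the toy vectors are all hypothetical.

```python
from collections import defaultdict

def dot(a, b):
    """Dot product, used here as the similarity score."""
    return sum(x * y for x, y in zip(a, b))

class PartitionedStore:
    """Keeps one vector collection per language code."""

    def __init__(self):
        self.partitions = defaultdict(dict)  # language -> {doc id: vector}

    def add(self, lang, doc_id, vector):
        self.partitions[lang][doc_id] = vector

    def search(self, lang, query, k=1):
        # Only documents in the requested language are considered.
        docs = self.partitions.get(lang, {})
        ranked = sorted(docs, key=lambda d: dot(query, docs[d]), reverse=True)
        return ranked[:k]

store = PartitionedStore()
store.add("en", "en-1", [1.0, 0.0])
store.add("en", "en-2", [0.0, 1.0])
store.add("de", "de-1", [1.0, 0.0])

english_hits = store.search("en", [0.9, 0.1], k=1)
german_hits = store.search("de", [0.9, 0.1], k=1)
missing_hits = store.search("fr", [0.9, 0.1], k=1)
print(english_hits, german_hits, missing_hits)
```

Partitioning also shrinks the search space for each query. An alternative design is a single index with a language metadata filter.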

Incremental Updates

A forward-thinking strategy involves the consideration of vector databases supporting incremental updates. This capability is crucial for LLM applications characterized by dynamically changing embeddings.

The vector database’s ability to efficiently store and retrieve these dynamic embeddings, adapting in real-time to the evolving nature of the data, becomes a pivotal factor in ensuring the sustained accuracy and relevance of the LLM application.
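The behavior described above can be illustrated with a small in-memory index that supports upserts and deletes without a rebuild. The class and method names here (`InMemoryIndex`, `upsert`, `delete`, `nearest`) are illustrative assumptions, not the API of any specific database.

```python
class InMemoryIndex:
    """Toy index where vectors can be added, replaced, or removed at any time."""

    def __init__(self):
        self.vectors = {}

    def upsert(self, key, vector):
        # Insert a new vector or overwrite the existing one for this key.
        self.vectors[key] = list(vector)

    def delete(self, key):
        self.vectors.pop(key, None)

    def nearest(self, query):
        """Key of the stored vector closest to the query (squared Euclidean)."""
        return min(
            self.vectors,
            key=lambda k: sum((a - b) ** 2 for a, b in zip(query, self.vectors[k])),
        )

index = InMemoryIndex()
index.upsert("doc", [1.0, 0.0])
index.upsert("other", [0.5, 0.5])
before = index.nearest([0.0, 1.0])

index.upsert("doc", [0.0, 1.0])      # the document was re-embedded
after_update = index.nearest([0.0, 1.0])

index.delete("doc")                  # the document was removed
after_delete = index.nearest([0.0, 1.0])
print(before, after_update, after_delete)
```

Each query reflects the latest state of the data. In a real system the harder part is keeping an approximate index structure consistent under such updates, which is why support for incremental updates is worth checking when comparing databases.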

This multifaceted approach to embedding storage and retrieval for LLM applications ensures that developers navigate the complexities of large language models with precision and efficacy, harnessing the full potential of vector databases.


Case Studies: Real-world Impact of Database Optimization with Vector Databases

The real-world impact of vector databases unfolds through compelling case studies across diverse industries, showcasing their versatility and efficacy in varied applications.

Case Study 1: Semantic Understanding in Chatbots

The implementation of Weaviate’s vector database in an AI chatbot leveraging large language models exemplifies the real-world impact on semantic understanding. Weaviate facilitates the efficient storage and retrieval of semantic embeddings, enabling the chatbot to interpret user queries within context.

The result is a chatbot that provides accurate and contextually relevant responses, significantly enhancing the user experience.

Case Study 2: Multilingual NLP Applications

VectorStore’s language-specific indexing and retrieval capabilities take center stage in a multilingual NLP platform.

The case study illuminates how VectorStore efficiently manages and retrieves embeddings across different languages, providing contextually relevant results for a global user base. This underscores the adaptability of vector databases in diverse linguistic landscapes.

Case Study 3: Image Generation and Similarity Search

In the world of image generation and similarity search, a company harnesses vector databases to streamline the storage and retrieval of image embeddings. By representing images as high-dimensional vectors, the vector database enables swift and accurate similarity searches, enhancing tasks such as image categorization, duplicate detection, and recommendation systems.

The real-world impact extends to the world of visual content, underscoring the versatility of vector databases.

Case Study 4: Movie and Product Recommendations

E-commerce and movie streaming platforms optimize their recommendation systems with vector databases. Movies or products are represented as high-dimensional vectors based on attributes like genre, cast, and user reviews, and the vector database retrieves the items whose vectors are closest to a user's preferences, which yields personalized recommendations.

This personalized touch elevates the user experience, leading to higher conversion rates and improved customer retention. The case study vividly illustrates how vector databases contribute to the dynamic landscape of recommendation systems.
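A minimal sketch of this idea averages the vectors of items a user liked into a profile and ranks the remaining items by cosine similarity to it. The catalog entries and their three-value attribute vectors are made up for illustration, and the averaging approach is one simple option among many.

```python
import math

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def recommend(liked, catalog, k=1):
    """Rank unseen items by similarity to the mean of the liked items."""
    dims = len(next(iter(catalog.values())))
    profile = [sum(catalog[t][i] for t in liked) / len(liked) for i in range(dims)]
    candidates = [t for t in catalog if t not in liked]
    candidates.sort(key=lambda t: cosine(profile, catalog[t]), reverse=True)
    return candidates[:k]

# Hypothetical items with (action, romance, comedy) attribute weights.
catalog = {
    "action_1": [1.0, 0.0, 0.0],
    "action_2": [0.9, 0.0, 0.1],
    "romance_1": [0.0, 1.0, 0.0],
    "action_3": [0.8, 0.1, 0.1],
}

picks = recommend(["action_1", "action_2"], catalog, k=1)
print(picks)
```

In production, the ranking step over the catalog is the nearest-neighbor query that the vector database handles.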

Case Study 5: Sentiment Analysis in Social Media

A social media analytics company transforms sentiment analysis with the efficient use of vector databases. Representing text snippets or social media posts as high-dimensional vectors, the vector database enables rapid and accurate sentiment analysis. This real-time analysis of large volumes of text data provides valuable insights, allowing businesses and marketers to track public opinion, detect trends, and identify potential brand reputation issues.

Case Study 6: Fraud Detection in Financial Services

The application of vector databases in a financial services company amplifies fraud detection capabilities. By representing transaction patterns as high-dimensional vectors, the vector database enables rapid similarity searches to identify suspicious or anomalous behavior.

In the world of financial services, where timely detection is paramount, vector databases provide the efficiency and accuracy needed to safeguard customer accounts. The case study emphasizes the real-world impact of vector databases in enhancing security measures.
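One common way to turn similarity search into an anomaly signal is to score a new transaction by its distance to its k-th nearest historical neighbor. The sketch below assumes made-up two-feature transaction vectors and an arbitrary threshold. Real systems use many more features and calibrate the threshold on labeled data.

```python
import math

def anomaly_score(tx, history, k=2):
    """Distance from tx to its k-th nearest neighbor in the history."""
    distances = sorted(math.dist(tx, past) for past in history)
    return distances[k - 1]

def is_anomalous(tx, history, k=2, threshold=5.0):
    """Flag transactions that sit far from any cluster of past behavior."""
    return anomaly_score(tx, history, k) > threshold

# Hypothetical (amount, hour-of-day bucket) vectors for one account.
history = [[10.0, 1.0], [12.0, 1.0], [11.0, 2.0], [9.0, 1.0]]

typical = is_anomalous([11.0, 1.0], history)
unusual = is_anomalous([500.0, 3.0], history)
print(typical, unusual)
```

The nearest-neighbor lookup is the part a vector database accelerates. The scoring rule on top of it stays this simple.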

The final word

In conclusion, efficient storage and retrieval of vector embeddings with vector databases is central to the success of machine learning and NLP applications, particularly those built on large language models.

This journey has unveiled the profound significance of vector databases, explored the diverse types and features they bring to the table, and provided insights into their application in LLM scenarios.

Real-world case studies have served as representations of their tangible impact, showcasing their ability to enhance semantic understanding, multilingual support, image generation, recommendation systems, sentiment analysis, and fraud detection.

By assimilating the insights shared in this exploration, developers embark on a path that brings them closer to harnessing the full potential of vector databases. These databases, with their adaptability, efficiency, and real-world impact, emerge as indispensable allies in the dynamic landscape of machine learning and NLP applications.