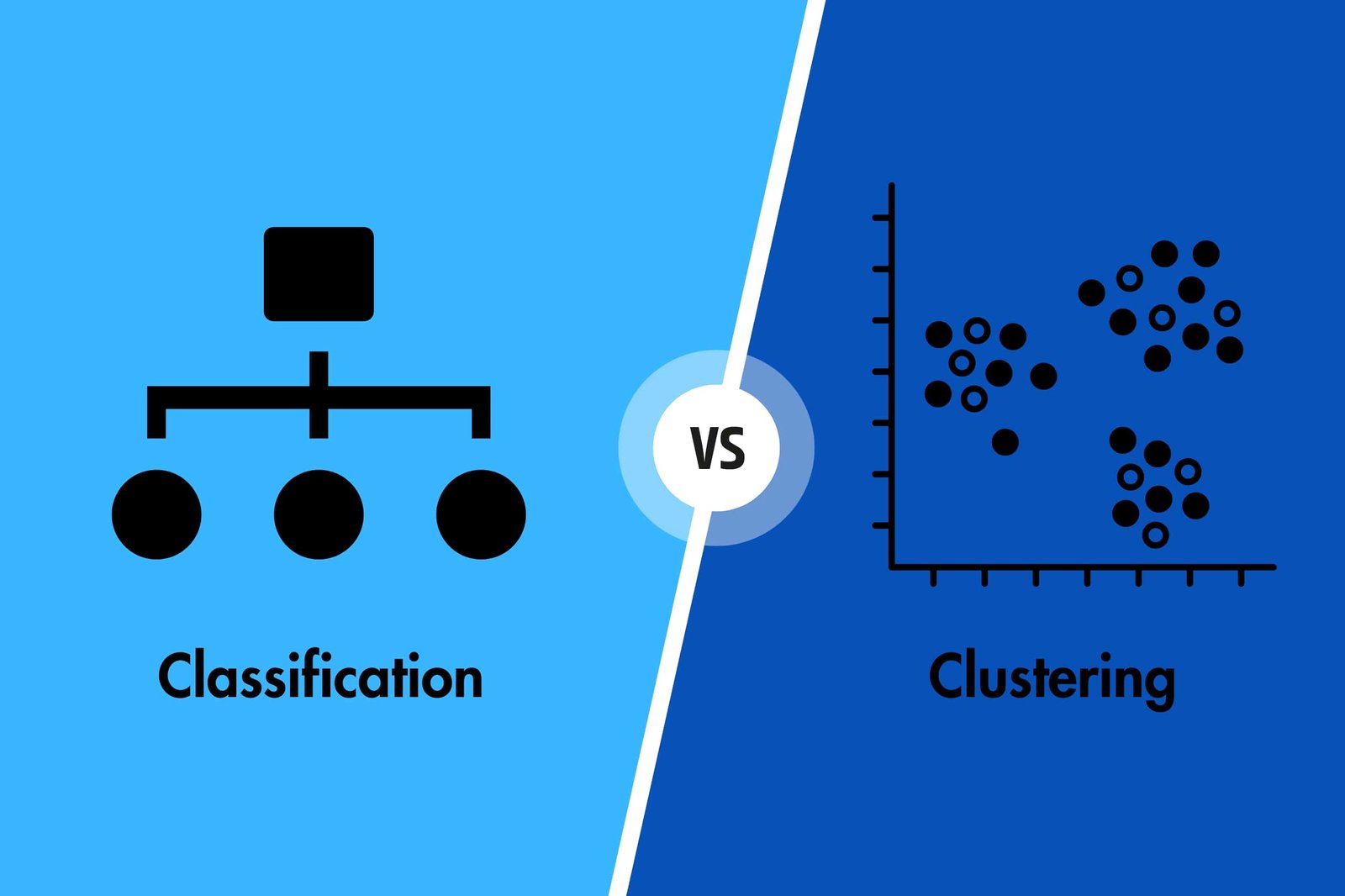

Machine Learning is a subset of Artificial Intelligence and Computer Science that uses data and algorithms to imitate the way humans learn, gradually improving its accuracy. As an important component of Data Science, it relies on statistical methods to train algorithms to make classifications and predictions. These predictions and classifications help uncover valuable insights in data mining projects. ML algorithms fall into various categories, which can broadly be characterised as Regression, Clustering and Classification. Classification is a directed (supervised) Machine Learning technique, whereas Clustering is an unsupervised one. This blog will take you on a journey to learn more about these algorithms and compare Classification vs. Clustering.

What is Classification?

Classification is a directed (supervised) approach in Machine Learning that helps organisations predict target values based on the input provided to a model. There are various types of classification, including binary classification and multi-class classification, among others. The type depends on the number of classes in the target values.

Types of Classification:

Logistic Regression

Logistic regression is a linear model used for classification. It estimates the likelihood of an event occurring by passing a weighted sum of the input features through the sigmoid function, which maps any value to a probability between 0 and 1. It is one of the best approaches when the target values are categorical.
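The sigmoid step becomes clearer in code. Below is a minimal sketch in plain Python that fits a one-feature logistic regression with batch gradient descent. The "hours studied" toy data, the learning rate and the number of epochs are illustrative assumptions. In practice you would normally reach for a library implementation such as scikit-learn's `LogisticRegression`.

```python
import math

def sigmoid(z):
    """Map any real number to a value in (0, 1), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    """Probability of class 1: the sigmoid of the weighted sum of the features."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def train_logistic_regression(X, y, lr=0.1, epochs=5000):
    """Fit weights w and bias b by batch gradient descent on the log-loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of the log-loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x, threshold=0.5):
    return 1 if predict_proba(w, b, x) >= threshold else 0

# Hypothetical toy data: hours studied -> passed (1) or failed (0)
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [5.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic_regression(X, y)
print(predict(w, b, [0.8]), predict(w, b, [4.5]))
```

The 0.5 threshold in `predict` is a common default. It can be raised or lowered when one kind of mistake costs more than the other.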

K-Nearest Neighbours (kNN)

K-Nearest Neighbours calculates the distance between a new data point and every point in the training data, using a distance metric such as Euclidean distance or Manhattan distance. To categorise the output, the new data point is assigned the class that wins a simple majority vote among its K closest neighbours.
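Here is a small sketch of the majority-vote idea, assuming small in-memory lists of points. The function names and the toy data are hypothetical.

```python
import math
from collections import Counter

def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def manhattan(a, b):
    return sum(abs(ai - bi) for ai, bi in zip(a, b))

def knn_predict(train_X, train_y, query, k=3, distance=euclidean):
    """Classify `query` by a simple majority vote of its k nearest training points."""
    # Distance from the query to every training point, closest first
    ranked = sorted(zip((distance(x, query) for x in train_X), train_y))
    k_labels = [label for _, label in ranked[:k]]
    return Counter(k_labels).most_common(1)[0][0]

# Hypothetical 2-D training data with two classes
train_X = [[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]]
train_y = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(train_X, train_y, [1.5, 1.5]))
print(knn_predict(train_X, train_y, [6.5, 6.0], distance=manhattan))
```

An odd value of K avoids tied votes in two-class problems. Because every prediction scans the whole training set, this brute-force version only suits small datasets.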

Decision Trees

Decision Trees are non-linear models, unlike logistic regression, which is a linear model. A Decision Tree builds the classification model as a tree structure made up of nodes and leaves. It applies a series of if-else tests that break a large dataset down into smaller and smaller subsets, and the leaves give the final result. Decision trees can be used for both regression and classification problems.
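Below is a minimal sketch of growing a tree from if-else splits. It assumes numeric features and uses Gini impurity as the splitting criterion, which is the criterion used by CART. The depth limit, the helper names and the loan-style toy data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 when every label belongs to the same class."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y):
    """Return the (feature, threshold) pair with the lowest weighted Gini impurity,
    or None if no split improves on the current node."""
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # candidate threshold halfway between neighbouring values
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(X, y, depth=0, max_depth=3):
    """Recursively split the data; each leaf stores the majority class."""
    split = best_split(X, y)
    if depth == max_depth or split is None:
        return Counter(y).most_common(1)[0][0]
    f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }

def predict_tree(tree, x):
    """Each internal node is one if-else test; follow the tests down to a leaf."""
    while isinstance(tree, dict):
        tree = tree["left"] if x[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree

# Hypothetical loan data: [age, income in thousands] -> decision
X = [[25, 20], [30, 60], [45, 80], [35, 30], [50, 90], [23, 15]]
y = ["reject", "approve", "approve", "reject", "approve", "reject"]
tree = build_tree(X, y)
print(tree)
```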

Random Forest

Random Forest is an ensemble learning approach that combines multiple decision trees, which can be beneficial when the data has a large number of attributes. Each decision tree is trained on a different random sample of the data, so each tree yields a distinct result. In a classification problem, the final prediction is the class that receives the most votes across the trees. In a regression problem, the prediction is the average of the values predicted by the individual trees.
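A random forest can be sketched by combining three ingredients: bootstrap sampling, random feature selection and majority voting. To keep the example short, each "tree" here is a single-split decision stump, whereas a real random forest grows much deeper trees. All names, parameters and data are hypothetical.

```python
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def fit_stump(X, y, features):
    """Best single split over the allowed features (a depth-1 decision tree).
    Returns (feature, threshold, left_label, right_label)."""
    majority = Counter(y).most_common(1)[0][0]
    best, best_score = (None, None, majority, majority), gini(y)
    for f in features:
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best_score = score
                best = (f, t, Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    return best

def stump_predict(stump, x):
    f, t, left_label, right_label = stump
    if f is None:  # no useful split was found: always predict the majority class
        return left_label
    return left_label if x[f] <= t else right_label

def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: len(X) rows drawn with replacement
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        # Random subset of the features that this tree may split on
        feats = rng.sample(range(len(X[0])), n_features)
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest

def forest_predict(forest, x):
    """Classification: the class with the most votes across all trees."""
    return Counter(stump_predict(s, x) for s in forest).most_common(1)[0][0]

# Hypothetical data: two well-separated groups
X = [[1, 1], [2, 1], [1, 2], [2, 2], [8, 8], [9, 8], [8, 9], [9, 9]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y)
print(forest_predict(forest, [1.5, 1.5]), forest_predict(forest, [8.5, 8.5]))
```

A regression forest would follow the same pattern. The only change is that `forest_predict` would return the mean of the trees' numeric predictions instead of the most common label.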

Naïve Bayes

Naïve Bayes is a classification method founded on Bayes’ Theorem. It assumes that the presence of one feature does not depend on the presence of any other feature; in other words, the features are treated as unrelated to one another within each class. Because of this assumption, it may not perform well on complex data. In most real datasets the features are related to each other in some way, so the assumption does not hold.
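As an illustration, here is a sketch of Gaussian Naïve Bayes. This is one common variant, and it assumes each numeric feature follows a normal distribution within each class. In the code, the independence assumption shows up as a plain sum of per-feature log-likelihoods. The data and names are hypothetical.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y):
    """For each class, store its prior plus the mean and variance of every feature."""
    rows_by_class = defaultdict(list)
    for row, label in zip(X, y):
        rows_by_class[label].append(row)
    model = {}
    for label, rows in rows_by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # A tiny constant keeps the variance positive for constant features
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(columns, means)]
        model[label] = (n / len(X), means, variances)
    return model

def log_gaussian(x, mean, var):
    """Log of the normal probability density at x."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)

def predict_nb(model, x):
    """Return the class with the highest log posterior (up to a shared constant).
    The naive independence assumption lets us simply add per-feature log-likelihoods."""
    def score(label):
        prior, means, variances = model[label]
        return math.log(prior) + sum(
            log_gaussian(xi, m, v) for xi, m, v in zip(x, means, variances))
    return max(model, key=score)

# Hypothetical measurements of two kinds of object
X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [5.0, 6.0], [5.5, 5.8], [4.8, 6.2]]
y = ["small", "small", "small", "large", "large", "large"]
model = fit_gaussian_nb(X, y)
print(predict_nb(model, [1.1, 2.0]), predict_nb(model, [5.1, 6.0]))
```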

Support Vector Machine

Support Vector Machine (SVM) is a classification algorithm that represents the data points in a multidimensional space. With n available features, each data point becomes a point in an n-dimensional space. SVM then finds the hyperplane that separates the data points into groups with the greatest possible margin.
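A linear SVM can be sketched by minimising the hinge loss plus a regularisation term with sub-gradient descent. This is a simplified training scheme, not the optimised solvers that SVM libraries use. The hyperparameters and the 2-feature toy data are assumptions.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.01, epochs=1000):
    """Sub-gradient descent on  lam/2 * ||w||^2 + hinge loss max(0, 1 - y * (w.x + b)).
    Labels must be +1 or -1."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Inside the margin or misclassified: move the hyperplane away from the point
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Correct with room to spare: only the regulariser acts, shrinking w (wider margin)
                w = [wj - lr * lam * wj for wj in w]
    return w, b

def svm_predict(w, b, x):
    """Which side of the hyperplane w.x + b = 0 the point lies on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Hypothetical linearly separable data with n = 2 features
X = [[1, 1], [2, 1], [1, 2], [5, 5], [6, 5], [5, 6]]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
print(w, b)
```

The margin between the two sides of the hyperplane is 2 / ||w||. This is why shrinking `w` through the regularisation term widens it.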

Applications

- Spam email detection

- Face recognition

- Predicting whether a client is likely to leave (customer churn)

- Bank loan approval

What is Clustering?

Clustering is a Machine Learning technique that belongs to the category of unsupervised learning. The purpose of a clustering algorithm is to create clusters out of a collection of data points that share certain properties. Data points in the same cluster should have similar characteristics, whereas data points in different clusters should be as dissimilar from one another as possible. There are two broad categories of clustering: soft clustering and hard clustering.

Types of Clustering

K-Means Clustering

K-Means clustering begins by fixing the number of clusters, K, and choosing K initial cluster centres. It then uses a distance metric to compute the distance separating each data point from each cluster centre, and assigns every data point to the group whose centre is nearest. Each centre is then moved to the mean of the points assigned to it, and the two steps repeat until the assignments stop changing.
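The two alternating steps can be sketched as follows. In the assignment step, each point goes to its nearest centre; in the update step, each centre moves to the mean of its points. The initial centres are picked at random from the data, which is only one of several common initialisation choices. The names and data are hypothetical, and `math.dist` needs Python 3.8 or later.

```python
import math
import random

def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate assignment and update steps until the centres stop moving."""
    rng = random.Random(seed)
    centres = rng.sample(points, k)  # initial centres: k data points chosen at random
    for _ in range(n_iter):
        # Assignment step: put every point in the cluster of its nearest centre
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[nearest].append(p)
        # Update step: move each centre to the mean of its cluster (keep it if the cluster is empty)
        new_centres = [tuple(sum(coords) / len(c) for coords in zip(*c)) if c else centres[i]
                       for i, c in enumerate(clusters)]
        if new_centres == centres:
            break  # converged: the assignments will no longer change
        centres = new_centres
    return centres, clusters

points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centres, clusters = kmeans(points, k=2)
print(sorted(centres))
```

Because the result depends on the initial centres, implementations often run K-Means several times with different seeds and keep the best run.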

Agglomerative Hierarchical Clustering

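Agglomerative hierarchical clustering is a bottom-up approach. Each data point starts as its own cluster, and clusters are merged step by step based on a distance metric and a linkage criterion, which defines how the distance between two clusters is measured.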
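Here is a naive sketch of bottom-up merging that uses single linkage as the linkage criterion; complete linkage is shown as an alternative. It recomputes every pairwise cluster distance on each merge, so it is only meant for small examples. The names and data are hypothetical.

```python
import math
from itertools import combinations

def single_linkage(a, b):
    """Distance between the closest pair of points, one from each cluster."""
    return min(math.dist(p, q) for p in a for q in b)

def complete_linkage(a, b):
    """Distance between the farthest pair of points, one from each cluster."""
    return max(math.dist(p, q) for p in a for q in b)

def agglomerative(points, n_clusters, linkage=single_linkage):
    clusters = [[p] for p in points]  # every point starts as its own cluster
    merges = []  # (merge distance, merged cluster): the information a dendrogram draws
    while len(clusters) > n_clusters:
        # Find the closest pair of clusters under the chosen linkage criterion
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda pair: linkage(clusters[pair[0]], clusters[pair[1]]))
        merges.append((linkage(clusters[i], clusters[j]), clusters[i] + clusters[j]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # combinations gives i < j, so index i is unaffected
    return clusters, merges

points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
clusters, merges = agglomerative(points, n_clusters=3)
print(clusters)
```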

Divisive Hierarchical Clustering

Divisive hierarchical clustering is a top-down approach. It begins with all the data points combined into one single cluster and then repeatedly divides it using a proximity (distance) metric together with a splitting criterion. Both agglomerative and divisive hierarchical clustering can be visualised as a dendrogram, which can also be used to determine the optimal number of clusters.
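One common way to carry out the divisive strategy is bisecting K-Means: repeatedly pick the most spread-out cluster and split it in two with 2-means. The sketch below assumes that strategy and uses the sum of squared errors (SSE) as the splitting criterion. The names and data are hypothetical.

```python
import math

def sse(cluster):
    """Sum of squared distances to the cluster mean: how spread out the cluster is."""
    mean = [sum(coords) / len(cluster) for coords in zip(*cluster)]
    return sum(math.dist(p, mean) ** 2 for p in cluster)

def split_in_two(cluster, n_iter=20):
    """Split one cluster with 2-means, seeded by two far-apart points so the result is deterministic."""
    a = max(cluster, key=lambda p: math.dist(p, cluster[0]))
    b = max(cluster, key=lambda p: math.dist(p, a))
    centres = [a, b]
    for _ in range(n_iter):
        halves = [[], []]
        for p in cluster:
            halves[0 if math.dist(p, centres[0]) <= math.dist(p, centres[1]) else 1].append(p)
        centres = [[sum(coords) / len(h) for coords in zip(*h)] for h in halves]
    return halves

def divisive(points, n_clusters):
    """Top-down clustering: start with one cluster and keep splitting the most spread-out one."""
    clusters = [list(points)]
    while len(clusters) < n_clusters:
        # Only clusters with at least two distinct points can be split
        splittable = [i for i, c in enumerate(clusters) if len(set(c)) > 1]
        if not splittable:
            break
        worst = max(splittable, key=lambda i: sse(clusters[i]))
        clusters.extend(split_in_two(clusters.pop(worst)))
    return clusters

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (20, 0), (21, 0)]
clusters = divisive(points, n_clusters=3)
print(clusters)
```

Recording the order of the splits would give the same kind of information a dendrogram displays, read from the top down.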

DBSCAN

DBSCAN is a density-based clustering algorithm. Algorithms such as K-Means perform well when there is a reasonable amount of space between clusters, and they tend to produce clusters with a spherical shape. DBSCAN is used when the clusters in the input have arbitrary shapes, and it is less susceptible to outliers than many other clustering techniques. It groups together data points that have a large number of other data points nearby, within a given radius.
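Below is a compact sketch of DBSCAN. It assumes Euclidean distance and follows the convention, also used by scikit-learn's `min_samples`, that a point counts itself among its neighbours. The names and data are hypothetical, and the brute-force neighbour search only suits small datasets.

```python
import math

def dbscan(points, eps, min_pts):
    """Return one label per point: cluster ids 0, 1, ... or -1 for noise."""
    labels = [None] * len(points)  # None = not visited yet

    def neighbours(i):
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbours(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # noise for now; may later turn out to be a border point
            continue
        # i is a core point: start a new cluster and grow it through dense neighbourhoods
        labels[i] = cluster_id
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # former noise becomes a border point (not expanded)
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_nbrs = neighbours(j)
            if len(j_nbrs) >= min_pts:  # j is also a core point: keep expanding
                queue.extend(j_nbrs)
        cluster_id += 1
    return labels

points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11), (50, 50)]
print(dbscan(points, eps=1.5, min_pts=3))
```

Unlike K-Means, the number of clusters is not fixed in advance. It follows from `eps` and `min_pts`, and the isolated point at (50, 50) is labelled as noise.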

OPTICS

OPTICS is a density-based clustering strategy similar to DBSCAN that takes a few additional factors into consideration and has a greater computational burden. Instead of explicitly breaking the data into clusters, it creates a reachability plot, which can aid understanding of the clustering structure.
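The reachability idea can be sketched as follows. Every point receives a reachability distance, and points are visited in an order that keeps dense regions together. Plotting `reach` in `ordering` order gives the reachability plot, where valleys correspond to clusters. This is a simplified, brute-force sketch that assumes Euclidean distance, and the names and data are hypothetical.

```python
import heapq
import math

def optics(points, eps, min_pts):
    """Return (ordering, reach): the visiting order of the points and each point's
    reachability distance (math.inf where a new dense region starts)."""
    n = len(points)

    def neighbours(i):
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    def core_distance(i, nbrs):
        """Distance to the min_pts-th closest neighbour (the point itself counts), or None."""
        if len(nbrs) < min_pts:
            return None  # not a core point
        return sorted(math.dist(points[i], points[j]) for j in nbrs)[min_pts - 1]

    reach = [math.inf] * n
    processed = [False] * n
    ordering = []
    for start in range(n):
        if processed[start]:
            continue
        seeds = [(math.inf, start)]  # min-heap of (reachability, point index)
        while seeds:
            r, i = heapq.heappop(seeds)
            if processed[i] or r > reach[i]:
                continue  # stale entry: a smaller reachability was pushed later
            processed[i] = True
            ordering.append(i)
            nbrs = neighbours(i)
            core = core_distance(i, nbrs)
            if core is None:
                continue  # only core points can make their neighbours reachable
            for j in nbrs:
                new_r = max(core, math.dist(points[i], points[j]))
                if not processed[j] and new_r < reach[j]:
                    reach[j] = new_r
                    heapq.heappush(seeds, (new_r, j))
    return ordering, reach

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
ordering, reach = optics(points, eps=5, min_pts=2)
print([reach[i] for i in ordering])  # the values a reachability plot would draw
```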

BIRCH

To organise the data into different groups, BIRCH first generates a compact summary of it. It then uses that summary, rather than the raw data points, to form clusters. However, it is limited to working with numerical attributes that can be expressed spatially.
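BIRCH's summary is built from Clustering Features (CFs). Each CF is a triple: the point count N, the linear sum LS and the squared sum SS of the points. The sketch below shows only a simplified summarising pass with a radius threshold. Real BIRCH organises the CFs in a height-balanced CF tree and then clusters the leaf entries, and both of those steps are omitted here. The function names and the threshold rule are simplifying assumptions.

```python
import math

def cf_of(point):
    """Clustering Feature of a single point: (N, linear sum LS, squared sum SS)."""
    return (1, list(point), sum(x * x for x in point))

def cf_merge(a, b):
    """CFs are additive: merging two summaries needs no access to the raw points."""
    return (a[0] + b[0], [x + y for x, y in zip(a[1], b[1])], a[2] + b[2])

def centroid(cf):
    n, ls, _ = cf
    return [x / n for x in ls]

def radius(cf):
    """RMS distance of the summarised points from their centroid: sqrt(SS/N - |LS/N|^2)."""
    n, _, ss = cf
    c = centroid(cf)
    return math.sqrt(max(ss / n - sum(x * x for x in c), 0.0))

def summarise(points, threshold):
    """One pass over the data: absorb each point into the nearest summary if the
    merged summary stays within `threshold`, otherwise start a new summary."""
    cfs = []
    for p in points:
        new = cf_of(p)
        if cfs:
            i = min(range(len(cfs)), key=lambda k: math.dist(centroid(cfs[k]), p))
            merged = cf_merge(cfs[i], new)
            if radius(merged) <= threshold:
                cfs[i] = merged
                continue
        cfs.append(new)
    return cfs

points = [(0, 0), (0, 2), (10, 10), (10, 12)]
cfs = summarise(points, threshold=1.5)
print([centroid(cf) for cf in cfs])
```

The summaries are built from sums and squared sums of coordinates, so this step only works on numerical data, as the paragraph above notes.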

Applications

- Market segmentation based on customer preferences

- Social network analysis

- Image segmentation

- Recommendation engines

Difference Between Classification and Clustering

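The main differences between Classification and Clustering can be summarised as follows:

- Type of learning: Classification is directed (supervised) learning, while Clustering is unsupervised learning.

- Training data: Classification learns from data whose target values are known, while Clustering works on data without target labels.

- Goal: Classification predicts which of a predetermined set of classes a data point belongs to, while Clustering groups data points with similar characteristics into clusters found in the data.

- Typical algorithms: Logistic Regression, kNN, Decision Trees, Random Forest, Naïve Bayes and SVM for Classification; K-Means, hierarchical clustering, DBSCAN, OPTICS and BIRCH for Clustering.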

Applications of Classification and Clustering:

Applications of Clustering

Clustering is one of the most important parts of the analysis process in Machine Learning. The different clustering methods are based on:

- Partitioning

- Hierarchical model

- Density

- Grid

- Model

Other applications of clustering include the following:

- Recommendation engines

- Customer and market segmentation

- Social network analysis

- Biological data analysis

- Analysis of X-rays in medicine

- Detection of cancer cells

Applications of Classification

Classification assigns a label to each data point by sorting the available data into a predetermined number of categories (classes). The two kinds of classifiers are:

- Binary classifier: the categorisation has two possible outcomes that correspond to two different classes. For instance, classifying email as spam or non-spam.

- Multi-class classifier: the categorisation involves more than two distinct classes. Examples include soil classification and music genre classification.

Further applications of classification include:

- Content classification

- Handwriting analysis

- Biometric fingerprinting

- Speech recognition

Most Common Classification Algorithms in Machine Learning

Classification tasks depend heavily on Machine Learning techniques, especially in Natural Language Processing. Each algorithm has its own purpose and suits certain kinds of problems, so which algorithm is deployed depends on the requirements.

In principle, any classification method can be applied to a dataset. However, classification is a broad discipline in statistics, and which technique works best depends on the dataset you are dealing with. The most common classification algorithms in ML are:

- Decision Tree

- K-Nearest Neighbours

- Support Vector Machines

- Naïve Bayes

- Logistic Regression

Conclusion

Thus, Classification and Clustering are two of the most effective Machine Learning techniques for enhancing business processes. The differences between Classification and Clustering described above show that you can use them in different ways to understand your customers and improve their experiences. By analysing and targeting consumers using ML techniques, businesses can build a loyal customer base and optimise their Return on Investment.

FAQ

What is the relationship between clustering and classification in machine learning?

Classification and Clustering are both Machine Learning techniques, but they are used for different purposes. Classification is a supervised Machine Learning technique used to predict which category a target value belongs to. Clustering is an unsupervised Machine Learning technique whose purpose is to create clusters out of a collection of data points that share certain properties.

What is hard and soft clustering in machine learning?

Hard and soft clustering are two different types of clustering methods in Machine Learning. In soft clustering, the output gives the probability (or degree) with which a data point belongs to each of a pre-defined number of clusters. In hard clustering, on the other hand, each data point belongs to exactly one cluster.
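To contrast the two, the sketch below takes fixed, hypothetical cluster centres and compares a hard assignment (the nearest centre) with soft memberships. The soft memberships are computed with the fuzzy c-means membership formula, which is one common way to produce soft assignments; Gaussian mixture models are another. These memberships are degrees of belonging rather than calibrated probabilities.

```python
import math

def hard_assign(point, centres):
    """Hard clustering: the index of the single nearest centre."""
    return min(range(len(centres)), key=lambda k: math.dist(point, centres[k]))

def soft_assign(point, centres, m=2.0):
    """Soft clustering via the fuzzy c-means membership formula.
    Returns one membership degree per cluster; the degrees sum to 1. `m` > 1 controls fuzziness."""
    d = [math.dist(point, c) for c in centres]
    if 0.0 in d:  # the point sits exactly on a centre: full membership there
        k = d.index(0.0)
        return [1.0 if i == k else 0.0 for i in range(len(d))]
    power = 2.0 / (m - 1.0)
    return [1.0 / sum((di / dk) ** power for dk in d) for di in d]

centres = [(0, 0), (10, 0)]  # hypothetical, already-computed cluster centres
print(hard_assign((2, 0), centres), soft_assign((2, 0), centres))
```

A point halfway between the two centres, such as (5, 0), gets an even split of membership under the soft scheme. A hard scheme must still force it into a single cluster.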

Why do we need clustering?

Data Scientists and others use clustering to gain important insights into data by applying a clustering algorithm and observing the groups of data points that emerge. Clustering is needed to identify groups of similar objects within a dataset with two or more variables. In practice, the data may come from marketing, biomedical and geospatial databases, among many other sources.