What Can AI Teach Us About Data Centers? Part 1: Overview and Technical Considerations

Conversational artificial intelligence has been around for almost 60 years now. Its first application was developed at the Massachusetts Institute of Technology in 1966, well before the dawn of personal computers.[1] The application most familiar to readers is much more recent: chatbots that enhance, or at least facilitate, the user experience on many websites. Recently, however, conversational AI has taken a giant leap forward.

Last November, researchers at OpenAI released a test version of conversational AI called ChatGPT, which allows people and machines to communicate in very human-like ways.[2] Ask it any question and you will usually get a well-crafted one-page response in just a few seconds. I used ChatGPT to learn a host of things about data centers and will list those below and in Parts 2 and 3 of this series. To help avoid errors, incomplete answers, and controversies about this technology, I also cite other professional literature and videos to supplement what ChatGPT said and to refer readers to more information about how it was created and how it works.

Using ChatGPT

The queries we can pose to ChatGPT are as varied as the population of users who try it out.[3] Over a million users tested it in just its first week after release,[4] and over 100 million people have used it since then. One of the cool things about this technology is its interactive nature: your conversations with it can go in any direction, along any tangent, or into any rabbit hole you like, as would many conversations you have in real life. Whether that happens with a large language model is largely, but not entirely, up to you.[5] For example, Bing's new ChatGPT-style chatbot has been known to stray into strange conversations, so it's up to you to keep your conversations with large language models on track.[5]

To fully grasp what ChatGPT has to offer, let your questions morph as you react to what it says. The more context, background, and feedback you provide to it as you rate its answers and ask additional questions, the more useful and focused its responses will be. Stop when you want or go back later for more.
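
One way to picture why context matters: chat-style models are typically handed the whole conversation so far each time they respond, so a follow-up question is read in light of the earlier turns. The sketch below is illustrative only. The names `ask` and `stub_model` are hypothetical, and the stub simply reports how many user messages it was given rather than calling any real service.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def stub_model(history: List[Message]) -> str:
    """Hypothetical stand-in for a chat model; it only reports how much context it saw."""
    user_turns = [m["content"] for m in history if m["role"] == "user"]
    return f"Answer informed by {len(user_turns)} user message(s)."


def ask(history: List[Message], question: str,
        model: Callable[[List[Message]], str] = stub_model) -> str:
    """Append the question, get a reply based on the full history, and record the reply."""
    history.append({"role": "user", "content": question})
    reply = model(history)
    history.append({"role": "assistant", "content": reply})
    return reply


history: List[Message] = []
first = ask(history, "What are the different types of data centers?")
second = ask(history, "Focus on edge data centers, and keep it brief.")
print(first)
print(second)
```

The second reply is produced with both questions in view, which is the mechanical reason that added background and follow-ups tend to yield more focused answers.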

Why Use AI to Learn About Data Centers and How Does It Work?

Why engage ChatGPT on a topic like data centers? Simply because the data center use case is broad, with several technical, staffing, business, political, and economic issues to consider. This post focuses on technical and staffing issues, while Parts 2 and 3 will focus on business and other issues, respectively. Such a wide range of topics makes it difficult to learn much without taking many different search approaches. A great deal of introductory information can be obtained much more quickly by starting with ChatGPT.

Thus, you may learn and convey more than what you already know on a topic, quickly and efficiently, by using ChatGPT. If you are a data scientist, manager, or executive with limited time and funds, wondering whether/how to invest in data centers and what the pros, cons, and costs would be, chances are you will start from a similar place as I — having some knowledge then looking for more, be that from humans, machines, or both. ChatGPT is well suited to this type of situation.

It is worth noting that ChatGPT is firmly in the camp of human and machine collaboration; it is not just machine derived. It uses a form of artificial intelligence called Reinforcement Learning from Human Feedback to produce answers based on human-guided computer analytics.[2] In other words, using a chatbot function, users enter a question, get an answer from ChatGPT, then tell ChatGPT how helpful that answer was and, most importantly, how the answer can be improved. This back and forth provides the information ChatGPT needs to revise its answers so they are better tailored to your needs. The pros and cons of this human/machine interaction are described later, and in OpenAI’s studies[2] and commentaries.[6]

Questions for AI About Data Centers

To learn more about data centers I began by asking ChatGPT what Chief Transformation Officers should know about them. This interaction is described in my upcoming article in CXOTech Magazine. Next, I asked what a data center typically looks like and how it should be staffed. Then I asked how much of a company’s budget is typically devoted to data center use, compared to budgets for many other parts of the business. Structural issues are covered below, while staffing, budgeting, and other business issues are covered in Part 2 of this series.

Alternatives to data centers such as cloud storage and hybrid solutions exist, so I asked ChatGPT to compare those as well, and its responses are below. Then I asked about the build or buy options to finance data centers or alternatives; this is covered in Part 2 as well.

Since a key function of a data center is to house data and help users prepare data for complex analytics, I also asked ChatGPT to comment on the ability to conduct high-performance computing with versus without a data center. Its response is below.

Finally, I asked ChatGPT about security concerns, some compelling political and economic controversies around them, and how data centers are likely to evolve in the next 5–10 years. These are addressed in Part 3 of this series.

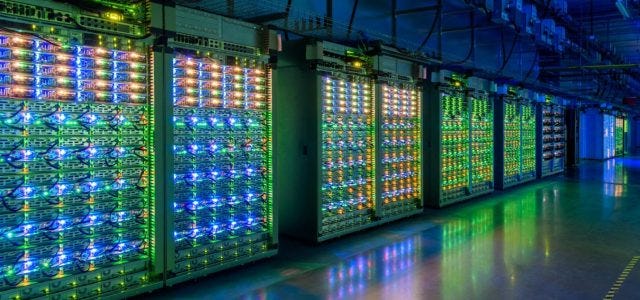

Photo from unsplash.com

Our Conversation About Technology

The following indented questions and answers are copied nearly verbatim from my interaction with ChatGPT. I did not correct any logic or substantive errors in this section, so you can see what to expect from this kind of interaction. I did correct several spelling and grammar mistakes, without changing the meaning of any responses. I also underlined several key phrases to make its text easier to track. My questions are in bold italics, and ChatGPT’s answers follow each question.

What are the different types of data centers, and what alternatives to using data centers exist?

There are several different types of data centers, each with its own unique characteristics and advantages:

1. Enterprise data centers: These are data centers that are owned and operated by a single organization and are used to support the organization’s internal IT needs.

2. Co-location data centers: These are data centers that are owned and operated by third-party providers and are used to house the IT equipment of multiple organizations.

3. Cloud data centers: These are data centers owned and operated by cloud providers, such as Amazon Web Services, Microsoft Azure, or Google Cloud Platform, and provide a range of services on a pay-as-you-go basis.

4. Edge data centers: These are data centers that are located closer to the edge of the network, where data is generated and consumed, rather than in central locations. Edge data centers are designed to support low-latency, high-bandwidth applications and services.

5. Hybrid data centers: This refers to a combination of different data center solutions such as using a mix of on-premises, co-location, and cloud-based data centers to meet specific needs.

Alternatives to using a data center:

1. Cloud Computing: Cloud computing is a popular alternative to using a data center, which allows organizations to outsource their IT needs to third-party providers and to access computing resources on-demand over the internet.

2. Remote Work: With technological advancements, companies are increasingly enabling remote work, which enables employees to work from anywhere and eliminates the need for a physical data center.

3. IoT (Internet of Things) Edge Computing: With the increasing number of connected devices and the amount of data generated, companies are implementing IoT edge computing, which uses edge devices, such as gateways, routers, or even small-scale data centers, to process and analyze data closer to the source, and reduce the need for central data centers.

It’s important to note that the choice of data center type, or even alternatives, will depend on the organization’s specific requirements, such as data security, compliance, cost, scalability, and more. Therefore, it’s important to conduct a thorough analysis of the organization’s needs before making a decision.

What is an edge data center?

An edge data center is a type of data center that is located closer to the edge of a network, such as at the edge of a city or a remote location, rather than in a centralized location. This allows for faster and more efficient processing of data by reducing the distance that data must travel.

Edge data centers are typically smaller than traditional, centralized data centers and can be used to support a wide range of applications, including Internet of Things (IoT) devices, 5G networks, and autonomous vehicles. They can also be used to support edge computing, which is a distributed computing model that involves processing data at or near the source of data collection, rather than sending it to a centralized location for processing.

One of the main advantages of edge data centers is that they can reduce latency and improve the responsiveness of applications and services, as data doesn’t have to travel as far to be processed. This is important for real-time applications such as autonomous vehicles, virtual and augmented reality and other low-latency applications.

Additionally, edge data centers can also provide greater security, as data can be processed and stored closer to the source, reducing the risk of data breaches. Also they can offload traffic from the centralized data centers which can help reduce the cost of data transfer and improve overall network performance.
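
As an aside that is not part of ChatGPT's answer, the latency point can be made concrete with rough arithmetic. Light in optical fiber travels at roughly 200,000 km per second (about two-thirds of its speed in a vacuum), so distance alone sets a floor on round-trip time. The distances below are made-up illustrations, and real latency also includes routing, queuing, and processing delays that this sketch ignores.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Hypothetical distances, chosen only for illustration.
central_km = 2000  # user to a distant centralized data center
edge_km = 50       # user to a nearby edge data center

central_ms = round_trip_ms(central_km)
edge_ms = round_trip_ms(edge_km)
print(f"Centralized: {central_ms:.1f} ms, edge: {edge_ms:.1f} ms")
```

Under these assumed distances the propagation floor drops from about 20 ms to about 0.5 ms, which is the kind of difference that matters for real-time applications.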

What does the infrastructure of a data center look like?

The infrastructure of a data center typically includes several key components:

1. Racks: Servers, storage systems, and networking equipment are housed in racks that are usually arranged in rows.

2. Power Distribution Units (PDUs): These units distribute electrical power to the equipment in the racks.

3. Uninterruptible Power Supply (UPS): Provides backup power in the event of a power outage, to keep the equipment running long enough to perform an orderly shutdown.

4. Cooling systems: Data centers generate a lot of heat, so they need cooling systems to keep the temperature at a safe level. This can include air conditioning units, chillers, and computer room air handlers (CRAHs).

5. Networking equipment: This includes routers, switches, and firewalls that are used to connect the servers and storage systems to the Internet and to other parts of the organization.

6. Management software and tools: These are used to monitor and manage the data center’s performance, including tools for monitoring server and storage usage, and for automating routine tasks such as backups and software updates.

7. Security: Physical and electronic security are important aspects of data center infrastructure, and typically include measures such as security cameras, biometric access controls, and fire suppression systems.

All these items are connected in a central network and managed by Data Center Operation Team. All the systems are connected through cabling, fiber optics and via wireless interfaces.

What are the similarities and differences between data centers, data lake houses, and data lakes?

Data centers, data lake houses, and data lakes are all related to data storage and management, but they have some key differences.

A data center is a physical facility that houses servers and other computer equipment used for storing, processing, and managing data. They are typically used by organizations to store and manage their own data.

A data lake house is a hybrid approach that combines the benefits of a data lake and a data warehouse. A data lake house uses a data lake as the primary storage, but also integrates a data warehouse-like structure and management capabilities, such as a relational schema, to enable faster query processing and better governance.

A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. Data lakes are designed to handle large volumes of data and can store data in its raw format, without enforcing any structure. This allows for flexible data ingestion and retrieval, but also requires additional management and processing to make the data usable for analysis.

In summary, data centers are physical facilities, data lake houses are hybrids between data lake and data warehouse, and data lakes are a centralized storage for all types of data, unstructured and structured.
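
As an aside that is not part of ChatGPT's answer, the contrast between storing raw data and enforcing structure can be sketched in a few lines. This is a toy illustration with hypothetical names (`REQUIRED_FIELDS`, `conforms`), not a description of how any particular product works: the "lake" list accepts every record as it arrives, while the "lakehouse-style" table admits only records that match an expected schema.

```python
from typing import Any, Dict, List

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"server_id": str, "cpu_percent": float}


def conforms(record: Dict[str, Any]) -> bool:
    """Return True if the record has every required field with the expected type."""
    return all(
        isinstance(record.get(name), expected)
        for name, expected in REQUIRED_FIELDS.items()
    )


incoming: List[Dict[str, Any]] = [
    {"server_id": "rack1-a", "cpu_percent": 71.5},
    {"server_id": "rack1-b", "note": "free-text log line, no metrics"},
    {"raw": "unparsed sensor dump"},
]

# Raw storage keeps everything, structured or not.
data_lake = list(incoming)
# A schema-enforcing table keeps only records that fit the expected structure.
structured_table = [r for r in incoming if conforms(r)]

print(len(data_lake), len(structured_table))
```

The raw store is more flexible at ingestion time, while the structured table is easier to query and govern, which mirrors the trade-off described above.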

Not a cloud computer😊 Photo from unsplash.com

Is cloud computing just using someone else’s data center?

Cloud computing is a service model in which users can access and use shared computing resources, such as servers, storage systems, and software, over the internet. These resources are typically owned and operated by a third-party provider, known as a cloud provider, and are available to users on-demand, often on a pay-as-you-go or subscription basis.

While cloud computing often involves the use of a cloud provider’s data center, it is not limited to that. Cloud providers offer a range of services, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS) which are all different types of cloud services.

IaaS is a service that provides customers with access to virtualized computing resources, such as servers and storage, over the internet. This can include the use of a cloud provider’s data center.

PaaS is a service that provides customers with a platform for developing, testing, and deploying software applications, without the need to manage the underlying infrastructure.

SaaS is a service that provides customers with access to software applications over the internet, without the need to install or run the applications on their own computers.

So, in summary, cloud computing can include the use of someone else’s data center, but it also encompasses a range of other services and technologies.

Photo from Unsplash.com

Can high-performance computing or quantum computing be done without a data center?

High-performance computing (HPC) and quantum computing are computational tasks that require powerful hardware and software resources. Both types of computing can be done without a data center, but it would require specialized equipment and a significant investment.

For HPC, it’s possible to use a cluster of powerful workstations or servers, each with multiple processors and large amounts of memory. These systems can be used to run HPC workloads, such as simulations and modeling, but they would be less efficient, and more expensive in terms of power consumption, cooling and space when compared to a data center designed for HPC workloads.

For Quantum computing, the hardware required to run quantum computing tasks is still in the early stages of development, and not yet available to the general market. The current systems are typically housed in research institutions or specialized data centers, and they need to be kept under controlled conditions with specialized cooling and power systems. Therefore, it is less likely for a company to invest in a dedicated quantum computing setup without a data center for this purpose.

In summary, it is technically possible to perform high-performance computing and quantum computing without a data center, but it would typically require specialized equipment and a significant investment. Additionally, data centers provide a cost-effective way to perform these types of computing tasks by providing the necessary resources, power and cooling, and physical security required for these tasks.

Discussion and Limitations

When I first tried ChatGPT last January, it reminded me of the futuristic computer frequently relied upon by Captain James T. Kirk or First Officer Spock on the original Star Trek television series from the 1960s. Just for fun I asked ChatGPT to provide examples of the ship’s crew using their computer and to contrast it with ChatGPT’s capabilities. Here’s what it said:

In the Star Trek series, Captain Kirk frequently used the ship’s computer, known as the Enterprise’s computer, to access information and perform advanced calculations. For example, in the episode “The Squire of Gothos,” Kirk uses the computer to learn about the planet Gothos and the alien being Trelane who inhabits it. In another episode “The Doomsday Machine,” Kirk uses the computer to analyze data on an unknown planet-destroying weapon, allowing the crew to find a way to destroy it.

ChatGPT is a language model that can understand and generate text, but it does not have the same capabilities as Enterprise’s computer. ChatGPT can answer questions, provide information, and generate text based on what it has learned from the large amounts of text it has been trained on, but it does not have the same level of advanced computation and data analysis capabilities as the Enterprise’s computer. It is a language model with the ability to generate human-like text based on the input it receives, but it doesn’t have the ability to perform complex computations and simulations like the Enterprise computer has.

Alas, we are making progress but are not quite there yet!

Limitations

The hype about ChatGPT is already huge, so let’s consider some of its limitations in the context of our data center use case.

A key lesson when using ChatGPT is to supplement it with other source material, to generate a more complete knowledge set.

For example, when I typed the title of this paper into a Bing search, I came across an article about other uses of AI that can be leveraged by data centers. John Edwards notes seven that ChatGPT did not mention in as much detail.[7] Quoting from his article, these include:

1. Many different types of data centers can benefit from using AI …. but the ones that generally benefit the most are large-scale facilities, such as big enterprise data centers, public cloud data centers, co-location data centers, and outsourced data centers.

2. AI helps data centers become more efficient, help(ing) reduce power, report cooling inefficiencies, and analyze the health status of critical mission systems.

3. AI leads to stronger data center security.

4. AI can optimize data center performance by relentlessly monitoring and tweaking resources, including processing, networking, and memory.

5. AI is set to improve infrastructure management. AI will soon be able to predict, to the moment, when specific facilities will need service, upgrading, and replacing.

6. AI is becoming a powerful data center planning tool … to plan and provision power resources, for example, as well as to forecast cooling needs.

7. AI will manage an increasing number of data center tasks with little or no human involvement.

Edwards provides more context for each item and his article is well worth reading.

I also typed other questions above into a Bing search, and sometimes found additional material that might be useful. Examples include mention of a different type of data center, a hyperscale data center (which basically means a very big one, with up to millions of servers),[8] and additional specificity about the types of skills data centers need.[9] These were not mentioned by ChatGPT. This should be no surprise, because ChatGPT is a summarizer, not a census taker. It won’t count and list everything it finds; it is just trying to provide a subset of key points it is guided toward by the questions we ask it.[4]

Next, every answer obtained from ChatGPT was limited by how I phrased my questions. Since AI is not sentient, it cannot infer what the user has in mind as conversations progress. These are known limitations.[2] The lesson here is that if you want additional context, you should provide that for the AI to consider when you type your questions. If you don’t know what to ask, gather information from a trusted advisor and/or another search engine, or from peer-reviewed or professional literature before and after engaging with ChatGPT.

Users who want to dig much deeper with other sources of text can find several books about data centers on Amazon.com or elsewhere. My Bing search for data center textbooks yielded a list of 18 books, all of which focused on heavily technical aspects. A shorter list and brief summary of 11 books was posted in an article by Tess Hanna, in the Best Practices section on Medium.com, on September 16, 2020.[10] Amazon listed only a few textbooks, which is another reason to cast a broad net in your search for knowledge.

Another key limitation of ChatGPT is that the data it mines is primarily from 2021 and earlier. This is in stark contrast to search engines such as Google, Bing, etc., which include more recent information.[4] Users who want more information about data centers or other topics should consult their favorite search engines.

Finally, concerns about the accuracy of all language models have been noted,[11] and ChatGPT is no exception. Its developers have learned much from their earlier efforts and now search for online content to summarize by focusing on databases expected to be more accurate, with less inflammatory or explicit material, and with less misinformation. This will always be a work in progress.

Conclusion

Readers are advised to use multiple platforms to find information about any topic of interest to them. AI’s utility for data centers is no exception. Looking beyond ChatGPT for information about its pros and cons and for information about your subject matter of interest will generate a more complete knowledge base, leading to better decision making. Despite its limitations ChatGPT is a helpful tool because of its ability to provide several quick and reasonably detailed insights, as it did regarding data centers. It won’t be perfect but what query or knowledge gathering process ever is?

Note About Authorship

Finally, there is a brewing controversy about whether ChatGPT should be listed as an author of professional papers. Some journal editors frown upon this because a key attribute of authorship is accepting responsibility for written contributions, analytic methods, the use of underlying theory and other conceptual material, and any interpretations offered. ChatGPT does not have that inherently human ability.[12] For this paper on data centers, I take full responsibility for content because I guided ChatGPT in its quest to answer my questions. Nevertheless, I list it as a co-author because it developed much of the text, so it deserves credit for that. Readers are likely to vary in their approaches and are advised to consider this issue as they use this great new technology.

References

1. The history and evolution of chatbots, on https://insights.daffodilsw.com/blog/the-history-and-evolution-of-chatbots#:~:text=The%20first-ever%20chatbot%20was%20introduced%20even%20before%20the,output%20according%20to%20a%20defined%20set%20of%20rules.

2. ChatGPT: Optimizing language models for dialogue, on https://openai.com/blog/chatgpt/

3. Boyd E. General availability of Azure OpenAI Service expands access to large advanced AI models with added enterprise benefits, on https://azure.microsoft.com/en-us/blog/general-availability-of-azure-openai-service-expands-access-to-large-advanced-ai-models-with-added-enterprise-benefits/?utm_source=substack&utm_medium=email

4. Singh S, ChatGPT vs Google: The ultimate comparison of 2023, on https://www.demandsage.com/chatgpt-vs-google/

5. Roose K, A Conversation With Bing’s Chatbot Left Me Deeply Unsettled, on https://www.nytimes.com/2023/02/16/technology/bing-chatbot-microsoft-chatgpt.html

6. ChatGPT: The most advanced AI chatbot in 2022, on https://chatgpt.pro/

7. Edwards J, 7 things to know about AI in the data center, CIO December 20, 2018

8. Maysteel Industries, Types of data centers: How do you choose the right data center? On https://maysteel.com/blog/types-of-data-centers-how-do-you-choose-the-right-data-center

9. Data center career paths (techtarget.com)

10. Hanna T. The 11 most essential books for data center directors, on https://solutionsreview.com/backup-disaster-recovery/the-most-essential-books-for-data-center-directors/#:~:text=The%2011%20Most%20Essential%20Books%20for%20Data%20Center,Migrating%20to%20New%20Data%20Centers%20…%20More%20items

11. Radford A, Wu J, Child R, et al. Language models are unsupervised multitask learners, on https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf

12. Stokel-Walker C, ChatGPT listed as author on research papers: many scientists disapprove, Nature, January 18, 2023, on https://www.nature.com/articles/d41586-023-00107-z

Article by Ron Ozminkowski, PhD and ChatGPT

Ron is an internationally recognized consultant, analytics leader, and chief scientist whose published research has been viewed by people in over 90 countries. Educated in the SUNY system and The University of Michigan. Specializing in healthcare analytics, business strategy, economics, policy, and program evaluation. Founder & President of Analytics Strategy & Consulting LLC. Known for exceptional service to clients in The White House, federal and state agencies, life sciences companies, large employers and insurers, industry groups, and health systems. Over 100 endorsements each for leadership, analytic skills, and industry knowledge, on LinkedIn (see https://www.linkedin.com/in/ronozminkowski/). More info also can be found on https://www.analyticstrategiesconsulting.com and ronozminkowski.wordpress.com.

Originally posted on OpenDataScience.com
