An open-source, low-code Python wrapper for easy use of Large Language Models such as ChatGPT, AutoGPT, LLaMA, GPT-J, and GPT4All

An introduction to “pychatgpt_gui”: a GUI-based app for LLMs with custom-data training and pre-trained inference.

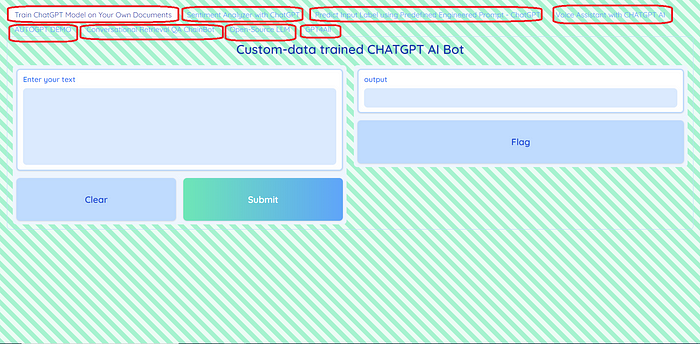

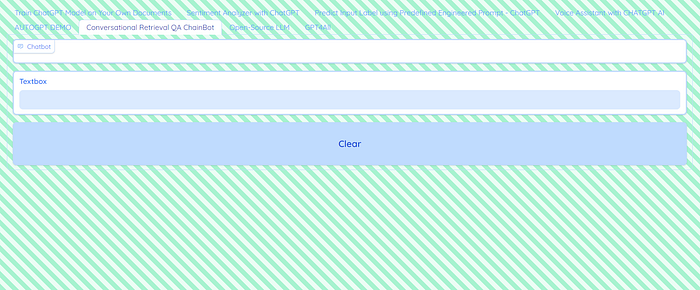

Hi friends, in this article I will walk you through a newly built web app for Large Language Models (LLMs). Please note: this work started only recently and is still largely a work in progress. The snapshots show the launched app, with the different services it provides highlighted.

Introduction

pyChatGPT GUI is an open-source, low-code Python GUI wrapper that provides easy access to, and quick use of, Large Language Models (LLMs) such as ChatGPT, AutoGPT, LLaMA, GPT-J, and GPT4All, for both custom-data and pre-trained inference.

The app provides a simple web interface to these models, along with several built-in utilities for direct use. This significantly lowers the barrier for practitioners who want to apply the Natural Language Processing (NLP) capabilities of LLMs to their specific use cases. The core idea is to cater to all audiences, with little dependence on technical expertise.

Features

The app consists of the following components, each providing one of the services listed below:

- ChatGPT model inference on your own documents

- Sentiment Analyzer with ChatGPT

- Input Label Prediction using a Predefined Engineered Prompt

- ChatGPT-based Voice Assistant Bot

- Auto-GPT inference for your custom requirements

- Generative Q&A bot with a conversational retrieval chain for your custom data

- LLaMA and GPT-J model inference

- GPT4All model inference

All of these applications are intuitive and straightforward to use.
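To make the sentiment analyzer and the "predefined engineered prompt" idea concrete, here is a minimal sketch, not the app's actual code: a fixed prompt template that asks the model for exactly one label, plus a parser that maps the model's free-form reply back onto the allowed labels. The function names, the prompt wording, and the "unknown" fallback are hypothetical; the call to ChatGPT itself is left out.

```python
from typing import List


def build_label_prompt(text: str, labels: List[str]) -> str:
    """Assemble a predefined, engineered prompt that asks for exactly one label."""
    options = ", ".join(labels)
    return (
        f"Classify the text into exactly one of these labels: {options}.\n"
        "Answer with the label only.\n\n"
        f"Text: {text}\nLabel:"
    )


def parse_label(reply: str, labels: List[str], default: str = "unknown") -> str:
    """Map a free-form model reply (e.g. ' Positive.') onto an allowed label."""
    cleaned = reply.strip().lower()
    for label in labels:
        if cleaned.startswith(label.lower()):
            return label
    # The model ignored the instructions; report that instead of guessing.
    return default


labels = ["positive", "negative", "neutral"]
prompt = build_label_prompt("I love this app!", labels)
# `prompt` would be sent to ChatGPT; here we only parse a sample reply.
print(parse_label(" Positive.", labels))
```

Constraining the answer format in the prompt and validating it afterwards keeps the downstream label set closed, which matters when the labels feed a dashboard or a further processing step.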

Large Language Models & Frameworks Used: Overview

Large language models (LLMs) are AI models trained on large text corpora or multimodal datasets, which enables them to understand and respond to human queries in natural language.

ChatGPT: ChatGPT is an AI chatbot developed by OpenAI and released in November 2022. It is built on top of OpenAI’s Generative Pre-trained Transformer (GPT-3.5 and GPT-4) foundation models and has been fine-tuned using both supervised and reinforcement learning techniques.

Auto-GPT: Auto-GPT is an “autonomous AI agent” that, given a goal in natural language, lets Large Language Models (LLMs) think, plan, and execute actions for us autonomously. It uses OpenAI’s GPT-4 or GPT-3.5 APIs and is among the first examples of an application using GPT-4 to perform autonomous tasks.

LLaMA: LLaMA (Large Language Model Meta AI) was released by Meta AI in February 2023. LLaMA generates text recursively: it takes a sequence of words as input, predicts the next word, appends it, and repeats. LLaMA models can be used for a wide variety of applications, such as chatbots, virtual assistants, and text content creation.
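The "predict the next word, append it, repeat" loop described above can be sketched with a toy stand-in for the model. This is an illustration of autoregressive (greedy) decoding only: the hard-coded score table is hypothetical, and unlike LLaMA it looks only at the last word rather than the whole context.

```python
from typing import Dict, List

# Hypothetical next-word scores standing in for a neural network.
# A real LLM scores every vocabulary token given the *entire* preceding context.
NEXT_WORD_SCORES: Dict[str, Dict[str, float]] = {
    "<s>": {"large": 0.9, "small": 0.1},
    "large": {"language": 0.8, "models": 0.2},
    "language": {"models": 0.95, "</s>": 0.05},
    "models": {"generate": 0.6, "</s>": 0.4},
    "generate": {"text": 0.9, "</s>": 0.1},
    "text": {"</s>": 1.0},
}


def generate(prompt: List[str], max_new_tokens: int = 10) -> List[str]:
    """Greedy autoregressive decoding: predict the next word, append it, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = NEXT_WORD_SCORES.get(tokens[-1], {"</s>": 1.0})
        next_word = max(scores, key=scores.get)  # greedy: take the top-scoring word
        if next_word == "</s>":  # end-of-sequence marker stops generation
            break
        tokens.append(next_word)
    return tokens


print(" ".join(generate(["<s>"])[1:]))  # large language models generate text
```

Real models usually sample from the predicted distribution (temperature, top-k, top-p) instead of always taking the maximum, which is why the same prompt can yield different outputs.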

GPT-J: GPT-J is an open-source alternative to OpenAI’s GPT-3 whose primary goal is to democratize large language models.

GPT4All: GPT4All is a 7-billion-parameter open-source language model that you can run on your desktop or laptop to create powerful assistant chatbots. It was fine-tuned on a curated set of about 400k assistant-style generations from GPT-3.5-Turbo.

GPT4All-J: GPT4All-J is a chatbot trained on a massive curated corpus of assistant interactions, including word problems, multi-turn dialogue, code, poems, songs, and stories. It builds on the March 2023 GPT4All release by training on a significantly larger corpus.

LangChain: LangChain is a software framework for developing applications powered by large language models. We can use it for chatbots, Generative Question-Answering (GQA), summarization, etc.

Who can try pyChatGPT GUI?

pyChatGPT GUI is an open-source package ideal for, but not limited to:

- Researchers for quick Proof-Of-Concept (POC) prototyping and testing.

- Students and Teachers.

- ML/AI Enthusiasts and Learners.

- Citizen Data Scientists who prefer a low code solution for quick testing.

- Experienced Data Scientists who want to try out different use-cases as per their business context for quick prototyping.

- Data Science Professionals and Consultants involved in building Proof-Of-Concept (POC) projects.

A Quick Walkthrough: pyChatGPT GUI

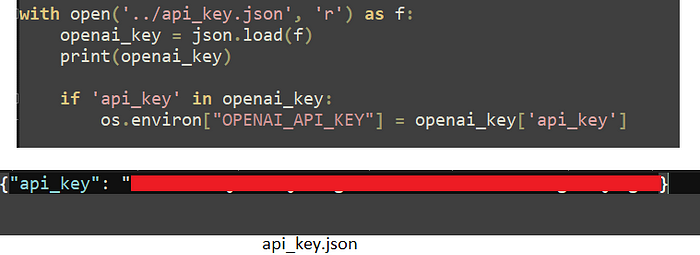

We used Gradio, an open-source Python package that provides a user-friendly web interface for quick, ready-to-use apps, to build the app for LLMs. The app reads your OpenAI API key from the “api_key.json” file, so you have to replace the empty (null) string in that file with your own API key. If you are unfamiliar with how to get an OpenAI API key, check this link: https://www.howtogeek.com/885918/how-to-get-an-openai-api-key/
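A minimal sketch of reading the key from “api_key.json”, assuming the file holds a single field such as {"api_key": "sk-..."}. The field name `api_key` and the function name are hypothetical; the repository's actual file layout may differ.

```python
import json
from pathlib import Path


def load_openai_api_key(path: str = "api_key.json", field: str = "api_key") -> str:
    """Read the OpenAI API key from a small JSON file.

    Assumption: the file looks like {"api_key": "sk-..."}; the field name
    used in the actual repository may differ.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    key = (data.get(field) or "").strip()  # treats JSON null like an empty string
    if not key:
        # An empty value means the placeholder was never replaced.
        raise ValueError(f"No API key in {path!r}: replace the empty string with your own key.")
    return key
```

Once loaded, the key can be exported as the `OPENAI_API_KEY` environment variable, which the official `openai` Python client reads by default. Failing loudly on an empty value gives a clearer error than a rejected API request later on.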

The table below shows different Auto-GPT examples with their user-defined roles. With Auto-GPT, we aim to build autonomous AI agents that achieve our goals by letting Large Language Models think, plan, and execute actions for us. This removes the need for step-by-step user prompts, because the agent decides its own next actions, an idea often associated with the concept of Artificial General Intelligence (AGI).

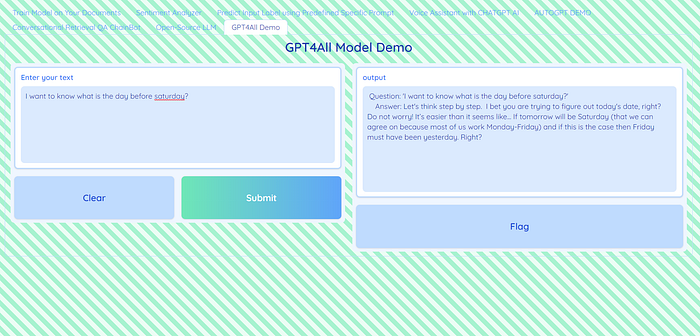
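The think, plan, and act cycle behind such agents can be sketched as a loop: build a system prompt from the user-defined name, role, and goals; ask the model for the next command; run it; and feed the result back. This is a simplified illustration, not Auto-GPT's actual code. The JSON command format, the `finish` command, the `write_note` tool, and the scripted fake model are all hypothetical stand-ins for a real GPT-4/GPT-3.5 call and real tools.

```python
import json
from typing import Callable, Dict, List


def build_agent_prompt(name: str, role: str, goals: List[str]) -> str:
    """Assemble a system prompt from a user-defined name, role, and goals."""
    goal_lines = "\n".join(f"{i}. {g}" for i, g in enumerate(goals, start=1))
    return (
        f"You are {name}, {role}\nGOALS:\n{goal_lines}\n"
        'Reply only with JSON: {"command": <name>, "args": {...}}. '
        'Use the command "finish" when all goals are met.'
    )


def run_agent(
    llm: Callable[[List[Dict[str, str]]], str],
    tools: Dict[str, Callable[..., str]],
    name: str,
    role: str,
    goals: List[str],
    max_steps: int = 5,
) -> List[str]:
    """Ask the LLM for a command, execute it, and feed the result back."""
    messages = [{"role": "system", "content": build_agent_prompt(name, role, goals)}]
    log: List[str] = []
    for _ in range(max_steps):  # hard cap so the agent cannot loop forever
        reply = llm(messages)
        messages.append({"role": "assistant", "content": reply})
        action = json.loads(reply)
        command, args = action["command"], action.get("args", {})
        if command == "finish":
            log.append("finish")
            break
        result = tools[command](**args)
        log.append(f"{command} -> {result}")
        # The tool output becomes the model's next observation.
        messages.append({"role": "user", "content": f"Result of {command}: {result}"})
    return log


if __name__ == "__main__":
    scripted = iter([
        '{"command": "write_note", "args": {"text": "outline"}}',
        '{"command": "finish"}',
    ])

    def fake_llm(messages: List[Dict[str, str]]) -> str:
        return next(scripted)  # replays canned replies instead of calling GPT

    def write_note(text: str) -> str:
        return f"saved {text}"

    print(run_agent(fake_llm, {"write_note": write_note},
                    "WriterGPT", "an AI that drafts blog posts.", ["Outline a post"]))
```

The `max_steps` cap is a deliberate design choice: autonomous agents can otherwise keep issuing commands (and API calls) indefinitely.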

We use LangChain to interact with the GPT4All models and built a demo app around it. Either the GPT4All or the GPT4All-J pre-trained model weights can be used. The prompt is entered in the input textbox, and the model’s response is written back to the output textbox.

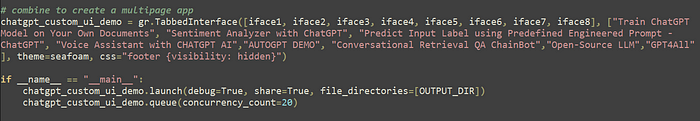
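The textbox-in, textbox-out flow can be sketched as a small handler that fills a prompt template, calls the model, and returns display text. This is a sketch under assumptions: the template mirrors the style of LangChain's GPT4All examples, and `echo_model` is a hypothetical stand-in. In the app, `llm` would instead be a LangChain wrapper around downloaded GPT4All or GPT4All-J weights (for example `GPT4All(model=...)` from `langchain.llms` in LangChain versions of that period), and the handler would be passed to Gradio as `gr.Interface(fn=handler, inputs="text", outputs="text")`.

```python
from typing import Callable

# Prompt template in the style of LangChain's GPT4All examples (an assumption,
# not necessarily the template used in the app).
TEMPLATE = "Question: {question}\n\nAnswer: Let's think step by step."


def make_textbox_handler(llm: Callable[[str], str], template: str = TEMPLATE) -> Callable[[str], str]:
    """Wrap an LLM callable so it maps input-textbox text to output-textbox text."""

    def handle(question: str) -> str:
        if not question.strip():
            return "Please enter a prompt."
        # Fill the template, run the model, and tidy the reply for display.
        return llm(template.format(question=question)).strip()

    return handle


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a local GPT4All / GPT4All-J model: echoes the prompt."""
    return f"  {prompt}  "


handler = make_textbox_handler(echo_model)
print(handler("What is GPT-J?"))
```

Keeping the model behind a plain callable makes swapping GPT4All for GPT4All-J a one-line change and lets the UI logic be tested without loading multi-gigabyte weights.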

The app combines multiple utility apps into a single multipage web app with a tabbed interface.
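One way to organize such a tabbed app is sketched below, assuming each utility is a plain text-in/text-out function registered under a tab title. The registry and the two placeholder handlers are hypothetical; `gr.Interface` and `gr.TabbedInterface(interface_list, tab_names)` are Gradio's own classes. Gradio is imported inside `build_app` so the rest of the sketch runs without it installed.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[str], str]


def sentiment_tab(text: str) -> str:
    return f"(sentiment for: {text})"  # placeholder; the real tab would call ChatGPT


def gpt4all_tab(prompt: str) -> str:
    return f"(GPT4All reply to: {prompt})"  # placeholder for a local model call


# Hypothetical registry: tab title -> handler function.
TABS: Dict[str, Handler] = {
    "Sentiment Analyzer": sentiment_tab,
    "GPT4All": gpt4all_tab,
}


def tab_spec(tabs: Dict[str, Handler]) -> Tuple[List[str], List[Handler]]:
    """Split the registry into parallel lists (dicts preserve insertion order)."""
    return list(tabs.keys()), list(tabs.values())


def build_app(tabs: Dict[str, Handler]):
    """Wrap each handler in a Gradio Interface and combine them into tabs."""
    import gradio as gr  # requires `pip install gradio`

    names, handlers = tab_spec(tabs)
    interfaces = [gr.Interface(fn=fn, inputs="text", outputs="text") for fn in handlers]
    return gr.TabbedInterface(interfaces, names)
```

Calling `build_app(TABS).launch()` would then start a local server with one tab per utility. Adding a new utility only requires adding one entry to the registry.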

Getting Started

To use the app, refer to the instructions here: https://github.com/ajayarunachalam/pychatgpt_gui#usage-steps

APP DEMO

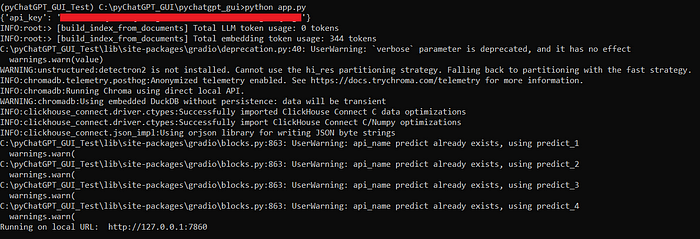

To run the app, execute the command “python app.py” from within the cloned repository. This launches the app, prints your API key, and provides a local URL for accessing it.

The Question & Answer bot initializes LangChain’s Conversational Retrieval Chain. It connects the LLM’s Natural Language Processing (NLP) capabilities to our own custom data: a similarity search based on cosine similarity retrieves the most relevant data, and only the user’s input plus that relevant data is fed to the GPT model. Each response is then added to the conversation history, forming a chain.
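The retrieval step can be sketched in plain Python: embed the documents and the question, rank documents by cosine similarity, and build a prompt from the chat history, the top matches, and the question. This is a toy under stated assumptions, not the app's LangChain code: the bag-of-words `embed` is a hypothetical stand-in for a real embedding model, and the model reply is a placeholder string.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """cos(a, b) = a.b / (|a| |b|), defined as 0.0 when either vector is empty."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine_similarity(q, embed(d)), reverse=True)[:k]


def build_prompt(question: str, context: List[str], history: List[Tuple[str, str]]) -> str:
    """Only the question, the retrieved context, and the chat history reach the model."""
    past = "\n".join(f"User: {q}\nBot: {a}" for q, a in history)
    ctx = "\n".join(f"- {c}" for c in context)
    return f"Chat history:\n{past}\n\nContext:\n{ctx}\n\nQuestion: {question}\nAnswer:"


docs = ["The app is built with Gradio.", "GPT4All runs locally on a laptop.", "Auto-GPT uses GPT-4."]
history: List[Tuple[str, str]] = []
question = "What is the app built with?"
context = retrieve(question, docs, k=1)
prompt = build_prompt(question, context, history)
answer = "It is built with Gradio."  # placeholder for the GPT model's reply
history.append((question, answer))  # grow the chain for the next turn
print(context)
```

Sending only the top-k matches instead of the whole corpus keeps the prompt within the model's context window and reduces token cost.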

The GPT4All utility likewise uses LangChain to interact with GPT4All models. Alternatively, one can use the GPT4All-J model, which is a fine-tuned GPT-J model, instead of GPT4All.

Conclusion

In this blog, we briefly walked through Large Language Models (LLMs) and took a quick look at the ChatGPT, AutoGPT, LLaMA, GPT-J, and GPT4All generative models. We saw how “pyChatGPT GUI”, a GUI-based app for LLMs, can be used for custom-data and pre-trained inference, with the aim of simplifying how you leverage the power of GPT.

I strongly encourage readers to try the app hands-on to better understand and tweak the entire workflow.

If you liked this blog post, please encourage me to publish more content by showing your support with a clap 👏

The complete code of the app can be found here: https://github.com/ajayarunachalam/pychatgpt_gui

About the Author

I am an AWS Certified Machine Learning Specialist and AWS Certified Cloud Solution Architect. I have worked in the Telecom, Retail, Banking and Finance, Healthcare, Media, Marketing, Education, Agriculture, and Manufacturing sectors. I have 6+ years of experience delivering Analytics and Data Science solutions, 5+ years of which were spent delivering client-focused solutions based on customer requirements. I have led and managed teams of data analysts, business analysts, data engineers, ML engineers, DevOps engineers, and data scientists. I also have technical and management experience in business intelligence, data warehousing, reporting, and analytics, and hold the Microsoft Certified Power BI Associate certification. I have worked on several key strategic and data-monetization initiatives. As a certified Scrum Master, I work according to agile principles, focusing on collaboration, customer focus, continuous improvement, and sustainable development.

CONTACT

You can reach me at ajay.arunachalam08@gmail.com or via LinkedIn. Check out my other work in my GitHub repo.

Always Keep Learning!!! Cheers :)

References

OpenAI blog, ChatGPT: https://openai.com/blog/chatgpt

LangChain Python documentation: https://python.langchain.com/en/latest/index.html

AutoGPT Hugging Face Space: https://huggingface.co/spaces/aliabid94/AutoGPT/tree/main

Meta AI blog, LLaMA: https://ai.facebook.com/blog/large-language-model-llama-meta-ai/

Nomic AI GPT4All repository: https://github.com/nomic-ai/gpt4all

GPT Index documentation: https://gpt-index.readthedocs.io/en/latest/

Gradio repository: https://github.com/gradio-app/gradio/

Beebom, building your own AI chatbot with the ChatGPT API: https://beebom.com/how-build-own-ai-chatbot-with-chatgpt-api/

Build a Q&A Bot over private data with OpenAI and LangChain (linkedin.com)