Activation Functions: The Other ReLUs — A Deep Dive

In our previous article, ‘Activation Functions: ReLU vs. Leaky ReLU,’ we looked into what an activation function was, what ReLU was, and how two of its basic variant implementations were to affect the working of neural networks. Now, let’s look into TWO other types of ReLU — Parametric ReLU and Exponential Linear Unit. These implementations of ReLU are not mainly discussed or taught in classes — so I hope this article is informative and insightful. Let’s dive right in!

Parametric ReLU:

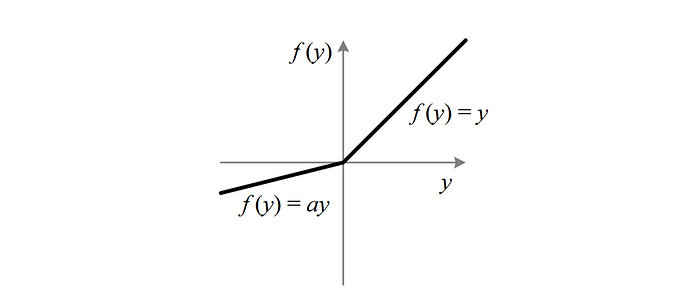

Parametric ReLU (PReLU) is a variation of the Rectified Linear Unit (ReLU) activation function that introduces learnable parameters to determine the slope of the negative values. Unlike traditional ReLU, where negative values are set to zero, PReLU allows the slope to be adjusted during training.

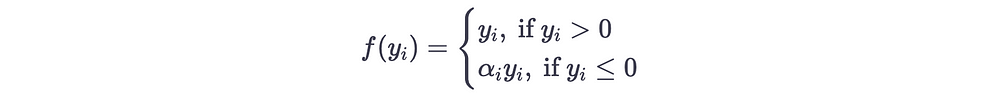

The PReLU function is defined as follows:

f(x) = max(ax, x)

where x is the input, f(x) is the output, and ‘a’ is a learnable parameter. The value of a determines the slope for negative inputs. During training, ‘a’ value is updated through backpropagation along with other network parameters.

The primary motivation behind using PReLU is to provide the neural network with more flexibility in learning complex patterns. By allowing negative values to have a small slope, the network can capture information that may be discarded by traditional ReLU. This can be particularly useful when dealing with datasets containing positive and negative values, as it allows the network to learn different activation patterns for positive and negative inputs.

During the forward pass, PReLU behaves similarly to ReLU: positive values are passed through unchanged, and negative values are multiplied by the learnable parameter ‘a’. The output is determined by taking the element-wise maximum between ‘ax’ and ‘x.’

During the backward pass (backpropagation), the gradient with respect to both ‘x’ and ‘a’ is calculated. The gradients are then used to update the values of a and other network parameters through an optimization algorithm such as gradient descent.

By allowing the slope to be learned, PReLU introduces more parameters to the model than traditional ReLU. This increased flexibility can improve model performance, especially in scenarios where ReLU may result in dead neurons or limited representation capacity.

However, it’s important to note that PReLU comes with an additional computational cost due to the extra learnable parameter. Also, the introduction of more parameters may increase the risk of overfitting, so appropriate regularization techniques should be applied to mitigate this issue.

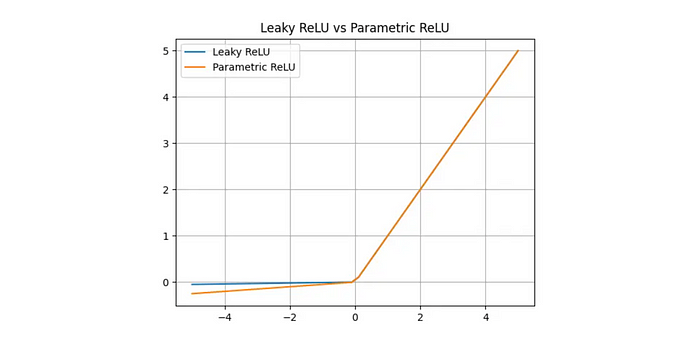

Reading the above, one is bound to think, “How is Parametric ReLU different from Leaky ReLU?” — Let’s address this.

The main difference between PReLU and Leaky ReLU lies in the way they determine the slope of negative values:

Leaky ReLU: In Leaky ReLU, a fixed small slope is assigned to negative values, typically as a predefined constant (e.g., 0.01). The function is defined as follows:

f(x) = max(ax, x)

Here, x is the input, f(x) is the output, and a is a small constant that determines the slope for negative values. The value of a remains the same throughout training and inference.

Parametric ReLU (PReLU): In PReLU, the slope for negative values are learned during training rather than fixed. PReLU introduces a learnable parameter that determines the slope dynamically.

In essence, Leaky ReLU uses a fixed predefined slope for negative values, while PReLU learns the slope adaptively during training. This adaptability of PReLU allows the neural network to learn the most appropriate slope for each neuron, potentially enhancing the model’s capacity to capture complex patterns.

Both PReLU and Leaky ReLU address the problem of ‘dead neurons’ by allowing some gradient flow for negative inputs. By introducing non-zero slopes, these variants enable the network to avoid the flat or zero gradient that can cause the dying ReLU problem. The choice between PReLU and Leaky ReLU depends on the specific problem and the model's performance during training and testing.

Exponential Linear Unit:

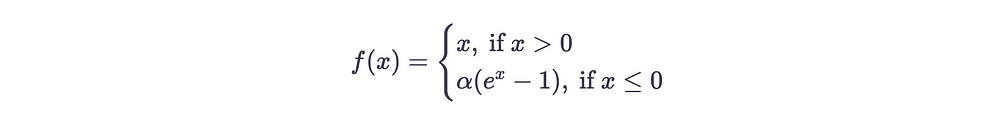

The Exponential Linear Unit (ELU) is an activation function designed to address some limitations of the Rectified Linear Unit (ReLU) and its variants. ELU introduces a smooth curve for both positive and negative inputs, allowing for better gradient flow and avoiding some issues associated with ReLU.

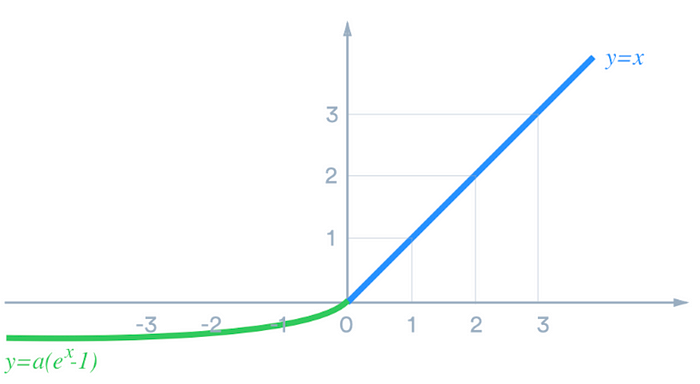

The ELU function is defined as follows:

f(x) = { x, if x > 0; a * (exp(x) — 1), if x <= 0 }

where x is the input, f(x) is the output, and a is a constant controlling the saturation point.

The ELU function behaves similarly to ReLU for positive inputs, where it simply passes the input value through unchanged. However, ELU introduces a curve that smoothly approaches a negative saturation point for negative inputs. The choice of the constant “a” determines the value to which the function saturates for negative inputs.

The main advantages of ELU over ReLU and its variants are as follows:

- Smoothness: ELU provides a smooth curve for both positive and negative inputs, unlike ReLU, which has a sharp threshold at zero. This smoothness ensures better continuity of the function and allows for more stable gradient flow during backpropagation.

- Avoiding dead neurons: ELU helps mitigate the issue of “dying ReLU” where certain neurons can become non-responsive. By providing non-zero outputs for negative inputs, ELU encourages the flow of gradients and helps prevent neurons from completely deactivating.

- Negative saturation: The negative saturation point in ELU allows the function to handle highly negative inputs more effectively. This can help the network capture information from negative inputs lost with ReLU or its variants.

- Approximation of identity function: ELU approximates the identity function for positive inputs, which can be beneficial when dealing with vanishing gradients during deep network training.

Despite its advantages, ELU also has some considerations. The exponential function in the ELU formulation may introduce additional computational complexity compared to simpler activation functions. Additionally, the saturation point should be chosen carefully, as a very high saturation point can lead to vanishing gradients, while a low saturation point may cause the function to become similar to ReLU.

The following article will discuss other Activation Functions.

This mini-series has been a fun learning process for me, and I hope y’all will find it to be the same.

Until next time! Adios!!