Imagine a tool so versatile that it can compose music, generate legal documents, assist in developing vaccines, and even create artwork that seems to have sprung from the brush of a Renaissance master.

This isn’t the plot of a sci-fi novel but the reality of generative artificial intelligence (AI). Generative AI is transforming how we approach creativity and problem-solving across various sectors. But what exactly is this technology, and how is it being applied today?

In this blog, we explore the key concepts behind generative AI and look at how it is being used today.

What is generative AI?

Generative AI refers to a branch of artificial intelligence that focuses on creating new content, be it text, images, audio, or synthetic data. These AI systems learn from large datasets to recognize patterns and structures, which they then use to generate new, original outputs similar to the data they were trained on.

For example, in biotechnology, generative AI can design novel protein sequences for potential therapies. In media, it can compose entirely new music or draft articles.

How does generative AI work?

Generative AI learns from vast amounts of data and then generates new content that resembles that data in form and style. Here’s a simple explanation of how it works and how it can be applied:

How Generative AI Works:

- Learning from Data: Generative AI begins by training on large datasets using deep learning, a technique based on multi-layered neural networks. These networks are designed to identify patterns and structures within the data.

- Pattern Recognition: By processing the input data, the AI learns the underlying patterns that define it. This could involve recognizing how sentences are structured, identifying the style of a painting, or understanding the rhythm of a piece of music.

- Generating New Content: Once trained, generative AI can produce new content that resembles the training data, such as text, images, audio, or even video. Training works by repeatedly adjusting the model’s internal parameters to reduce its errors; generation then builds the output step by step, for example one word (or token) at a time in a language model.
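
The learn-then-generate loop above can be illustrated with a deliberately tiny Python sketch. It makes a big simplifying assumption: instead of a deep neural network, it uses a bigram model that simply records which word follows which in a toy corpus (the learning and pattern-recognition steps), then samples new word sequences from those records (the generation step). The function names `train_bigram_model` and `generate` are hypothetical, chosen for illustration.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Learn which words follow which: the 'patterns' in the training data."""
    words = text.split()
    transitions = defaultdict(list)
    for current, following in zip(words, words[1:]):
        transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=0):
    """Create new text by repeatedly sampling a word that followed the previous one."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    output = [start]
    for _ in range(length - 1):
        candidates = transitions.get(output[-1])
        if not candidates:  # no known continuation, so stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


corpus = "the cat sat on the mat . the dog sat on the rug . the cat chased the dog ."
model = train_bigram_model(corpus)
print(generate(model, "the", 8))
```

Because each next word is drawn only from continuations seen in the corpus, the output resembles the training text without necessarily copying it. Large language models follow a similar predict-the-next-token idea, but learn far richer patterns with neural networks.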


Top Generative AI Use Cases:

- Content Creation: For marketers and content creators, generative AI can automatically generate written content, create art, or compose music, saving time and fostering creativity.

- Personal Assistants: In customer service, generative AI can power chatbots and virtual assistants that provide human-like interactions, improving customer experience and efficiency.

- Biotechnology: It aids drug discovery and genetic research by predicting molecular structures and generating new drug candidates.

- Educational Tools: Generative AI can create customized learning materials and interactive content that adapt to the educational needs of students.

By integrating generative AI into our work, we can enhance creativity, streamline workflows, and develop new solutions to existing problems.

Key terms in generative AI

Generative Models: The core of generative AI. These models learn the patterns in a training dataset and then generate new content with similar characteristics.

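Training: The process by which a model learns from data, repeatedly adjusting its internal parameters to reduce its errors. Example: Training a deep learning model on images to recognize animals.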

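Supervised Learning: The AI learns from a labeled dataset, in which each input is paired with the correct output. Example: Learning to flag spam from emails already marked as spam or not spam.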
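
Training and supervised learning can be sketched together in a few lines of Python. This minimal example assumes a simple linear model rather than a neural network: it fits y ≈ w·x + b to labeled (x, y) pairs with gradient descent, nudging the parameters w and b on every pass in the direction that reduces the mean squared error. `train_linear_model` is a hypothetical name.

```python
def train_linear_model(examples, learning_rate=0.05, epochs=1000):
    """Fit y ≈ w * x + b to labeled (x, y) pairs using gradient descent."""
    w, b = 0.0, 0.0  # the model's parameters, adjusted during training
    n = len(examples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        # Step each parameter against its gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Labeled data: each input x is paired with its correct output y (here y = 2x + 1)
labeled = [(0, 1), (1, 3), (2, 5), (3, 7), (4, 9)]
w, b = train_linear_model(labeled)
print(f"w = {w:.2f}, b = {b:.2f}")
```

Since the labels were generated from y = 2x + 1, training should recover w ≈ 2 and b ≈ 1. Neural networks are trained on the same principle, just with far more parameters.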

Unsupervised Learning: The AI identifies patterns and relationships in data without pre-set labels.
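
For contrast, here is a minimal unsupervised sketch in Python using k-means clustering, one classic technique for finding structure in unlabeled data (it is not itself a generative model). The points carry no labels; the algorithm discovers two groups on its own. The naive initialization (taking the first k points as starting centroids) and the function name `k_means` are simplifying assumptions.

```python
import math


def k_means(points, k, iterations=10):
    """Group unlabeled 2-D points into k clusters with naive k-means."""
    centroids = list(points[:k])  # simple initialization: the first k points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = []
        for i, cluster in enumerate(clusters):
            if cluster:
                xs, ys = zip(*cluster)
                new_centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid in place
        centroids = new_centroids
    return centroids, clusters


# No labels: just coordinates, which happen to form two loose groups
points = [(0, 0), (10, 10), (1, 0), (0, 1), (9, 10), (10, 9)]
centroids, clusters = k_means(points, k=2)
print(clusters)
```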

Reinforcement Learning: A type of machine learning in which a model learns to make decisions through trial and error, receiving rewards for good actions and penalties for poor ones. Example: A robotic vacuum cleaner that gets better at navigating rooms over time.
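
A robot vacuum is too complex for a short example, so this Python sketch swaps in the simplest reinforcement learning setting, a multi-armed bandit: the agent repeatedly picks one of several actions, each paying a reward of 1 with a hidden probability, and learns by trial and error which action is best. It uses an epsilon-greedy strategy (explore at random 10% of the time, otherwise exploit the best estimate so far); the reward probabilities and the function name are illustrative assumptions.

```python
import random


def epsilon_greedy_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Learn by trial and error which action yields the most reward."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    n_actions = len(reward_probs)
    estimates = [0.0] * n_actions  # the agent's learned value of each action
    counts = [0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit the best so far
        # The environment pays a reward of 1 with the action's hidden probability, else 0
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Running average: move the estimate toward the observed reward
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("best action:", best, "times chosen:", counts)
```

The balance between exploring and exploiting is the same trade-off a robot vacuum faces when deciding whether to try a new route or repeat one that has worked before.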

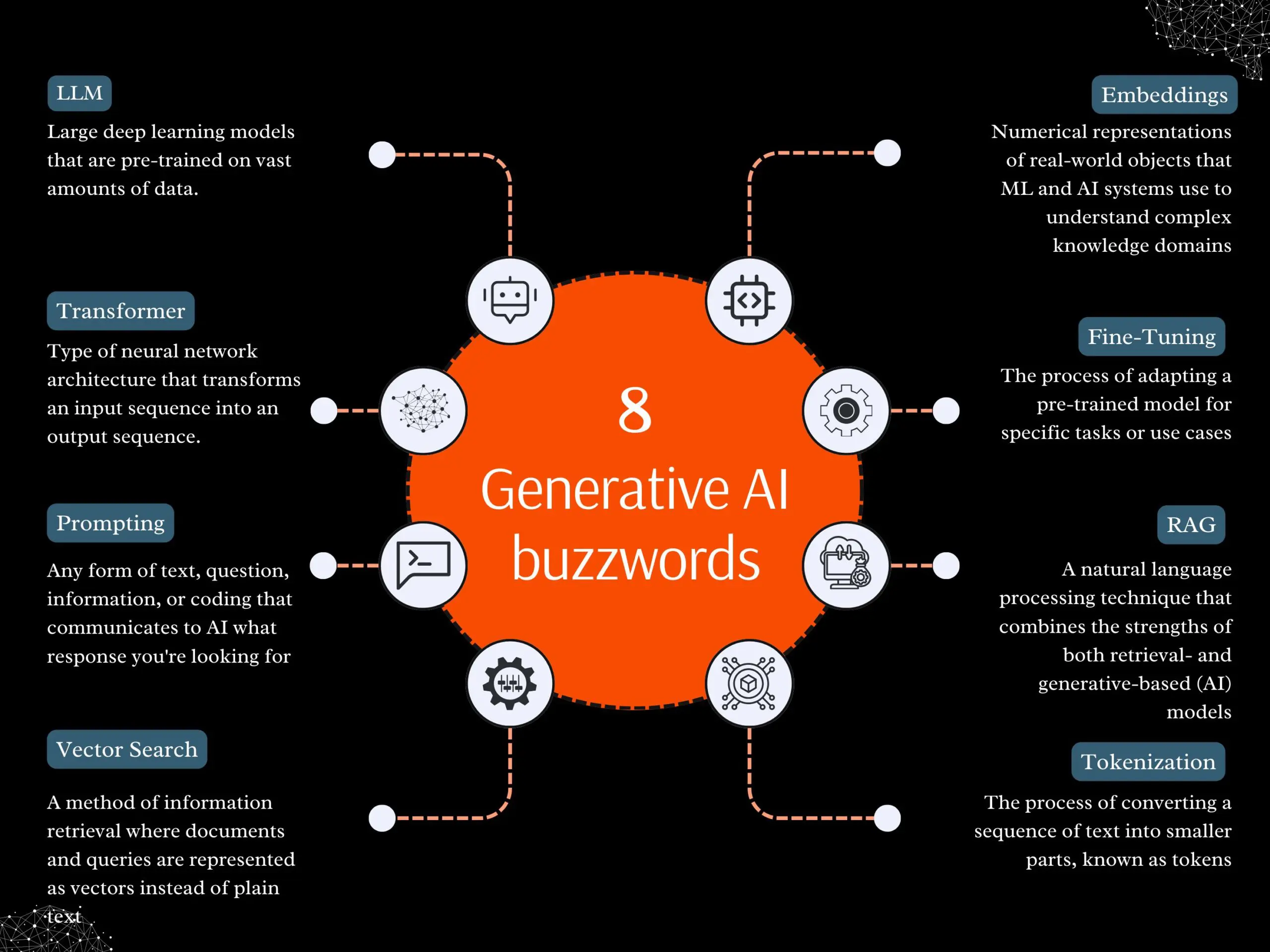

LLMs (Large Language Models): Very large neural networks, trained on vast amounts of text, that understand and generate human-like text. Example: GPT-3 writing an article based on a prompt.

Embeddings: Representations of items or words as vectors in a continuous space, arranged so that items with similar meanings lie close together. Example: Word vectors used for sentiment analysis of reviews.
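
The idea that similar items lie close together is often measured with cosine similarity, the cosine of the angle between two vectors. The 3-dimensional vectors below are hand-made toy values, not output from a real embedding model; real embeddings are learned and typically have hundreds of dimensions.

```python
import math

# Hand-made toy "embeddings" (real ones are learned and much higher-dimensional)
embeddings = {
    "great": [0.9, 0.8, 0.1],
    "excellent": [0.85, 0.9, 0.05],
    "terrible": [-0.8, -0.9, 0.1],
}


def cosine_similarity(a, b):
    """1.0 for vectors pointing the same way, 0.0 for perpendicular, -1.0 for opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(embeddings["great"], embeddings["excellent"]))  # high: similar sentiment
print(cosine_similarity(embeddings["great"], embeddings["terrible"]))   # negative: opposite sentiment
```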

Vector Search: Finding the items in a dataset whose vectors are most similar to a query vector. Example: Searching a database for images with similar content.
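
A brute-force vector search can be sketched in Python by scoring every stored vector against the query with cosine similarity and keeping the best matches. The image file names and their vectors are hypothetical; production systems typically use approximate nearest-neighbor indexes so they do not have to compare the query against every item.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def vector_search(query, index, top_k=2):
    """Brute force: score every stored vector against the query, return the best top_k names."""
    ranked = sorted(index, key=lambda name: cosine_similarity(query, index[name]), reverse=True)
    return ranked[:top_k]


# Hypothetical image embeddings keyed by file name
image_index = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "ocean_waves.jpg": [0.8, 0.3, 0.1],
    "city_night.jpg": [0.1, 0.2, 0.9],
}
query_vector = [0.9, 0.15, 0.0]  # e.g. the embedding of another beach photo
print(vector_search(query_vector, image_index))  # ['beach_sunset.jpg', 'ocean_waves.jpg']
```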


Tokenization: Breaking text into smaller units, such as words, subwords, or characters, so a model can process it. Example: Splitting a sentence into individual words for linguistic analysis.
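
A simple word-level tokenizer takes one regular expression in Python. The sketch below also maps each token to an integer ID, since models operate on numbers rather than strings. Note the simplification: many LLM tokenizers split text into subwords (for example with byte-pair encoding) rather than whole words, and the function names here are hypothetical.

```python
import re


def tokenize(text):
    """Split text into word tokens and single punctuation-mark tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens):
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


tokens = tokenize("Generative AI creates new content. AI learns!")
vocab = build_vocab(tokens)
token_ids = [vocab[token] for token in tokens]
print(tokens)     # ['Generative', 'AI', 'creates', 'new', 'content', '.', 'AI', 'learns', '!']
print(token_ids)  # [0, 1, 2, 3, 4, 5, 1, 6, 7]
```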

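Transformer: A neural network architecture that processes sequences of data using a mechanism called attention, which lets each element of the sequence weigh its relationship to every other element. Transformers underpin most modern LLMs and tasks such as language translation. Example: Translating a French text into English.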
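
The attention mechanism at the heart of the transformer can be written compactly as softmax(QKᵀ/√d)·V: each query vector is compared with every key vector, the scores are turned into weights that sum to 1, and the output is the weighted average of the value vectors. The pure-Python sketch below is a simplification: real transformers derive Q, K, and V from learned projections and run many attention heads in parallel, whereas here the same toy vectors serve as queries, keys, and values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one query at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this query matches each key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors; in self-attention, each token attends to all tokens, itself included
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```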

Fine-tuning: Further training a pre-trained model on a smaller, task-specific dataset so it performs well on that task. Example: Adapting a general language model for legal document analysis.

Prompting: Providing input to an AI model to guide the output it generates. Example: Asking a chatbot a specific question, which it then answers.

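RAG (Retrieval-Augmented Generation): Improving model responses by retrieving relevant information from an external source and supplying it to the model during generation. Example: A question-answering system searches a document database and uses the passages it finds to answer a query more accurately.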
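
The retrieve-then-generate flow of RAG can be sketched in Python. For simplicity, this example assumes retrieval by counting shared words (real systems usually use vector search over embeddings) and stops at building the augmented prompt; sending that prompt to a language model is not shown. The documents and function names are hypothetical.

```python
import re


def words(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Return the documents sharing the most words with the query (a stand-in for vector search)."""
    query_words = words(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & words(doc)), reverse=True)
    return ranked[:top_k]


def build_augmented_prompt(query, documents):
    """Insert the retrieved passages into the prompt ahead of the user's question."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "Refunds are available within 30 days of purchase.",
    "Our office is open Monday to Friday.",
    "Shipping takes 3 to 5 business days.",
]
prompt = build_augmented_prompt("Are refunds available after purchase?", knowledge_base)
print(prompt)  # this prompt would then be sent to a language model (not shown)
```

Grounding the prompt in retrieved text is what lets the model answer from up-to-date or private information it never saw during training.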

Parameters: The internal values of a model that are adjusted during training. Example: The weights in a neural network, which change to improve the model’s performance.
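
To get a feel for what counts as a parameter, the sketch below counts the weights and biases in a small fully connected neural network: each layer with n_in inputs and n_out outputs has n_in × n_out weights plus n_out biases. The layer sizes (784 → 128 → 10) and the function name are illustrative assumptions. For comparison, GPT-3 has about 175 billion parameters.

```python
def count_dense_parameters(layer_sizes):
    """Count the weights and biases in a fully connected (dense) neural network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per input-output connection
        biases = n_out          # one bias per output unit
        total += weights + biases
    return total


print(count_dense_parameters([784, 128, 10]))  # 101770
```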

Token: The basic unit of text a language model processes, often a word or part of a word. Example: The word ‘AI’ is a token in text analysis.

Generative AI use cases

Several companies are already leveraging generative AI to drive growth and innovation:

1. OpenAI: Perhaps the most famous example is OpenAI’s GPT-3, which showcases the ability of Large Language Models (LLMs) to generate human-like text, powering everything from automated content creation to advanced customer support.

2. DeepMind: Known for developing AlphaFold, which predicts protein structures with high accuracy, DeepMind uses AI models, including generative ones, to advance drug discovery and other scientific research.

3. Adobe: Adobe’s generative AI tools, such as Adobe Firefly, help creatives quickly design digital images, with features that can automatically edit images or generate new visual content from simple text descriptions.

The future of generative AI

As generative AI continues to evolve, its impact is only expected to grow, touching more aspects of our lives and work. The technology not only promises to increase productivity but also offers new ways to explore creative and scientific frontiers.

In essence, generative AI represents a significant leap forward in the quest to blend human creativity with the computational power of machines, opening up a world of possibilities that were once confined to the realms of imagination.